What's the difference between lvmcache and dm-cache?

Solution 1

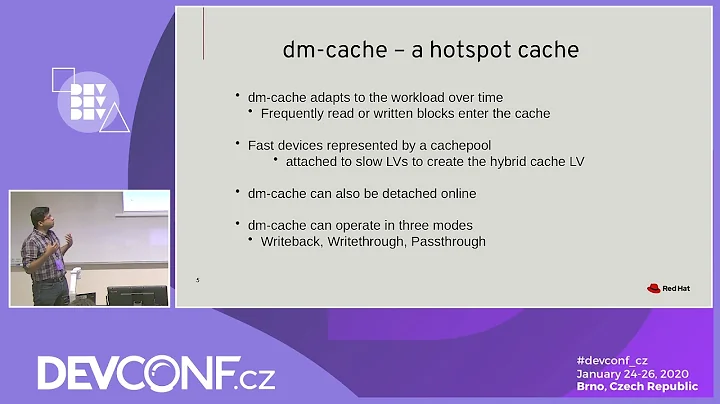

lvmcache is built on top of dm-cache: it sets dm-cache up using logical volumes, so you don't have to calculate block offsets and sizes yourself. Everything is documented in the lvmcache(7) manpage; the basic idea is to use:

- the original LV (slow, to be cached)

- a new cache data LV

- a new cache meta-data LV

The two cache LVs are grouped into a "cache pool" LV, then the original LV and cache pool LV are grouped into a cached LV which you use instead of the original LV.
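As a rough sketch of that grouping, the helper below only prints the LVM commands instead of running them, so they can be reviewed first. The function name `plan_lvmcache`, the LV names `cache_data` and `cache_meta`, and all sizes and device paths are placeholders chosen for illustration; the command forms follow lvmcache(7) as I understand it, so check the manpage on your system before running anything.

```shell
# Print (do not run) the LVM commands that turn an existing slow LV into a cached LV.
# Every name, size and device path here is a placeholder, not a value from this page.
plan_lvmcache() (
    vg=$1         # volume group that contains the slow LV
    origin=$2     # existing slow LV to be cached
    fast_pv=$3    # fast PV (e.g. an SSD partition) already added to the VG
    data_size=$4  # size of the cache data LV
    meta_size=$5  # size of the cache metadata LV

    # 1. cache data LV and cache metadata LV, both placed on the fast PV
    echo "lvcreate -n cache_data -L $data_size $vg $fast_pv"
    echo "lvcreate -n cache_meta -L $meta_size $vg $fast_pv"
    # 2. group the two into a cache pool LV
    echo "lvconvert --type cache-pool --poolmetadata $vg/cache_meta $vg/cache_data"
    # 3. attach the cache pool to the origin LV, producing the cached LV
    echo "lvconvert --type cache --cachepool $vg/cache_data $vg/$origin"
)

plan_lvmcache vg0 storage /dev/sdb1 10G 12M
```

After the last command the cached LV keeps the origin's name (`vg0/storage` in this example), so existing mounts keep pointing at the same device.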

lvmcache also makes it easy to set up redundant caches, change the cache mode or policy, etc.
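For example, switching the cache mode or policy of an existing cached LV is a single `lvchange` call each. The sketch below again only prints the commands; `plan_cache_tuning` is a hypothetical helper name and `vg0/storage` a placeholder LV, and the accepted mode and policy values depend on your LVM and kernel versions (see lvmcache(7)).

```shell
# Print (do not run) the commands that change cache mode and policy of a cached LV.
# The LV path and the helper name are illustrative assumptions.
plan_cache_tuning() (
    lv=$1      # cached LV as VG/LV
    mode=$2    # cache mode
    policy=$3  # cache policy, e.g. smq

    # Reject anything that is not one of the cache modes LVM documents.
    case $mode in
        writethrough|writeback|passthrough) ;;
        *) echo "unknown cache mode: $mode" >&2; exit 1 ;;
    esac

    echo "lvchange --cachemode $mode $lv"
    echo "lvchange --cachepolicy $policy $lv"
)

plan_cache_tuning vg0/storage writeback smq
```

With raw `dmsetup`, the same change means suspending the device and reloading the whole cache table with new parameters.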

Solution 2

@stephen-kitt summarizes the difference well. On any reasonably current system, use lvmcache(7) for everything you can; it will save you tons of time and effort. It is also integrated and supported in RHEL 7.2+ (backported from kernel 4.2) and in recent SLES releases. Debian and Ubuntu should be fine as well.

I gave a talk on the matter at LinuxDays 2017 in Prague recently: https://www.youtube.com/watch?v=6W_xK5Ks-Lw

The slides: https://www.linuxdays.cz/2017/video/Adam_Kalisz-SSD_cache_testing.pdf

Lapsio

Updated on September 18, 2022

Recently I found an article mentioning that dm-cache has significantly improved in Linux. I also found that in userspace you see it as lvmcache, which is quite confusing to me. I thought that the LVM caching mechanism was something different from dm-cache. On my server I'm using dm-cache set up directly at the device-mapper level using dmsetup commands. No LVM commands involved.

So what is it in the end? Is lvmcache just a CLI for easier dm-cache setup? Is it a better idea to use it instead of raw dmsetup commands?

My current script looks like this:

    #!/bin/bash
    CACHEPARAMS="512 1 writethrough default 0"
    CACHEDEVICES="o=/dev/mapper/storage c=/dev/mapper/suse-cache"
    MAPPER="storagecached"

    if [ "$1" == "-u" ] ; then
    {
        # -u: tear the cached device down again
        for i in $CACHEDEVICES ; do
            if [ "`echo $i | grep \"^c=\"`" != "" ] ; then
                __CACHEDEV=${i:2}
            elif [ "`echo $i | grep \"^o=\"`" != "" ] ; then
                __ORIGINALDEV=${i:2}
            fi
        done
        dmsetup suspend $MAPPER
        dmsetup remove $MAPPER
        dmsetup remove `basename $__CACHEDEV`-blocks
        dmsetup remove `basename $__CACHEDEV`-metadata
    }
    else
    {
        for i in $CACHEDEVICES ; do
            if [ "`echo $i | grep \"^c=\"`" != "" ] ; then
                __CACHEDEV=${i:2}
            elif [ "`echo $i | grep \"^o=\"`" != "" ] ; then
                __ORIGINALDEV=${i:2}
            fi
        done
        __CACHEDEVSIZE="`blockdev --getsize64 \"$__CACHEDEV\"`"
        # metadata size in 512-byte sectors, rounded up
        __CACHEMETASIZE="$(((4194304 + (16 * $__CACHEDEVSIZE / 262144))/512))"
        if [ "$__CACHEMETASIZE" == ""$(((4194303 + (16 * $__CACHEDEVSIZE / 262144))/512))"" ] ; then
            __CACHEMETASIZE="$(($__CACHEMETASIZE + 1))"
        fi
        # the rest of the cache device holds the cached blocks
        __CACHEBLOCKSSIZE="$((($__CACHEDEVSIZE/512) - $__CACHEMETASIZE))"
        __ORIGINALDEVSIZE="`blockdev --getsz $__ORIGINALDEV`"
        dmsetup create `basename $__CACHEDEV`-metadata --table "0 $__CACHEMETASIZE linear /dev/mapper/suse-cache 0"
        dmsetup create `basename $__CACHEDEV`-blocks --table "0 $__CACHEBLOCKSSIZE linear /dev/mapper/suse-cache $__CACHEMETASIZE"
        dmsetup create $MAPPER --table "0 $__ORIGINALDEVSIZE cache /dev/mapper/`basename $__CACHEDEV`-metadata /dev/mapper/`basename $__CACHEDEV`-blocks $__ORIGINALDEV $CACHEPARAMS"
        dmsetup resume $MAPPER
    }
    fi

Would lvmcache do it better? I feel kind of okay doing it this way because I can see what's going on, and I don't value ease of use more than clarity of setup. However, if a cache set up using lvmcache would be better optimized, then I think it's a no-brainer to use it instead.
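For reference, the size arithmetic in the script above can be isolated into two small functions, which is roughly the bookkeeping that lvmcache takes over. This is only a sketch that mirrors the script's own numbers (a 4 MiB base plus 16 bytes per 262144-byte cache block, rounded up to whole 512-byte sectors); the function names are made up for illustration.

```shell
# Metadata size in 512-byte sectors for a cache device of the given size in bytes.
# Mirrors the script: 4 MiB + 16 bytes per 256 KiB cache block, rounded up.
cache_meta_sectors() (
    cache_bytes=$1
    meta_bytes=$(( 4194304 + 16 * cache_bytes / 262144 ))
    echo $(( (meta_bytes + 511) / 512 ))   # ceiling division by the sector size
)

# Sectors left for cached blocks once the metadata area is carved off the front.
cache_data_sectors() (
    cache_bytes=$1
    echo $(( cache_bytes / 512 - $(cache_meta_sectors "$cache_bytes") ))
)

cache_meta_sectors 10737418240   # 10 GiB cache device, prints 9472
cache_data_sectors 10737418240   # prints 20962048
```

Adding 511 before dividing replaces the script's compare-and-increment step and gives the same rounded-up result.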