What is the max number of files that can be kept in a single folder, on Win7/Mac OS X/Ubuntu Filesystems?

Solution 1

In Windows (assuming NTFS): 4,294,967,295 files

In Linux (assuming ext4): also about 4 billion files (but it can be fewer if the filesystem was created with a smaller inode table)

In Mac OS X (assuming HFS+): about 2.1 billion files

However, I once put around 65,000 files into a single directory, and I have to say that just loading the file list can kill an average PC.
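If the goal is only to find out how many files a large directory holds, one option is to iterate over it lazily instead of loading the whole listing at once. The following is a minimal sketch, assuming Python 3.6+ and the standard `os.scandir` call; the function name `count_files` is made up for illustration.

```python
import os

def count_files(directory):
    """Count regular files in `directory` without building a full list in memory."""
    count = 0
    # os.scandir yields entries one at a time instead of returning a list.
    with os.scandir(directory) as entries:
        for entry in entries:
            # Skip subdirectories and do not follow symlinks.
            if entry.is_file(follow_symlinks=False):
                count += 1
    return count
```

Calling `count_files(".")` returns the number of regular files in the current directory. This avoids holding every name in memory at once, but it does not make a graphical file manager any faster at opening the same folder.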

Solution 2

This depends on the filesystem. The lowest common denominator is likely FAT32, which allows only 65,534 files in a directory.

These are the numbers I could find:

- FAT16 (old format, can be ignored): 512

- FAT32 (still used a lot, especially on external media): 65,534

- NTFS: 4,294,967,295

- ext2/ext3 (Linux): Depends on configuration at format time, up to 4,294,967,295

- HFS+ (Mac): "up to 2.1 billion"
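To pick a safe limit for a folder that has to work everywhere, take the smallest of the figures above. This sketch only encodes the numbers quoted in this answer; they have not been independently verified here, and the HFS+ value is the rounded "2.1 billion" rather than an exact count. The names `LIMITS` and `lowest_common_limit` are invented for illustration.

```python
# Per-directory file limits as quoted in the list above (assumed, not verified).
LIMITS = {
    "FAT16": 512,
    "FAT32": 65_534,
    "NTFS": 4_294_967_295,       # equals 2**32 - 1
    "ext2/ext3": 4_294_967_295,  # upper bound; depends on format-time configuration
    "HFS+": 2_100_000_000,       # rounded figure, quoted as "up to 2.1 billion"
}

def lowest_common_limit(filesystems):
    """Return the smallest per-directory limit among the given filesystems."""
    return min(LIMITS[name] for name in filesystems)

# Ignoring FAT16, as the list above suggests.
targets = ["FAT32", "NTFS", "ext2/ext3", "HFS+"]
print(lowest_common_limit(targets))  # prints 65534
```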

Solution 3

Most modern OSes have no upper limit, or a very high upper limit. However, performance usually begins to degrade when you have something on the order of 10,000 files; it's a good idea to break your directory into multiple subdirectories before this point.
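One common way to split a directory is to derive subdirectory names from a hash of the file name, so files spread evenly across buckets. The sketch below is one possible scheme, not the only one; the function name `sharded_path` and the choice of two levels of two hex characters (256 × 256 = 65,536 buckets) are assumptions made for illustration.

```python
import hashlib
from pathlib import Path

def sharded_path(root, filename, levels=2, width=2):
    """Map `filename` to root/<aa>/<bb>/filename using a hash of its name."""
    digest = hashlib.sha256(filename.encode("utf-8")).hexdigest()
    # Take `levels` consecutive chunks of `width` hex characters as directory names.
    parts = [digest[i * width:(i + 1) * width] for i in range(levels)]
    return Path(root).joinpath(*parts, filename)
```

A caller would compute `target = sharded_path("data", "photo_000123.jpg")`, create the parent with `target.parent.mkdir(parents=True, exist_ok=True)`, and then write the file to `target`. Because the path depends only on the file name, the same function finds the file again later without scanning any directory.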

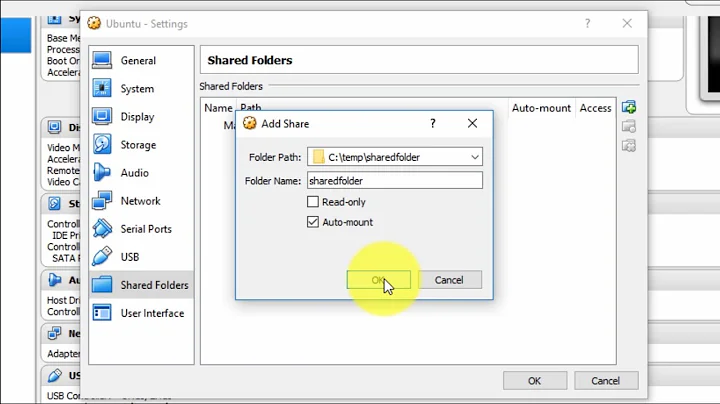

Related videos on Youtube

TCSGrad

Updated on November 22, 2020

Comments

-

TCSGrad over 3 years

I'm wondering what the maximum number of files in a single folder is for the file systems used by the prevalent OSes mentioned. I need this information to determine the lowest common denominator, so that the folder I'm building can be opened and accessed on any OS.

-

Eugene Mayevski 'Callback over 12 years

For some scenarios you get better performance when you keep all files in one folder (this has been measured on NTFS with 400K files in the folder). These scenarios include various servers that read the directory just once and then only open files, rarely creating new ones. In that case the open operation is faster on one directory than with subdirectories.

-

ytg over 12 years

I'm just curious, couldn't it be faster if the contents of those files were put into a single database file?

-

DarkDust over 12 years

Almost all filesystems do have an upper limit, most often the maximum number of files for the whole filesystem, although these can be ridiculously high. ZFS allows a maximum of 2^48 files, for ext2/3 it's 2^32, and Btrfs has a maximum of 2^64. I guess one needs to subtract 1 from all of these for the root directory ;-)

-

Eugene Mayevski 'Callback over 12 years

Most likely not: a DBMS adds an extra layer of data transfer. DBMSes are generally not well suited to large numbers of large BLOBs.

-

Ztyx about 11 years

Also don't forget that the number of inodes also puts a limit on the number of files.

-

Spooky over 9 years

What does "kill an average PC" mean? What are the actual consequences?

-

ytg over 9 years

In my case it meant that the machine kept running but I couldn't get any meaningful response from it. Maybe it used too much CPU, maybe it swapped too much (or maybe it wasn't the entire PC, only explorer.exe?). Basically I don't know, hence the dubious description.

-

Robert almost 9 years

On OS X with brand new hardware, ~80k images will cause Finder to crash, and doing "info" on the directory shows 0 files. The command line still works fine, although using xargs for everything is tedious (too many arguments for mv, cp, etc.).

-

Pacerier over 6 years

@EugeneMayevski'AlliedBits, how does this make sense? Finding a filename in a folder with 400k names via brute force would surely take more time than doing it the subdirectory "tree" way?

-

Pacerier over 6 years

Apple APFS uses 64-bit inode numbers, thus a maximum of about 9 quintillion instead of 2.1 billion.

-

Eugene Mayevski 'Callback over 6 years

@Pacerier Do the tests yourself. I did some time ago, specifically on NTFS with 400K files (I even posted the details in one of the answers here on SO). NTFS doesn't brute-force all filenames: it has a tree inside, so the name lookup is quite fast. And opening subdirectories appears to slow down the process quite significantly.
