Why does malloc not work sometimes?

Solution 1

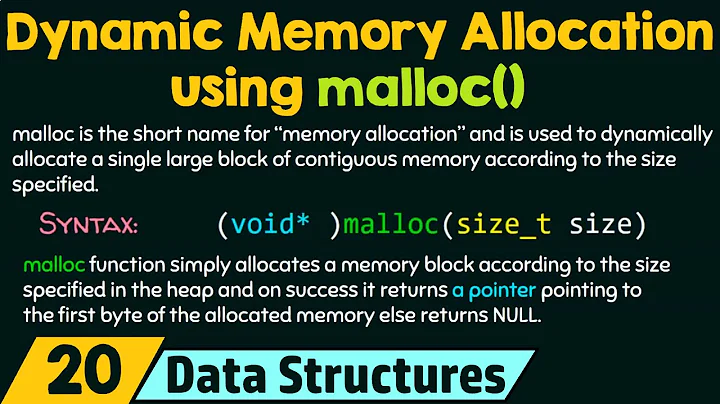

malloc() returns NULL, an invalid pointer, when it is unable to service a memory request. In most implementations, the C memory allocation routines manage a list or heap of available memory. When malloc() is called and no block on the list or heap can satisfy the request, they call the operating system to allocate additional chunks of memory.

So the first case of malloc() failing is when a memory request cannot be satisfied because (1) there is no usable block of memory on the list or heap of the C runtime, and (2) the C runtime memory management requested more memory from the operating system and the request was refused.
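
Because of these failure cases, every malloc() result needs to be checked before it is used. The sketch below is a minimal illustration, assuming a hypothetical helper named alloc_ints that is not part of the original answer. It also refuses element counts whose total byte size would overflow size_t.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: allocate an array of `count` ints. On failure it logs
 * a message and returns NULL, so the caller can check before using it. */
int *alloc_ints(size_t count)
{
    int *p;

    /* Reject counts whose total byte size would overflow size_t. */
    if (count > SIZE_MAX / sizeof *p) {
        fprintf(stderr, "alloc_ints: %llu ints is too large\n",
                (unsigned long long)count);
        return NULL;
    }

    p = malloc(count * sizeof *p);
    if (p == NULL) {
        /* Neither the C runtime nor the OS could satisfy the request. */
        fprintf(stderr, "alloc_ints: malloc failed for %llu ints\n",
                (unsigned long long)count);
        return NULL;  /* let the caller decide how to recover */
    }
    return p;
}
```

A caller would write something like `int *values = alloc_ints(n); if (values == NULL) { /* recover */ }` rather than dereferencing the result unconditionally.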

Here is an article about Pointer Allocation Strategies.

This forum article gives an example of malloc failure due to memory fragmentation.

Another reason malloc() might fail is that the memory management data structures have become corrupted, probably due to a buffer overflow in which an allocated memory area was used for an object larger than the size allocated. Different versions of malloc() can use different strategies for managing memory and for deciding how much memory to provide when malloc() is called. For instance, a malloc() may give you exactly the number of bytes requested, or it may give you more than you asked for, either to fit the allocated block within memory boundaries or to make memory management easier.

With modern operating systems and virtual memory, it is pretty difficult to run out of memory unless you are doing some really large memory-resident storage. However, as user Yeow_Meng mentioned in a comment below, if you are doing arithmetic to determine the size to allocate and the result is a negative number, you could end up requesting a huge amount of memory, because the size argument of malloc() has the unsigned type size_t.
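
The conversion can be seen in a small sketch. The helper names as_malloc_size and checked_size are hypothetical and not from the original answer. Converting a negative value to size_t wraps it around to a huge number, and a simple guard can reject non-positive sizes before they ever reach malloc().

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* A negative value converted to size_t (malloc's parameter type) wraps
 * around modulo SIZE_MAX + 1, so -1 becomes SIZE_MAX. */
size_t as_malloc_size(long n)
{
    return (size_t)n;  /* the same conversion malloc(n) would apply implicitly */
}

/* Hypothetical guard: accept a computed size only if it is positive,
 * storing it in *out; return false for zero or negative sizes. */
bool checked_size(long n, size_t *out)
{
    if (n <= 0)
        return false;
    *out = (size_t)n;
    return true;
}
```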

You can run into the problem of negative sizes when using pointer arithmetic to determine how much space is needed for some data. This kind of error is common in text parsing when the text is not in the expected form. For example, the following code results in a very large malloc() request.

```c
#include <stdlib.h>
#include <string.h>

void example(void)
{
    char pathText[64] = "./dir/prefix"; // a buffer of text with a path using dot (.) for the current dir
    char *pFile = strrchr(pathText, '/'); // find the last slash, where the file name begins
    char *pExt = strrchr(pathText, '.');  // look for the file extension

    // At this point the programmer expected that
    // - pFile points to the last slash in the path name
    // - pExt points to the dot (.) of the file extension, or is NULL
    // However, with this data we instead have the following pointers, because
    // rather than an absolute path, it is a relative path:
    // - pFile points to the last slash in the path name
    // - pExt points to the only dot (.) in the path name, the one in "./",
    //   as there is no file extension
    // The result is that rather than the non-NULL pExt being larger than pFile,
    // it is instead smaller for this specific data.

    char *pNameNoExt;

    if (pExt) { // this really should be if (pExt && pFile < pExt) {
        // Extension specified, so allocate space for just the file name
        // without the extension.
        // Since pExt is less than pFile, we get a negative value, which then
        // becomes a really huge unsigned value.
        pNameNoExt = malloc((pExt - pFile + 1) * sizeof(char));
    } else {
        pNameNoExt = malloc((strlen(pFile) + 1) * sizeof(char));
    }

    free(pNameNoExt); // free(NULL) is harmless if the huge request failed
}
```
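
One possible corrected version is sketched below as a hypothetical helper, name_without_ext, which is not part of the original answer. It applies the guard suggested in the code comment, pFile < pExt, so the length passed to malloc() can never go negative. It also assumes that when there is no slash the whole string is the file name, and it treats a leading dot (as in ".profile") as part of the name rather than as an extension.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: return a newly allocated copy of the file name in
 * `path` without its extension, or NULL if malloc() fails. The caller
 * must free() the result. */
char *name_without_ext(const char *path)
{
    const char *pFile = strrchr(path, '/');
    const char *pExt = strrchr(path, '.');
    size_t len;
    char *result;

    pFile = pFile ? pFile + 1 : path;  /* the name starts after the last slash */

    if (pExt && pFile < pExt)
        len = (size_t)(pExt - pFile);  /* never negative thanks to the guard */
    else
        len = strlen(pFile);           /* no extension in the file name */

    result = malloc(len + 1);          /* +1 for the terminating '\0' */
    if (result) {
        memcpy(result, pFile, len);
        result[len] = '\0';
    }
    return result;
}
```

For "./dir/prefix" this returns "prefix", and for "./dir/file.txt" it returns "file".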

A good runtime memory manager tries to coalesce freed chunks of memory, so that many smaller blocks are combined into larger blocks as they are freed. This combining reduces the chance that a memory request cannot be serviced from what is already available on the list or heap managed by the C runtime.

The more that you can just reuse already allocated memory and the less you depend on malloc() and free() the better. If you are not doing a malloc() then it is difficult for it to fail.

The more you can replace many small calls to malloc() with fewer large calls, the less chance you have of fragmenting memory and of growing the memory list or heap with lots of small blocks that cannot be combined because they are not next to each other.
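
One way to turn many small malloc() calls into a single large one is a bump (arena) allocator. This technique is chosen here for illustration and is not named in the answer. The Arena type and the arena_* functions are hypothetical, and the sketch assumes that 16-byte alignment is sufficient for the objects stored in it.

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical bump ("arena") allocator: one large malloc() is carved into
 * many small pieces, and everything is released with a single free(). */
typedef struct {
    unsigned char *base;  /* start of the single large block */
    size_t size;          /* total bytes in the block */
    size_t used;          /* bytes handed out so far */
} Arena;

/* Returns nonzero on success, 0 if the single large malloc() failed. */
int arena_init(Arena *a, size_t size)
{
    a->base = malloc(size);
    a->size = a->base ? size : 0;
    a->used = 0;
    return a->base != NULL;
}

/* Hand out `n` bytes, rounded up to a multiple of ARENA_ALIGN so that every
 * piece keeps the same alignment; returns NULL when the arena is exhausted. */
#define ARENA_ALIGN 16
void *arena_alloc(Arena *a, size_t n)
{
    size_t rounded = (n + ARENA_ALIGN - 1) / ARENA_ALIGN * ARENA_ALIGN;
    void *p;

    if (rounded < n || rounded > a->size - a->used)  /* overflow or full */
        return NULL;
    p = a->base + a->used;
    a->used += rounded;
    return p;
}

/* Release every piece at once with one free(). */
void arena_free_all(Arena *a)
{
    free(a->base);
    a->base = NULL;
    a->size = a->used = 0;
}
```

Pieces cannot be freed individually. The whole arena is released with arena_free_all(), which suits data that is created and discarded together.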

The more you can malloc() and free() contiguous blocks at the same time, the more likely it is that the memory management runtime can coalesce them.

There is no rule that says you must call malloc() with the exact size of an object; the size argument can be larger than the object you are allocating memory for. So you may want to use a rule for calls to malloc() that rounds every request up to a standard amount, so that standard-sized blocks are allocated. For example, you may allocate in blocks of 16 bytes using a formula like ((size + 15) / 16) * 16, or equivalently ((size + 15) >> 4) << 4. Many scripting languages use something similar to increase the chance that repeated calls to malloc() and free() can match a request with a free block on the list or heap of memory.
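
That rounding rule can be sketched as a small helper. The name round_up_16 is hypothetical, and the sketch does not guard against overflow for sizes within 15 bytes of SIZE_MAX. Sizes that are already a multiple of 16 are left unchanged, so 16 stays 16 and 17 becomes 32.

```c
#include <stddef.h>

/* Round `size` up to the next multiple of 16 bytes. Exact multiples are
 * unchanged. Overflow near SIZE_MAX is not handled in this sketch. */
size_t round_up_16(size_t size)
{
    return ((size + 15) >> 4) << 4;  /* same as (size + 15) / 16 * 16 */
}
```

A call site would then look like `malloc(round_up_16(n))`.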

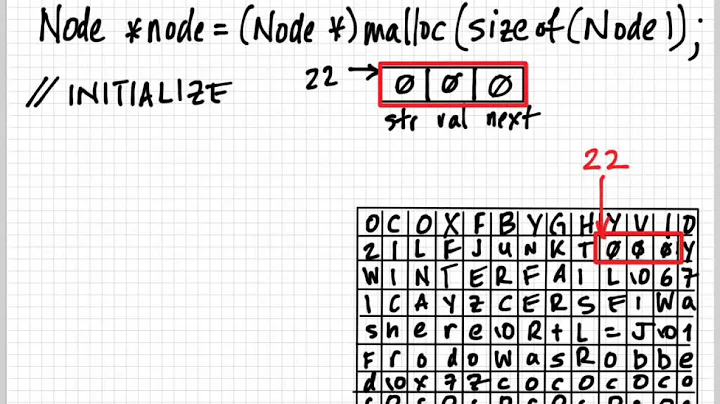

Here is a fairly simple example of trying to reduce the number of blocks allocated and deallocated. Let's say that we have a linked list of variable-sized blocks of memory. The struct for the nodes in the linked list looks something like this:

```c
typedef struct MyNodeStruct {
    struct MyNodeStruct *pNext;   // next node in the list
    unsigned char *pMegaBuffer;   // the node's variable-sized buffer
} MyNodeStruct;
```

There are two ways of allocating the memory for a particular buffer and its node. The first is a standard allocation of the node followed by a separate allocation of the buffer, as in the following.

```c
MyNodeStruct *pNewNode = malloc(sizeof(MyNodeStruct));

if (pNewNode)
    pNewNode->pMegaBuffer = malloc(15000);
```

However, another way is to use a single memory allocation with pointer arithmetic, so that one malloc() provides both memory areas.

```c
MyNodeStruct *pNewNode = malloc(sizeof(MyNodeStruct) + 15000);

if (pNewNode)
    pNewNode->pMegaBuffer = ((unsigned char *)pNewNode) + sizeof(MyNodeStruct);
```

However, if you use this single-allocation method, you need to be consistent in how you use the pointer pMegaBuffer so that you never accidentally call free() on it; freeing the node releases the buffer as well. And if you need to replace the buffer with a larger one, you must free the node and reallocate the node and buffer together. So there is more work for the programmer.
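
The single-allocation approach can be combined into a minimal sketch with hypothetical node_create and list_destroy helpers, which are not from the original answer. It repeats the struct so that it compiles on its own, and it does not check sizeof(MyNodeStruct) + bufferSize for overflow.

```c
#include <stdlib.h>

typedef struct MyNodeStruct {
    struct MyNodeStruct *pNext;
    unsigned char *pMegaBuffer;
} MyNodeStruct;

/* Hypothetical helper: allocate a node and its buffer with one malloc().
 * The buffer starts immediately after the node inside the same block. */
MyNodeStruct *node_create(size_t bufferSize)
{
    MyNodeStruct *pNewNode = malloc(sizeof(MyNodeStruct) + bufferSize);
    if (pNewNode) {
        pNewNode->pNext = NULL;
        pNewNode->pMegaBuffer = (unsigned char *)pNewNode + sizeof(MyNodeStruct);
    }
    return pNewNode;
}

/* Free the whole list. Only the node pointer is passed to free(); calling
 * free(pMegaBuffer) would be an error because it is not a malloc() result. */
void list_destroy(MyNodeStruct *pHead)
{
    while (pHead) {
        MyNodeStruct *pNext = pHead->pNext;
        free(pHead);
        pHead = pNext;
    }
}
```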

Solution 2

Another reason for malloc() to fail on Windows is if your code allocates in one DLL and deallocates in a different DLL or EXE.

Unlike on Linux, on Windows each DLL or EXE has its own links to the runtime libraries. That means you can link your program, built with the 2013 CRT, to a DLL compiled against the 2008 CRT.

The different runtimes might handle the heap differently. The Debug and Release CRTs definitely handle the heap differently. If you malloc() in a module built against the Debug CRT and free() in a module built against the Release CRT, it will break horribly, and this might be causing your problem.
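
A common way to avoid mixing heaps across modules is for the DLL that allocates memory to also export the function that frees it. The sketch below is hypothetical: the mylib_ names and the MYLIB_API macro are illustrative assumptions. On Windows this code would live in the DLL, and callers would use mylib_free() instead of their own free().

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical export macro: __declspec(dllexport) when building on
 * Windows, nothing elsewhere. */
#ifdef _WIN32
#define MYLIB_API __declspec(dllexport)
#else
#define MYLIB_API
#endif

/* The library allocates with its own CRT's malloc()... */
MYLIB_API char *mylib_make_greeting(const char *name)
{
    static const char prefix[] = "Hello, ";
    size_t plen = sizeof prefix - 1;
    size_t nlen = strlen(name);
    char *s = malloc(plen + nlen + 1);
    if (s) {
        memcpy(s, prefix, plen);
        memcpy(s + plen, name, nlen + 1);  /* includes the terminating '\0' */
    }
    return s;
}

/* ...and exports a matching free function, so the memory is released by
 * the same CRT heap that allocated it, not by the caller's CRT. */
MYLIB_API void mylib_free(void *p)
{
    free(p);
}
```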


Pedro Alves

Bachelor's degree in Applied Mathematics from Universidade de Campinas (2013), MSc (2016), and currently a PhD student in Computer Science at the same university. His research focuses on information security, specifically cryptography and homomorphic encryption, and on high-performance computing. He has strong experience with parallel programming using CUDA and common CPU parallel libraries such as PThreads, OpenMP, and MPI. He has also worked in geophysics and biology, developing specialized software on the CUDA platform.

Updated on September 07, 2020

Comments

Pedro Alves almost 4 years

I'm porting a C project from Linux to Windows. On Linux it is completely stable. On Windows it works well most of the time, but sometimes I get a segmentation fault.

I'm using Microsoft Visual Studio 2010 to compile and debug, and it looks like my malloc calls sometimes simply don't allocate memory, returning NULL. The machine has free memory, and the program has already passed through that code a thousand times, but it still happens, in different locations.

Like I said, it doesn't happen all the time or in the same location; it looks like a random error.

Is there something I need to be more careful about on Windows than on Linux? What could I be doing wrong?

Daniel Pryden almost 12 years

Are you sure you really have free memory? IIRC, the default behavior on Linux is very aggressive about overcommitting memory, which can lead to malloc returning fine but then your app thrashing when you try to actually touch the pages it returned.

Jonathan Leffler almost 12 years

One problem appears to be that you are not taking into account the possibility of a NULL pointer being returned. Have you run the program under valgrind on Linux?

wkl almost 12 years

There could be multiple reasons; here are some related threads: stackoverflow.com/questions/1609669/…

Daniel Pryden almost 12 years

Adrian Cornish almost 12 years

There may be free memory, but it may be fragmented so that the OS cannot return a block of the size you requested. Windows and Linux manage memory differently.

cababunga almost 12 years

Are you compiling it into a 32-bit binary and then trying to use more than 4 GB?

Steve Jessop almost 12 years

@cababunga: you don't even get 4GB on 32-bit processes.

GreekMustard almost 12 years

What do you mean, "it looks like"? Assert your calls properly and find out for certain. Wrap malloc in your own function that logs the input and output values. More than likely you are passing invalid values to malloc, maybe corrupt 64-bit integers. Windows seldom returns NULL. The virtual memory setup means the system can even give you more memory than it actually has; it's not until you start writing to the memory that Windows bothers to do anything.
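
The logging wrapper suggested in the comment above could be sketched as follows. The names logged_malloc and LOGGED_MALLOC are hypothetical. Sizes are printed as unsigned long long so that a huge, wrapped-around request is shown in full rather than truncated.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical logging wrapper: record every request size and the pointer
 * returned, so a NULL result can be traced back to the call that caused it. */
void *logged_malloc(size_t size, const char *file, int line)
{
    void *p = malloc(size);
    fprintf(stderr, "%s:%d: malloc(%llu) -> %p\n",
            file, line, (unsigned long long)size, p);
    return p;
}

/* Captures the call site automatically. */
#define LOGGED_MALLOC(size) logged_malloc((size), __FILE__, __LINE__)
```

Replacing malloc(n) with LOGGED_MALLOC(n) at suspicious call sites shows which call received the NULL and what size it asked for.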

Jay D almost 12 years

The second allocation strategy is better, because then you will have a consecutive memory chunk of (sizeof(myNodeStruct) + 15000), and you are correctly adjusting the pMegaBuffer pointer accordingly. The first strategy is highly discouraged for various reasons.

Pedro Alves almost 12 years

Thanks for your answer. Now I understand much better how memory allocation works.

Jeff Mercado over 10 years

How in the world is this supposed to be "safe?"

CCC over 10 years

Learn recursive functions and you will know

Jeff Mercado over 10 years

I know recursion... That's not my point. If an allocation fails because you're out of memory, doing another (recursive) call to allocate again will not help at all.

mity about 10 years

Furthermore, if you are out of heap memory, your recursive function will also eat all the memory reserved for the current thread's stack, likely crashing the process.

codenheim about 10 years

-1 Terrible answer. Instead of crashing with a failed malloc, we crash with a stack overflow and go off on a wild goose chase. I would downvote -10 if I could, just so no one tries this. Why don't people delete dangerous answers with bad bugs?

codenheim about 10 years

malloc doesn't care about the content (the address) in pNewNode. By the time pNewNode is assigned the return value, malloc has already returned. Likely you misinterpreted another issue. Possibly you were using C++ and pNewNode was a member of a class, and you called this code in a member function using a pointer that was itself invalid. Or pNewNode was a local variable and your stack got corrupted prior to this call.

Yeow_Meng about 8 years

malloc(-1) or other negative numbers could fail because malloc takes type size_t, which is an unsigned data type. Hence your requested size is implicitly converted to an unsigned type. The implicit conversion can yield a very large positive number, and your call to malloc fails because the OS can't (or won't) give you that much memory.

Richard Chambers about 8 years

@Yeow_Meng thanks for the specific example of how a size for malloc() may become very large accidentally. I added an illustration of a programming error that could do that.

Keith Thompson about 8 years

A simple while loop would exhibit the same useless behavior without the danger of overflowing the stack.

Chris Watts over 7 years

This answer is actually hilarious. I love it

hmijail almost 7 years

@KeithThompson To be fair, a while doesn't provide the "malloc or die trying" effect.

carefulnow1 over 6 years

Not to be picky, and while I'm very aware there are enough issues with the answer, should you really be casting the result of malloc? ;) stackoverflow.com/questions/605845/…