Why is gzip slow despite CPU and hard drive performance not being maxed out?

Solution 1

I found it out:

The reason is that gzip operates on (in terms of CPU speed vs HD seek speed these days) extremely low buffer sizes.

It reads a few KB from from the input file, compresses it, and flushes it to the output file. Given the fact that this requires a hard drive seek, only a few operations can be done per seconds.

The reason my performance did not scale is because already one gzip was seeking like crazy.

I worked around this by using the unix buffer utility:

buffer -s 100000 -m 10000000 -p 100 < file1.json | gzip > file1.json.gz

By buffering a lot of input before sending it to gzip, the number of small seeks can be dramatically reduced. The options:

-

-sand-mare to specify the size of the buffer (I believe it is in KB, but not sure) -

-p 100makes sure that the data is only passed to gzip once the buffer is 100% filled

Running four of these in parallel, I could get 4 * 25 MB/s throughput, as expected.

I still wonder why gzip doesn't allow to increase the buffer size - this way, it is pretty useless if run on a spinning disk.

EDIT: I tried out a few more compression programs behaviour:

-

bzip2only processes 2 MB/s due to its stronger / more CPU intensive compression -

lzopseems to allow larger buffers: 70 MB/s per core, and 2 cores can max out my HD without over-seeking

Solution 2

After looking at the first five or so lectures in the MIT OpenCourseware for 6.172: "Performance Engineering of Software Systems", I ran the Linux performance analyzer 'perf' on a moderately large test file. The result appears to show pipeline stalls where one instruction has to wait for the result of a preceding one.

│ while (lookahead != 0) {

│ /* Insert the string window[strstart .. strstart+2] in the

│ * dictionary, and set hash_head to the head of the hash chain:

│ */

│ INSERT_STRING(strstart, hash_head);

2.07 │ movzbl 0x8096d82(%edx),%eax

3.99 │ mov %edx,%ebp

│ shl $0x5,%ecx

0.03 │ and $0x7fff,%ebp

1.94 │ xor %ecx,%eax

1.43 │ and $0x7fff,%eax

2.01 │ mov %eax,0x805e588

2.40 │ add $0x8000,%eax

0.88 │ movzwl 0x8062140(%eax,%eax,1),%ecx

23.79 │ movzwl %cx,%edi

│ /* Find the longest match, discarding those <= prev_length.

The second last instruction is copying to %ecx and the last one has to wait (stalling the pipeline) until the %cx register has data ready to use. This pipeline stall holds up the containing loop.

This is a result of some really obscure 'old-school' C programming style.

Solution 3

A tip that might take it to yet another level of speed on a multi-core/hyperthreading CPU:

(assuming Ubuntu)

sudo apt-get install moreutils

moreutils contains among other things "gnu parallel" - which has a lot of options to help use more of your CPU.

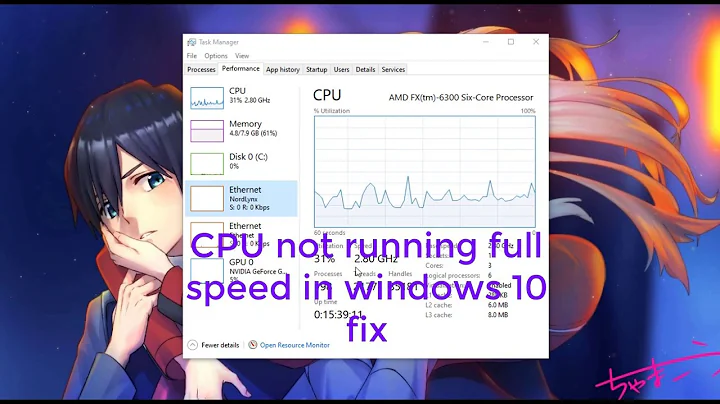

Related videos on Youtube

nh2

Updated on September 18, 2022Comments

-

nh2 over 1 year

I have some JSON files, 20 GB each, that I want to compress with

gzip:gzip file1.jsonThis takes up one full CPU core, all fine.

It processes around 25 MB/s (checked in

atop), my hard drive can read 125 MB/s and I have 3 free processor cores, so I expect to get a speed-up when compressing multiple files in parallel. So I run in other terminals:gzip file2.json gzip file3.json gzip file4.jsonSurprisingly, my throughput does not increase; CPU is around 25% on each core, and my HD still only reads at 25 MB/s.

Why and how to address it?

-

nh2 almost 10 years@SimonKuang I suspect that

ddcan do the same with itsbs=option, yes. -

Dave L. over 8 yearsSounds like an interesting coincidence that for a single file the block size happened to fully utilize both a single CPU core and a drive's IOPS.