Why is swappiness set to 60 by default?

Solution 1

Since kernel 2.6.28, Linux uses a Split Least Recently Used (LRU) page replacement strategy. Pages with a filesystem source, such as program text or shared libraries, belong to the file cache. Pages without filesystem backing are called anonymous pages and consist of runtime data such as the stack space reserved for applications. Typically, pages belonging to the file cache are cheaper to evict from memory (as these can simply be read back from disk when needed). Since anonymous pages have no filesystem backing, they must remain in memory for as long as a program needs them, unless there is swap space to store them in.

It is a common misconception that a swap partition would somehow slow down your system. Not having a swap partition does not mean that the kernel won't evict pages from memory, it just means that the kernel has fewer choices in regards to which pages to evict. The amount of swap available will not affect how much it is used.
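To check how much swap is configured and actually in use on a given machine, one option is to parse `/proc/meminfo`. The following is a minimal sketch, assuming the usual `SwapTotal` and `SwapFree` fields; the helper name `swap_usage_kib` is made up for this example.

```python
def swap_usage_kib(meminfo_text):
    """Return (swap_total, swap_used) in KiB from /proc/meminfo-style text.

    Hypothetical helper for illustration; missing fields are treated as 0.
    """
    fields = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if not sep or not parts:
            continue
        try:
            fields[key.strip()] = int(parts[0])
        except ValueError:
            continue  # skip any line whose value is not a plain integer
    total = fields.get("SwapTotal", 0)
    free = fields.get("SwapFree", 0)
    return total, total - free


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as handle:
            total, used = swap_usage_kib(handle.read())
        print(f"swap total: {total} KiB, swap used: {used} KiB")
    except OSError as exc:
        print("could not read /proc/meminfo (not Linux?):", exc)
```

On a system without any swap configured, both numbers would simply be 0.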

Linux can cope with the absence of a swap space because, by default, the kernel memory accounting policy may overcommit memory. The downside is that when physical memory is exhausted, and the kernel cannot swap anonymous pages to disk, the out-of-memory-killer (OOM-killer) mechanism will start killing off memory-hogging "rogue" processes to free up memory for other processes.

The vm.swappiness option is a modifier that shifts the balance between evicting file cache pages and swapping out anonymous pages. The file cache is given an arbitrary priority value of 200, from which the vm.swappiness modifier is deducted (file_prio=200-vm.swappiness). Anonymous pages, by default, start out with 60 (anon_prio=vm.swappiness). This means that, by default, the priority weights stand moderately in favour of keeping anonymous pages in memory (anon_prio=60, file_prio=200-60=140), that is, the file cache is reclaimed more readily. The behaviour is defined in mm/vmscan.c in the kernel source tree.
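The arithmetic described here can be sketched in a few lines of Python. This only illustrates the two weights as this answer describes them; the function name `scan_priorities` is made up for the example, and the real logic in mm/vmscan.c takes further factors into account and may differ between kernel versions.

```python
def scan_priorities(swappiness=60):
    """Return (anon_prio, file_prio) as described in this answer.

    Hypothetical helper for illustration only; this is not a kernel API.
    """
    if not 0 <= swappiness <= 100:
        raise ValueError("this sketch assumes swappiness in the range 0..100")
    anon_prio = swappiness          # weight for anonymous pages
    file_prio = 200 - swappiness    # weight for file cache pages
    return anon_prio, file_prio


if __name__ == "__main__":
    for value in (0, 60, 100):
        anon, file_ = scan_priorities(value)
        print(f"vm.swappiness={value}: anon_prio={anon}, file_prio={file_}")
```

With the default of 60 this gives the pair (60, 140) quoted above, and with 100 both weights come out equal.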

Given a vm.swappiness of 100, the priorities would be equal (file_prio=200-100=100, anon_prio=100). This would make sense for an I/O-heavy system where pages from the file cache should not be evicted in favour of anonymous pages.

Conversely, setting vm.swappiness to 0 will prevent the kernel from evicting anonymous pages in favour of pages from the file cache. This might be useful if programs do most of their caching themselves, which might be the case with some databases. On desktop systems this might improve interactivity, but the downside is that I/O performance will likely take a hit.

The default value has most likely been chosen as an approximate middle ground between these two extremes. As with any performance parameter, adjusting vm.swappiness should be based on benchmark data comparable to real workloads, not just a gut feeling.
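Before benchmarking, it helps to record which value a machine is actually running with. A minimal sketch, assuming the standard Linux location `/proc/sys/vm/swappiness`; the helper name `read_swappiness` is made up for this example.

```python
from pathlib import Path

# Standard procfs location of the setting on Linux.
SWAPPINESS_PATH = Path("/proc/sys/vm/swappiness")


def read_swappiness(path=SWAPPINESS_PATH):
    """Return the current vm.swappiness value as an int (hypothetical helper)."""
    return int(Path(path).read_text().strip())


if __name__ == "__main__":
    try:
        print("vm.swappiness =", read_swappiness())
    except OSError as exc:
        print("could not read swappiness (not Linux?):", exc)
```

For a benchmark run, the value is typically changed as root with something like `sysctl vm.swappiness=10`; such a change does not survive a reboot unless it is also written to the sysctl configuration.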

Solution 2

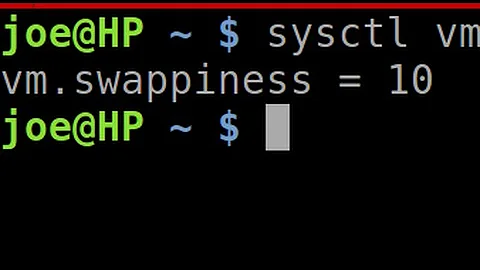

The problem is that there is no one default value that will suit all needs. Setting the swappiness option to 10 may be an appropriate setting for desktops, but the default value of 60 may be more suitable for servers. In other words, swappiness needs to be tweaked according to the use case - desktop vs. server, application type and so on.

Furthermore, the Linux kernel uses otherwise idle memory for the disk cache, because leaving RAM unused would be inefficient. Having disk data in the cache means that if something needs the same data again, it will likely get it from memory, which is much quicker than reading it from the disk again. The swappiness option controls how strongly the Linux kernel prefers swapping out to disk over shrinking the disk cache: should it remove older data from the cache, or should it swap out some program pages?

This article may shed some light on the topic as well, especially on how the swapping tendency is estimated.

Solution 3

Adding more detail to the answers above.

As we're using VMs more and more, a Linux host may be a VM in one of these cloud environments. In examples 1 and 2 we have a good idea of the applications running and so of how much RAM they consume. In example 3, not so much.

- Example 1

A high-performance private cloud (think the sort most banks would pay millions for), where the disk is provided by a very expensive storage array with VERY good I/O. Part of that storage may be in RAM (in the disk array), backed by SSDs, backed by regular disks with spindles. In this situation the disk that the VM sees might be only a little slower than the RAM it can access. For a single VM there isn't much difference between swap and RAM.

- Example 2

The same as example 1, but instead of a single VM you have hundreds, thousands or more. In this situation we find that server (hypervisor) RAM is cheap and plentiful, whereas storage RAM is expensive (relatively speaking). If we split the RAM requirements between hypervisor RAM and swap provided by our very expensive storage array, we quickly use up all of the RAM in the storage array; blocks are then served by the SSDs and finally by the spindles. Suddenly everything starts getting really slow. In this case we probably want to assign plenty of RAM (from the hypervisor) to the VM and set swappiness to 0 (only swap to avoid out-of-memory conditions), as the cumulative effect of all those VMs will affect the performance of the storage. Setting the swappiness higher may still give a perceived performance boost, because there will be more unused RAM once apps that are not currently being interacted with have been (mostly) swapped out.

- Example 3

A modern laptop or desktop, probably with an SSD. The memory requirements are considered unknown. Which browser will the user use, how many tabs will they have open, will they also be editing a document, a RAW image or possibly a video? All of these consume RAM. Setting the swappiness to a low value and performing other file system tweaks will mean that there are fewer writes to the SSD, and so it will last longer.


mcfish

Updated on September 18, 2022

Comments

-

mcfish over 1 year

I just read some stuff about swappiness on Linux. I don't understand why the default is set to 60.

In my opinion this parameter should be set to 10 in order to reduce swapping. Swap is on my hard drives, so it is much slower than my memory.

Why did they configure the kernel like that?

- Geremia, about 7 years ago: @Mat See this for how to do swappiness benchmarking.

- mcfish, over 10 years ago: I don't understand why 60 is more suitable for servers. I do have servers, and some processes go into swap even when we have 40% of free RAM. That doesn't make sense to me.

- replay, over 10 years ago: It makes sense to move parts of the memory into swap if it is very unlikely that they are going to be accessed. That way Linux keeps as much of the actual RAM free as possible, to be ready for situations when it actually needs it.

- gerrit, almost 8 years ago: How does installing the OS on a solid state device affect the tradeoff?


- Thomas Nyman, over 7 years ago: @gerrit The type of underlying storage medium is irrelevant. That kind of detail is not visible to the memory management subsystem.

- MatrixManAtYrService, over 7 years ago: The type of underlying storage medium is irrelevant from a memory usage perspective. You might consider lowering the swappiness if that medium supports only a limited number of reads/writes (e.g. flash memory), in order to increase its longevity.


- Thomas Nyman, over 7 years ago: @MatrixManAtYrService Thanks to internal wear-leveling and built-in redundancy, modern SSDs (which the question in the previous comment refers to) have been shown to last up to 2 PB (!) of writes before exhibiting errors. Even the cheaper drives in those experiments lasted for 300TB before errors occurred, far beyond the official warranty rating of around 100TB. At least in my opinion, adjusting swappiness to accommodate an SSD on workstations or laptops isn't really warranted.

- MatrixManAtYrService, over 7 years ago: @ThomasNyman You make a good point; for most users it's not worth worrying about. The case that brought me to this post involved swap space on an SD card, which I recognize is a bit of an edge case.


- Thomas Nyman, about 7 years ago: @MatrixManAtYrService That's a fair point as well. This question has several examples of anecdotal evidence pointing to SD card failures in Raspberry Pis under long-term use, although it is difficult to tell in these cases how much the swapping has contributed to the failures. Other contributing factors could be, for instance, logging and temporary files. There might be a good new question in here, but it is probably not relevant to the OP's question.

- Jules, almost 7 years ago: SSD write endurance concerns for an end-user system are overrated. Modern SSDs typically survive write volumes of many hundreds of terabytes. A typical desktop system, even with heavy swap usage, is unlikely to use that much for many years of operation.

- Petr, over 4 years ago: Not sure if something has changed in recent kernel versions, but setting swappiness to 0 doesn't seem to have any significant effect. On an app server with 16GB of RAM, with 14GB used (2GB of that being used by disk caches / buffers), the kernel already starts swapping processes out in favor of disk caches, even with swappiness set to 0.

- X.LINK, about 3 years ago: Wrong. They may last that long, but the write-based OEM warranties say otherwise. Looking at the drives' datasheets will give some nasty surprises, since some are only rated for 100TB of writes before the warranty is void (yes, void), even before the "years-based" warranty runs out. Writing 250GB per week is very easy nowadays, even for a granny, since YouTube and JavaScript-infested websites are the norm. In short: above the rated write-based warranty, you're playing a game with your data.

- X.LINK, about 3 years ago: We did have "field-tested" reviews of SSD endurance, but they are far outdated now and were done with empty drives, which does not represent reality. Those were made with "3D" 40nm TLC SSDs (roughly 2/3 of the lifespan of 20nm "2D" SSDs, deceptively sold as "lasts as long as MLC SSDs" back then), which haven't been sold since 2016, as they were quickly replaced by 20nm 3D TLC (the lower the nm, the worse). Nowadays SSDs are 1xnm "3D" TLC (even worse), while QLC (worst of the worst) is replacing the latter. In other words: we have had no real data about SSD lifespan since 2016, let alone data retention.