ZFS - Impact of L2ARC cache device failure (Nexenta)

Solution 1

ZFS does not do disk I/O; the device drivers below ZFS do. If the device does not respond in a timely manner, or, as in this case, disrupts all other devices on the expander, then it is not visible to ZFS as a failure. All ZFS sees is a slow I/O.

There is a bug in Intel X25-M firmware that affects the drive's behaviour under heavy load and can cause reset storms. This problem affects all operating systems and cannot be solved at the OS layer. Please contact your hardware supplier for fixes or remediation.

If a read is expected to be satisfied by the L2ARC, the read is attempted there first, and ZFS relies on the lower-layer drivers to report an error. In this case, the drive keeps resetting and retrying for as long as 5 minutes before the I/O is declared failed, depending on the driver, the device, and the default timeout settings. Only after the lower-layer drivers declare the I/O failed will ZFS retry the read from the pool.
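The read path described in this answer can be modelled with a toy Python simulation. Everything in it is a hypothetical illustration (the `CacheDevice` class, `DeviceTimeout`, `read_block`, and the scaled-down retry time standing in for minutes of driver retries); it is not how ZFS or the SCSI/SATA drivers are implemented. It only shows why a cache device that hangs instead of failing cleanly turns a cache hit into a long stall before the pool is consulted.

```python
import time
from dataclasses import dataclass


class DeviceTimeout(Exception):
    """Stands in for the lower-layer driver finally failing an I/O."""


@dataclass
class CacheDevice:
    """Hypothetical L2ARC device; retry_seconds models driver reset/retry time."""
    healthy: bool
    retry_seconds: float

    def read(self, block: int) -> bytes:
        if self.healthy:
            return f"cached:{block}".encode()
        # A misbehaving SSD is not failed immediately: the driver keeps
        # resetting and retrying, so the caller only sees a slow I/O.
        time.sleep(self.retry_seconds)
        raise DeviceTimeout(f"block {block} timed out")


def pool_read(block: int) -> bytes:
    """Hypothetical read from the pool's main (mirrored) disks."""
    return f"pool:{block}".encode()


def read_block(block, cache, fallback):
    """Try the cache first; use the fallback only after the cache I/O has failed."""
    start = time.monotonic()
    try:
        data, source = cache.read(block), "l2arc"
    except DeviceTimeout:
        data, source = fallback(block), "pool"
    return data, source, time.monotonic() - start


if __name__ == "__main__":
    # 0.05 s stands in for the minutes a real driver may spend retrying.
    print(read_block(7, CacheDevice(True, 0.05), pool_read)[:2])   # (b'cached:7', 'l2arc')
    print(read_block(7, CacheDevice(False, 0.05), pool_read)[:2])  # (b'pool:7', 'pool')
```

The design point is that the fallback sits behind the exception: nothing reaches the pool until the device layer gives up, so the stall time is set by the driver's timeout rather than by ZFS.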

NexentaStor's volume-check and disk-check runners look for additional error messages and alert you via email and fault logging. The disk-check runner has been improved in the 3.1 release to alert you specifically to the conditions exhibited by SSDs with broken firmware.

Bottom line: your hardware is faulty and will need to be fixed or replaced.

Solution 2

Are you connecting the X25-M SSD through the backplane? There's a known issue with Nexenta when accessing the L2ARC over a backplane. Your best bet is to connect the SSD directly to a SATA port on the motherboard, and make sure that port is configured to use AHCI.

If you're running anything mission-critical on this server, I would switch to an SLC SSD (such as the X25-E or a STEC SSD). If not, you'll probably be OK with the X25-M.

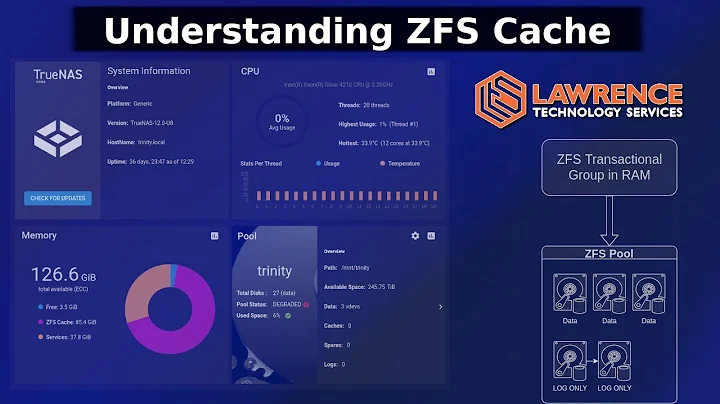

Related videos on Youtube

ewwhite

Updated on September 18, 2022

Comments

ewwhite almost 2 years: I have an HP ProLiant DL380 G7 server running as a NexentaStor storage unit. The server has 36GB of RAM, two LSI 9211-8i SAS controllers (no SAS expanders), two SAS system drives, 12 SAS data drives, a hot-spare disk, an Intel X25-M L2ARC cache and a DDRdrive PCI ZIL accelerator. This system serves NFS to multiple VMware hosts. I also have about 90-100GB of deduplicated data on the array.

I've had two incidents where performance tanked suddenly, leaving the VM guests and the Nexenta SSH/web consoles inaccessible and requiring a full reboot of the array to restore functionality. In both cases, it was the Intel X25-M L2ARC SSD that failed or was "offlined". NexentaStor failed to alert me to the cache failure; however, the general ZFS FMA alert was visible on the (unresponsive) console screen.

The `zpool status` output showed:

      pool: vol1
     state: ONLINE
      scan: scrub repaired 0 in 0h57m with 0 errors on Sat May 21 05:57:27 2011
    config:

            NAME                        STATE    READ WRITE CKSUM
            vol1                        ONLINE      0     0     0
              mirror-0                  ONLINE      0     0     0
                c8t5000C50031B94409d0   ONLINE      0     0     0
                c9t5000C50031BBFE25d0   ONLINE      0     0     0
              mirror-1                  ONLINE      0     0     0
                c10t5000C50031D158FDd0  ONLINE      0     0     0
                c11t5000C5002C823045d0  ONLINE      0     0     0
              mirror-2                  ONLINE      0     0     0
                c12t5000C50031D91AD1d0  ONLINE      0     0     0
                c2t5000C50031D911B9d0   ONLINE      0     0     0
              mirror-3                  ONLINE      0     0     0
                c13t5000C50031BC293Dd0  ONLINE      0     0     0
                c14t5000C50031BD208Dd0  ONLINE      0     0     0
              mirror-4                  ONLINE      0     0     0
                c15t5000C50031BBF6F5d0  ONLINE      0     0     0
                c16t5000C50031D8CFADd0  ONLINE      0     0     0
              mirror-5                  ONLINE      0     0     0
                c17t5000C50031BC0E01d0  ONLINE      0     0     0
                c18t5000C5002C7CCE41d0  ONLINE      0     0     0
            logs
              c19t0d0                   ONLINE      0     0     0
            cache
              c6t5001517959467B45d0     FAULTED     2   542     0  too many errors
            spares
              c7t5000C50031CB43D9d0     AVAIL

    errors: No known data errors

This did not trigger any alerts from within Nexenta.
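Since NexentaStor did not alert on the FAULTED cache device, one stopgap is to scan `zpool status` text for any config entry that is not ONLINE or AVAIL. The Python sketch below is a hypothetical parser written against the layout shown in the question; it is not a NexentaStor or ZFS tool, the function and constant names are made up, and the text format of `zpool status` is not a stable interface, so treat it as a heuristic.

```python
from __future__ import annotations

import re

# States treated as healthy; every other state is reported.
HEALTHY_STATES = {"ONLINE", "AVAIL"}

# Heuristic for device lines such as
#   "c6t5001517959467B45d0    FAULTED      2   542     0  too many errors".
# Section labels like "logs" or "cache" carry no state and do not match.
DEVICE_LINE = re.compile(r"^\s+(?P<name>\S+)\s+(?P<state>[A-Z]+)\b(?P<rest>.*)$")


def unhealthy_devices(status_text: str) -> list[tuple[str, str, str]]:
    """Return (name, state, details) for config entries that are not ONLINE/AVAIL."""
    problems = []
    in_config = False
    for line in status_text.splitlines():
        if line.startswith("config:"):
            in_config = True
            continue
        if line.startswith("errors:"):
            in_config = False
        if not in_config:
            continue
        match = DEVICE_LINE.match(line)
        if match is None or match.group("name") == "NAME":  # skip the column header
            continue
        if match.group("state") not in HEALTHY_STATES:
            problems.append(
                (match.group("name"), match.group("state"), match.group("rest").strip())
            )
    return problems


# Trimmed excerpt of the output in the question.
ZPOOL_SAMPLE = """\
  pool: vol1
 state: ONLINE
config:

        NAME                       STATE     READ WRITE CKSUM
        vol1                       ONLINE       0     0     0
        cache
          c6t5001517959467B45d0    FAULTED      2   542     0  too many errors
        spares
          c7t5000C50031CB43D9d0    AVAIL

errors: No known data errors
"""

if __name__ == "__main__":
    print([name for name, _, _ in unhealthy_devices(ZPOOL_SAMPLE)])
    # ['c6t5001517959467B45d0']
```

On a live system the text could come from `subprocess.run(["zpool", "status"], capture_output=True, text=True).stdout`, with any non-empty result wired to whatever alerting is already in place. Note that the pool itself still reads ONLINE here, which is why checking only the pool state would miss the faulted cache device.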

I was under the impression that an L2ARC failure would not impact the system, but in this case it was clearly the culprit. I've never seen any recommendation to RAID the L2ARC. Removing the bad SSD from the server entirely got me back up and running, but I'm concerned about the impact of the device failure (and maybe about the lack of notification from NexentaStor as well).

Edit - What's the current best-choice SSD for L2ARC cache applications these days?

Admin about 13 years: Is it possible that your SSD or SATA port is having hardware issues?

Admin about 13 years: It's an HP SAS backplane. I've never seen one fail or have trouble in many (Linux) deployments, but I'm pretty sure that the failure is a function of the consumer-class SSD in place. I can accept the failure, but the impact on the remaining disks and the overall storage system is the bigger problem.

Admin about 13 years: Notably, Pogo Linux (which I understand to be Nexenta's largest integrator/reseller) no longer offers Intel X25 devices as an option for L2ARC or ZIL due to problems with later versions of Intel's firmware.

Admin about 13 years: And the recommended replacement is (make, model, price)?

Admin about 13 years: This question really hasn't been answered. I'm looking for a recommendation on what device to use for L2ARC in a ZFS-based system.

Admin about 13 years: Pogo is selling two different options: STEC Zeus IOPS and Pliant LB150/LB200M/LB400M. Either is an order of magnitude more expensive than the Intel X25 series had been.

Admin about 13 years: By the way, the new Intel 320 series may be worth trying as an L2ARC or even a ZIL device: it is capacitor-backed, and although its write endurance is limited (up to 60 terabytes, depending on the model), the remaining wear percentage can be tracked with SMART attribute E9 (which starts at 100 and counts down to 1). I suspect that many ZFS users could replace this device as often as needed to keep E9 from approaching 1, without the cumulative expense ever approaching the cost of a comparably sized SLC drive.
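Tracking E9 as suggested could be scripted. The Python sketch below works under stated assumptions: it parses text in the attribute-table layout that `smartctl -A` prints, reads the normalized VALUE column for attribute 0xE9 (decimal 233), and applies a replacement threshold of 10 that is made up purely for illustration. The function names and the sample lines are hypothetical, not output from the thread.

```python
from __future__ import annotations

# SMART attribute 0xE9 (decimal 233); on Intel SSDs it is typically named
# Media_Wearout_Indicator. Assumes the column layout printed by `smartctl -A`:
# ID#, ATTRIBUTE_NAME, FLAG, VALUE, WORST, THRESH, TYPE, UPDATED, WHEN_FAILED, RAW_VALUE
WEAR_ATTR_ID = 0xE9


def wear_remaining(smartctl_output: str) -> int | None:
    """Return the normalized VALUE of attribute 0xE9 (100 when new), or None if absent."""
    for line in smartctl_output.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[0].isdigit() and int(fields[0]) == WEAR_ATTR_ID:
            return int(fields[3])  # fourth column is the normalized VALUE
    return None


def should_replace(value: int | None, threshold: int = 10) -> bool:
    """Hypothetical policy: retire the SSD once the indicator drops to the threshold."""
    return value is not None and value <= threshold


# Made-up sample lines for illustration only; not output from the thread.
SMART_SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  9 Power_On_Hours          0x0032   100   100   000    Old_age   Always       -       4210
233 Media_Wearout_Indicator 0x0032   097   097   000    Old_age   Always       -       0
"""

if __name__ == "__main__":
    value = wear_remaining(SMART_SAMPLE)
    print(value, should_replace(value))  # 97 False
```

Reading the normalized VALUE rather than RAW_VALUE matches the comment's description of a counter that starts at 100 and counts down; where exactly to set the threshold is a cost and risk trade-off, not something the thread specifies.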

Admin about 13 years: So, at, say, $3800 for a 150GB Pliant device, am I better off just buying more RAM and forgoing the L2ARC?

ewwhite about 13 years: Yes, I'm connecting through a normal drive bay. I have other installations with the same Intel SSD running as L2ARC (on Sun and HP hardware). This particular one has given me trouble, though. My research seemed to indicate that the L2ARC didn't need to be as robust as the ZIL (hence the SLC and PCI-based ZIL solutions, and a consumer drive for the L2ARC). Has this changed?

miroque about 13 years: I would try plugging the SSD directly into the motherboard and see if that works. If you have a spare working X25-M, you could swap it in to see whether the SSD itself is bad. As for the SLC SSD, it depends on your level of risk: if you're running software under an SLA that can never go down and has to run fast, it may be cheaper to buy a high-end SSD.

ewwhite about 13 years: What I'm saying is that the Intel X25-M has been recommended for L2ARC in most of the articles and discussions I've seen online. If that's no longer the case, what's the preferred device?

Naman Bansal about 13 years: @ewwhite: In theory, the failure of an L2ARC device should be non-disruptive, because ZFS can simply fall back to reading from disk (obviously performance would take a hit). In practice... well, it sounds like you've hit a ZFS or SCSI driver bug that is triggered by the SSD's behaviour.

ewwhite about 13 years: @Tom Shaw: Well, the Intel X25-M really did fail, and I've removed it from the system. I'm more interested in a recommendation for a better replacement. I'll have Nexenta support address the lack of notifications on cache device failure. And yes, I assumed that an L2ARC failure would be non-disruptive, as you suggested. I'd like to know whether the small amount of deduplicated data in the zpool could have been a factor here.

miroque about 13 years: @ewwhite: Again, it comes down to your level of acceptable risk.

ewwhite about 13 years: Thank you. So I will no longer use the Intel X25. I'd like a tested recommendation for a new L2ARC SSD to replace it.
