ZFS pool slow sequential read

I managed to get speeds very close to the numbers I was expecting.

I was looking for 400MB/sec and got 392MB/sec, so I consider the problem solved. After later adding a cache device, I got 458MB/sec read (cached, I believe).

1. At first, this was achieved simply by increasing the ZFS dataset's recordsize to 1M:

zfs set recordsize=1M pool2/test

I believe this change simply results in less disk activity (fewer, larger I/O operations), and thus more efficient large sequential reads and writes, which is exactly what I was asking for.
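As a rough illustration of the "less disk activity" point, the sketch below counts how many records ZFS has to fetch to read one large file sequentially at the default 128K recordsize versus 1M. It assumes a hypothetical 4 GiB file and treats each record as one I/O request; ZFS prefetch and I/O aggregation blur this in practice, so treat it as an order-of-magnitude argument only.

```python
# Rough model: count records (~ I/O requests) needed to read one file sequentially.
# Assumption: one record == one I/O request; ignores prefetch and aggregation.

KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def records_needed(file_size: int, recordsize: int) -> int:
    """Number of recordsize-sized blocks a file of file_size bytes occupies."""
    return -(-file_size // recordsize)  # ceiling division


if __name__ == "__main__":
    file_size = 4 * GIB  # hypothetical test file
    for label, rs in (("128K (default)", 128 * KIB), ("1M", 1 * MIB)):
        print(f"recordsize={label:>14}: {records_needed(file_size, rs):>6} records")
```

With 1M records the same file needs eight times fewer requests, which is one plausible reason the larger recordsize helped here.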

Results after the change:

- bonnie++ = 226MB/sec write, 392MB/sec read

- dd = 260MB/sec write, 392MB/sec read

- 2 processes in parallel = 227MB/sec write, 396MB/sec read

2. I did even better when I added a cache device (a 120GB SSD). Writes are a tad slower; I'm not sure why.

bonnie++ version 1.97, concurrency 1 (the per-character columns were empty in this run):

| Machine | Size | Block out K/sec | %CP | Rewrite K/sec | %CP | Block in K/sec | %CP | Seeks /sec | %CP |
|---|---|---|---|---|---|---|---|---|---|
| igor | 63G | 208325 | 48 | 129343 | 28 | 458513 | 35 | 326.8 | 16 |

The trick with the cache device was to set l2arc_noprefetch=0 in /etc/modprobe.d/zfs.conf. It allows ZFS to cache streaming/sequential data. Only do this if your cache device is faster than your array, like mine.
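A minimal sketch for checking the current value and building the persistent setting. It assumes the loaded ZFS module exposes its parameters under /sys/module/zfs/parameters/ (the usual sysfs location for kernel module parameters) and that /etc/modprobe.d/zfs.conf uses the standard `options <module> name=value ...` syntax. The helper names are hypothetical, and the script only prints the line rather than editing any file.

```python
from pathlib import Path
from typing import Optional

# Usual sysfs location for parameters of a loaded kernel module.
ZFS_PARAM_DIR = Path("/sys/module/zfs/parameters")


def read_zfs_param(name: str) -> Optional[str]:
    """Return the current value of a ZFS module parameter, or None if unavailable."""
    try:
        return (ZFS_PARAM_DIR / name).read_text().strip()
    except OSError:  # module not loaded, parameter missing, or no permission
        return None


def modprobe_options_line(params: dict) -> str:
    """Build a single 'options zfs ...' line for /etc/modprobe.d/zfs.conf."""
    settings = " ".join(f"{key}={value}" for key, value in params.items())
    return f"options zfs {settings}"


if __name__ == "__main__":
    print("current l2arc_noprefetch:", read_zfs_param("l2arc_noprefetch"))
    # Combined with the module options listed in the question.
    print(modprobe_options_line({
        "zvol_threads": 32,
        "zfs_arc_max": 17179869184,
        "l2arc_noprefetch": 0,
    }))
```

Keeping all ZFS options on one `options zfs` line avoids having to remember which file sets what; the new value takes effect when the module is next loaded.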

After benefiting from the recordsize change on my dataset, I thought a similar change might help with the poor zvol performance.

I came across several people mentioning that they obtained good performance using volblocksize=64k, so I tried it. No luck.

zfs create -b 64k -V 120G pool/volume

But then I read that ext4 (the filesystem I was testing with) supports RAID-related options such as stride and stripe-width, which I had never used before. So I used this site to calculate the settings needed: https://busybox.net/~aldot/mkfs_stride.html and formatted the zvol again.

mkfs.ext4 -b 4096 -E stride=16,stripe-width=32 /dev/zvol/pool/volume
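The arithmetic behind such calculators is the one described in mke2fs(8): stride is the RAID chunk size expressed in filesystem blocks, and stripe-width is stride times the number of data-bearing disks. The sketch below assumes the 64k volblocksize plays the role of the chunk size and that the two striped mirrors count as two data disks; with 4096-byte blocks that reproduces stride=16 and stripe-width=32. Whether this RAID model fits a zvol well is itself an assumption.

```python
from typing import Tuple


def ext4_raid_params(chunk_bytes: int, block_bytes: int, data_disks: int) -> Tuple[int, int]:
    """Return (stride, stripe_width) in filesystem blocks, as described in mke2fs(8)."""
    if chunk_bytes % block_bytes:
        raise ValueError("chunk size must be a multiple of the filesystem block size")
    stride = chunk_bytes // block_bytes   # filesystem blocks per chunk on one disk
    stripe_width = stride * data_disks    # filesystem blocks in one full stripe
    return stride, stripe_width


if __name__ == "__main__":
    # Assumption: volblocksize=64k acts as the chunk; 2 striped mirrors = 2 data disks.
    stride, width = ext4_raid_params(64 * 1024, 4096, 2)
    print(f"-E stride={stride},stripe-width={width}")
```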

I ran bonnie++ as a simple benchmark and the results were excellent. Unfortunately I don't have the results with me, but as I recall, writes were at least 5-6x faster. I'll update this answer if I benchmark again.

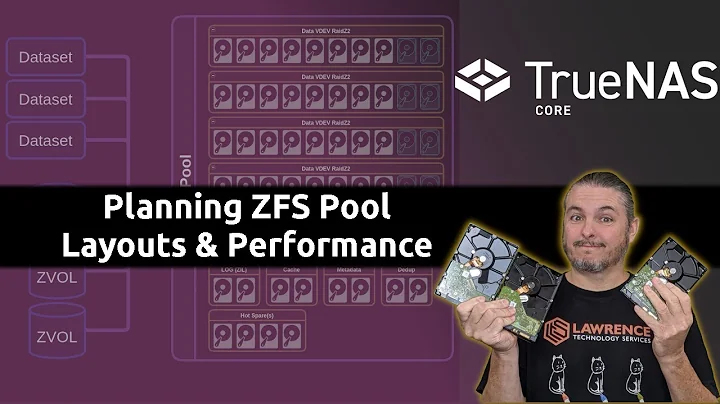


Ryan Babchishin


Updated on September 18, 2022

Comments

-

Ryan Babchishin almost 2 years

I have a related question about this problem, but it got too complicated and too big, so I decided to split the issue into NFS and local issues. I have also tried asking about this on the zfs-discuss mailing list without much success.

Related question: Slow copying between NFS/CIFS directories on same server

Outline: How I'm set up and what I'm expecting

- I have a ZFS pool with four 2TB WD Red disks, configured as two mirrors that are striped (RAID 10), running on Linux with zfsonlinux. There are no cache or log devices.

- Data is balanced across mirrors (important for ZFS)

- Reading raw with dd, each disk manages 147MB/sec with all four running in parallel, a combined throughput of 588MB/sec.

- I expect about 115MB/sec write, 138MB/sec read and 50MB/sec rewrite of sequential data from each disk, based on benchmarks of a similar 4TB RED disk. I expect no less than 100MB/sec read or write, since any disk can do that these days.

- I thought I'd see 100% IO utilization on all 4 disks when reading or writing sequential data under load, and that the disks would be putting out over 100MB/sec each at 100% utilization.

- I thought the pool would give me around 2x write, 2x rewrite, and 4x read performance over a single disk - am I wrong?

- NEW: I thought an ext4 filesystem on a zvol in the same pool would be about as fast as a ZFS dataset
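The expectations above follow a simple best-case model of striped mirrors: every write lands on both disks of a mirror, so writes scale with the number of mirrors, while reads can be served by either side of a mirror and so can, at best, scale with the total number of disks. A sketch of that arithmetic using the per-disk figures quoted above; it ignores CPU, bus, and ZFS overhead, and treating rewrite like writes is the same assumption the list makes.

```python
from dataclasses import dataclass


@dataclass
class DiskRates:
    """Sequential rates in MB/sec."""
    write: float
    read: float
    rewrite: float


def striped_mirror_ceiling(disk: DiskRates, mirrors: int, disks_per_mirror: int = 2) -> DiskRates:
    """Best-case pool rates for a stripe of mirrors (no CPU, bus, or ZFS overhead)."""
    total_disks = mirrors * disks_per_mirror
    return DiskRates(
        write=disk.write * mirrors,      # each mirror writes every block to all of its disks
        read=disk.read * total_disks,    # reads may be spread over every disk
        rewrite=disk.rewrite * mirrors,  # treated like writes, as in the expectations above
    )


if __name__ == "__main__":
    single = DiskRates(write=115, read=138, rewrite=50)  # 4TB RED benchmark figures
    print(striped_mirror_ceiling(single, mirrors=2))
```

For two mirrors this gives a ceiling of about 230MB/sec write, 100MB/sec rewrite and 552MB/sec read.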

What I actually get

The read performance of the pool is not nearly as high as I expected.

bonnie++ benchmark on pool from a few days ago

bonnie++ version 1.97, concurrency 1:

| Machine | Size | Per Chr out K/sec | %CP | Block out K/sec | %CP | Rewrite K/sec | %CP | Per Chr in K/sec | %CP | Block in K/sec | %CP | Seeks /sec | %CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| igor | 63G | 99 | 99 | 232132 | 47 | 118787 | 27 | 336 | 97 | 257072 | 22 | 92.7 | 6 |

bonnie++ on a single 4TB RED drive on its own in a zpool

bonnie++ version 1.97, concurrency 1:

| Machine | Size | Per Chr out K/sec | %CP | Block out K/sec | %CP | Rewrite K/sec | %CP | Per Chr in K/sec | %CP | Block in K/sec | %CP | Seeks /sec | %CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| igor | 63G | 101 | 99 | 115288 | 30 | 49781 | 14 | 326 | 97 | 138250 | 13 | 111.6 | 8 |

According to this, the write and rewrite speeds are appropriate given the results from a single 4TB RED drive (they are at least double). However, the read speed I was expecting would have been about 550MB/sec (4x the speed of the 4TB drive), and I would at least hope for around 400MB/sec. Instead I am seeing around 260MB/sec.
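To check the pool-versus-single-disk comparison directly, here is a small parser for the data row of a bonnie++ 1.97 text report (machine, size, then K/sec and %CP pairs). It assumes all six test groups were run, as in the two results above; rows with skipped per-character tests have blank fields and would need different handling. The field names are hypothetical labels, and the input rows are copied from the results above.

```python
from typing import Dict

# Column order of a full bonnie++ 1.97 result row after "machine size".
FIELDS = (
    "putc", "putc_cpu", "write", "write_cpu", "rewrite", "rewrite_cpu",
    "getc", "getc_cpu", "read", "read_cpu", "seeks", "seeks_cpu",
)


def parse_bonnie_row(row: str) -> Dict[str, float]:
    """Parse 'machine size v1 ... v12' into a dict of floats (assumes no blank fields)."""
    values = row.split()[2:]
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} values, got {len(values)}")
    return {name: float(v) for name, v in zip(FIELDS, values)}


def ratios(pool: Dict[str, float], disk: Dict[str, float]) -> Dict[str, float]:
    """Pool throughput divided by single-disk throughput for the block tests."""
    return {k: pool[k] / disk[k] for k in ("write", "rewrite", "read")}


if __name__ == "__main__":
    pool = parse_bonnie_row("igor 63G 99 99 232132 47 118787 27 336 97 257072 22 92.7 6")
    disk = parse_bonnie_row("igor 63G 101 99 115288 30 49781 14 326 97 138250 13 111.6 8")
    for test, r in ratios(pool, disk).items():
        print(f"{test:>7}: {r:.2f}x")
```

On these two rows, write comes out at about 2x and rewrite at about 2.4x, but block read at under 1.9x, well short of the 4x a striped mirror could in principle allow.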

bonnie++ on the pool, run just now while gathering the information below. The results are not quite the same as before, even though nothing has changed.

bonnie++ version 1.97, concurrency 1:

| Machine | Size | Per Chr out K/sec | %CP | Block out K/sec | %CP | Rewrite K/sec | %CP | Per Chr in K/sec | %CP | Block in K/sec | %CP | Seeks /sec | %CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| igor | 63G | 103 | 99 | 207518 | 43 | 108810 | 24 | 342 | 98 | 302350 | 26 | 256.4 | 18 |

zpool iostat during write. Seems OK to me.

| Pool / vdev / disk | Alloc | Free | Read ops | Write ops | Read bandwidth | Write bandwidth |
|---|---|---|---|---|---|---|
| pool2 | 1.23T | 2.39T | 0 | 1.89K | 1.60K | 238M |
| mirror | 631G | 1.20T | 0 | 979 | 1.60K | 120M |
| ata-WDC_WD20EFRX-68AX9N0_WD-WMC300004469 | - | - | 0 | 1007 | 1.60K | 124M |
| ata-WDC_WD20EFRX-68EUZN0_WD-WCC4MLK57MVX | - | - | 0 | 975 | 0 | 120M |
| mirror | 631G | 1.20T | 0 | 953 | 0 | 117M |
| ata-WDC_WD20EFRX-68AX9N0_WD-WCC1T0429536 | - | - | 0 | 1.01K | 0 | 128M |
| ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M0VYKFCE | - | - | 0 | 953 | 0 | 117M |

zpool iostat during rewrite. Seems OK to me, I think.

| Pool / vdev / disk | Alloc | Free | Read ops | Write ops | Read bandwidth | Write bandwidth |
|---|---|---|---|---|---|---|
| pool2 | 1.27T | 2.35T | 1015 | 923 | 125M | 101M |
| mirror | 651G | 1.18T | 505 | 465 | 62.2M | 51.8M |
| ata-WDC_WD20EFRX-68AX9N0_WD-WMC300004469 | - | - | 198 | 438 | 24.4M | 51.7M |
| ata-WDC_WD20EFRX-68EUZN0_WD-WCC4MLK57MVX | - | - | 306 | 384 | 37.8M | 45.1M |
| mirror | 651G | 1.18T | 510 | 457 | 63.2M | 49.6M |
| ata-WDC_WD20EFRX-68AX9N0_WD-WCC1T0429536 | - | - | 304 | 371 | 37.8M | 43.3M |
| ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M0VYKFCE | - | - | 206 | 423 | 25.5M | 49.6M |

This is where I wonder what's going on.

zpool iostat during read

| Pool / vdev / disk | Alloc | Free | Read ops | Write ops | Read bandwidth | Write bandwidth |
|---|---|---|---|---|---|---|
| pool2 | 1.27T | 2.35T | 2.68K | 32 | 339M | 141K |
| mirror | 651G | 1.18T | 1.34K | 20 | 169M | 90.0K |
| ata-WDC_WD20EFRX-68AX9N0_WD-WMC300004469 | - | - | 748 | 9 | 92.5M | 96.8K |
| ata-WDC_WD20EFRX-68EUZN0_WD-WCC4MLK57MVX | - | - | 623 | 10 | 76.8M | 96.8K |
| mirror | 651G | 1.18T | 1.34K | 11 | 170M | 50.8K |
| ata-WDC_WD20EFRX-68AX9N0_WD-WCC1T0429536 | - | - | 774 | 5 | 95.7M | 56.0K |
| ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M0VYKFCE | - | - | 599 | 6 | 74.0M | 56.0K |

iostat -x during the same read operation. Note how IO % is not at 100%.

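| Device | rrqm/s | wrqm/s | r/s | w/s | rkB/s | wkB/s | avgrq-sz | avgqu-sz | await | r_await | w_await | svctm | %util |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| sdb | 0.60 | 0.00 | 661.30 | 6.00 | 83652.80 | 49.20 | 250.87 | 2.32 | 3.47 | 3.46 | 4.87 | 1.20 | 79.76 |
| sdd | 0.80 | 0.00 | 735.40 | 5.30 | 93273.20 | 49.20 | 251.98 | 2.60 | 3.51 | 3.51 | 4.15 | 1.20 | 89.04 |
| sdf | 0.50 | 0.00 | 656.70 | 3.80 | 83196.80 | 31.20 | 252.02 | 2.23 | 3.38 | 3.36 | 6.63 | 1.17 | 77.12 |
| sda | 0.70 | 0.00 | 738.30 | 3.30 | 93572.00 | 31.20 | 252.44 | 2.45 | 3.33 | 3.31 | 7.03 | 1.14 | 84.24 |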
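One way to read the read-time zpool iostat and iostat -x figures together is to sum the per-disk read bandwidth and average the request size and %util. This sketch uses values copied from those two tables; it assumes avgrq-sz is reported in 512-byte sectors, as in sysstat's iostat -x of that era. The mapping between sdX names and disk serials is unknown, so the two sets are kept separate.

```python
from statistics import mean

# Per-disk read bandwidth from `zpool iostat` during the read test (as shown, in "M").
zpool_read_mb = {
    "WD-WMC300004469": 92.5,
    "WD-WCC4MLK57MVX": 76.8,
    "WD-WCC1T0429536": 95.7,
    "WD-WCC4M0VYKFCE": 74.0,
}

# Selected `iostat -x` columns per device: (rkB/s, avgrq-sz in sectors, %util).
iostat_x = {
    "sdb": (83652.80, 250.87, 79.76),
    "sdd": (93273.20, 251.98, 89.04),
    "sdf": (83196.80, 252.02, 77.12),
    "sda": (93572.00, 252.44, 84.24),
}

SECTOR_BYTES = 512  # unit of avgrq-sz (assumption stated above)


def summarize():
    total_zpool = sum(zpool_read_mb.values())
    total_rkb = sum(v[0] for v in iostat_x.values())
    avg_req_kib = mean(v[1] for v in iostat_x.values()) * SECTOR_BYTES / 1024
    avg_util = mean(v[2] for v in iostat_x.values())
    return total_zpool, total_rkb, avg_req_kib, avg_util


if __name__ == "__main__":
    total_zpool, total_rkb, avg_req_kib, avg_util = summarize()
    print(f"zpool iostat, sum of disk reads: {total_zpool:.1f}M")
    print(f"iostat -x, sum of rkB/s:         {total_rkb:.1f}")
    print(f"average request size:            {avg_req_kib:.1f} KiB")
    print(f"average %util:                   {avg_util:.1f}")
```

The average request comes out at roughly 126 KiB, close to the default 128K recordsize, with the disks averaging about 83% utilization. That may be relevant to the later improvement after switching to recordsize=1M.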

zpool and test dataset settings:

- atime is off

- compression is off

- ashift is 0 (autodetect - my understanding was that this was ok)

- zdb says disks are all ashift=12

- module options: options zfs zvol_threads=32 zfs_arc_max=17179869184

- sync = standard
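For reference, the two numeric settings above decode with simple arithmetic: ashift is the base-2 logarithm of the sector size ZFS allocates with, and zfs_arc_max is given in bytes. A tiny sketch of that conversion (the function names are illustrative only):

```python
def ashift_to_sector_bytes(ashift: int) -> int:
    """ZFS ashift is log2 of the allocation sector size."""
    return 1 << ashift


def bytes_to_gib(n: int) -> float:
    """Convert a byte count to GiB (2**30 bytes)."""
    return n / 2**30


if __name__ == "__main__":
    print("ashift=12 ->", ashift_to_sector_bytes(12), "bytes per sector")
    print("zfs_arc_max=17179869184 ->", bytes_to_gib(17179869184), "GiB")
```

So ashift=12 means 4096-byte sectors, and the ARC is capped at 16 GiB.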

Edit - Oct 30, 2015

I did some more testing:

- dataset, bonnie++ with recordsize=1M = 226MB/sec write, 392MB/sec read (much better)

- dataset, dd with recordsize=1M = 260MB/sec write, 392MB/sec read (much better)

- zvol with ext4, dd bs=1M = 128MB/sec write, 107MB/sec read (why so slow?)

- dataset, 2 processes in parallel = 227MB/sec write, 396MB/sec read

- dd with direct I/O makes no difference on either the dataset or the zvol

I am much happier with the performance at the increased recordsize. Almost every file on the pool is well over 1MB, so I'll leave it like that. The disks are still not reaching 100% utilization, which makes me wonder whether it could be much faster still. And now I'm wondering why the zvol performance is so lousy, as that is something I (lightly) use.

I am happy to provide any information requested in the comments or answers. There is also a lot of information posted in my other question: Slow copying between NFS/CIFS directories on same server

I am fully aware that I may just not understand something and that this may not be a problem at all. Thanks in advance.

To make it clear, the question is: why isn't the ZFS pool as fast as I expect, and is there anything else wrong?

-

Ryan Babchishin over 8 years

My expectations were almost identical to what I got: I expected 400MB/sec and I get 392MB/sec, which seems very reasonable. I also ran multiple dd and bonnie++ processes in parallel and saw no performance improvement at all. You have not explained why the zvol performance is so poor either.


shodanshok over 8 years

You get 392 MB/s only using Bonnie++ with a large recordsize (>= 1MB), and I explained why. EXT4 over ZVOL is a configuration that I never tested, so I left it for other people to comment on.


YwH almost 8 years

If I could give you an extra +1 for coming back almost a year later and writing such a detailed answer, I would. Thanks!

-

Paul over 2 years

Welcome to Server Fault! It looks like you may have a workable solution to the question, but as written it is difficult to discover. Please edit your question and reformat it using complete sentences, separate paragraphs, inline code, and code blocks, as appropriate.