Code execution in embedded systems

Solution 1

Being a microprocessor architect, I have had the opportunity to work at a very low level for software. Basically, low-level embedded is very different from general PC programming only at the hardware specific level.

Low-level embedded software can be broken down into the following:

- Reset vector - this is usually written in assembly. It is the very first thing that runs at start-up and can be considered hardware-specific code. It will usually perform simple functions like setting up the processor into a pre-defined steady state by configuring registers and such. Then it will jump to the startup code. The most basic reset vector merely jumps directly to the start-up code.

-

Startup code - this is the first software-specific code that runs. Its job is basically to set up the software environment so that C code can run on top. For example, C code assumes that there is a region of memory defined as stack and heap. These are usually software constructs instead of hardware. Therefore, this piece of start-up code will define the stack pointers and heap pointers and such. This is usually grouped under the 'c-runtime'. For C++ code, constructors are also called. At the end of the routine, it will execute

main(). edit: Variables that need to be initialised and also certain parts of memory that need clearing are done here. Basically, everything that is needed to move things into a 'known state'. -

Application code - this is your actual C application starting from the

main()function. As you can see, a lot of things are actually under the hood and happen even before your first main function is called. This code can usually be written as hardware-agnostic if there is a good hardware abstraction layer available. The application code will definitely make use of a lot of library functions. These libraries are usually statically linked in embedded systems. - Libraries - these are your standard C libraries that provide primitive C functions. There are also processor specific libraries that implement things like software floating-point support. There can also be hardware-specific libraries to access the I/O devices and such for stdin/stdout. A couple of common C libraries are Newlib and uClibc.

- Interrupt/Exception handler - these are routines that run at random times during normal code execution as a result of changes in hardware or processor states. These routines are also typically written in assembly as they should run with minimal software overhead in order to service the actual hardware called.

Hope this will provide a good start. Feel free to leave comments if you have other queries.

Solution 2

Generally, you're working at a lot lower level than general purpose computers.

Each CPU will have certain behaviour on power up, such as clearing all registers and setting the program counter to 0xf000 (everything here is non-specific, as is your question).

The trick is to ensure your code is at the right place.

The compilation process is usually similar to general purpose computers in that you translate C into machine code (object files). From there, you need to link that code with:

- your system start-up code, often in assembler.

- any runtime libraries (including required bits of the C RTL).

System start-up code generally just initialises the hardware and sets up the environment so that your C code can work. Runtime libraries in embedded systems often make the big bulky stuff (like floating point support or printf) optional so as to keep down code bloat.

The linker in embedded systems also usually is a lot simpler, outputting fixed-location code rather than relocatable binaries. You use it to ensure the start-up code goes at (e.g.) 0xf000.

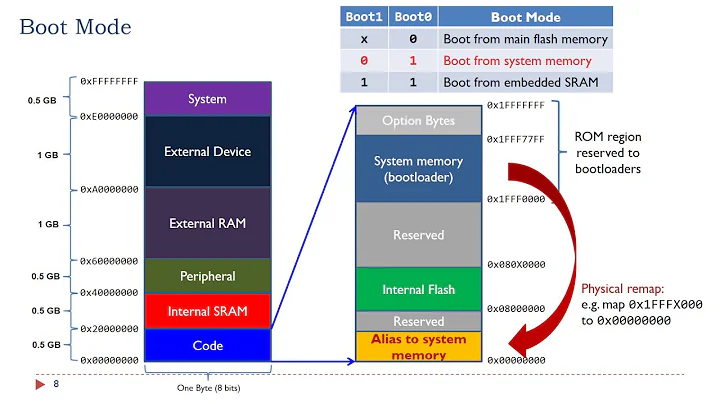

In embedded systems, you generally want the executable code to be there from the start so you may burn it into EPROM (or EEPROM or Flash or other device that maintains contents on power-down).

Of course, keep in mind my last foray was with 8051 and 68302 processors. It may be that 'embedded' systems nowadays are full blown Linux boxes with all sorts of wonderful hardware, in which case there'd be no real difference between general purpose and embedded.

But I doubt it. There's still a need for seriously low-spec hardware that needs custom operating systems and/or application code.

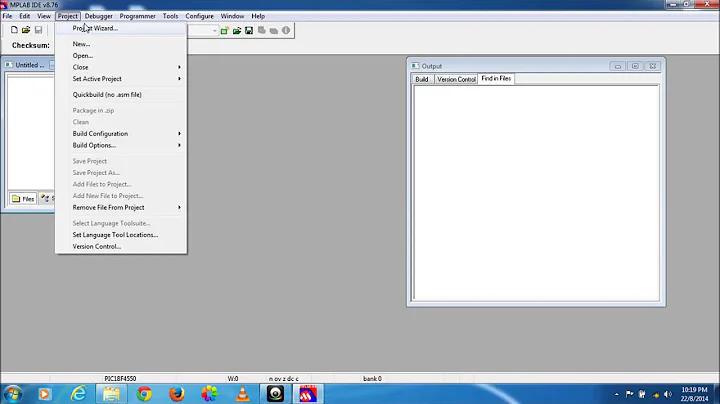

SPJ Embedded Technologies has an downloadable evaluation of their 8051 development environment that looks to be what you want. You can create programs up to 2K in size but it seems to go through the entire process (compiling linking, generation of HEX or BIN files for dumping on to target hardware, even a simulator which gives access to the on-chip stuff and external devices).

The non-evaluation product costs 200 Euro but, if all you want is a bit of a play, I'd just download the evaluation - other than the 2K limit, it's the full product.

Solution 3

I get the impression you're most interested in what sybreon calls "step 2." Lots can happen there, and it varies greatly by platform. Usually, this stuff is handled by some combination of bootloader, board-support package, C Runtime (CRT), and if you've got one, the OS.

Typically, after the reset vector, some sort of bootloader will execute from flash. This bootloader might just set up hardware and jump into your app's CRT, also in flash. In this case, the CRT would probably clear the .bss, copy the .data to RAM, etc. In other systems, the bootloader can scatter-load the app from a coded file, like an ELF, and the CRT just sets up other runtime stuff (heap, etc.). All of this happens before the CRT calls the app's main().

If your app is statically linked, linker directives will specify the addresses where .data/.bss and stack are initialized. These values are either linked into the CRT or coded into the ELF. In a dynamically-linked environment, app loading is usually handled by an OS which re-targets the ELF to run in whatever memory the OS designates.

Also, some targets run apps from flash, but others will copy the executable .text from flash to RAM. (This is usually a speed/footprint tradeoff, since RAM is faster/wider than flash on most targets.)

Solution 4

Ok, I'll give this a shot...

First off architectures. Von Neumann vs. Harvard. Harvard architecture has separate memory for code and data. Von Neumann does not. Harvard is used in many microcontrollers and it is what I'm familiar with.

So starting with your basic Harvard architecture you have program memory. When the microcontroller first starts up it executes the instructions at memory location zero. Usually this is a JUMP to address command where the main code starts.

Now, when I say instructions I mean opcodes. Opcodes are instructions encoded into binary data - usually 8 or 16 bits. In some architectures each opcode is hardcoded to mean specific things, in others each bit can be significant (ie, bit 1 means check carry, bit 2 means check zero flag, etc). So there are opcodes and then parameters for the opcodes. A JUMP instruction is an opcode and an 8 or 16 or 32 bit memory address which the code 'jumps' to. Ie, control is transferred to the instructions at that address. It accomplishes this by manipulating a special register that contains the address of the next instruction to be executed. So to JUMP to memory location 0x0050 it would replace the contents of that register with 0x0050. On the next clock cycle the processor would read the register and locate the memory address and execute the instruction there.

Executing instructions causes changes in the state of the machine. There is a general status register that records information about what the last command did (ie, if it's an addition then if there was a carry out required, there's a bit for that, etc). There is an 'accumulator' register where the result of the instruction is placed. The parameters for instructions can either go in one of several general purpose registers, or the accumulator, or in memory addresses (data OR program). Different opcodes can only be executed on data in certain places. For instance, you might be able to ADD data from two general purpose registers and have the result show up in the accumulator, but you can't take data from two data memory locations and have the result show up in another data memory location. You'd have to move the data you want to the general purpose registers, do the addition, then move the result to the memory location you want. That's why assembly is considered difficult. There are as many status registers as the architecture is designed for. More complex architectures may have more to allow more complex commands. Simpler ones may not.

There is also an area of memory known as the stack. It's just an area in memory for some microcontrollers (like the 8051). In others it can have special protections. There is a register called a stack pointer that records what memory location the 'top' of the stack is at. When you 'push' something on to the stack from the accumulator then the 'top' memory address is incremented and the data from the accumulator is put into the former address. When retrieving or popping data from the stack, the reverse is done and the stack pointers is decremented and the data from the stack is put into the accumulator.

Now I have also sort of glazed over how instructions are 'executed'. Well, this is when you get down to digital logic - VHDL type of stuff. Multiplexers and decoders and truth tables and such. That's the real nitty gritty of design - kind of. So if you want to 'move' the contents of a memory location into the accumulator you have to figure out addressing logic, clear the accumulator register, AND it with the data at the memory location, etc. It's daunting when placed all together but if you've done separate parts (like addressing, a half-adder, etc) in VHDL or in any digital logic fashion you might have an idea what's required.

How does this relate to C? Well, a compiler will take the C instructions and turn them into a series of opcodes that perform the requested operations. All of that is basically hex data - one's and zeros that get placed at some point in program memory. This is done with compiler/linker directives that tell what memory location is used for what code. It's written to the flash memory on the chip, and then when the chip restarts it goes to code memory location 0x0000 and JUMPs to the start address of the code in program memory, then starts plugging away at opcodes.

Solution 5

You can refer to the link https://automotivetechis.wordpress.com/.

The following sequence overviews the sequence of controller instruction executions:

1) Allocates primary memory for the program’s execution.

2) Copies address space from secondary to primary memory.

3) Copies the .text and .data sections from the executable into primary memory.

4) Copies program arguments (e.g., command line arguments) onto the stack.

5) Initializes registers: sets the esp (stack pointer) to point to top of stack, clears the rest.

6) Jumps to start routine, which: copies main()‘s arguments off of the stack, and jumps to main().

Related videos on Youtube

inquisitive

Updated on June 04, 2022Comments

-

inquisitive 5 months

inquisitive 5 monthsI am working in embedded system domain. I would like to know how a code gets executed from a microcontroller(uC need not be subjective, in general), starting from a C file. Also i would like to know stuffs like startup code, object file, etc. I couldnt find any online documentations regarding the above stuff. If possible, please provide links which explains those things from scratch. Thanks in advance for your help

-

Warren P over 12 yearsthe C files don't execute! :-) They are compiled to object files, and linked into a final executable image, which is either loaded to flash or RAM and run from there.

Warren P over 12 yearsthe C files don't execute! :-) They are compiled to object files, and linked into a final executable image, which is either loaded to flash or RAM and run from there.

-

-

inquisitive about 13 yearsThanks Cube. I now understand that an executable will be created in the host PC and will be put in non-volatile memory of the uC. I would like to know how does the actual execution starts in the actual target from thereafter. Any online documentation reg this or a case study is preferable.

inquisitive about 13 yearsThanks Cube. I now understand that an executable will be created in the host PC and will be put in non-volatile memory of the uC. I would like to know how does the actual execution starts in the actual target from thereafter. Any online documentation reg this or a case study is preferable. -

inquisitive about 13 yearsThanks for the quick reply, pax. If possible, can u try providing any good links available for explaining the above process (doenst matter what the actual uC is)

inquisitive about 13 yearsThanks for the quick reply, pax. If possible, can u try providing any good links available for explaining the above process (doenst matter what the actual uC is) -

inquisitive about 13 yearsBang on the target!! Thanks sybreon. Now what about the memory allocation? Assuming my uC has a flash memory, the static and global variables used by the program would be stored in RAM (.bss and Data section) by the system startup code, local variables in stack (again RAM) and code remains in flash (ROM). The actual execution occurs by executing each instruction from flash. Am i right?

inquisitive about 13 yearsBang on the target!! Thanks sybreon. Now what about the memory allocation? Assuming my uC has a flash memory, the static and global variables used by the program would be stored in RAM (.bss and Data section) by the system startup code, local variables in stack (again RAM) and code remains in flash (ROM). The actual execution occurs by executing each instruction from flash. Am i right? -

simon about 13 years@Guru_newbie: "The actual execution occurs by executing each instruction from flash." Some processors run the code directly from flash and some do not. I believe the 8051 will run the code from flash. Higher end (32bit's)embedded processors, like a PC will copy the application code into RAM and execute from RAM.

simon about 13 years@Guru_newbie: "The actual execution occurs by executing each instruction from flash." Some processors run the code directly from flash and some do not. I believe the 8051 will run the code from flash. Higher end (32bit's)embedded processors, like a PC will copy the application code into RAM and execute from RAM. -

simon about 13 years@sybreon: Step 2 will also setup static variables in RAM and copy over initialization data too.

simon about 13 years@sybreon: Step 2 will also setup static variables in RAM and copy over initialization data too. -

Craig McQueen about 13 yearsNot quite accurate about ELF/PE. Many linkers for embedded systems output ELF, it's just that the binary code within it is fixed-address, not position-independent. So then it's possible to generate a hex file (Motorola S-record or Intel Hex) or straight binary dump (assuming you know the starting address) to load into Flash.

Craig McQueen about 13 yearsNot quite accurate about ELF/PE. Many linkers for embedded systems output ELF, it's just that the binary code within it is fixed-address, not position-independent. So then it's possible to generate a hex file (Motorola S-record or Intel Hex) or straight binary dump (assuming you know the starting address) to load into Flash. -

Craig McQueen about 13 yearsJust note: ARM is among the more complex embedded systems. Start-up code is especially complex compared to smaller uCs e.g. AVR.

Craig McQueen about 13 yearsJust note: ARM is among the more complex embedded systems. Start-up code is especially complex compared to smaller uCs e.g. AVR. -

sybreon about 13 years@simon - yes, that's usually the case. Sometimes, a compiler flag can control things like zeroing .bss and what nots.

sybreon about 13 years@simon - yes, that's usually the case. Sometimes, a compiler flag can control things like zeroing .bss and what nots. -

sybreon about 13 yearsTo add on about memory allocation, that is usually controlled by the linker. If the .text section is configured as flash, that's where the code will come from.

sybreon about 13 yearsTo add on about memory allocation, that is usually controlled by the linker. If the .text section is configured as flash, that's where the code will come from. -

tkyle almost 13 yearsOn reset, the processor begins execution at the restart vector which may or may not be at location 0x0000. You have to look at the specific processors data sheet for the location of the restart vector.

tkyle almost 13 yearsOn reset, the processor begins execution at the restart vector which may or may not be at location 0x0000. You have to look at the specific processors data sheet for the location of the restart vector. -

Warren P over 12 yearsI think inquisitive may mean dynamic runtime memory allocation, like malloc. If so, then that stuff is in the "Libraries" section and is often called "the standard C libraries", of which Newlib and uCLibc are particular implementations of the standard C libraries, meant for microcontroller use.

Warren P over 12 yearsI think inquisitive may mean dynamic runtime memory allocation, like malloc. If so, then that stuff is in the "Libraries" section and is often called "the standard C libraries", of which Newlib and uCLibc are particular implementations of the standard C libraries, meant for microcontroller use. -

Ben Gartner over 12 yearsI like you answer sybreon, but (in item 2) I don't think C assumes there will be a heap. Many embedded c compilers will preallocate memory for static variables and allocate space for local variables from the stack. In that case, the heap would only come into play if you were using libraries with malloc(). Not sure about C++ constructors.

Ben Gartner over 12 yearsI like you answer sybreon, but (in item 2) I don't think C assumes there will be a heap. Many embedded c compilers will preallocate memory for static variables and allocate space for local variables from the stack. In that case, the heap would only come into play if you were using libraries with malloc(). Not sure about C++ constructors. -

nonsensickle over 9 years@Ben Gartner but malloc() is a part of the C standard library which is a part of C. Hence, if that library assumes there's a heap, so does C as a whole. If you disregard that library than you're talking about a "subset" of C.

nonsensickle over 9 years@Ben Gartner but malloc() is a part of the C standard library which is a part of C. Hence, if that library assumes there's a heap, so does C as a whole. If you disregard that library than you're talking about a "subset" of C. -

Nazim almost 3 years@WarrenP In application code which in processor memory there is no main() function it is represented as hex file, my question with that respect how processor know where to start the execution of the code.

Nazim almost 3 years@WarrenP In application code which in processor memory there is no main() function it is represented as hex file, my question with that respect how processor know where to start the execution of the code.