Comparison between timsort and quicksort

Solution 1

TimSort is a highly optimized mergesort, it is stable and faster than old mergesort.

when comparing with quicksort, it has two advantages:

- It is unbelievably fast for nearly sorted data sequence (including reverse sorted data);

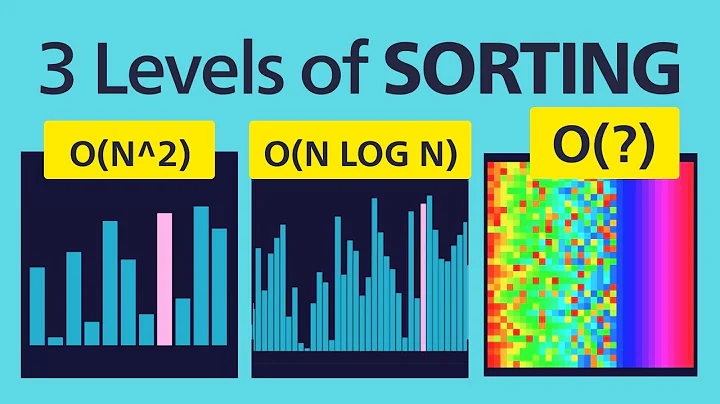

- The worst case is still O(N*LOG(N)).

To be honest, I don't think #1 is a advantage, but it did impress me.

Here are QuickSort's advantages

- QuickSort is very very simple, even a highly tuned implementation, we can write down its pseduo codes within 20 lines;

- QuickSort is fastest in most cases;

- The memory consumption is LOG(N).

Currently, Java 7 SDK implements timsort and a new quicksort variant: i.e. Dual Pivot QuickSort.

If you need stable sort, try timsort, otherwise start with quicksort.

Solution 2

More or less, it has to do with the fact that Timsort is a hybrid sorting algorithm. This means that while the two underlying sorts it uses (Mergesort and Insertion sort) are both worse than Quicksort for many kinds of data, Timsort only uses them when it is advantageous to do so.

On a slightly deeper level, as Patrick87 states, quicksort is a worst-case O(n2) algorithm. Choosing a good pivot isn't hard, but guaranteeing an O(n log n) quicksort comes at the cost of generally slower sorting on average.

For more detail on Timsort, see this answer, and the linked blog post. It basically assumes that most data is already partially sorted, and constructs "runs" of sorted data that allow for efficient merges using mergesort.

Solution 3

Generally speaking quicksort is best algorithm for primitive array. This is due to memory locality and cache.

JDK7 uses TimSort for Object array. Object array only holds object reference. The object itself is stored in Heap. To compare object, we need to read object from heap. This is like reading from one part of the heap for one object, then randomly reading object from another part of heap. There will be a lot of cache miss. I guess for this reason memory locality is not important any more. This is may be why JDK only uses TimSort for Object array instead if primitive array.

This is only my guess.

Solution 4

Here are benchmark numbers from my machine (i7-6700 CPU, 3.4GHz, Ubuntu 16.04, gcc 5.4.0, parameters: SIZE=100000 and RUNS=3):

$ ./demo

Running tests

stdlib qsort time: 12246.33 us per iteration

##quick sort time: 5822.00 us per iteration

merge sort time: 8244.33 us per iteration

...

##tim sort time: 7695.33 us per iteration

in-place merge sort time: 6788.00 us per iteration

sqrt sort time: 7289.33 us per iteration

...

grail sort dyn buffer sort time: 7856.67 us per iteration

The benchmark comes from Swenson's sort project in which he as implemented several sorting algorithms in C. Presumably, his implementations are good enough to be representative, but I haven't investigated them.

So you really can't tell. Benchmark numbers only stay relevant for at most two years and then you have to repeat them. Possibly, timsort beat qsort waaay back in 2011 when the question was asked, but the times have changed. Or qsort was always the fastest, but timsort beat it on non-random data. Or Swenson's code isn't so good and a better programmer would turn the tide in timsort's favor. Or perhaps I suck and didn't use the right CFLAGS when compiling the code. Or... You get the point.

Solution 5

Tim Sort is great if you need an order-preserving sort, or if you are sorting a complex array (comparing heap-based objects) rather than a primitive array. As mentioned by others, quicksort benefits significantly from the locality of data and processor caching for primitive arrays.

The fact that the worst case of quicksort is O(n^2) was raised. Fortunately, you can achieve O(n log n) time worst-case with quicksort. The quicksort worst-case occurs when the pivot point is either the smallest or largest value such as when the pivot is the first or last element of an already sorted array.

We can achieve O(n log n) worst-case quicksort by setting the pivot at the median value. Since finding the median value can be done in linear time O(n). Since O(n) + O(n log n) = O(n log n), that becomes the worst-case time complexity.

In practice, however, most implementations find that a random pivot is sufficient so do not search for the median value.

Related videos on Youtube

chenglou

Updated on January 20, 2022Comments

-

chenglou over 2 years

Why is it that I mostly hear about Quicksort being the fastest overall sorting algorithm when Timsort (according to wikipedia) seems to perform much better? Google didn't seem to turn up any kind of comparison.

-

Michael Petrotta over 12 yearsWith a little more thought and some references, this could be a good question.

-

Patrick87 over 12 yearsBecause people choose to ignore that quicksort is O(n^2) worst case.

-

flolo over 12 yearsOne possible answer would be: You speak to the wrong persons. But as an other answer already implied: qsort is much older, so its used in far more libraries - and you know: Never touch a running system. If the average running time (meaning: in the use cases of the people using it) is not much worse than the run time of a different algorithm (like timsort) the people are too lazy (or have better things to do) than to change something, that does the same in the same time. And in some applications (it seems e.g. python) timsort is already default.

-

Rob Neuhaus over 12 years@Patrick87: The truth is much different. You are ignoring the O(n) best case. It's not about worst cases that basically never happen, it's about best cases that actually do. timsort does a good job when it encounters a sorted range.

-

brc over 12 years@rrenaud worst cases "basically" never happen, but they do "actually" happen, sometimes. It is an important consideration, especially when hitting a worst case O(n<sup>2</sup>) means bad things happen.

-

Martin Thoma almost 10 years@RobNeuhaus: I think the worst case of (a simple implementation of) Quicksort actually happens quite often. Just sort a list that is (almost) sorted already.

-

Jeremy West over 6 years@MartinThoma Sorted lists only produce the worst-case result for a really bad implementation of Quicksort. Random or median-of-three partition selection implementations avoid worst-case for sorted or nearly sorted lists. But they still don't achieve the O(n) behavior of Timsort. Timsort's stability is also a key property that people overlook. It is very useful in a lot of situations, and in particular for multi-key sorts.

-

user3814357 almost 6 yearsI cannot use standard java sort in codeforces programming competitions, because java uses double pivot quicksort for integer and double arrays, and so there exist arrays which require O(n^2) time to run. And some test data is often composed with these arrays, so program takes too long time and fails. So I have to switch to my own mergeSort instead. It cannot happen with timsort algorithm.

-

garbagecollector over 4 yearsThere's more to just "fast". Quicksort is still better in practice because it is in-place, requires O(1) space, and only swap values. Its O(n^2) can be easily prevented. It is also old and has been researched and reimplemented many many times. For sorting just numbers where you do not need stability, definitely Quicksort. Timsort is just a hybrid of natural mergesort + insertion sort. Scientifically and academically, it's nothing new. A lot of the complexities in Timsort is just impelementation details.

-

-

Jeremy West over 6 years#1 can be a huge advantage. If you maintain a list of data that you must re-sort frequently (because items are inserted, appended, or modified), having an algorithm that allows you to very cheaply re-order that data is extremely useful. Whether it is useful depends on the situation, for sure, but it is huge in some cases and also feels obvious: nearly sorted lists shouldn't be hard to sort.

-

Eric Duminil over 6 years@JeremyWest: If you know that data is already sorted, you should use binary search to insert new values. Don't sort it again and again.

Eric Duminil over 6 years@JeremyWest: If you know that data is already sorted, you should use binary search to insert new values. Don't sort it again and again. -

Jeremy West over 6 years@EricDuminil Binary search is fast, but insertions in the middle of an array aren't. There are many applications where the simplest (and often most efficient) solution is to re-sort a mostly sorted list when you need it to be sorted, but to let it get unsorted otherwise. Or cases where you read in data that is mostly sorted, and then need to sort it. I'm not suggesting this is always the best solution, but that sometimes it is. And it is one reason why sorts that perform well on mostly sorted lists are preferable, particularly for standard libraries.

-

Eric Duminil over 6 years@JeremyWest: Thanks for the insightful answer.

Eric Duminil over 6 years@JeremyWest: Thanks for the insightful answer. -

Qwertie over 5 yearsTimsort's performance is dependent on the kind of data being sorted: it is slowest on random data and fastest on sorted data. Strange, then, that this answer and the project readme say nothing about the nature of the data. So I looked at the code and noticed that the data is random. (Quicksort by contrast has consistent speed in most cases, except in specially-crafted adversarial cases and in cases where the quicksort algorithm is implemented badly - e.g. always taking the first or last element as the pivot is a big no-no).

-

Qwertie over 5 yearsI would add that the purpose of Timsort was never to beat Quicksort, but rather to be a fast stable sort (Quicksort is not stable) that minimizes comparisons (which are slow in Python). It should, however, beat Quicksort when the data is sorted, or almost sorted. (see also)

-

Björn Lindqvist over 5 yearsDo you know of any benchmarks in which Timsort is the fastest? I'm opposed to all claims of performance that does not come with benchmark numbers. :) Good point on the Swenson benchmark using randomized data -- I didn't check it very closely.

-

ShadowRanger about 5 years@Qwertie: TimSort's handling of already (partially) sorted data was also important given that Python does not have an obvious way to merge sorted data, and the non-obvious way (

ShadowRanger about 5 years@Qwertie: TimSort's handling of already (partially) sorted data was also important given that Python does not have an obvious way to merge sorted data, and the non-obvious way (heapq.merge) isn't all that efficient (large parts of it are implemented in Python, not C). So the common way to merge already sorted data, or add unsorted data to sorted data is to just do:sortedlist += newdata; sortedlist.sort()(or one-lined,sortedlist = sorted(sortedlist + newdata)). This would be really inefficient if TimSort didn't use the existing ordering. -

Alan Evangelista over 4 yearsI assume that is one of the reasons why Timsort is Python's standard sorting algorithm, as everything is an object in Python.

Alan Evangelista over 4 yearsI assume that is one of the reasons why Timsort is Python's standard sorting algorithm, as everything is an object in Python. -

Groo over 4 years@Jeremy: if it's mostly sorted, timsort is the algorithm of choice. But binary search + insertion is still O(N + logN), which should beat O(N logN).

-

Jeremy West over 4 years@Groo Yes, if you are only inserting a single item into an already sorted list. That's not the only application I mentioned. Obviously, your mileage will vary depending on the problem you are solving.

-

johan over 2 yearsIf doing insertions for n items, binary search + insertion is O(N^2 + N logN) = O(N^2) while insert all and sort once is O(N + N logN) = O(N logN).