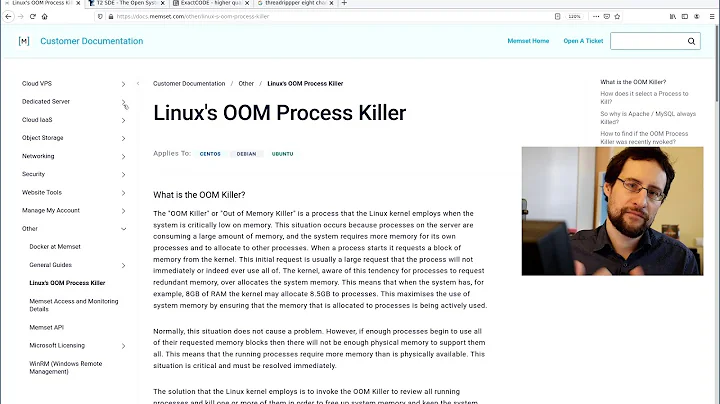

Disabling OOM killer on Ubuntu 14.04

Solution 1

This is an XY problem; that is, you do have a problem (X), whether you know it or not, and you believe you can fix it by solving a different problem, which is problem Y.

Why is it that you want to disable the oomkiller? That's the X problem you need to solve. What you should do is investigate and detail problem X. Give the details of that, and it is possible we can help you.

Going after problem Y and disabling the oomkiller is generally a very bad idea, which would surely create much bigger problems than it might solve.

Invoking the oomkiller is a desperate, last-ditch resort when things have gone awry memory-wise, and doom is imminent. Your system has six passengers, one hour to go, and only five man-hours of oxygen supply. You might think that killing one passenger at random (not at random - the one with the highest metabolism, actually) is a terrible thing to do, but the alternative is having all six passengers dead by suffocation ten long minutes before docking.

Suppose you do that

You succeed in disabling the oomkiller. The system restarts and goes its merry way. Now two things can happen:

- the oomkiller never requires invocation. You did it all for naught.

- the oomkiller does require invocation.

But why was the oomkiller required? That was because some process needed memory, and the system discovered that it could not serve that memory.

There is a process which we call "memoryhog", which would have been killed by oomkiller, and now this is not what happens. What does happen?

Option 1: OOM means death

You issued these commands at boot

sysctl vm.panic_on_oom=1

sysctl kernel.panic=5

or added this to /etc/sysctl.conf and rebooted

vm.panic_on_oom=1

kernel.panic=5

and as soon as the system is hogged, it will panic and reboot after 5 seconds.
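Before relying on this option, it may help to spell out what the two values mean. The sketch below is read-only and assumes the meanings given in the kernel's sysctl documentation (vm.panic_on_oom: 0 runs the OOM killer, 1 panics on a system-wide OOM, 2 always panics; kernel.panic: seconds to wait before rebooting, 0 for no reboot, negative for an immediate reboot). The helper name describe_oom_policy is hypothetical.

```shell
#!/bin/sh
# describe_oom_policy PANIC_ON_OOM KERNEL_PANIC
# Hypothetical helper: prints a one-line summary of what the two values mean.
describe_oom_policy() {
    case "$1" in
        0) oom="OOM killer runs" ;;
        1) oom="kernel panics on system-wide OOM" ;;
        2) oom="kernel always panics on OOM" ;;
        *) oom="unknown vm.panic_on_oom value" ;;
    esac
    if [ "$2" -gt 0 ]; then
        reboot="reboot after $2 s"
    elif [ "$2" -lt 0 ]; then
        reboot="immediate reboot"
    else
        reboot="no automatic reboot"
    fi
    echo "$oom; $reboot"
}

# The values used in Option 1.
describe_oom_policy 1 5

# On a live system (read-only, no root needed):
# describe_oom_policy "$(cat /proc/sys/vm/panic_on_oom)" "$(cat /proc/sys/kernel/panic)"
```

Note that with kernel.panic left at 0 a panic simply halts the machine, so the two settings only make sense together.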

Option 2: kill someone else if possible

You classify at boot some processes as "expendable" and some others as "not expendable". For example Apache could be expendable, not so MySQL. So when Apache encounters some absurdly large request (on LAMP systems, usually ensuing from the "easy fix" of setting PHP memory allocation to ludicrously large values instead of changing some memory-bound or leaky approach), the Apache child is killed even if MySQL is a larger hog. This way, the customer will simply see a blank page, hit RELOAD, get another... and finally desist.

You do so by recording MySQL's PID at startup and adjusting its OOM rating:

# the server process is "mysqld"; "mysql" is the command-line client

echo -15 > /proc/$( pidof -s mysqld )/oom_adj

In this situation however I'd also adjust Apache's child conf.

Note that if this option solves your problem, it means you have a problem in the other processes: for example, a too-large PHP process, which in turn derives from a sub-optimal algorithm or data selection stage. Follow the chain until you arrive at the one problem that really needs solving.

Probably, installing more RAM would have had the same effect.

In this option, if MySQL itself grows too much (e.g. you botched the innodb_buffer_pool_size value), then MySQL will be killed anyway.

Option 3: never ever kill the $MYPRECIOUSSS process

As above, but now the adjustment is set to -17.
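On kernels from 2.6.36 onwards, oom_adj is a deprecated interface and /proc/PID/oom_score_adj (range -1000 to 1000) is the preferred one. The sketch below shows how the -15 and -17 values used here line up on the newer scale, assuming the scaling the kernel applies when a legacy oom_adj value is written. The helper name oom_adj_to_score_adj is hypothetical.

```shell
#!/bin/sh
# oom_adj_to_score_adj OOM_ADJ
# Hypothetical helper: converts a legacy oom_adj value (-17..15) to the
# oom_score_adj scale (-1000..1000), mirroring the kernel's own scaling.
oom_adj_to_score_adj() {
    if [ "$1" -lt -17 ] || [ "$1" -gt 15 ]; then
        echo "oom_adj must be between -17 and 15" >&2
        return 1
    fi
    if [ "$1" -eq 15 ]; then
        echo 1000                      # the maximum maps straight to the maximum
    else
        echo $(( $1 * 1000 / 17 ))     # integer division, truncating toward zero
    fi
}

oom_adj_to_score_adj -15   # the "expendable last" value from Option 2
oom_adj_to_score_adj -17   # the "never kill" value from Option 3

# Applying the result (needs root; assumes the server process is named mysqld):
# for pid in $(pidof mysqld); do
#     echo -1000 > "/proc/$pid/oom_score_adj"
# done
```

Either interface has to be written again whenever the process restarts, since the setting belongs to the PID rather than to the program.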

I'm now talking about MySQL, but most programs will behave in the same way. I know for sure that both PostgreSQL and Oracle do (and they're entitled to. You abuse RDBMS's at your peril).

This option is dangerous in that oomkiller will kill someone else, possibly repeatedly, but above all, possibly because of $MYPRECIOUSSS itself.

So, a runaway process will evict everyone else from memory until the system is unusable and you can't even log in to check or do anything. Soon after, MySQL will corrupt its own status information and crash horrifically, and you'll be left with a long fsck session, followed by painstaking table repair, followed by careful transaction reconstruction.

I can tell you from my own experience that management will be extremely unhappy with the one who set up what is, in effect, a ticking time bomb. There'll be a lot of blame flying around (MySQL was misconfigured, Apache was leaky, OOMKiller was crazy, it was a hacker...), so "the one" might be "the many". However, for this third option, I cannot stress heavily enough the need for full, adequate, constantly updated and monitored backups, with recovery tests.

Option 4: "turning off" OOMkiller

This option might look like a joke. Like the old Windows XP trick, "How to avoid blue screens forever!", which actually really worked... by setting a little-known variable that turned them magenta.

But you can make it so oomkiller is never invoked, by editing the /etc/sysctl.conf file and adding these lines:

vm.overcommit_memory = 2

vm.overcommit_kbytes = SIZE_OF_PHYSICAL_MEMORY_IN_K

Then also run the commands sysctl vm.overcommit_memory=2 and sysctl vm.overcommit_kbytes=... to avoid the need to reboot. You want overcommit_kbytes to be somewhat less than the available physical memory; the kernel adds the size of swap on top of this value to obtain the total commit limit.
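One way to pick a value "somewhat less" than physical memory is to take a fixed percentage of MemTotal from /proc/meminfo. This is a minimal sketch: the helper name suggest_overcommit_kbytes and the 90% margin are assumptions chosen for illustration, not values from the answer.

```shell
#!/bin/sh
# suggest_overcommit_kbytes PERCENT
# Hypothetical helper: reads /proc/meminfo-style text on stdin and prints
# PERCENT% of MemTotal, in kB (rounded down).
suggest_overcommit_kbytes() {
    total_kb=$(awk '$1 == "MemTotal:" { print $2 }')
    [ -n "$total_kb" ] || return 1
    echo $(( $total_kb * $1 / 100 ))
}

# Self-contained demonstration with a made-up 8 GiB machine.
printf 'MemTotal:        8388608 kB\nMemFree:          123456 kB\n' |
    suggest_overcommit_kbytes 90

# On a live system (the sysctl calls need root):
# kb=$(suggest_overcommit_kbytes 90 < /proc/meminfo)
# sysctl vm.overcommit_memory=2
# sysctl "vm.overcommit_kbytes=$kb"
```

The right margin depends on how much memory the kernel and page cache need on the machine in question, so the percentage is best treated as a starting point to tune.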

What happens now? If the system has memory enough, nothing happens - just as if you did nothing.

When the system is low on memory, new processes will be unable to start or will crash upon starting. They need memory, and the memory is not there. So they will not be "oomkilled", but instead "killed by out of memory error". The results are absolutely identical, and that's why some might think this a joke.
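With vm.overcommit_memory=2, allocations start being refused once Committed_AS reaches CommitLimit; both figures are reported in /proc/meminfo. The sketch below computes the remaining headroom so it can be watched before processes start failing. The helper name commit_headroom_kb is hypothetical.

```shell
#!/bin/sh
# commit_headroom_kb
# Hypothetical helper: reads /proc/meminfo-style text on stdin and prints
# CommitLimit minus Committed_AS, in kB.
commit_headroom_kb() {
    awk '$1 == "CommitLimit:"  { limit = $2 }
         $1 == "Committed_AS:" { used = $2 }
         END { print limit - used }'
}

# Demonstration with made-up numbers.
printf 'CommitLimit:     4194304 kB\nCommitted_AS:    1048576 kB\n' |
    commit_headroom_kb

# On a live system (read-only, no root needed):
# commit_headroom_kb < /proc/meminfo
```

A small or negative result means new allocations are about to fail, or already are.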

My own recommendation

Investigate why the system runs low on memory. The cause can be inherently unavoidable, remediable, or avoidable entirely. If it is inherently unavoidable, bump the problem higher and require more memory to be installed.

If it is caused by a runaway process, due to a bug or a faulty input or a condition that can be recognized before criticality, it is remediable. You need to have the process reengineered, and to build a case for that.

It could also happen that the problem is entirely avoidable by splitting services between different servers, or by tuning more carefully one or more processes, or by (again) reengineering the faulty process(es).

In some scenarios, the most efficient solution might be "throw some money at it": if you have trouble with a server with 256 GB of RAM and you know you'll need at most 280 GB for the foreseeable future, an upgrade to 320 GB might cost as little as 3,000 USD up front, all inclusive, and be done over a weekend. Compared to a 150,000 USD/day downtime cost, or four programming man-months at 14,000 USD each, that is a real sweet deal, and it will likely improve performance even in everyday conditions.

An alternative is to set up a really large swap area and activate it on demand. Supposing we have 32 GB free in /var/tmp (and that it is not memory-backed):

dd if=/dev/zero of=/var/tmp/oomswap bs=1M count=32768

# or: fallocate -l 32g /var/tmp/oomswap

chmod 600 /var/tmp/oomswap

mkswap /var/tmp/oomswap

swapon /var/tmp/oomswap

This will create a 32 GB swap file that gives the various processes some breathing room. You may also want to inspect and possibly increase swappiness:
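To confirm that the swap file is actually in use, its entry can be looked up in /proc/swaps, whose columns are Filename, Type, Size, Used and Priority (sizes in kB). A minimal sketch; the helper name swap_size_kb is hypothetical and the sample line is made up.

```shell
#!/bin/sh
# swap_size_kb PATH
# Hypothetical helper: reads /proc/swaps-style text on stdin and prints the
# size in kB of the swap area at PATH; exits non-zero if it is not active.
swap_size_kb() {
    awk -v path="$1" '
        NR > 1 && $1 == path { print $3; found = 1 }
        END { exit !found }'
}

# Demonstration with a made-up /proc/swaps listing.
printf 'Filename\tType\tSize\tUsed\tPriority\n/var/tmp/oomswap\tfile\t33554428\t0\t-2\n' |
    swap_size_kb /var/tmp/oomswap

# On a live system (read-only, no root needed):
# swap_size_kb /var/tmp/oomswap < /proc/swaps || echo "swap file is not active"
```

The file stays active only until the next reboot unless it is also listed in /etc/fstab, which fits the "on demand" use described here.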

cat /proc/sys/vm/swappiness

Ubuntu 14.04 has a default swappiness of 60 which is okay for most users.

sysctl vm.swappiness=70

Solution 2

echo 2 > /proc/sys/vm/overcommit_memory

echo 0 > /proc/sys/vm/overcommit_kbytes

See https://www.kernel.org/doc/Documentation/sysctl/vm.txt for descriptions of the options.

The standard caveat applies: when the system is out of memory, and your program asks for more, it will be refused; and this is a point where many programs crash or wedge.
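It is worth working out what limit these two writes produce. In mode 2 the kernel derives the commit limit as swap plus either overcommit_kbytes or, when that is 0, overcommit_ratio percent of RAM; per the kernel documentation, setting one of the two resets the other to 0. The sketch below reproduces that arithmetic under those assumptions, ignoring huge pages. The helper name commit_limit_kb and all the figures are made up for illustration.

```shell
#!/bin/sh
# commit_limit_kb RAM_KB SWAP_KB OVERCOMMIT_KBYTES OVERCOMMIT_RATIO
# Hypothetical helper: approximates CommitLimit under vm.overcommit_memory=2
# (huge pages are ignored in this sketch).
commit_limit_kb() {
    if [ "$3" -gt 0 ]; then
        echo $(( $2 + $3 ))              # swap + overcommit_kbytes
    else
        echo $(( $2 + $1 * $4 / 100 ))   # swap + ratio% of RAM
    fi
}

# 12 GiB RAM, 4 GiB swap, overcommit_kbytes=0 and ratio reset to 0:
# the limit is the size of swap alone.
commit_limit_kb 12582912 4194304 0 0

# Same machine with overcommit_kbytes set to roughly 90% of RAM.
commit_limit_kb 12582912 4194304 11324620 0
```

With the first configuration, processes are refused memory once about 4 GiB has been committed, even though most of the RAM is still free, so a non-zero overcommit_kbytes close to the RAM size is usually the more useful setting.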


Comments

Hassan Baig over 1 year: How does one disable the OOM killer on Ubuntu 14.04? There's a description here, but it doesn't seem to have worked for the OP. Would be great to get an answer with clear steps.

Hassan Baig over 7 years: I get all your points (and thanks for the detailed response), but see this from 5:28 onwards: youtube.com/watch?v=3yhfW1BDOSQ So it'll at least be good to have an outline of the steps required to turn OOM killer off. Thanks for that.

LSerni over 7 years: You can prevent OOMkiller from running (mostly) by following @aecolley's instructions. That way, over-allocating memory is impossible. Any program attempting it will be oomki... terminated. The steps to do so a bit better are already in my answer.

Mihai Albert over 2 years: @LSerni I realize it's 5 years later, but wouldn't option 4 essentially result in potentially leaving huge portions of RAM off-limits? This is depicted here screencast.com/t/Ex0gJ8KNiX8, where a simple app is used to allocate (and touch) memory until exhaustion. Since overcommit is disabled (vm.overcommit_memory=2) and vm.overcommit_kbytes=0, this will mean the commit limit is set to the size of whatever swap is configured. Thus the system configured as such will never get to use any RAM above 4 GB (until 12 GB), and it will never be in any real danger of a low memory situation.

Mihai Albert over 2 years: @LSerni maybe I'm missing something here, but in the movie I've pasted - given the 2 variables set - the commit limit for the whole system is essentially set to 4 GB (size of swap). Once the app that allocates memory starts, it goes just fine up until it tries to allocate close to 4 GB (Committed_AS is already some small value), as it hits the overall commit limit. This leaves - as htop shows - large quantities of memory still unused.

LSerni over 2 years: @MihaiAlbert yes, sorry. I hadn't re-read the answer and didn't understand your objection, which is correct. Modified answer accordingly.

Jeff Learman about 2 years: Option 5: (1) set a memory limit for a particular process, (2) handle malloc failure gracefully in that process, and (3) disable OOM killer for that process. Result: success! The process limits itself to what it can handle. This used to be SOP for system programming. You can say "OK Boomer" now.

LSerni about 2 years: @JeffLearman That's how I still prefer my programs to behave. Assume nothing: maybe the memory will be there, maybe it won't. Free it as soon as you no longer need it. And yes, I am a boomer too (back in the day, I wrote my own memory canary routines). However, I do understand the economics... sometimes, unsatisfyingly, sloppiness is the most money-efficient approach.