Does a RAID controller with an NV cache improve the performance or integrity of an SSD array?

Solution 1

If used with SSDs without a powerloss-protected write cache, the RAID controller's NV cache is extremely important for good performance.

However, as you are using SSDs with powerloss-protected write caches, performance should not vary much between the various options. On the other hand, there are other factors to consider:

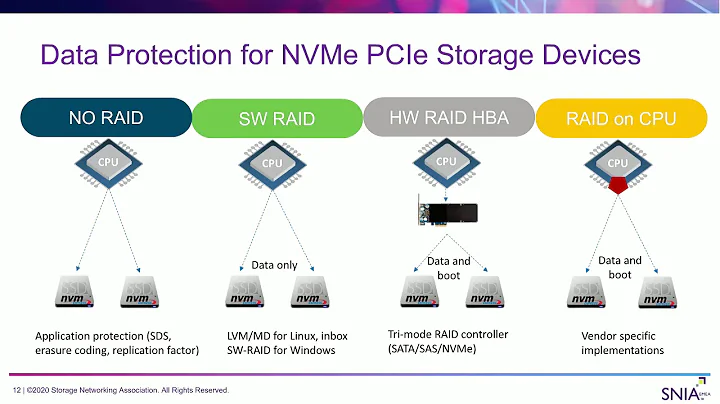

- with hardware RAID it is often simpler to identify and replace a failed disk: the controller clearly marks the affected drive (e.g. with an amber light), and replacing it is generally as simple as pulling the old drive and inserting the new one. With a software RAID solution, you need to enter the appropriate commands to identify and replace the failed drive;

- hardware RAID presents a single volume to the BIOS for booting, while software RAID exposes the individual component devices;

- with the right controller (e.g. H730 or H740) and disks (SAS 4Kn) you can very easily enable the extended data integrity field (T10/T13);

- hardware RAID runs an opaque binary blob over which you have no control;

- Linux software RAID is much more flexible than any hardware RAID I have ever used.
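To make the software RAID side of the first point a little more concrete: Linux md marks a failed array member with `(F)` in `/proc/mdstat`. The following is a minimal Python sketch, assuming md arrays and the usual `/proc/mdstat` line layout (the helper `failed_members` is hypothetical, not part of any tool), that lists failed members so you know which drive to swap out, for example with `mdadm --manage /dev/md0 --remove ...` followed by `--add ...`.

```python
import re

# A member token looks like "sdb1[1]" optionally followed by flags such as
# "(F)" (failed), "(S)" (spare) or "(W)" (write-mostly).
_MEMBER = re.compile(r"([\w.-]+)\[\d+\]((?:\([A-Z]\))*)")


def failed_members(mdstat_text: str) -> dict:
    """Return {array: [failed member devices]} parsed from /proc/mdstat text."""
    failed = {}
    for line in mdstat_text.splitlines():
        # Array lines look like: "md0 : active raid1 sdb1[1](F) sda1[0]"
        if not line.startswith("md") or " : " not in line:
            continue
        array, rest = line.split(" : ", 1)
        bad = [dev for dev, flags in _MEMBER.findall(rest) if "(F)" in flags]
        if bad:
            failed[array.strip()] = bad
    return failed


# Example input; on a real host you would pass open("/proc/mdstat").read().
sample = """Personalities : [raid1]
md0 : active raid1 sdb1[1](F) sda1[0]
      976630464 blocks super 1.2 [2/1] [U_]

unused devices: <none>
"""
print(failed_members(sample))  # {'md0': ['sdb1']}
```

This only covers the "identify" half; the controller's amber light does the same job for hardware RAID without any tooling.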

That said, on such a setup I strongly advise you to consider ZFS on Linux: the powerloss-protected write caches mean you can go without a dedicated ZIL device, and ZFS's additional features (compression, checksumming, etc.) can be very useful.

To directly reply to your questions:

- Are any of these configurations at risk for data loss or corruption on power loss? No: as every cache is protected, you should not corrupt any data on power loss.

- Which configuration should I expect to have the best write performance? The H740P configured in write-back cache mode should give you the absolute maximum write performance. However, in some circumstances, depending on your specific workload, write-through can be faster. Dell (and LSI) controllers even have some SSD-specific features (e.g. CTIO and FastPath) which build on write-through and can increase your random write performance.

- Are there any other benefits to an NV cache that I haven't considered? Yes: a controller with a proper NV cache will never let the two legs of a RAID1/10 mirror hold different data. In some circumstances, Linux software RAID is prone to (harmless) RAID1 mismatches. ZFS does not suffer from that problem.
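On the Linux software RAID side, md reports those mismatches through sysfs: after a scrub (started by writing `check` to `/sys/block/<array>/md/sync_action`), the mismatch count is exposed in `/sys/block/<array>/md/mismatch_cnt`. A minimal Python sketch for reading it, assuming that standard layout; the helper name `mismatch_count` and its `sysfs_root` parameter are hypothetical, the latter only there so the function can be pointed at a test directory.

```python
from pathlib import Path


def mismatch_count(array: str = "md0", sysfs_root: str = "/sys/block") -> int:
    """Read the md mismatch counter for `array` (e.g. "md0").

    The kernel updates this value during "check" and "repair" passes;
    a non-zero value on RAID1 means the mirror legs disagreed somewhere.
    """
    path = Path(sysfs_root) / array / "md" / "mismatch_cnt"
    return int(path.read_text().strip())
```

On a host with an md array, `mismatch_count("md0")` returns the counter after the last scrub. As noted above, on RAID1 a non-zero value is often harmless, so treat it as a prompt to investigate rather than proof of corruption.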

Solution 2

Q1: Are any of these configurations at risk for data loss or corruption on power loss?

A1: You shouldn't have any issues, unless you configure the cache in write-back mode without NV RAM.

Q2: Which configuration should I expect to have the best write performance?

A2: The one with the biggest cache, obviously! ...and no parity RAID, but RAID10, of course.
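Why parity RAID tends to lose on small writes can be sketched with toy XOR parity in Python. This assumes single parity (RAID-5 style) on an arbitrary 3+1 stripe with two-byte blocks; real arrays work on much larger chunks. A small write must first read the old data and old parity (read-modify-write), and a crash between the data write and the parity write leaves a stripe whose parity no longer matches (the "write hole"). RAID10 simply writes the block to both mirror legs.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks (single-parity RAID)."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*blocks))


# A toy 3+1 stripe: three data blocks plus their parity block.
data = [b"\x01\x02", b"\x10\x20", b"\xaa\xbb"]
parity = xor_blocks(*data)

# Small write to data[1] via read-modify-write: read the old data and the old
# parity, then new_parity = old_parity ^ old_data ^ new_data.
new_block = b"\xff\x00"
new_parity = xor_blocks(parity, data[1], new_block)

# Write hole: the data block reached the disk but power failed before the
# parity update, so the stored (old) parity no longer matches the stripe.
on_disk = [data[0], new_block, data[2]]
stale_parity_ok = xor_blocks(*on_disk) == parity      # stripe inconsistent
fixed_parity_ok = xor_blocks(*on_disk) == new_parity  # consistent once parity lands
print(stale_parity_ok, fixed_parity_ok)  # False True
```

The extra reads and the second write per small update are the performance cost; the window between the two writes is the integrity risk that an NV cache (or a full-stripe, copy-on-write design) closes.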

Q3: Are there any other benefits to an NV cache that I haven't considered?

A3: Write coalescing, spoofing, etc. But these are really minor.


Comments

sourcenouveau over 1 year: I am planning to purchase a server (Dell PowerEdge R740) with SSDs in RAID 10, and my priorities are write performance and data integrity. It will be running Linux. The SSDs have write caches with power loss protection.

It seems like these are my RAID options:

- PERC H330 (no cache), software RAID (pass-through)

- PERC H330 (no cache), hardware RAID (write-through)

- PERC H730P (2 GB NV cache), hardware RAID (write-through)

- PERC H740P (8 GB NV cache), hardware RAID (write-through)

My questions:

- Are any of these configurations at risk for data loss or corruption on power loss?

- Which configuration should I expect to have the best write performance?

- Are there any other benefits to an NV cache that I haven't considered?


sourcenouveau over 6 years: I wasn't sure whether the cache would help because I read that write reordering doesn't impact SSDs much, and because the SSDs have their own write caches.


ThoriumBR over 6 years: @M.Dudley yes, they have caches, but there is no such thing as too much cache. Cache is good: the more cache, the better.


BaronSamedi1958 over 6 years: @M. Dudley: the RAID controller has gigabytes of cache sitting behind a comparatively fast, low-latency PCIe x4-x8 bus, while SSD caches are in the megabytes and sit behind 6-12 Gbps SATA/SAS links.


BaronSamedi1958 over 6 years: ZFS is really more than a RAID: it has variable-width parity stripes, so there's no read-modify-write or "write hole". Also, instead of the page cache it uses the more advanced ARC. There's one thing it lacks: NV RAM... which can be solved with NV DIMM integration :)


the-wabbit over 6 years: @BaronSamedi1958 it does not matter as much as it might seem. "Gigabytes of cache" is spread over the entire logical volume you've defined, so broken down to a single disk it might come down to merely a few megabytes per disk. Also, even the dated Samsung 850 Pro came with 1 GB of DRAM cache, about half the entire cache of the H730P. Last but not least: the SAS3 interface delivers 12GB/s over a single link, outperforming the x8 PCIe 3 lanes the RAID controllers are typically plugged into.


shodanshok over 6 years: @the-wabbit while I generally agree with you, your bandwidth calculation is wrong: SAS3 has 12 Gb/s, or roughly 1.2 GB/s per direction after 8b/10b encoding. A PCIe 3.0 x8 link has 64 Gb/s, or roughly 7.9 GB/s, per direction (about 16 GB/s counting both directions). Moreover, the SAS controller itself generally hangs off an upstream PCIe link, just like the RAID controller.

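The bit/byte arithmetic behind this exchange can be checked with a short Python sketch. It assumes SAS-3 (12 Gb/s per lane, 8b/10b encoding) and PCIe 3.0 (8 GT/s per lane, 128b/130b encoding), the PCIe generation the PERC H730P/H740P use, and it ignores protocol overhead beyond line encoding, so the results are upper bounds. The helper name `link_gbytes_per_s` is just for illustration.

```python
def link_gbytes_per_s(gtransfers_per_s: float, lanes: int,
                      payload_bits: int, line_bits: int) -> float:
    """Usable one-direction bandwidth in GB/s (10**9 bytes) after line encoding."""
    gbits = gtransfers_per_s * lanes * payload_bits / line_bits
    return gbits / 8  # 8 bits per byte


sas3 = link_gbytes_per_s(12.0, lanes=1, payload_bits=8, line_bits=10)        # SAS-3, 8b/10b
pcie3_x8 = link_gbytes_per_s(8.0, lanes=8, payload_bits=128, line_bits=130)  # PCIe 3.0 x8, 128b/130b

print(f"SAS-3 single link: {sas3:.2f} GB/s")      # 1.20 GB/s
print(f"PCIe 3.0 x8:       {pcie3_x8:.2f} GB/s")  # 7.88 GB/s
print(f"ratio: {pcie3_x8 / sas3:.1f}x")           # 6.6x
```

Under these assumptions the x8 slot carries several times what a single SAS-3 link can, which is the point being made about gigabits versus gigabytes.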

BaronSamedi1958 over 6 years: @the-wabbit You're confusing gigabits and gigabytes. You also ignore the fact that the per-volume cache is closely tied to the file system, while the per-disk cache is not: it's a write buffer. The same is true of the SSD's cache, which is actually a log-structured page-assembly write buffer. And you ignore latency at every stage.
