Does Google cache robots.txt?

Solution 1

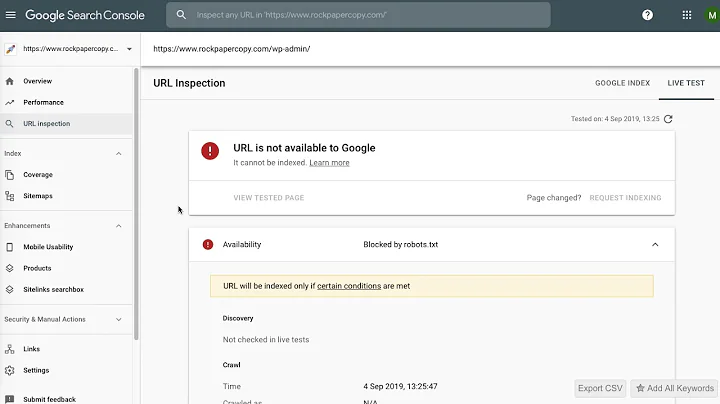

I would strongly recommend registering your site with Google Search Console (previously Google Webmaster Tools). There is a crawler access section under site configuration that will tell you when your robots.txt was last downloaded. The tool also provides a lot of detail as to how the crawlers are seeing your site, what is blocked or not working, and where you are appearing in queries on Google.

From what I can tell, Google downloads robots.txt often. Google Search Console will also let you remove specific URLs from the index, so you can remove the ones you are now blocking.

Solution 2

Persevere. I switched from robots.txt to a meta noindex,nofollow tag. For the meta tag to work, the addresses blocked in robots.txt first had to be unblocked, since Googlebot cannot read a tag on a page it is not allowed to fetch.

I did this brutally by deleting the robots.txt altogether (and declaring it in Google's Webmaster Tools).

The robots.txt removal process, as seen in Webmaster Tools (number of pages blocked), took 10 weeks to complete, and the bulk of the pages were only removed by Google during the last 2 weeks.
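
To make the point about unblocking concrete, here is a minimal sketch that checks whether a page's noindex tag can actually be seen by a crawler. The helper names (`RobotsMetaParser`, `noindex_is_visible`) and the example.com URL are made up for illustration, and Python's `urllib.robotparser` only approximates Google's matching rules (it does not handle wildcards the way Googlebot does), so treat the result as a rough check.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def noindex_is_visible(robots_txt, url, html, user_agent="Googlebot"):
    """Return True only if the page carries noindex AND robots.txt allows fetching it."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    meta = RobotsMetaParser()
    meta.feed(html)
    return "noindex" in meta.directives and rules.can_fetch(user_agent, url)


# Hypothetical example data.
page = '<html><head><meta name="robots" content="noindex,nofollow"></head></html>'
blocking_rules = "User-agent: *\nDisallow: /private/\n"
url = "https://example.com/private/page.html"

print(noindex_is_visible(blocking_rules, url, page))  # still blocked, tag is never read
print(noindex_is_visible("", url, page))              # no robots.txt rules, tag can be read
```

As long as the first call returns False, the noindex tag is effectively invisible to the crawler, which is why the Disallow rule had to go first.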

Solution 3

Yes, Google will obviously cache robots.txt to an extent - it won't download it every time it wants to look at a page. How long it caches it for, I don't know. However, if you have a long Expires header set, Googlebot may wait much longer before checking the file again.

Another problem could be a misconfigured file. In the Webmaster Tools that danivovich suggests, there is a robots.txt checker. It will tell you which types of pages are blocked and which are fine.
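
If you want a quick local check before relying on the online checker, something like the following sketch can list which URLs a given robots.txt blocks. It uses Python's standard `urllib.robotparser`; the function name `blocked_urls`, the rules, and the URLs are made up for illustration. Note that this parser does simple prefix matching and may not agree with Googlebot in every case (for example with wildcard patterns), so the Webmaster Tools checker remains the authority.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the subset of urls that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical rules and URLs.
robots_txt = """\
User-agent: Googlebot
Disallow: /search/
Disallow: /tmp/
"""

urls = [
    "https://example.com/",
    "https://example.com/search/results",
    "https://example.com/tmp/a.html",
]

for url in blocked_urls(robots_txt, urls):
    print("blocked:", url)
```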

Solution 4

Google's documentation states that they will usually cache robots.txt for a day, but might use it for longer if they get errors when trying to refresh it:

A robots.txt request is generally cached for up to one day, but may be cached longer in situations where refreshing the cached version is not possible (for example, due to timeouts or 5xx errors). The cached response may be shared by different crawlers. Google may increase or decrease the cache lifetime based on max-age Cache-Control HTTP headers.
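
As a rough illustration of the max-age part, the sketch below reads a max-age value out of a Cache-Control header and falls back to one day when none is present. The function name and the one-day default are assumptions taken from the quote above; how Google actually weighs the header is not spelled out there, so this only shows how the value your server sends can be inspected.

```python
import re

# "Up to one day", per the documentation quoted above.
DEFAULT_LIFETIME_SECONDS = 24 * 60 * 60


def robots_cache_lifetime(cache_control, default=DEFAULT_LIFETIME_SECONDS):
    """Return max-age (in seconds) from a Cache-Control header value, else the default."""
    if cache_control:
        match = re.search(r'(?:^|,)\s*max-age\s*=\s*"?(\d+)', cache_control, re.IGNORECASE)
        if match:
            return int(match.group(1))
    return default


print(robots_cache_lifetime("public, max-age=3600"))
print(robots_cache_lifetime(None))
```

If your server sends a very large max-age for robots.txt, lowering it is a cheap thing to try when changes seem slow to take effect.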

Solution 5

Yes. They say they typically update it once a day, but some have suggested they may also check it after a certain number of page hits (100?) so busier sites are checked more often.

See https://webmasters.stackexchange.com/a/29946 and the video that @DisgruntledGoat shared above http://youtube.com/watch?v=I2giR-WKUfY.
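
To picture what "once a day or about every 100 requests" would mean in practice, here is a toy refresh policy. It is only an illustration of the heuristic described above, not Google's actual implementation; the class name, method names, and default thresholds are all assumptions.

```python
import time


class RobotsRefreshPolicy:
    """Toy policy: refetch robots.txt when the copy is too old or after N page requests."""

    def __init__(self, max_age_seconds=86400, max_requests=100, clock=time.monotonic):
        self.max_age_seconds = max_age_seconds
        self.max_requests = max_requests
        self._clock = clock
        self._fetched_at = None          # None means robots.txt was never fetched
        self._requests_since_fetch = 0

    def should_refetch(self):
        if self._fetched_at is None:
            return True
        too_old = self._clock() - self._fetched_at >= self.max_age_seconds
        too_many = self._requests_since_fetch >= self.max_requests
        return too_old or too_many

    def record_fetch(self):
        """Call after robots.txt has been downloaded."""
        self._fetched_at = self._clock()
        self._requests_since_fetch = 0

    def record_page_request(self):
        """Call for every page fetched from the site."""
        self._requests_since_fetch += 1


policy = RobotsRefreshPolicy()
policy.record_fetch()
for _ in range(100):
    policy.record_page_request()
print(policy.should_refetch())  # the request threshold has been reached
```

Under a policy like this, a busy site hits the request threshold long before the day is up, which matches the suggestion that busier sites are checked more often.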


Quog

Updated on September 17, 2022

Comments

-

Quog over 1 year

I added a robots.txt file to one of my sites a week ago, which should have prevented Googlebot from attempting to fetch certain URLs. However, this weekend I can see Googlebot loading those exact URLs.

Does Google cache robots.txt and, if so, should it?

-

Quog almost 14 years

I checked Webmaster Tools: the robots.txt file is valid and it was most recently fetched 17 hours before the most recent visit to those pages by Googlebot. I suspect it is a question of propagation through Google's network - eventually all Googlebot servers will catch up with the robots.txt instructions.

-

Quog almost 14 years

See comment on this answer webmasters.stackexchange.com/questions/2272/…

-

DisgruntledGoat almost 14 years

@Quog: See this recent video: youtube.com/watch?v=I2giR-WKUfY Matt Cutts suggests that robots.txt is downloaded either once a day or about every 100 requests.

-

MrWhite over 8 years

This does not answer the question.

-

KOZASHI SOUZA over 8 years

Why is it not an answer?

-

MrWhite over 8 years

Because the question is specifically about robots.txt, caching and crawling of URLs. One of the results of this might be that URLs aren't indexed, but that is not the question. (Google's URL removal tool is also only a "temporary" fix; there are other steps you need to take to make it permanent.)

-

Corporate Geek about 5 years

I tend to agree with you. We made a mistake and wrongly updated the robots.txt file. Google cached it, and it is still using it four weeks after we corrected the mistake and replaced it with a new robots.txt. I even manually submitted a refresh request in Google Webmaster Tools and... nothing. This is really bad as it resulted in lost traffic and rankings. :(


Corporate Geek about 5 years

The Google bot is not using the robots.txt as often as updates are reported in the Search Console. It has been four weeks since I made an update, and the Google bot still uses a bad robots.txt - and it destroys our traffic and rankings.
