Enhanced REP MOVSB for memcpy

Solution 1

This is a topic pretty near to my heart and recent investigations, so I'll look at it from a few angles: history, some technical notes (mostly academic), test results on my box, and finally an attempt to answer your actual question of when and where rep movsb might make sense.

Partly, this is a call to share results - if you can run Tinymembench and share the results along with details of your CPU and RAM configuration it would be great. Especially if you have a 4-channel setup, an Ivy Bridge box, a server box, etc.

History and Official Advice

The performance history of the fast string copy instructions has been a bit of a stair-step affair - i.e., periods of stagnant performance alternating with big upgrades that brought them into line or even faster than competing approaches. For example, there was a jump in performance in Nehalem (mostly targeting startup overheads) and again in Ivy Bridge (most targeting total throughput for large copies). You can find decade-old insight on the difficulties of implementing the rep movs instructions from an Intel engineer in this thread.

For example, in guides preceding the introduction of Ivy Bridge, the typical advice is to avoid them or use them very carefully1.

The current (well, June 2016) guide has a variety of confusing and somewhat inconsistent advice, such as2:

The specific variant of the implementation is chosen at execution time based on data layout, alignment and the counter (ECX) value. For example, MOVSB/STOSB with the REP prefix should be used with counter value less than or equal to three for best performance.

So for copies of 3 or less bytes? You don't need a rep prefix for that in the first place, since with a claimed startup latency of ~9 cycles you are almost certainly better off with a simple DWORD or QWORD mov with a bit of bit-twiddling to mask off the unused bytes (or perhaps with 2 explicit byte, word movs if you know the size is exactly three).

They go on to say:

String MOVE/STORE instructions have multiple data granularities. For efficient data movement, larger data granularities are preferable. This means better efficiency can be achieved by decomposing an arbitrary counter value into a number of double words plus single byte moves with a count value less than or equal to 3.

This certainly seems wrong on current hardware with ERMSB where rep movsb is at least as fast, or faster, than the movd or movq variants for large copies.

In general, that section (3.7.5) of the current guide contains a mix of reasonable and badly obsolete advice. This is common throughput the Intel manuals, since they are updated in an incremental fashion for each architecture (and purport to cover nearly two decades worth of architectures even in the current manual), and old sections are often not updated to replace or make conditional advice that doesn't apply to the current architecture.

They then go on to cover ERMSB explicitly in section 3.7.6.

I won't go over the remaining advice exhaustively, but I'll summarize the good parts in the "why use it" below.

Other important claims from the guide are that on Haswell, rep movsb has been enhanced to use 256-bit operations internally.

Technical Considerations

This is just a quick summary of the underlying advantages and disadvantages that the rep instructions have from an implementation standpoint.

Advantages for rep movs

-

When a

repmovs instruction is issued, the CPU knows that an entire block of a known size is to be transferred. This can help it optimize the operation in a way that it cannot with discrete instructions, for example:- Avoiding the RFO request when it knows the entire cache line will be overwritten.

- Issuing prefetch requests immediately and exactly. Hardware prefetching does a good job at detecting

memcpy-like patterns, but it still takes a couple of reads to kick in and will "over-prefetch" many cache lines beyond the end of the copied region.rep movsbknows exactly the region size and can prefetch exactly.

Apparently, there is no guarantee of ordering among the stores within3 a single

rep movswhich can help simplify coherency traffic and simply other aspects of the block move, versus simplemovinstructions which have to obey rather strict memory ordering4.In principle, the

rep movsinstruction could take advantage of various architectural tricks that aren't exposed in the ISA. For example, architectures may have wider internal data paths that the ISA exposes5 andrep movscould use that internally.

Disadvantages

rep movsbmust implement a specific semantic which may be stronger than the underlying software requirement. In particular,memcpyforbids overlapping regions, and so may ignore that possibility, butrep movsballows them and must produce the expected result. On current implementations mostly affects to startup overhead, but probably not to large-block throughput. Similarly,rep movsbmust support byte-granular copies even if you are actually using it to copy large blocks which are a multiple of some large power of 2.The software may have information about alignment, copy size and possible aliasing that cannot be communicated to the hardware if using

rep movsb. Compilers can often determine the alignment of memory blocks6 and so can avoid much of the startup work thatrep movsmust do on every invocation.

Test Results

Here are test results for many different copy methods from tinymembench on my i7-6700HQ at 2.6 GHz (too bad I have the identical CPU so we aren't getting a new data point...):

C copy backwards : 8284.8 MB/s (0.3%)

C copy backwards (32 byte blocks) : 8273.9 MB/s (0.4%)

C copy backwards (64 byte blocks) : 8321.9 MB/s (0.8%)

C copy : 8863.1 MB/s (0.3%)

C copy prefetched (32 bytes step) : 8900.8 MB/s (0.3%)

C copy prefetched (64 bytes step) : 8817.5 MB/s (0.5%)

C 2-pass copy : 6492.3 MB/s (0.3%)

C 2-pass copy prefetched (32 bytes step) : 6516.0 MB/s (2.4%)

C 2-pass copy prefetched (64 bytes step) : 6520.5 MB/s (1.2%)

---

standard memcpy : 12169.8 MB/s (3.4%)

standard memset : 23479.9 MB/s (4.2%)

---

MOVSB copy : 10197.7 MB/s (1.6%)

MOVSD copy : 10177.6 MB/s (1.6%)

SSE2 copy : 8973.3 MB/s (2.5%)

SSE2 nontemporal copy : 12924.0 MB/s (1.7%)

SSE2 copy prefetched (32 bytes step) : 9014.2 MB/s (2.7%)

SSE2 copy prefetched (64 bytes step) : 8964.5 MB/s (2.3%)

SSE2 nontemporal copy prefetched (32 bytes step) : 11777.2 MB/s (5.6%)

SSE2 nontemporal copy prefetched (64 bytes step) : 11826.8 MB/s (3.2%)

SSE2 2-pass copy : 7529.5 MB/s (1.8%)

SSE2 2-pass copy prefetched (32 bytes step) : 7122.5 MB/s (1.0%)

SSE2 2-pass copy prefetched (64 bytes step) : 7214.9 MB/s (1.4%)

SSE2 2-pass nontemporal copy : 4987.0 MB/s

Some key takeaways:

- The

rep movsmethods are faster than all the other methods which aren't "non-temporal"7, and considerably faster than the "C" approaches which copy 8 bytes at a time. - The "non-temporal" methods are faster, by up to about 26% than the

rep movsones - but that's a much smaller delta than the one you reported (26 GB/s vs 15 GB/s = ~73%). - If you are not using non-temporal stores, using 8-byte copies from C is pretty much just as good as 128-bit wide SSE load/stores. That's because a good copy loop can generate enough memory pressure to saturate the bandwidth (e.g., 2.6 GHz * 1 store/cycle * 8 bytes = 26 GB/s for stores).

- There are no explicit 256-bit algorithms in tinymembench (except probably the "standard"

memcpy) but it probably doesn't matter due to the above note. - The increased throughput of the non-temporal store approaches over the temporal ones is about 1.45x, which is very close to the 1.5x you would expect if NT eliminates 1 out of 3 transfers (i.e., 1 read, 1 write for NT vs 2 reads, 1 write). The

rep movsapproaches lie in the middle. - The combination of fairly low memory latency and modest 2-channel bandwidth means this particular chip happens to be able to saturate its memory bandwidth from a single-thread, which changes the behavior dramatically.

-

rep movsdseems to use the same magic asrep movsbon this chip. That's interesting because ERMSB only explicitly targetsmovsband earlier tests on earlier archs with ERMSB showmovsbperforming much faster thanmovsd. This is mostly academic sincemovsbis more general thanmovsdanyway.

Haswell

Looking at the Haswell results kindly provided by iwillnotexist in the comments, we see the same general trends (most relevant results extracted):

C copy : 6777.8 MB/s (0.4%)

standard memcpy : 10487.3 MB/s (0.5%)

MOVSB copy : 9393.9 MB/s (0.2%)

MOVSD copy : 9155.0 MB/s (1.6%)

SSE2 copy : 6780.5 MB/s (0.4%)

SSE2 nontemporal copy : 10688.2 MB/s (0.3%)

The rep movsb approach is still slower than the non-temporal memcpy, but only by about 14% here (compared to ~26% in the Skylake test). The advantage of the NT techniques above their temporal cousins is now ~57%, even a bit more than the theoretical benefit of the bandwidth reduction.

When should you use rep movs?

Finally a stab at your actual question: when or why should you use it? It draw on the above and introduces a few new ideas. Unfortunately there is no simple answer: you'll have to trade off various factors, including some which you probably can't even know exactly, such as future developments.

A note that the alternative to rep movsb may be the optimized libc memcpy (including copies inlined by the compiler), or it may be a hand-rolled memcpy version. Some of the benefits below apply only in comparison to one or the other of these alternatives (e.g., "simplicity" helps against a hand-rolled version, but not against built-in memcpy), but some apply to both.

Restrictions on available instructions

In some environments there is a restriction on certain instructions or using certain registers. For example, in the Linux kernel, use of SSE/AVX or FP registers is generally disallowed. Therefore most of the optimized memcpy variants cannot be used as they rely on SSE or AVX registers, and a plain 64-bit mov-based copy is used on x86. For these platforms, using rep movsb allows most of the performance of an optimized memcpy without breaking the restriction on SIMD code.

A more general example might be code that has to target many generations of hardware, and which doesn't use hardware-specific dispatching (e.g., using cpuid). Here you might be forced to use only older instruction sets, which rules out any AVX, etc. rep movsb might be a good approach here since it allows "hidden" access to wider loads and stores without using new instructions. If you target pre-ERMSB hardware you'd have to see if rep movsb performance is acceptable there, though...

Future Proofing

A nice aspect of rep movsb is that it can, in theory take advantage of architectural improvement on future architectures, without source changes, that explicit moves cannot. For example, when 256-bit data paths were introduced, rep movsb was able to take advantage of them (as claimed by Intel) without any changes needed to the software. Software using 128-bit moves (which was optimal prior to Haswell) would have to be modified and recompiled.

So it is both a software maintenance benefit (no need to change source) and a benefit for existing binaries (no need to deploy new binaries to take advantage of the improvement).

How important this is depends on your maintenance model (e.g., how often new binaries are deployed in practice) and a very difficult to make judgement of how fast these instructions are likely to be in the future. At least Intel is kind of guiding uses in this direction though, by committing to at least reasonable performance in the future (15.3.3.6):

REP MOVSB and REP STOSB will continue to perform reasonably well on future processors.

Overlapping with subsequent work

This benefit won't show up in a plain memcpy benchmark of course, which by definition doesn't have subsequent work to overlap, so the magnitude of the benefit would have to be carefully measured in a real-world scenario. Taking maximum advantage might require re-organization of the code surrounding the memcpy.

This benefit is pointed out by Intel in their optimization manual (section 11.16.3.4) and in their words:

When the count is known to be at least a thousand byte or more, using enhanced REP MOVSB/STOSB can provide another advantage to amortize the cost of the non-consuming code. The heuristic can be understood using a value of Cnt = 4096 and memset() as example:

• A 256-bit SIMD implementation of memset() will need to issue/execute retire 128 instances of 32- byte store operation with VMOVDQA, before the non-consuming instruction sequences can make their way to retirement.

• An instance of enhanced REP STOSB with ECX= 4096 is decoded as a long micro-op flow provided by hardware, but retires as one instruction. There are many store_data operation that must complete before the result of memset() can be consumed. Because the completion of store data operation is de-coupled from program-order retirement, a substantial part of the non-consuming code stream can process through the issue/execute and retirement, essentially cost-free if the non-consuming sequence does not compete for store buffer resources.

So Intel is saying that after all some uops the code after rep movsb has issued, but while lots of stores are still in flight and the rep movsb as a whole hasn't retired yet, uops from following instructions can make more progress through the out-of-order machinery than they could if that code came after a copy loop.

The uops from an explicit load and store loop all have to actually retire separately in program order. That has to happen to make room in the ROB for following uops.

There doesn't seem to be much detailed information about how very long microcoded instruction like rep movsb work, exactly. We don't know exactly how micro-code branches request a different stream of uops from the microcode sequencer, or how the uops retire. If the individual uops don't have to retire separately, perhaps the whole instruction only takes up one slot in the ROB?

When the front-end that feeds the OoO machinery sees a rep movsb instruction in the uop cache, it activates the Microcode Sequencer ROM (MS-ROM) to send microcode uops into the queue that feeds the issue/rename stage. It's probably not possible for any other uops to mix in with that and issue/execute8 while rep movsb is still issuing, but subsequent instructions can be fetched/decoded and issue right after the last rep movsb uop does, while some of the copy hasn't executed yet.

This is only useful if at least some of your subsequent code doesn't depend on the result of the memcpy (which isn't unusual).

Now, the size of this benefit is limited: at most you can execute N instructions (uops actually) beyond the slow rep movsb instruction, at which point you'll stall, where N is the ROB size. With current ROB sizes of ~200 (192 on Haswell, 224 on Skylake), that's a maximum benefit of ~200 cycles of free work for subsequent code with an IPC of 1. In 200 cycles you can copy somewhere around 800 bytes at 10 GB/s, so for copies of that size you may get free work close to the cost of the copy (in a way making the copy free).

As copy sizes get much larger, however, the relative importance of this diminishes rapidly (e.g., if you are copying 80 KB instead, the free work is only 1% of the copy cost). Still, it is quite interesting for modest-sized copies.

Copy loops don't totally block subsequent instructions from executing, either. Intel does not go into detail on the size of the benefit, or on what kind of copies or surrounding code there is most benefit. (Hot or cold destination or source, high ILP or low ILP high-latency code after).

Code Size

The executed code size (a few bytes) is microscopic compared to a typical optimized memcpy routine. If performance is at all limited by i-cache (including uop cache) misses, the reduced code size might be of benefit.

Again, we can bound the magnitude of this benefit based on the size of the copy. I won't actually work it out numerically, but the intuition is that reducing the dynamic code size by B bytes can save at most C * B cache-misses, for some constant C. Every call to memcpy incurs the cache miss cost (or benefit) once, but the advantage of higher throughput scales with the number of bytes copied. So for large transfers, higher throughput will dominate the cache effects.

Again, this is not something that will show up in a plain benchmark, where the entire loop will no doubt fit in the uop cache. You'll need a real-world, in-place test to evaluate this effect.

Architecture Specific Optimization

You reported that on your hardware, rep movsb was considerably slower than the platform memcpy. However, even here there are reports of the opposite result on earlier hardware (like Ivy Bridge).

That's entirely plausible, since it seems that the string move operations get love periodically - but not every generation, so it may well be faster or at least tied (at which point it may win based on other advantages) on the architectures where it has been brought up to date, only to fall behind in subsequent hardware.

Quoting Andy Glew, who should know a thing or two about this after implementing these on the P6:

the big weakness of doing fast strings in microcode was [...] the microcode fell out of tune with every generation, getting slower and slower until somebody got around to fixing it. Just like a library men copy falls out of tune. I suppose that it is possible that one of the missed opportunities was to use 128-bit loads and stores when they became available, and so on.

In that case, it can be seen as just another "platform specific" optimization to apply in the typical every-trick-in-the-book memcpy routines you find in standard libraries and JIT compilers: but only for use on architectures where it is better. For JIT or AOT-compiled stuff this is easy, but for statically compiled binaries this does require platform specific dispatch, but that often already exists (sometimes implemented at link time), or the mtune argument can be used to make a static decision.

Simplicity

Even on Skylake, where it seems like it has fallen behind the absolute fastest non-temporal techniques, it is still faster than most approaches and is very simple. This means less time in validation, fewer mystery bugs, less time tuning and updating a monster memcpy implementation (or, conversely, less dependency on the whims of the standard library implementors if you rely on that).

Latency Bound Platforms

Memory throughput bound algorithms9 can actually be operating in two main overall regimes: DRAM bandwidth bound or concurrency/latency bound.

The first mode is the one that you are probably familiar with: the DRAM subsystem has a certain theoretic bandwidth that you can calculate pretty easily based on the number of channels, data rate/width and frequency. For example, my DDR4-2133 system with 2 channels has a max bandwidth of 2.133 * 8 * 2 = 34.1 GB/s, same as reported on ARK.

You won't sustain more than that rate from DRAM (and usually somewhat less due to various inefficiencies) added across all cores on the socket (i.e., it is a global limit for single-socket systems).

The other limit is imposed by how many concurrent requests a core can actually issue to the memory subsystem. Imagine if a core could only have 1 request in progress at once, for a 64-byte cache line - when the request completed, you could issue another. Assume also very fast 50ns memory latency. Then despite the large 34.1 GB/s DRAM bandwidth, you'd actually only get 64 bytes / 50 ns = 1.28 GB/s, or less than 4% of the max bandwidth.

In practice, cores can issue more than one request at a time, but not an unlimited number. It is usually understood that there are only 10 line fill buffers per core between the L1 and the rest of the memory hierarchy, and perhaps 16 or so fill buffers between L2 and DRAM. Prefetching competes for the same resources, but at least helps reduce the effective latency. For more details look at any of the great posts Dr. Bandwidth has written on the topic, mostly on the Intel forums.

Still, most recent CPUs are limited by this factor, not the RAM bandwidth. Typically they achieve 12 - 20 GB/s per core, while the RAM bandwidth may be 50+ GB/s (on a 4 channel system). Only some recent gen 2-channel "client" cores, which seem to have a better uncore, perhaps more line buffers can hit the DRAM limit on a single core, and our Skylake chips seem to be one of them.

Now of course, there is a reason Intel designs systems with 50 GB/s DRAM bandwidth, while only being to sustain < 20 GB/s per core due to concurrency limits: the former limit is socket-wide and the latter is per core. So each core on an 8 core system can push 20 GB/s worth of requests, at which point they will be DRAM limited again.

Why I am going on and on about this? Because the best memcpy implementation often depends on which regime you are operating in. Once you are DRAM BW limited (as our chips apparently are, but most aren't on a single core), using non-temporal writes becomes very important since it saves the read-for-ownership that normally wastes 1/3 of your bandwidth. You see that exactly in the test results above: the memcpy implementations that don't use NT stores lose 1/3 of their bandwidth.

If you are concurrency limited, however, the situation equalizes and sometimes reverses, however. You have DRAM bandwidth to spare, so NT stores don't help and they can even hurt since they may increase the latency since the handoff time for the line buffer may be longer than a scenario where prefetch brings the RFO line into LLC (or even L2) and then the store completes in LLC for an effective lower latency. Finally, server uncores tend to have much slower NT stores than client ones (and high bandwidth), which accentuates this effect.

So on other platforms you might find that NT stores are less useful (at least when you care about single-threaded performance) and perhaps rep movsb wins where (if it gets the best of both worlds).

Really, this last item is a call for most testing. I know that NT stores lose their apparent advantage for single-threaded tests on most archs (including current server archs), but I don't know how rep movsb will perform relatively...

References

Other good sources of info not integrated in the above.

comp.arch investigation of rep movsb versus alternatives. Lots of good notes about branch prediction, and an implementation of the approach I've often suggested for small blocks: using overlapping first and/or last read/writes rather than trying to write only exactly the required number of bytes (for example, implementing all copies from 9 to 16 bytes as two 8-byte copies which might overlap in up to 7 bytes).

1 Presumably the intention is to restrict it to cases where, for example, code-size is very important.

2 See Section 3.7.5: REP Prefix and Data Movement.

3 It is key to note this applies only for the various stores within the single instruction itself: once complete, the block of stores still appear ordered with respect to prior and subsequent stores. So code can see stores from the rep movs out of order with respect to each other but not with respect to prior or subsequent stores (and it's the latter guarantee you usually need). It will only be a problem if you use the end of the copy destination as a synchronization flag, instead of a separate store.

4 Note that non-temporal discrete stores also avoid most of the ordering requirements, although in practice rep movs has even more freedom since there are still some ordering constraints on WC/NT stores.

5 This is was common in the latter part of the 32-bit era, where many chips had 64-bit data paths (e.g, to support FPUs which had support for the 64-bit double type). Today, "neutered" chips such as the Pentium or Celeron brands have AVX disabled, but presumably rep movs microcode can still use 256b loads/stores.

6 E.g., due to language alignment rules, alignment attributes or operators, aliasing rules or other information determined at compile time. In the case of alignment, even if the exact alignment can't be determined, they may at least be able to hoist alignment checks out of loops or otherwise eliminate redundant checks.

7 I'm making the assumption that "standard" memcpy is choosing a non-temporal approach, which is highly likely for this size of buffer.

8 That isn't necessarily obvious, since it could be the case that the uop stream that is generated by the rep movsb simply monopolizes dispatch and then it would look very much like the explicit mov case. It seems that it doesn't work like that however - uops from subsequent instructions can mingle with uops from the microcoded rep movsb.

9 I.e., those which can issue a large number of independent memory requests and hence saturate the available DRAM-to-core bandwidth, of which memcpy would be a poster child (and as apposed to purely latency bound loads such as pointer chasing).

Solution 2

Enhanced REP MOVSB (Ivy Bridge and later)

Ivy Bridge microarchitecture (processors released in 2012 and 2013) introduced Enhanced REP MOVSB (ERMSB). We still need to check the corresponding bit. ERMS was intended to allow us to copy memory fast with rep movsb.

Cheapest versions of later processors - Kaby Lake Celeron and Pentium, released in 2017, don't have AVX that could have been used for fast memory copy, but still have the Enhanced REP MOVSB. And some of Intel's mobile and low-power architectures released in 2018 and onwards, which were not based on SkyLake, copy about twice more bytes per CPU cycle with REP MOVSB than previous generations of microarchitectures.

Enhanced REP MOVSB (ERMSB) before the Ice Lake microarchitecture with Fast Short REP MOV (FSRM) was only faster than AVX copy or general-use register copy if the block size is at least 256 bytes. For the blocks below 64 bytes, it was much slower, because there is a high internal startup in ERMSB - about 35 cycles. The FSRM feature intended blocks before 128 bytes also be quick.

See the Intel Manual on Optimization, section 3.7.6 Enhanced REP MOVSB and STOSB operation (ERMSB) http://www.intel.com/content/dam/www/public/us/en/documents/manuals/64-ia-32-architectures-optimization-manual.pdf (applies to processors which did not yet have FSRM):

- startup cost is 35 cycles;

- both the source and destination addresses have to be aligned to a 16-Byte boundary;

- the source region should not overlap with the destination region;

- the length has to be a multiple of 64 to produce higher performance;

- the direction has to be forward (CLD).

As I said earlier, REP MOVSB (on processors before FSRM) begins to outperform other methods when the length is at least 256 bytes, but to see the clear benefit over AVX copy, the length has to be more than 2048 bytes. Also, it should be noted that merely using AVX (256-bit registers) or AVX-512 (512-bit registers) for memory copy may sometimes have dire consequences like AVX/SSE transition penalties or reduced turbo frequency. So the REP MOVSB is a safer way to copy memory than AVX.

On the effect of the alignment if REP MOVSB vs. AVX copy, the Intel Manual gives the following information:

- if the source buffer is not aligned, the impact on ERMSB implementation versus 128-bit AVX is similar;

- if the destination buffer is not aligned, the effect on ERMSB implementation can be 25% degradation, while 128-bit AVX implementation of memory copy may degrade only 5%, relative to 16-byte aligned scenario.

I have made tests on Intel Core i5-6600, under 64-bit, and I have compared REP MOVSB memcpy() with a simple MOV RAX, [SRC]; MOV [DST], RAX implementation when the data fits L1 cache:

REP MOVSB memory copy

- 1622400000 data blocks of 32 bytes took 17.9337 seconds to copy; 2760.8205 MB/s

- 1622400000 data blocks of 64 bytes took 17.8364 seconds to copy; 5551.7463 MB/s

- 811200000 data blocks of 128 bytes took 10.8098 seconds to copy; 9160.5659 MB/s

- 405600000 data blocks of 256 bytes took 5.8616 seconds to copy; 16893.5527 MB/s

- 202800000 data blocks of 512 bytes took 3.9315 seconds to copy; 25187.2976 MB/s

- 101400000 data blocks of 1024 bytes took 2.1648 seconds to copy; 45743.4214 MB/s

- 50700000 data blocks of 2048 bytes took 1.5301 seconds to copy; 64717.0642 MB/s

- 25350000 data blocks of 4096 bytes took 1.3346 seconds to copy; 74198.4030 MB/s

- 12675000 data blocks of 8192 bytes took 1.1069 seconds to copy; 89456.2119 MB/s

- 6337500 data blocks of 16384 bytes took 1.1120 seconds to copy; 89053.2094 MB/s

MOV RAX... memory copy

- 1622400000 data blocks of 32 bytes took 7.3536 seconds to copy; 6733.0256 MB/s

- 1622400000 data blocks of 64 bytes took 10.7727 seconds to copy; 9192.1090 MB/s

- 811200000 data blocks of 128 bytes took 8.9408 seconds to copy; 11075.4480 MB/s

- 405600000 data blocks of 256 bytes took 8.4956 seconds to copy; 11655.8805 MB/s

- 202800000 data blocks of 512 bytes took 9.1032 seconds to copy; 10877.8248 MB/s

- 101400000 data blocks of 1024 bytes took 8.2539 seconds to copy; 11997.1185 MB/s

- 50700000 data blocks of 2048 bytes took 7.7909 seconds to copy; 12710.1252 MB/s

- 25350000 data blocks of 4096 bytes took 7.5992 seconds to copy; 13030.7062 MB/s

- 12675000 data blocks of 8192 bytes took 7.4679 seconds to copy; 13259.9384 MB/s

So, even on 128-bit blocks, REP MOVSB (on processors before FSRM) is slower than just a simple MOV RAX copy in a loop (not unrolled). The ERMSB implementation begins to outperform the MOV RAX loop only starting from 256-byte blocks.

Fast Short REP MOV (FSRM)

The Ice Lake microarchitecture launched in September 2019 introduced the Fast Short REP MOV (FSRM). This feature can be tested by a CPUID bit. It was intended for strings of 128 bytes and less to also be quick, but, in fact, strings before 64 bytes are still slower with rep movsb than with, for example, simple 64-bit register copy. Besides that, FSRM is only implemented under 64-bit, not under 32-bit. At least on my i7-1065G7 CPU, rep movsb is only quick for small strings under 64-bit, but on 32-bit strings have to be at least 4KB in order for rep movsb to start outperforming other methods.

Normal (not enhanced) REP MOVS on Nehalem (2009-2013)

Surprisingly, previous architectures (Nehalem and later, up to, but not including Ivy Bridge), that didn't yet have Enhanced REP MOVB, had relatively fast REP MOVSD/MOVSQ (but not REP MOVSB/MOVSW) implementation for large blocks, but not large enough to outsize the L1 cache.

Intel Optimization Manual (2.5.6 REP String Enhancement) gives the following information is related to Nehalem microarchitecture - Intel Core i5, i7 and Xeon processors released in 2009 and 2010, and later microarchitectures, including Sandy Bridge manufactured up to 2013.

REP MOVSB

The latency for MOVSB is 9 cycles if ECX < 4. Otherwise, REP MOVSB with ECX > 9 has a 50-cycle startup cost.

- tiny string (ECX < 4): the latency of REP MOVSB is 9 cycles;

- small string (ECX is between 4 and 9): no official information in the Intel manual, probably more than 9 cycles but less than 50 cycles;

- long string (ECX > 9): 50-cycle startup cost.

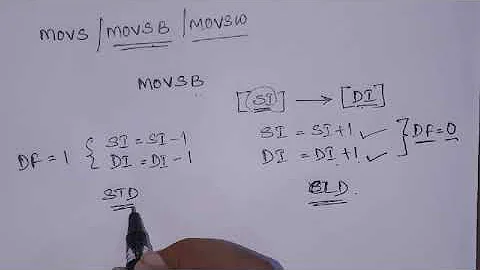

MOVSW/MOVSD/MOVSQ

Quote from the Intel Optimization Manual (2.5.6 REP String Enhancement):

- Short string (ECX <= 12): the latency of REP MOVSW/MOVSD/MOVSQ is about 20 cycles.

- Fast string (ECX >= 76: excluding REP MOVSB): the processor implementation provides hardware optimization by moving as many pieces of data in 16 bytes as possible. The latency of REP string latency will vary if one of the 16-byte data transfer spans across cache line boundary:

- = Split-free: the latency consists of a startup cost of about 40 cycles, and every 64 bytes of data adds 4 cycles.

- = Cache splits: the latency consists of a startup cost of about 35 cycles, and every 64 bytes of data adds 6 cycles.

- Intermediate string lengths: the latency of REP MOVSW/MOVSD/MOVSQ has a startup cost of about 15 cycles plus one cycle for each iteration of the data movement in word/dword/qword.

Therefore, according to Intel, for very large memory blocks, REP MOVSW is as fast as REP MOVSD/MOVSQ. Anyway, my tests have shown that only REP MOVSD/MOVSQ are fast, while REP MOVSW is even slower than REP MOVSB on Nehalem and Westmere.

According to the information provided by Intel in the manual, on previous Intel microarchitectures (before 2008) the startup costs are even higher.

Conclusion: if you just need to copy data that fits L1 cache, just 4 cycles to copy 64 bytes of data is excellent, and you don't need to use XMM registers!

#REP MOVSD/MOVSQ is the universal solution that works excellent on all Intel processors (no ERMSB required) if the data fits L1 cache #

Here are the tests of REP MOVS* when the source and destination was in the L1 cache, of blocks large enough to not be seriously affected by startup costs, but not that large to exceed the L1 cache size. Source: http://users.atw.hu/instlatx64/

Yonah (2006-2008)

REP MOVSB 10.91 B/c

REP MOVSW 10.85 B/c

REP MOVSD 11.05 B/c

Nehalem (2009-2010)

REP MOVSB 25.32 B/c

REP MOVSW 19.72 B/c

REP MOVSD 27.56 B/c

REP MOVSQ 27.54 B/c

Westmere (2010-2011)

REP MOVSB 21.14 B/c

REP MOVSW 19.11 B/c

REP MOVSD 24.27 B/c

Ivy Bridge (2012-2013) - with Enhanced REP MOVSB (all subsequent CPUs also have Enhanced REP MOVSB)

REP MOVSB 28.72 B/c

REP MOVSW 19.40 B/c

REP MOVSD 27.96 B/c

REP MOVSQ 27.89 B/c

SkyLake (2015-2016)

REP MOVSB 57.59 B/c

REP MOVSW 58.20 B/c

REP MOVSD 58.10 B/c

REP MOVSQ 57.59 B/c

Kaby Lake (2016-2017)

REP MOVSB 58.00 B/c

REP MOVSW 57.69 B/c

REP MOVSD 58.00 B/c

REP MOVSQ 57.89 B/c

I have presented test results for both SkyLake and Kaby Lake just for the sake of confirmation - these architectures have the same cycle-per-instruction data.

Cannon Lake, mobile (May 2018 - February 2020)

REP MOVSB 107.44 B/c

REP MOVSW 106.74 B/c

REP MOVSD 107.08 B/c

REP MOVSQ 107.08 B/c

Cascade lake, server (April 2019)

REP MOVSB 58.72 B/c

REP MOVSW 58.51 B/c

REP MOVSD 58.51 B/c

REP MOVSQ 58.20 B/c

Comet Lake, desktop, workstation, mobile (August 2019)

REP MOVSB 58.72 B/c

REP MOVSW 58.62 B/c

REP MOVSD 58.72 B/c

REP MOVSQ 58.72 B/c

Ice Lake, mobile (September 2019)

REP MOVSB 102.40 B/c

REP MOVSW 101.14 B/c

REP MOVSD 101.14 B/c

REP MOVSQ 101.14 B/c

Tremont, low power (September, 2020)

REP MOVSB 119.84 B/c

REP MOVSW 121.78 B/c

REP MOVSD 121.78 B/c

REP MOVSQ 121.78 B/c

Tiger Lake, mobile (October, 2020)

REP MOVSB 93.27 B/c

REP MOVSW 93.09 B/c

REP MOVSD 93.09 B/c

REP MOVSQ 93.09 B/c

As you see, the implementation of REP MOVS differs significantly from one microarchitecture to another. On some processors, like Ivy Bridge - REP MOVSB is fastest, albeit just slightly faster than REP MOVSD/MOVSQ, but no doubt that on all processors since Nehalem, REP MOVSD/MOVSQ works very well - you even don't need "Enhanced REP MOVSB", since, on Ivy Bridge (2013) with Enhacnced REP MOVSB, REP MOVSD shows the same byte per clock data as on Nehalem (2010) without Enhacnced REP MOVSB, while in fact REP MOVSB became very fast only since SkyLake (2015) - twice as fast as on Ivy Bridge. So this Enhacnced REP MOVSB bit in the CPUID may be confusing - it only shows that REP MOVSB per se is OK, but not that any REP MOVS* is faster.

The most confusing ERMSB implementation is on the Ivy Bridge microarchitecture. Yes, on very old processors, before ERMSB, REP MOVS* for large blocks did use a cache protocol feature that is not available to regular code (no-RFO). But this protocol is no longer used on Ivy Bridge that has ERMSB. According to Andy Glew's comments on an answer to "why are complicated memcpy/memset superior?" from a Peter Cordes answer, a cache protocol feature that is not available to regular code was once used on older processors, but no longer on Ivy Bridge. And there comes an explanation of why the startup costs are so high for REP MOVS*: „The large overhead for choosing and setting up the right method is mainly due to the lack of microcode branch prediction”. There has also been an interesting note that Pentium Pro (P6) in 1996 implemented REP MOVS* with 64 bit microcode loads and stores and a no-RFO cache protocol - they did not violate memory ordering, unlike ERMSB in Ivy Bridge.

As about rep movsb vs rep movsq, on some processors with ERMSB rep movsb is slightly faster (e.g., Xeon E3-1246 v3), on other rep movsq is faster (Skylake), and on other it is the same speed (e.g. i7-1065G7). However, I would go for rep movsq rather than rep movsb anyway.

Please also note that this answer is only relevant for the cases where the source and the destination data fits L1 cache. Depending on circumstances, the particularities of memory access (cache, etc.) should be taken into consideration. Please also note that the information in this answer is only related to Intel processors and not to the processors by other manufacturers like AMD that may have better or worse implementations of REP MOVS* instructions.

Tinymembench results

Here are some of the tinymembench results to show relative performance of the rep movsb and rep movsd.

Intel Xeon E5-1650V3

Haswell microarchitecture, ERMS, AVX-2, released on September 2014 for $583, base frequency 3.5 GHz, max turbo frequency: 3.8 GHz (one core), L2 cache 6 × 256 KB, L3 cache 15 MB, supports up to 4×DDR4-2133, installed 8 modules of 32768 MB DDR4 ECC reg (256GB total RAM).

C copy backwards : 7268.8 MB/s (1.5%)

C copy backwards (32 byte blocks) : 7264.3 MB/s

C copy backwards (64 byte blocks) : 7271.2 MB/s

C copy : 7147.2 MB/s

C copy prefetched (32 bytes step) : 7044.6 MB/s

C copy prefetched (64 bytes step) : 7032.5 MB/s

C 2-pass copy : 6055.3 MB/s

C 2-pass copy prefetched (32 bytes step) : 6350.6 MB/s

C 2-pass copy prefetched (64 bytes step) : 6336.4 MB/s

C fill : 11072.2 MB/s

C fill (shuffle within 16 byte blocks) : 11071.3 MB/s

C fill (shuffle within 32 byte blocks) : 11070.8 MB/s

C fill (shuffle within 64 byte blocks) : 11072.0 MB/s

---

standard memcpy : 11608.9 MB/s

standard memset : 15789.7 MB/s

---

MOVSB copy : 8123.9 MB/s

MOVSD copy : 8100.9 MB/s (0.3%)

SSE2 copy : 7213.2 MB/s

SSE2 nontemporal copy : 11985.5 MB/s

SSE2 copy prefetched (32 bytes step) : 7055.8 MB/s

SSE2 copy prefetched (64 bytes step) : 7044.3 MB/s

SSE2 nontemporal copy prefetched (32 bytes step) : 11794.4 MB/s

SSE2 nontemporal copy prefetched (64 bytes step) : 11813.1 MB/s

SSE2 2-pass copy : 6394.3 MB/s

SSE2 2-pass copy prefetched (32 bytes step) : 6255.9 MB/s

SSE2 2-pass copy prefetched (64 bytes step) : 6234.0 MB/s

SSE2 2-pass nontemporal copy : 4279.5 MB/s

SSE2 fill : 10745.0 MB/s

SSE2 nontemporal fill : 22014.4 MB/s

Intel Xeon E3-1246 v3

Haswell, ERMS, AVX-2, 3.50GHz

C copy backwards : 6911.8 MB/s

C copy backwards (32 byte blocks) : 6919.0 MB/s

C copy backwards (64 byte blocks) : 6924.6 MB/s

C copy : 6934.3 MB/s (0.2%)

C copy prefetched (32 bytes step) : 6860.1 MB/s

C copy prefetched (64 bytes step) : 6875.6 MB/s (0.1%)

C 2-pass copy : 6471.2 MB/s

C 2-pass copy prefetched (32 bytes step) : 6710.3 MB/s

C 2-pass copy prefetched (64 bytes step) : 6745.5 MB/s (0.3%)

C fill : 10812.1 MB/s (0.2%)

C fill (shuffle within 16 byte blocks) : 10807.7 MB/s

C fill (shuffle within 32 byte blocks) : 10806.6 MB/s

C fill (shuffle within 64 byte blocks) : 10809.7 MB/s

---

standard memcpy : 10922.0 MB/s

standard memset : 28935.1 MB/s

---

MOVSB copy : 9656.7 MB/s

MOVSD copy : 9430.1 MB/s

SSE2 copy : 6939.1 MB/s

SSE2 nontemporal copy : 10820.6 MB/s

SSE2 copy prefetched (32 bytes step) : 6857.4 MB/s

SSE2 copy prefetched (64 bytes step) : 6854.9 MB/s

SSE2 nontemporal copy prefetched (32 bytes step) : 10774.2 MB/s

SSE2 nontemporal copy prefetched (64 bytes step) : 10782.1 MB/s

SSE2 2-pass copy : 6683.0 MB/s

SSE2 2-pass copy prefetched (32 bytes step) : 6687.6 MB/s

SSE2 2-pass copy prefetched (64 bytes step) : 6685.8 MB/s

SSE2 2-pass nontemporal copy : 5234.9 MB/s

SSE2 fill : 10622.2 MB/s

SSE2 nontemporal fill : 22515.2 MB/s (0.1%)

Intel Xeon Skylake-SP

Skylake, ERMS, AVX-512, 2.1 GHz

MOVSB copy : 4619.3 MB/s (0.6%)

SSE2 fill : 9774.4 MB/s (1.5%)

SSE2 nontemporal fill : 6715.7 MB/s (1.1%)

Intel Xeon E3-1275V6

Kaby Lake, released on March 2017 for $339, base frequency 3.8 GHz, max turbo frequency 4.2 GHz, L2 cache 4 × 256 KB, L3 cache 8 MB, 4 cores (8 threads), 4 RAM modules of 16384 MB DDR4 ECC installed, but it can use only 2 memory channels.

MOVSB copy : 11720.8 MB/s

SSE2 fill : 15877.6 MB/s (2.7%)

SSE2 nontemporal fill : 36407.1 MB/s

Intel i7-1065G7

Ice Lake, AVX-512, ERMS, FSRM, 1.37 GHz (worked at the base frequency, turbo mode disabled)

MOVSB copy : 7322.7 MB/s

SSE2 fill : 9681.7 MB/s

SSE2 nontemporal fill : 16426.2 MB/s

AMD EPYC 7401P

Released on June 2017 at US $1075, based on Zen gen.1 microarchitecture, 24 cores (48 threads), base frequency: 2.0GHz, max turbo boost: 3.0GHz (few cores) or 2.8 (all cores); cache: L1 - 64 KB inst. & 32 KB data per core, L2 - 512 KB per core, L3 - 64 MB, 8 MB per CCX, DDR4-2666 8 channels, but only 4 RAM modules of 32768 MB each of DDR4 ECC reg. installed.

MOVSB copy : 7718.0 MB/s

SSE2 fill : 11233.5 MB/s

SSE2 nontemporal fill : 34893.3 MB/s

AMD Ryzen 7 1700X (4 RAM modules installed)

MOVSB copy : 7444.7 MB/s

SSE2 fill : 11100.1 MB/s

SSE2 nontemporal fill : 31019.8 MB/s

AMD Ryzen 7 Pro 1700X (2 RAM modules installed)

MOVSB copy : 7251.6 MB/s

SSE2 fill : 10691.6 MB/s

SSE2 nontemporal fill : 31014.7 MB/s

AMD Ryzen 7 Pro 1700X (4 RAM modules installed)

MOVSB copy : 7429.1 MB/s

SSE2 fill : 10954.6 MB/s

SSE2 nontemporal fill : 30957.5 MB/s

Conclusion

REP MOVSD/MOVSQ is the universal solution that works relatively well on all Intel processors for large memory blocks of at least 4KB (no ERMSB required) if the destination is aligned by at least 64 bytes. REP MOVSD/MOVSQ works even better on newer processors, starting from Skylake. And, for Ice Lake or newer microarchitectures, it works perfectly for even very small strings of at least 64 bytes.

Solution 3

You say that you want:

an answer that shows when ERMSB is useful

But I'm not sure it means what you think it means. Looking at the 3.7.6.1 docs you link to, it explicitly says:

implementing memcpy using ERMSB might not reach the same level of throughput as using 256-bit or 128-bit AVX alternatives, depending on length and alignment factors.

So just because CPUID indicates support for ERMSB, that isn't a guarantee that REP MOVSB will be the fastest way to copy memory. It just means it won't suck as bad as it has in some previous CPUs.

However just because there may be alternatives that can, under certain conditions, run faster doesn't mean that REP MOVSB is useless. Now that the performance penalties that this instruction used to incur are gone, it is potentially a useful instruction again.

Remember, it is a tiny bit of code (2 bytes!) compared to some of the more involved memcpy routines I have seen. Since loading and running big chunks of code also has a penalty (throwing some of your other code out of the cpu's cache), sometimes the 'benefit' of AVX et al is going to be offset by the impact it has on the rest of your code. Depends on what you are doing.

You also ask:

Why is the bandwidth so much lower with REP MOVSB? What can I do to improve it?

It isn't going to be possible to "do something" to make REP MOVSB run any faster. It does what it does.

If you want the higher speeds you are seeing from from memcpy, you can dig up the source for it. It's out there somewhere. Or you can trace into it from a debugger and see the actual code paths being taken. My expectation is that it's using some of those AVX instructions to work with 128 or 256bits at a time.

Or you can just... Well, you asked us not to say it.

Solution 4

This is not an answer to the stated question(s), only my results (and personal conclusions) when trying to find out.

In summary: GCC already optimizes memset()/memmove()/memcpy() (see e.g. gcc/config/i386/i386.c:expand_set_or_movmem_via_rep() in the GCC sources; also look for stringop_algs in the same file to see architecture-dependent variants). So, there is no reason to expect massive gains by using your own variant with GCC (unless you've forgotten important stuff like alignment attributes for your aligned data, or do not enable sufficiently specific optimizations like -O2 -march= -mtune=). If you agree, then the answers to the stated question are more or less irrelevant in practice.

(I only wish there was a memrepeat(), the opposite of memcpy() compared to memmove(), that would repeat the initial part of a buffer to fill the entire buffer.)

I currently have an Ivy Bridge machine in use (Core i5-6200U laptop, Linux 4.4.0 x86-64 kernel, with erms in /proc/cpuinfo flags). Because I wanted to find out if I can find a case where a custom memcpy() variant based on rep movsb would outperform a straightforward memcpy(), I wrote an overly complicated benchmark.

The core idea is that the main program allocates three large memory areas: original, current, and correct, each exactly the same size, and at least page-aligned. The copy operations are grouped into sets, with each set having distinct properties, like all sources and targets being aligned (to some number of bytes), or all lengths being within the same range. Each set is described using an array of src, dst, n triplets, where all src to src+n-1 and dst to dst+n-1 are completely within the current area.

A Xorshift* PRNG is used to initialize original to random data. (Like I warned above, this is overly complicated, but I wanted to ensure I'm not leaving any easy shortcuts for the compiler.) The correct area is obtained by starting with original data in current, applying all the triplets in the current set, using memcpy() provided by the C library, and copying the current area to correct. This allows each benchmarked function to be verified to behave correctly.

Each set of copy operations is timed a large number of times using the same function, and the median of these is used for comparison. (In my opinion, median makes the most sense in benchmarking, and provides sensible semantics -- the function is at least that fast at least half the time.)

To avoid compiler optimizations, I have the program load the functions and benchmarks dynamically, at run time. The functions all have the same form, void function(void *, const void *, size_t) -- note that unlike memcpy() and memmove(), they return nothing. The benchmarks (named sets of copy operations) are generated dynamically by a function call (that takes the pointer to the current area and its size as parameters, among others).

Unfortunately, I have not yet found any set where

static void rep_movsb(void *dst, const void *src, size_t n)

{

__asm__ __volatile__ ( "rep movsb\n\t"

: "+D" (dst), "+S" (src), "+c" (n)

:

: "memory" );

}

would beat

static void normal_memcpy(void *dst, const void *src, size_t n)

{

memcpy(dst, src, n);

}

using gcc -Wall -O2 -march=ivybridge -mtune=ivybridge using GCC 5.4.0 on aforementioned Core i5-6200U laptop running a linux-4.4.0 64-bit kernel. Copying 4096-byte aligned and sized chunks comes close, however.

This means that at least thus far, I have not found a case where using a rep movsb memcpy variant would make sense. It does not mean there is no such case; I just haven't found one.

(At this point the code is a spaghetti mess I'm more ashamed than proud of, so I shall omit publishing the sources unless someone asks. The above description should be enough to write a better one, though.)

This does not surprise me much, though. The C compiler can infer a lot of information about the alignment of the operand pointers, and whether the number of bytes to copy is a compile-time constant, a multiple of a suitable power of two. This information can, and will/should, be used by the compiler to replace the C library memcpy()/memmove() functions with its own.

GCC does exactly this (see e.g. gcc/config/i386/i386.c:expand_set_or_movmem_via_rep() in the GCC sources; also look for stringop_algs in the same file to see architecture-dependent variants). Indeed, memcpy()/memset()/memmove() has already been separately optimized for quite a few x86 processor variants; it would quite surprise me if the GCC developers had not already included erms support.

GCC provides several function attributes that developers can use to ensure good generated code. For example, alloc_align (n) tells GCC that the function returns memory aligned to at least n bytes. An application or a library can choose which implementation of a function to use at run time, by creating a "resolver function" (that returns a function pointer), and defining the function using the ifunc (resolver) attribute.

One of the most common patterns I use in my code for this is

some_type *pointer = __builtin_assume_aligned(ptr, alignment);

where ptr is some pointer, alignment is the number of bytes it is aligned to; GCC then knows/assumes that pointer is aligned to alignment bytes.

Another useful built-in, albeit much harder to use correctly, is __builtin_prefetch(). To maximize overall bandwidth/efficiency, I have found that minimizing latencies in each sub-operation, yields the best results. (For copying scattered elements to consecutive temporary storage, this is difficult, as prefetching typically involves a full cache line; if too many elements are prefetched, most of the cache is wasted by storing unused items.)

Solution 5

There are far more efficient ways to move data. These days, the implementation of memcpy will generate architecture specific code from the compiler that is optimized based upon the memory alignment of the data and other factors. This allows better use of non-temporal cache instructions and XMM and other registers in the x86 world.

When you hard-code rep movsb prevents this use of intrinsics.

Therefore, for something like a memcpy, unless you are writing something that will be tied to a very specific piece of hardware and unless you are going to take the time to write a highly optimized memcpy function in assembly (or using C level intrinsics), you are far better off allowing the compiler to figure it out for you.

Related videos on Youtube

Z boson

Updated on June 12, 2021Comments

-

Z boson almost 3 years

Z boson almost 3 yearsI would like to use enhanced REP MOVSB (ERMSB) to get a high bandwidth for a custom

memcpy.ERMSB was introduced with the Ivy Bridge microarchitecture. See the section "Enhanced REP MOVSB and STOSB operation (ERMSB)" in the Intel optimization manual if you don't know what ERMSB is.

The only way I know to do this directly is with inline assembly. I got the following function from https://groups.google.com/forum/#!topic/gnu.gcc.help/-Bmlm_EG_fE

static inline void *__movsb(void *d, const void *s, size_t n) { asm volatile ("rep movsb" : "=D" (d), "=S" (s), "=c" (n) : "0" (d), "1" (s), "2" (n) : "memory"); return d; }When I use this however, the bandwidth is much less than with

memcpy.__movsbgets 15 GB/s andmemcpyget 26 GB/s with my i7-6700HQ (Skylake) system, Ubuntu 16.10, DDR4@2400 MHz dual channel 32 GB, GCC 6.2.Why is the bandwidth so much lower with

REP MOVSB? What can I do to improve it?Here is the code I used to test this.

//gcc -O3 -march=native -fopenmp foo.c #include <stdlib.h> #include <string.h> #include <stdio.h> #include <stddef.h> #include <omp.h> #include <x86intrin.h> static inline void *__movsb(void *d, const void *s, size_t n) { asm volatile ("rep movsb" : "=D" (d), "=S" (s), "=c" (n) : "0" (d), "1" (s), "2" (n) : "memory"); return d; } int main(void) { int n = 1<<30; //char *a = malloc(n), *b = malloc(n); char *a = _mm_malloc(n,4096), *b = _mm_malloc(n,4096); memset(a,2,n), memset(b,1,n); __movsb(b,a,n); printf("%d\n", memcmp(b,a,n)); double dtime; dtime = -omp_get_wtime(); for(int i=0; i<10; i++) __movsb(b,a,n); dtime += omp_get_wtime(); printf("dtime %f, %.2f GB/s\n", dtime, 2.0*10*1E-9*n/dtime); dtime = -omp_get_wtime(); for(int i=0; i<10; i++) memcpy(b,a,n); dtime += omp_get_wtime(); printf("dtime %f, %.2f GB/s\n", dtime, 2.0*10*1E-9*n/dtime); }

The reason I am interested in

rep movsbis based off these commentsNote that on Ivybridge and Haswell, with buffers to large to fit in MLC you can beat movntdqa using rep movsb; movntdqa incurs a RFO into LLC, rep movsb does not... rep movsb is significantly faster than movntdqa when streaming to memory on Ivybridge and Haswell (but be aware that pre-Ivybridge it is slow!)

What's missing/sub-optimal in this memcpy implementation?

Here are my results on the same system from tinymembnech.

C copy backwards : 7910.6 MB/s (1.4%) C copy backwards (32 byte blocks) : 7696.6 MB/s (0.9%) C copy backwards (64 byte blocks) : 7679.5 MB/s (0.7%) C copy : 8811.0 MB/s (1.2%) C copy prefetched (32 bytes step) : 9328.4 MB/s (0.5%) C copy prefetched (64 bytes step) : 9355.1 MB/s (0.6%) C 2-pass copy : 6474.3 MB/s (1.3%) C 2-pass copy prefetched (32 bytes step) : 7072.9 MB/s (1.2%) C 2-pass copy prefetched (64 bytes step) : 7065.2 MB/s (0.8%) C fill : 14426.0 MB/s (1.5%) C fill (shuffle within 16 byte blocks) : 14198.0 MB/s (1.1%) C fill (shuffle within 32 byte blocks) : 14422.0 MB/s (1.7%) C fill (shuffle within 64 byte blocks) : 14178.3 MB/s (1.0%) --- standard memcpy : 12784.4 MB/s (1.9%) standard memset : 30630.3 MB/s (1.1%) --- MOVSB copy : 8712.0 MB/s (2.0%) MOVSD copy : 8712.7 MB/s (1.9%) SSE2 copy : 8952.2 MB/s (0.7%) SSE2 nontemporal copy : 12538.2 MB/s (0.8%) SSE2 copy prefetched (32 bytes step) : 9553.6 MB/s (0.8%) SSE2 copy prefetched (64 bytes step) : 9458.5 MB/s (0.5%) SSE2 nontemporal copy prefetched (32 bytes step) : 13103.2 MB/s (0.7%) SSE2 nontemporal copy prefetched (64 bytes step) : 13179.1 MB/s (0.9%) SSE2 2-pass copy : 7250.6 MB/s (0.7%) SSE2 2-pass copy prefetched (32 bytes step) : 7437.8 MB/s (0.6%) SSE2 2-pass copy prefetched (64 bytes step) : 7498.2 MB/s (0.9%) SSE2 2-pass nontemporal copy : 3776.6 MB/s (1.4%) SSE2 fill : 14701.3 MB/s (1.6%) SSE2 nontemporal fill : 34188.3 MB/s (0.8%)Note that on my system

SSE2 copy prefetchedis also faster thanMOVSB copy.

In my original tests I did not disable turbo. I disabled turbo and tested again and it does not appear to make much of a difference. However, changing the power management does make a big difference.

When I do

sudo cpufreq-set -r -g performanceI sometimes see over 20 GB/s with

rep movsb.with

sudo cpufreq-set -r -g powersavethe best I see is about 17 GB/s. But

memcpydoes not seem to be sensitive to the power management.

I checked the frequency (using

turbostat) with and without SpeedStep enabled, withperformanceand withpowersavefor idle, a 1 core load and a 4 core load. I ran Intel's MKL dense matrix multiplication to create a load and set the number of threads usingOMP_SET_NUM_THREADS. Here is a table of the results (numbers in GHz).SpeedStep idle 1 core 4 core powersave OFF 0.8 2.6 2.6 performance OFF 2.6 2.6 2.6 powersave ON 0.8 3.5 3.1 performance ON 3.5 3.5 3.1This shows that with

powersaveeven with SpeedStep disabled the CPU still clocks down to the idle frequency of0.8 GHz. It's only withperformancewithout SpeedStep that the CPU runs at a constant frequency.I used e.g

sudo cpufreq-set -r performance(becausecpufreq-setwas giving strange results) to change the power settings. This turns turbo back on so I had to disable turbo after.-

Ped7g about 7 years"What can I do to improve it?" ... basically nothing. The

memcpyimplementation in current version of compiler is very likely as close to the optimal solution, as you can get with any generic function. If you have some special case like always moving exactly 15 bytes/etc, then maybe a custom asm solution may beat the gcc compiler, but if your C source is vocal enough about what is happening (giving compiler good hints about alignment, length, etc), the compiler will very likely produce optimal machine code even for those specialized cases. You can try to improve the compiler output first. -

Z boson about 7 years@Ped7g, I don't expect it to be better than

Z boson about 7 years@Ped7g, I don't expect it to be better thanmemcpy. I expect it to be about as good asmemcpy. I used gdb to step throughmemcpyand I see that it enters a mainloop withrep movsb. So that appears to be whatmemcpyuses anyway (in some cases). -

Art about 7 yearsChange the order of the tests. What results do you get then?

-

Z boson about 7 years@Art, I get the same result (26GB/s for memcpy and 15 GB/s for __movsb).

Z boson about 7 years@Art, I get the same result (26GB/s for memcpy and 15 GB/s for __movsb). -

Art about 7 yearsFair enough. When in doubt, suspect the benchmark. But that doesn't seem to be the problem here. For what it's worth it's faster on an Ivy Bridge machine I had accessible (both when run first and second).

-

Z boson about 7 years@Art, thats interesting! I wonder why that is on your IVB system. Yeah, benchmarking is a pain. I recently answered a question which I had to edit several times due to benchmarking problems that I did not expect.

Z boson about 7 years@Art, thats interesting! I wonder why that is on your IVB system. Yeah, benchmarking is a pain. I recently answered a question which I had to edit several times due to benchmarking problems that I did not expect. -

Z boson about 7 years@Art maybe

Z boson about 7 years@Art maybeenhanced rep movsbis not so enhanced on Skylake (my system)? Still I don't understand why you had to change the order. -

Art about 7 years@Zboson The order didn't matter for me either. The "Change the order" comment was before I found a machine with the right CPU. It's 50% faster too, which is quite significant. On the other hand, on another machine with a newer CPU the performance is reversed.

-

Z boson about 7 years@Art, what function was 50% faster and on what machine?

Z boson about 7 years@Art, what function was 50% faster and on what machine? -

Art about 7 years@Zboson the movsb function was 50% faster on an Ivy Bridge machine.

-

Kerrek SB about 7 years@Zboson: No, I haven't heard of it I'm afraid. Is the term defined in the Intel instruction manual?

-

Z boson about 7 years@KerrekSB, yes, it's in section "3.7.6 Enhanced REP MOVSB and STOSB operation (ERMSB)

Z boson about 7 years@KerrekSB, yes, it's in section "3.7.6 Enhanced REP MOVSB and STOSB operation (ERMSB) -

Kerrek SB about 7 yearsInteresting. Did you check with cpuid that the feature is available on your CPU?

-

Kerrek SB about 7 yearsThe optimization manual suggests that ERMSB is better at providing small code size and at throughput than traditional REP-MOV/STO, but "implementing memcpy using ERMSB might not reach the same level of throughput as using 256-bit or 128-bit AVX alternatives, depending on length and alignment factors." The way I understand this is that it's enhanced for situations where you might previously already have used rep instructions, but it does not aim to compete with modern high-throughput alternatives.

-

Z boson about 7 years@KerrekSB, no. I assume that processors since Ivy Bridge have it.

Z boson about 7 years@KerrekSB, no. I assume that processors since Ivy Bridge have it.less /proc/cpuinfo | grep ermsshows erms. -

Kerrek SB about 7 years@Zboson: Yeah, same thing, that's good enough.

-

Z boson about 7 years@KerrekSB, yeah, I read that statement but was confused by it. I am basing everything off of the comment " rep movsb is significantly faster than movntdqa when streaming to memory on Ivybridge and Haswell (but be aware that pre-Ivybridge it is slow!)" (see the update at the end of my question).

Z boson about 7 years@KerrekSB, yeah, I read that statement but was confused by it. I am basing everything off of the comment " rep movsb is significantly faster than movntdqa when streaming to memory on Ivybridge and Haswell (but be aware that pre-Ivybridge it is slow!)" (see the update at the end of my question). -

Kerrek SB about 7 yearsHow about stepping through the machine code in a debugger and checking whether your memcpy actually uses movntdqa? It seems plausible that it would use SSE or AVX instructions instead. I have a feeling that ERMSB is meant to be better than some things, not better than everything.

-

Z boson about 7 yearsI have used

Z boson about 7 yearsI have usedgdbto studymemcpy. For a size defined at run time it used non temporal stores and some prefetching. For the same size (1GB) defined at compile time it usedrep movsb. I only looked at it once so it's possible I misinterpreted something. My own implementation usingmovntdqadoes about as well asmemcpy. -

Nominal Animal about 7 years@Zboson: You need a better microbenchmark/timing test. Your source and destination are both AVX vector aligned, and that will affect how the compiler will implement the memcpy(). I've done similar tests using pregenerated pseudo-random source-target-length tuples, with different alignment situations tested separately, to better mimic real world use cases. But, my real-world code usually memcpys cold data, and timing cache-cold behaviour is hard. Perhaps consider timing some real-world memcpy()/memmove()-heavy task?

Nominal Animal about 7 years@Zboson: You need a better microbenchmark/timing test. Your source and destination are both AVX vector aligned, and that will affect how the compiler will implement the memcpy(). I've done similar tests using pregenerated pseudo-random source-target-length tuples, with different alignment situations tested separately, to better mimic real world use cases. But, my real-world code usually memcpys cold data, and timing cache-cold behaviour is hard. Perhaps consider timing some real-world memcpy()/memmove()-heavy task? -

Z boson about 7 years@NominalAnimal, that's an interesting point. You mean that ERMSB is useful in less ideal situations e.g. where destination and source are not aligned. I thought alignment was critical to ERMSB? In any case, if you can demonstrate where ERMSB is useful then that would be a good answer. Show me a better microbenchmark/timing test.

Z boson about 7 years@NominalAnimal, that's an interesting point. You mean that ERMSB is useful in less ideal situations e.g. where destination and source are not aligned. I thought alignment was critical to ERMSB? In any case, if you can demonstrate where ERMSB is useful then that would be a good answer. Show me a better microbenchmark/timing test. -

Iwillnotexist Idonotexist about 7 years@NominalAnimal Intel's Optimization Manual, Table 3-4 claims that when both source and destination are at least 16B-aligned and the transfer size is 128-4096 bytes, ERMSB meets or exceeds Intel's own AVX-based

memcpy(). Although no one knows what thismemcpy()is, you can plausibly assume Intel would know how to get >50% of maximum bandwidth on their own chip. -

Z boson about 7 years@IwillnotexistIdonotexist, you don't need to compare to

Z boson about 7 years@IwillnotexistIdonotexist, you don't need to compare tomemcpy. You could compare ERMSB to a SSE/AVX solution or better to a solution with non-temporal stores. That's what I would do in this case: use non-temporal stores. But this comment and the comment that followed said even in the 1GB case ERMSB should win. Shouldn't the non-temporal stores prevent the prefetchers from reading the destination? I thought that was the point in using them. -

Iwillnotexist Idonotexist about 7 years@Zboson My glibc's

memcpy()uses AVX NT stores. And both NT stores and ERMSB behave in a write-combining fashion, and thus should not require RFO's. Nevertheless, my benchmarks on my own machine show that mymemcpy()and my ERMSB both cap out at 2/3rds of total bandwidth, like yourmemcpy()(but not your ERMSB) did Therefore, there is clearly an extra bus transaction somewhere, and it stinks a lot like an RFO. -

Z boson about 7 years@IwillnotexistIdonotexist your 2/3 observation is very interesting. I think I can get better than 2/3 using two threads. I'm not sure why my ERMSB on Skylake performs worse than your ERMSB on Haswell.

Z boson about 7 years@IwillnotexistIdonotexist your 2/3 observation is very interesting. I think I can get better than 2/3 using two threads. I'm not sure why my ERMSB on Skylake performs worse than your ERMSB on Haswell. -

Z boson about 7 years@IwillnotexistIdonotexist, did you see any benefit for more than 2 threads? I have not looked at performance counters before. That's a weakness I need to fix. What tools do you use for this? Agner Fog has a tool for this but it was a bit complicated. I should look into that again. What about

Z boson about 7 years@IwillnotexistIdonotexist, did you see any benefit for more than 2 threads? I have not looked at performance counters before. That's a weakness I need to fix. What tools do you use for this? Agner Fog has a tool for this but it was a bit complicated. I should look into that again. What aboutperf? If you answer the question please share the details. -

Iwillnotexist Idonotexist about 7 years@Zboson At 2 threads it's about 21GB/s, 4+ it saturates at 23GB/s. I examine performance counters using some homebrew software I wrote:

libpfc. It's nasty, far more limited thanocperf.py, only known to work on my own machine, only works properly for benching single-threaded code, but because I can easily (re)program the counters and access the timings from within the program, and I can tightly sandwich the code to be benchmarked, it suits my needs. Some day I'll have the time to fix its myriad issues. -

Z boson about 7 yearsIn case anyone cares here is a simpler inline assembly solution

Z boson about 7 yearsIn case anyone cares here is a simpler inline assembly solutionstatic void __movsb(void* dst, const void* src, size_t size) { __asm__ __volatile__("rep movsb" : "+D"(dst), "+S"(src), "+c"(size) : : "memory"); }which I found here hero.handmade.network/forums/code-discussion/t/… -

BeeOnRope about 7 yearsIt seems like a reasonable answer could be that it was faster, or at least as fast in IvB (per some results referenced here), but that the associated micro-code doesn't necessarily get love in each generation and so it becomes slower than the explicit code, which always uses the core functionality of the CPU that is guaranteed to be in tune, play nice with prefetching, etc. For example see Andy Glew's comment here:

-

BeeOnRope about 7 yearsThe big weakness of doing fast strings in microcode was ... and (b) the microcode fell out of tune with every generation, getting slower and slower until somebody got around to fixing it. - Andy Glew

-

BeeOnRope about 7 yearsIt is also interesting to note that fast string performance is actually very relevant in, for example, Linux kernel methods like

read()andwrite()which copy data into user-space: the kernel can't (doesn't) use any SIMD registers or SIMD code, so for a fast memcpy it either has to use 64-bit load/stores, or, more recently it will userep movsborrep rmovdif they are detected to be fast on the architecture. So they get a lot of the benefit of large moves without explicitly needing to usexmmorymmregs. -

BeeOnRope about 7 yearsOut of curiosity, are you calculating your bandwidth figures as 2 times the size of the

memcpylength or as 1 times? I.e., is your figure a "memory bandwidth" figure or a "memcpy bandwidth" figure? Of course it doesn't change the relative performance between the techniques, but it helps me compare with my system. -

Z boson about 7 years@BeeOnRope I am using 2 times the size of the

Z boson about 7 years@BeeOnRope I am using 2 times the size of thememcpylength i.e. the memory bandwidth. Since you have the same processor as me did you test my code in my quesiton on it? If so did you get the same result? You have to compile with-mavxdue to this bug. Try the exact compiler options I usedgcc -O3 -march=native -fopenmp foo.c. -

BeeOnRope about 7 years@Zboson - intesting - then your numbers look consistent with my box for the NT

memcpy(about 13 GB copied, aka 26 GB/s BW), but not for therep movsbwhere I see more than 20 GB/s BW, but you report only 15. I will try your code. BTW, I assume you disabled turbo for your tests (which is why you report 2.6 GHz?). I did, although I should have mentioned in explicitly in my answer. -

BeeOnRope about 7 years@Zboson - I get rough 19.5 GB/s and 23.5 GB/s for

rep movsbandmemcpyrespectively with your code. Very oddly inconsistent with your results, since we have the same CPU. There are all sorts of interesting stuff like "memory efficient turbo" that can play heavily here - let me play a bit. That's with turbo off. With turbo on I get roughly 20 vs 25. Turbo seems to help thememcpyversion more than therep movsversion. -

BeeOnRope about 7 yearsI get results closer to yours if I change to the

powersavegovernor: about 17.5 GB/s vs 23.5 GB/s. I.e., therep movsbperf drops but thememcpydoesn't. Indeed, repeated measurements show that with thepowersavegovernor, my CPU only runs at about 2.3 GHz for themovsbenchmark, but at 2.6 GHz for thememcpyone. So a significant part of the delta in your case is probably explained by power management. Basically power-efficient turbo (hereafter, PET) uses a heuristic to determine if the code is "memory stall bound" and ramps down the CPU since a high frequency is "pointless". -

BeeOnRope about 7 yearsSo

rep movsgets unfavorable treatment (performance wise, perhaps it saves power, however!) from PET heuristic, perhaps because the heuristic sees it has a long stall on one instruction, while the highly unrolled AVX version is still executing lots of instructions. I have seen this before while testing some algorithm across a range of parameter values: at some value there is a much larger than expected drop in performance: but what happens is that suddenly the PET threshold was reached and the CPU ramped down (which still hurts performance). -

Z boson about 7 years@BeeOnRope I did not disable Turbo in my tests. Why would that matter for memory bandwidth bound operations? Anyway, I just disabled it (I verified that it was disabled as well by running a custom frequency measuring tool) and it does not appear to make much of a difference. But changing the power management does make a difference. With

Z boson about 7 years@BeeOnRope I did not disable Turbo in my tests. Why would that matter for memory bandwidth bound operations? Anyway, I just disabled it (I verified that it was disabled as well by running a custom frequency measuring tool) and it does not appear to make much of a difference. But changing the power management does make a difference. Withperformancerep movsbgoes as high as 20 GB/s but withpowersaveit gets max 17 GB/s. I added this info to the end of my question. -

Z boson about 7 years@BeeOnRope how does PET matter when turbo is disabled? I went into the Bios and disabled SpeedStep. Shouldn't that run the CPU at a flat frequency? Why would

Z boson about 7 years@BeeOnRope how does PET matter when turbo is disabled? I went into the Bios and disabled SpeedStep. Shouldn't that run the CPU at a flat frequency? Why wouldpowersaveorperformancematter in this case if the CPU is running at a constant frequency? -

BeeOnRope about 7 yearsWell PET is probably a misnomer since apparently it doesn't just affect frequencies above nominal, but rather the whole DVFS range. That makes sense - it isn't like nominal freq is particularly special: if it makes sense to reduce to 2.6 GHz, it may also make sense to reduce to 2.3 or 1.0 or whatever. Turning off SpeedStep will probably work, but it's easy to verify, just run

grep /proc/cpu MHza few times and observe the values, or fire upturbostat. I ran the benchmark likeperf ./a.outto make my observation: it tells you the effect GHz for the process. -

BeeOnRope about 7 yearsIf your CPU is locked,

powersaveandperformanceperhaps shouldn't matter (there is still the un-discussed matter of uncore frequencies, which are independent, but no off-the-shelf tool reports them, as far as I know). Furthermore there may be other power saving aspects not directly related to frequency that is controlled by that setting (e.g., the aggressiveness of moving to higher C-states?). -

BeeOnRope about 7 yearsAbout turbo, it can make a significant difference for memory related things, since it affects the uncore performance and so impacts latency since much of the latency of a memory access is uncore work, which is sped up by turbo (but this is also complex due to the interaction between the power-saving heuristics, and the fact that the uncore and core frequences are partly independent). Since our chips seems to hit the true DRAM BW limit (i.e., not a concurrency-occupancy limit per the discussion in "latency bound platforms" below), it may not apply and I don't see much effect on my CPU. @Zboson

-

Z boson about 7 years@Art do you think you could run tinybenchmark (see BeeOnRope's answer) on your Ivy Bridge system and add the results to the end of my question?

Z boson about 7 years@Art do you think you could run tinybenchmark (see BeeOnRope's answer) on your Ivy Bridge system and add the results to the end of my question? -

Z boson about 7 years@BeeOnRope. I checked the frequency. With

Z boson about 7 years@BeeOnRope. I checked the frequency. Withpowersavethe CPU still idles at 0.8 GHz even with SpeedStep disabled. It's only withperformancethat the CPU is locked at 2.6 GHz with SpeedStep disabled. See the update at the end of my question. -

BeeOnRope about 7 years@Zboson - right, I recall something similar: the

intel_pstatedriver will still use P-states to control frequency even if SS is off in the BIOS. You can also useintel_pstate=disableas a boot parameter to disable it completely, allowing you to use the default power management, including the "user" governer that sets the frequency at whatever you want (no turbo freqs tho). Interesting trivia: withoutintel_pstate, my chip would never run above 3.4 GHz (i.e., the last 100 MHz of turbo were inaccessible). Withintel_pstate, no problem. -

BeeOnRope about 7 yearsBTW, there is a whole interesting rathole to descend with this power saving stuff: e.g., running two benchmarks side-by-side can result in more than 2x total throughput (i.e., "superlinear scaling" with more threads, which is really weird) because one benchmark keeps core or uncore frequency high which helps the other one, but perhaps it deserves a whole separate question. I think powersaving is one part of somewhat poorer

rep movsbperformance, but not the whole story (even at equal MHz it's slower). -

BeeOnRope about 7 yearsBTW, I measured the power use of

rep movsb(in powersave at the lower freq) versusmemcpy, but the power (i.e., watts) was only slightly less, and total energy consumed was higher (since it runs longer). So there is no power-saving benefit... -

Iwillnotexist Idonotexist almost 7 years@BeeOnRope Clearly there should be a chatroom RFB-x86 (Request For Benchmarks - x86) for the sole purpose of reverse-engineering the factors driving x86 processor performance.

-

BeeOnRope almost 7 years@Zboson - I am using commands like

sudo cpupower -c 0,1,2,3 frequency-set -g performance- based on my understandingcpuopweris the most-up-to-date and maintained of the commands for power management (it can also do things like adjust the "perf bias" on recent Intel chips). Using that command, switching toperformancedoesn't seem to affect turbo. I use this script to enable/disable turbo, although it seems perhaps/sys/devices/system/cpu/intel_pstate/no_turbois simpler if you are usingintel_pstate. -

Noah almost 3 years@BeeOnRope re: 'Basically power-efficient turbo (hereafter, PET) uses a heuristic to determine if the code is "memory stall bound" and ramps down the CPU since a high frequency is "pointless"'. Any further reading on this? Not finding any of the keywords in Optimization Manual.

-

BeeOnRope almost 3 years@Noah - it was something I discovered here on SO while answering a question about why

rep movsbwas slower than explicit copy/store instructions: this effect explained some of the gap. I'm not aware of any discussion of it outside SO: you could search for that question and link it if you find it. I wasn't able to find it but didn't spend much time on it and the SO search returns suspiciously few results.

-

-

fuz about 7 yearsActually, with enhanced rep movsb, using rep movsd is slower. Please read what this feature means before writing answers like this.

-

David Hoelzer about 7 yearsHmm... Thanks. I removed the rep movsd comment.

David Hoelzer about 7 yearsHmm... Thanks. I removed the rep movsd comment. -

Z boson about 7 yearsI discussed a custom

Z boson about 7 yearsI discussed a custommemcpyhere. One comment is "Note that on Ivybridge and Haswell, with buffers to large to fit in MLC you can beat movntdqa using rep movsb; movntdqa incurs a RFO into LLC, rep movsb does not." I can get something as good asmemcpywithmovntdqa. My question is how to I do as good as that or better withrep movsb? -