FFmpeg decoding .mp4 video file

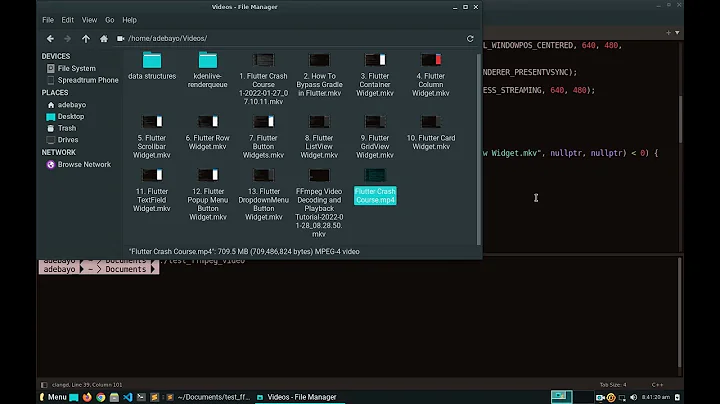

See the "detailed description" in the muxing docs. You:

- set ctx->oformat using av_guess_format

- set ctx->pb using avio_open2

- call avformat_new_stream for each stream in the output file. If you're re-encoding, this means adding one output stream for each stream of the input file.

- call avformat_write_header

- call av_interleaved_write_frame in a loop

- call av_write_trailer

- close the file (avio_close) and free all allocated memory


Sir DrinksCoffeeALot

Updated on June 04, 2022

Comments

-

Sir DrinksCoffeeALot almost 2 years

I'm working on a project that needs to open an .mp4 file, read its frames one by one, decode them, re-encode them with a better type of lossless compression, and save them to a file.

Please correct me if I'm wrong about the order of operations, because I'm not 100% sure how this particular thing should be done. From my understanding it should go like this:

1. Open the input .mp4 file
2. Find stream info -> find the video stream index
3. Copy the codec pointer of the found video stream index into an `AVCodecContext` type pointer
4. Find the decoder -> allocate the codec context -> open the codec
5. Read frame by frame -> decode the frame -> encode the frame -> save it into a file

So far I've encountered a couple of problems. For example, if I want to save a frame using the `av_interleaved_write_frame()` function, I can't open the input .mp4 file using `avformat_open_input()`, since it's going to populate the `filename` part of the `AVFormatContext` structure with the input file name and therefore I can't "write" into that file. I've tried a different solution using `av_guess_format()`, but when I dump the format using `dump_format()` I get nothing, so I can't find stream information about which codec it is using.

So if anyone has any suggestions, I would really appreciate them. Thank you in advance.

-

Eugene about 8 years: Are you trying to convert a .mp4 to a series of lossless still images?

-

Sir DrinksCoffeeALot about 8 years: Well, I'm trying to split an .mp4 file into frames, compress them, and send them through a network. On the other end those frames will get concatenated back into an .mp4 file.

-

Eugene about 8 years: What's the point of that? And compressing lossless images is pointless. The mp4 format is already designed as an efficient container. This process is slow, processor intensive, and bandwidth inefficient, etc.

-

Sir DrinksCoffeeALot about 8 years: It's because I need to find out the differences between multiple codecs. The main goal is to split a 4K video into frames, compress them, and send them through a 1 Gbit connection. So I need to find out which codec would be the best compression-wise. As an example, I have an HD file on which I need to run experiments.


-

Sir DrinksCoffeeALot about 8 years: I'm not using a static build of ffmpeg; I'm using their API in my own project.

-

Eugene about 8 years: FFmpeg is the command line utility. Which library and language are you using? Libavcodec?

-

Sir DrinksCoffeeALot about 8 years: I'm using libavcodec/format/device/filter/util/postproc/swscale. I'm writing in C. Basically it's an old dev build of FFmpeg.

-

Gyan about 8 years: FFmpeg is a project containing a bunch of libraries for A/V manipulation and an optional set of binaries such as ffmpeg, ffplay, etc., which allow you to use the functions available in the libraries.

-

Sir DrinksCoffeeALot about 8 years: Do you know how I can copy the content of an `AVFrame` structure to a `char*` buffer, so I can send that buffer using winsock2's `send()` function to a different process? I'm trying to create local server-client communication to measure the time needed to send a frame using different compression methods.

-

Ronald S. Bultje about 8 years: `for (y = 0; y < height; y++) memcpy(ptr + y * width, frame->data[0] + y * frame->linesize[0], width)`. You can also use `avcodec_encode_video2()` to compress frames (instead of gzip or so, which is what you're probably trying to do).

-

Sir DrinksCoffeeALot about 8 years: Hmm, if I do it like that rather than copying the whole `AVFrame` structure, considering I'm copying raw video frames, would I be able to encode that frame (based only on `frame->data[0]` and `frame->linesize[0]`) on the recipient side using the `avcodec_encode_video2()` function?

-

Sir DrinksCoffeeALot about 8 years: About compression, I was planning to use LZW. I will take a closer look at `avcodec_encode_video2()` compression-wise. By the way, thank you for your responses, I really appreciate it.

-

Ronald S. Bultje about 8 years: You would do the same for `data[1-2]` with `linesize[1-2]` (assuming some planar YUV format), sorry, forgot to mention that; or rather, it depends on the number of planes for your pixfmt (see pixdesc).
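A minimal sketch of the row-by-row copy suggested above, extended to all three planes of a planar YUV 4:2:0 picture. It uses plain C arrays rather than FFmpeg's `AVFrame`; the `data` and `linesize` parameters are only meant to mirror the fields of the same name. The helper names `copy_plane` and `pack_yuv420p` are hypothetical and not part of any FFmpeg API, and the chroma dimensions assume 4:2:0 subsampling (other pixel formats have different plane counts and sizes).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy one plane row by row into a tightly packed destination.
 * linesize is the distance in bytes between row starts in src; it may be
 * larger than row_bytes because of padding. Returns the bytes written. */
size_t copy_plane(uint8_t *dst, const uint8_t *src, ptrdiff_t linesize,
                  int row_bytes, int rows)
{
    assert(row_bytes >= 0 && rows >= 0);
    for (int y = 0; y < rows; y++)
        memcpy(dst + (size_t)y * (size_t)row_bytes,
               src + (ptrdiff_t)y * linesize,
               (size_t)row_bytes);
    return (size_t)row_bytes * (size_t)rows;
}

/* Pack Y, then U, then V into dst with no padding between rows.
 * Assumption: 8-bit planar YUV 4:2:0, so both chroma planes are half the
 * luma size in each dimension (rounded up). dst must hold at least
 * width*height + 2*cw*ch bytes. Returns the total bytes written. */
size_t pack_yuv420p(uint8_t *dst, const uint8_t *const data[3],
                    const int linesize[3], int width, int height)
{
    int cw = (width + 1) / 2;   /* chroma width  */
    int ch = (height + 1) / 2;  /* chroma height */
    size_t n = 0;

    n += copy_plane(dst + n, data[0], linesize[0], width, height);
    n += copy_plane(dst + n, data[1], linesize[1], cw, ch);
    n += copy_plane(dst + n, data[2], linesize[2], cw, ch);
    return n;
}
```

The receiver needs the width, height, and pixel format to split the packed buffer back into planes, so those values would have to be sent alongside the pixel data.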

-

Sir DrinksCoffeeALot about 8 years: I succeeded in sending a single decoded frame from one process to another using the send/recv functions. I determined the size of the `char*` buffer needed to store a frame of type `PIX_FMT_RGB24` using `avpicture_get_size()`. Copying `frame->data[0]` was done like this: `memcpy(buffer + y * frame->linesize[0], frame->data[0] + y*frame->linesize[0], width * 3);` and writing to a `.ppm` file on the client side was done using: `fwrite(buffer + y * width * 3, 1, width * 3, pf);`. Basically I just needed to multiply `width` from your code by 3 (since it's RGB24) or use the exact value stored in `frame->linesize[0]`.

-

Sir DrinksCoffeeALot about 8 years: I have one more question before I hopefully stop bugging you about FFmpeg. For example, if I want to use the LZW compression method which is already implemented in FFmpeg, how would I do it using the `avcodec_encode_video2()` function? I guess I need to populate the `AVCodecContext` variable with different parameters before calling the encode function?

-

Ronald S. Bultje about 8 years: ffmpeg has no vanilla lzw encoder. There's a tiff and a gif encoder, which use lzw internally...

-

Sir DrinksCoffeeALot about 8 years: Yeah, I saw that tiff uses LZW internally; I was hoping I could somehow integrate their source into mine. I'll try to do that today.
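The RGB24 transfer described a few comments earlier (pack the frame into a buffer, then write it out as a `.ppm` on the other side) could look roughly like the sketch below. It is plain C with no FFmpeg dependency; `pack_rgb24` and `write_ppm` are hypothetical helper names. One deliberate difference from the `memcpy` quoted in that comment: the destination offset here uses `width * 3` rather than `linesize[0]`, so the packed layout matches what the `fwrite` loop reads. The two only coincide when `linesize[0] == width * 3`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Copy an RGB24 image with row stride `linesize` into a tightly packed
 * buffer of width*3*height bytes. Returns the number of bytes written. */
size_t pack_rgb24(uint8_t *dst, const uint8_t *src, ptrdiff_t linesize,
                  int width, int height)
{
    assert(width > 0 && height > 0);
    size_t row_bytes = (size_t)width * 3;  /* 3 bytes per pixel */

    for (int y = 0; y < height; y++)
        memcpy(dst + (size_t)y * row_bytes,
               src + (ptrdiff_t)y * linesize,
               row_bytes);
    return row_bytes * (size_t)height;
}

/* Write a packed RGB24 buffer as a binary PPM (P6) image.
 * The stream should be opened in binary mode.
 * Returns 0 on success, -1 on a write error. */
int write_ppm(FILE *f, const uint8_t *packed, int width, int height)
{
    size_t row_bytes = (size_t)width * 3;

    if (fprintf(f, "P6\n%d %d\n255\n", width, height) < 0)
        return -1;
    for (int y = 0; y < height; y++)
        if (fwrite(packed + (size_t)y * row_bytes, 1, row_bytes, f) != row_bytes)
            return -1;
    return 0;
}
```

In this arrangement the sender would call `pack_rgb24` before `send()`, and the receiver would pass the received bytes straight to `write_ppm`.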
