Gevent pool with nested web requests

10,328

Solution 1

I think the following should get you what you want. I'm using BeautifulSoup in my example instead the link striping stuff you had.

from bs4 import BeautifulSoup

import requests

import gevent

from gevent import monkey, pool

monkey.patch_all()

jobs = []

links = []

p = pool.Pool(10)

urls = [

'http://www.google.com',

# ... another 100 urls

]

def get_links(url):

r = requests.get(url)

if r.status_code == 200:

soup = BeautifulSoup(r.text)

links.extend(soup.find_all('a'))

for url in urls:

jobs.append(p.spawn(get_links, url))

gevent.joinall(jobs)

Solution 2

gevent.pool will limit the concurrent greenlets, not the connections.

You should use session with HTTPAdapter

connection_limit = 10

adapter = requests.adapters.HTTPAdapter(pool_connections=connection_limit,

pool_maxsize=connection_limit)

session = requests.session()

session.mount('http://', adapter)

session.get('some url')

# or do your work with gevent

from gevent.pool import Pool

# it should bigger than connection limit if the time of processing data

# is longer than downings,

# to give a change run processing.

pool_size = 15

pool = Pool(pool_size)

for url in urls:

pool.spawn(session.get, url)

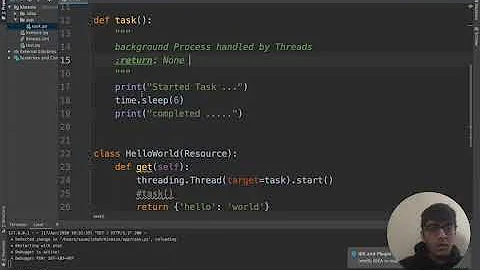

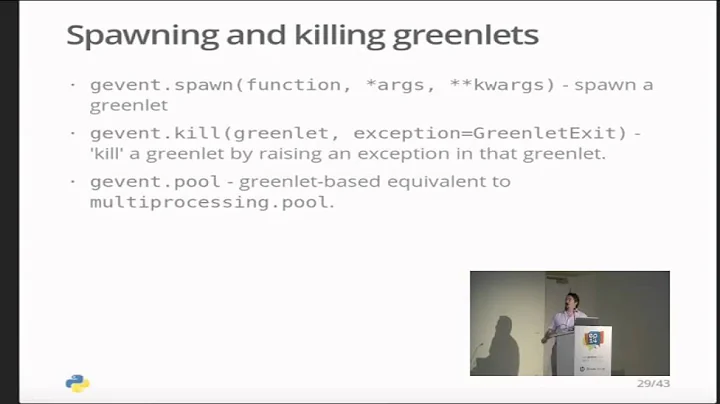

Related videos on Youtube

Comments

-

DominiCane almost 2 years

I try to organize pool with maximum 10 concurrent downloads. The function should download base url, then parser all urls on this page and download each of them, but OVERALL number of concurrent downloads should not exceed 10.

from lxml import etree import gevent from gevent import monkey, pool import requests monkey.patch_all() urls = [ 'http://www.google.com', 'http://www.yandex.ru', 'http://www.python.org', 'http://stackoverflow.com', # ... another 100 urls ] LINKS_ON_PAGE=[] POOL = pool.Pool(10) def parse_urls(page): html = etree.HTML(page) if html: links = [link for link in html.xpath("//a/@href") if 'http' in link] # Download each url that appears in the main URL for link in links: data = requests.get(link) LINKS_ON_PAGE.append('%s: %s bytes: %r' % (link, len(data.content), data.status_code)) def get_base_urls(url): # Download the main URL data = requests.get(url) parse_urls(data.content)How can I organize it to go concurrent way, but to keep the general global Pool limit for ALL web requests?

-

DominiCane about 11 yearsThe problem is that I have 2 types of urls, and each one requires different function to work with it.

-

Ellochka Cannibal about 11 yearsIf you need different processors(consumers) for urls, then wrap the logic in the producer, according to the type of url you should spawn a specific function. But they all have one queue.

-

ARF over 10 yearsCould you please explain why you use gevent.pool in addition to the connection pool already provided by by HTTPAdapter. Why not simply use gevent.spawn(...)? Many thanks.