How do I move a Linux software RAID to a new machine?

Solution 1

You really need the original mdadm.conf file. Since you don't have it, you'll have to recreate it. Before doing anything, read up on mdadm via its manual page. Why risk losing your data to a command you don't fully understand?

That being said, this advice is at your own risk. You can easily lose all your data with the wrong commands. Before you run anything, double-check the ramifications of the command. I cannot be held responsible for data loss or other issues related to any actions you take - so double check everything.

You can try this:

mdadm --assemble --scan --verbose /dev/md{number} /dev/{disk1} /dev/{disk2} /dev/{disk3} /dev/{disk4}

This should give you some information to start working with, along with the array ID. It will also create a new array device /dev/md{number}; from there you should be able to find any mounts. Do not use the --auto option: the man page wording implies that under certain circumstances it may overwrite the array settings on the drives. This is probably not the case, and the page probably needs to be rewritten for clarity, but why chance it?

If the array assembles correctly and everything is "normal", be sure to get your mdadm.conf written and stored in /etc, so you'll have it at boot time. Include the new ID from the array in the file to help it along.
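
The sequence described above (inspect the members, assemble, then record the array for mdadm.conf) can be sketched as a small helper that only prints the commands, so each one can be reviewed before it is run. The function name print_reassembly_plan and the device names in the usage line are assumptions for illustration. The assemble line lists the member devices explicitly and so leaves out --scan.

```shell
#!/bin/sh
# Sketch only: prints commands for review and runs nothing itself.
# print_reassembly_plan is a hypothetical helper name.
# usage: print_reassembly_plan MD_DEVICE MEMBER...
print_reassembly_plan() {
    md_dev=$1
    shift
    # 1. Inspect the RAID superblock on every member (read-only).
    for member in "$@"; do
        printf 'mdadm --examine %s\n' "$member"
    done
    # 2. Assemble the array from the explicitly listed members.
    printf 'mdadm --assemble --verbose %s %s\n' "$md_dev" "$*"
    # 3. Print ARRAY lines that can be copied into mdadm.conf.
    printf 'mdadm --detail --scan\n'
}
```

For example, print_reassembly_plan /dev/md0 /dev/hde1 /dev/hdg1 prints two --examine lines, one --assemble line and the final --detail --scan line, which can then be run one at a time as root.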

Solution 2

Just wanted to add my full answer for Debian at least.

- Install the RAID manager via -->

sudo apt-get install mdadm

- Scan for the old RAID disks via -->

sudo mdadm --assemble --scan

- At this point, I like to check blkid and mount the RAID manually to confirm.

blkid

mount /dev/md0 /mnt

- Append info to mdadm.conf via -->

mdadm --detail --scan >> /etc/mdadm/mdadm.conf

- Update initramfs via -->

update-initramfs -u

Troubleshooting:

Make sure the output of mdadm --detail --scan matches your /etc/mdadm/mdadm.conf

nano /etc/mdadm/mdadm.conf

ARRAY /dev/md/0 level=raid5 num-devices=3 metadata=00.90 UUID=a44a52e4:0211e47f:f15bce44:817d167c
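
One way to do that comparison without reading both by eye is to check that every array UUID reported by the scan also appears on an ARRAY line in the config file. This is a minimal sketch; the helper names extract_uuids and missing_uuids and the temporary file path are assumptions, not part of mdadm.

```shell
#!/bin/sh
# Sketch: report array UUIDs that mdadm sees but mdadm.conf does not list.
# extract_uuids and missing_uuids are hypothetical helper names.

# Read mdadm.conf-style text on stdin, print the UUID of each ARRAY line.
extract_uuids() {
    sed -n 's/^ARRAY .*UUID=\([0-9a-fA-F:]*\).*/\1/p'
}

# usage: missing_uuids SCAN_OUTPUT_FILE CONF_FILE
# Prints each UUID found in the scan output that has no ARRAY line in the conf.
missing_uuids() {
    extract_uuids < "$1" | while read -r uuid; do
        grep -q "^ARRAY .*UUID=$uuid" "$2" || printf '%s\n' "$uuid"
    done
}
```

Typical use would be mdadm --detail --scan > /tmp/md-scan.txt followed by missing_uuids /tmp/md-scan.txt /etc/mdadm/mdadm.conf. No output means every assembled array already has an entry.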

Example FSTAB

/dev/md0 /mnt/mdadm ext4 defaults,nobootwait,nofail 0 2

https://unix.stackexchange.com/questions/23879/using-mdadm-examine-to-write-mdadm-conf/52935#52935

Solution 3

I ended up here after upgrading the OS from CentOS 6.2 to CentOS 8.2 on a machine with a 4-disk data RAID0 that is separate from the OS disk.

I was able to use Avery's accepted answer above (https://serverfault.com/a/32721/551746), but ran into problems due to the RAID0 layout confusion introduced in kernel 3.14.

Following this post (https://www.reddit.com/r/linuxquestions/comments/debx7w/mdadm_raid0_default_layout/) I had to change the default layout (/sys/module/raid0/parameters/default_layout) in order for the new Kernel to use the old Raid0 layout.

mdadm --detail /dev/mdx

echo 2 > /sys/module/raid0/parameters/default_layout # study the previous link to see if 2, 1 or 0 needs to be echoed here.

mdadm --assemble --scan

If that works, make the raid0 default layout of 2 (or 1 or 0) persist across reboots by editing /etc/default/grub and adding this kernel parameter to GRUB_CMDLINE_LINUX:

raid0.default_layout=2

Rebuild grub.cfg, add the array to /etc/fstab and reboot!
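
The /etc/default/grub edit can be sketched as a helper that prints a modified copy of the file instead of editing it in place, so the result can be inspected first. The name add_kernel_param is hypothetical, and the sketch assumes the file has a single double-quoted GRUB_CMDLINE_LINUX="..." line and that the parameter contains no / or & characters.

```shell
#!/bin/sh
# Sketch: print a copy of a grub defaults file with one extra kernel parameter.
# add_kernel_param is a hypothetical helper name.
# usage: add_kernel_param FILE PARAM
add_kernel_param() {
    file=$1
    param=$2
    if grep -q "^GRUB_CMDLINE_LINUX=.*$param" "$file"; then
        # Parameter already present: print the file unchanged.
        cat "$file"
    else
        # Insert the parameter just before the closing quote.
        sed "s/^\(GRUB_CMDLINE_LINUX=\"[^\"]*\)\"/\1 $param\"/" "$file"
    fi
}
```

Something like add_kernel_param /etc/default/grub raid0.default_layout=2 > /tmp/grub.new lets you diff the result against the original before copying it back. On CentOS with BIOS boot, grub.cfg is usually regenerated with grub2-mkconfig -o /boot/grub2/grub.cfg; the output path differs on EFI systems.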

Solution 4

mdadm -Ac partitions -m 0 /dev/md0

Scan all partitions and devices listed in /proc/partitions and assemble /dev/md0 out of all such devices with a RAID superblock with a minor number of 0.

If the assembly was successful, you can run mdadm --detail --scan >> /etc/mdadm/mdadm.conf so the array is picked up at boot.
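
To see which devices the "partitions" keyword makes mdadm consider, the names in /proc/partitions can be listed directly. This is a rough sketch; list_partition_devices is a hypothetical helper name, and it assumes the usual four-column layout of /proc/partitions with a header line followed by a blank line.

```shell
#!/bin/sh
# Sketch: list the block devices named in /proc/partitions.
# list_partition_devices is a hypothetical helper name.
# usage: list_partition_devices [FILE]   (FILE defaults to /proc/partitions)
list_partition_devices() {
    # Skip the header and the blank line, then print the name column as /dev/NAME.
    awk 'NR > 2 && NF == 4 { print "/dev/" $4 }' "${1:-/proc/partitions}"
}

# Long-option spelling of the command above, for readability:
#   mdadm --assemble --config=partitions --super-minor=0 /dev/md0
```

Each listed device can then be checked with mdadm --examine to see whether it carries a RAID superblock.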

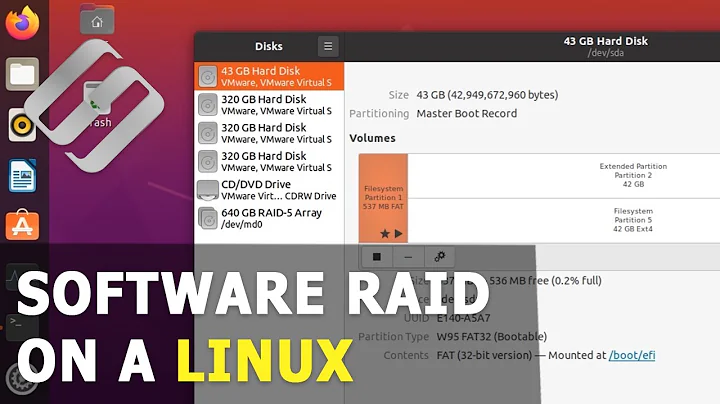

Related videos on Youtube

romandas

Updated on September 17, 2022

Comments

-

romandas over 1 year

I have a newly built machine with a fresh Gentoo Linux install and a software RAID 5 array from another machine (4 IDE disks connected to off-board PCI controllers). I've successfully moved the controllers to the new machine; the drives are detected by the kernel; and I've used mdadm --examine and verified that the single RAID partition is detected, clean, and even in the "right" order (hde1 == drive 0, hdg1 == drive 1, etc).

What I don't have access to is the original configuration files from the older machine. How should I proceed to reactivate this array without losing the data?

-

Spence almost 15 years

+1 - Right on! I've moved several RAID-1 and RAID-5 sets around between Linux machines. One thing I'm not sure about is where you're seeing the info about "--auto". From the manual page on a CentOS 5.1 machine, I'm only seeing that "--auto" creates a /dev/mdX entry (or entries) for the array. I'm not seeing anything that might indicate that it would write to the drives. (In fact, "--auto=yes" is the default in the mdadm on CentOS 5.1 if "--auto" is not specified.) "--update" can be your friend if you need to move an array to a different mdX number from the original specified in the superblock.

-

romandas almost 15 years

Excellent, I can successfully mount the array. The only lingering issue is that the RAID doesn't come up after a reboot; I have to rerun mdadm -Av /dev/md0. Any idea why?

-

Avery Payne almost 15 years

Did you recreate the /etc/mdadm.conf file? The system will look in this file at boot time to find arrays.

-

romandas almost 15 years

I did. What made the difference was recompiling the kernel with CONFIG_MD_AUTO. I hadn't initially because I thought there was a way mdadm would do it instead. I read somewhere that the kernel code isn't as robust as using mdadm to automount, but I can't find anything to back that up anymore.

-

Maarten almost 4 years

If you do have the mdadm.conf file, see if you can also find the old fstab file. From both, take the lines that refer to the old array and copy them into the corresponding files on the new machine. I did this when I reused an array from another server, and after 2 reboots it worked perfectly.