How do I use robots.txt to disallow crawling for only my subdomains?

Solution 1

The robots.txt file needs to go in the top level directory of you webserver. If your main domain and each subdomain are on different vhosts then you can put it in the top level directory of each subdomain and include something like

User-agent: *

Disallow: /

Where the robots.txt is located depends upon how you access a particular site. Given a URL like

http://example.com/somewhere/index.html

a crawler will discard everything to the right of the domain name and append robots.txt

http://example.com/robots.txt

So you need to put your robots.txt in the directory pointed to by the DocumentRoot directive for example.com and to disallow access to /somewhere you need

User-agent: *

Disallow: /somewhere

If you have subdomains and you access them as

http://subdomain.example.com

and you want to disallow access to the whole subdomain then you need to put your robots.txt in the directory pointed to by the DocumentRoot directive for the subdomain etc.

Solution 2

You need to put robots.txt in you root directory

The Disallow rules are not domian/sub-domain specific and will apply to all urls

For example: Lets assume you are using a sub.mydomain.com and mydomain.com (both are linked to the same ftp folder). For this setup, if you set a Disallow: /admin/ rule then all URL sub.mydomain.com/admin/ and in mydomain.com/admin/ will be Disallowed.

But if sub.mydomain.com is actually links no another site (and also to another ftp folder) then you`ll need to create another robots.txt and put it in the root of that folder.

Solution 3

You have to put it in your root directory, otherwise it won't be found.

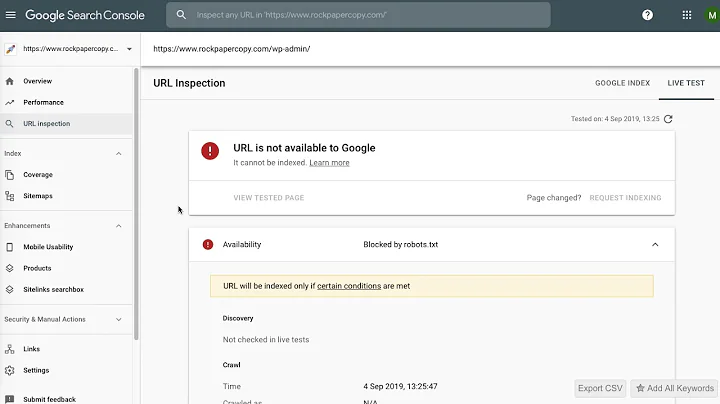

Related videos on Youtube

tkbx

Updated on September 18, 2022Comments

-

tkbx over 1 year

If I want my main website to on search engines, but none of the subdomains to be, should I just put the "disallow all" robots.txt in the directories of the subdomains? If I do, will my main domain still be crawlable?

-

tkbx over 11 yearsWould this work?

User-agent: *; Allow: *; Disallow: /subdomains? -

user9517 over 11 yearsIf you access your subdomains as example.com/subdomains/subdomain1 etc then you shouldn't need the allow as everything not excluded is allowed by default.

-

tkbx over 11 yearsOK, so within the server, I have my root files and /Subdomains with their own index.html's. I'm not sure how common this is, but on the service I use (1&1), an actual subdomain (sub.domain.com) can be linked to a folder. I can have sub.domain.com link to /Subdomains/SomeSite (and /Subdomains/SomeSite/index.html from there). Will disallowing /Subdomains work in this case?

-

user9517 over 11 yearsIt's all about how you access your main domain and it's subdomains. Take a look at robotstxt.org.