How do we analyse a loss vs epochs graph?

The first conclusion is, obviously, that the first model performs worse than the second. That is generally true as long as you use the same data for validation; if the models were trained and evaluated on different splits, the comparison does not necessarily hold.

Furthermore, to answer your question regarding overfitting/underfitting:

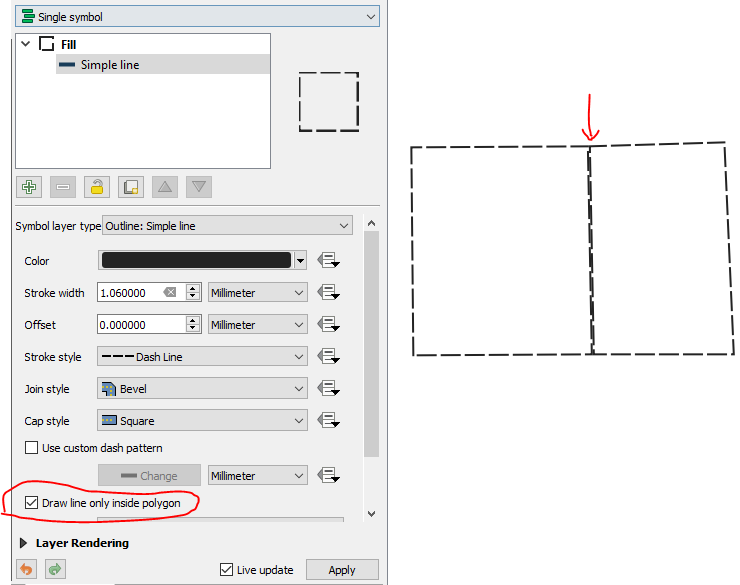

A typical graph for overfitting looks like this:

So, in your case, you clearly just reach convergence but don't actually overfit (this is great news!). On the other hand, you could ask yourself whether you could achieve even better results. I am assuming that you are decaying your learning rate, which makes the loss level off at some form of plateau. If that is the case, try reducing the learning rate less aggressively at first, and see if you can reduce your loss even further.
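
To make the distinction between "overfitting" and "just converging" a bit more concrete, here is a rough heuristic in plain Python, assuming you have logged the per-epoch training and validation losses as lists of positive numbers. The function name `diagnose_curves` and the thresholds `window`, `rise_tol`, and `plateau_tol` are hypothetical choices for illustration, not standard values; you would tune them to your own curves.

```python
from typing import Sequence


def diagnose_curves(
    train_loss: Sequence[float],
    val_loss: Sequence[float],
    window: int = 5,
    rise_tol: float = 0.01,
    plateau_tol: float = 0.005,
) -> str:
    """Label a pair of per-epoch loss curves (hypothetical heuristic).

    Assumes losses are positive (e.g. cross-entropy) and both lists have
    one entry per epoch.
    """
    if len(train_loss) != len(val_loss) or len(val_loss) <= window:
        raise ValueError("need equal-length curves longer than `window`")

    best_val = min(val_loss)
    # Overfitting: validation loss has climbed noticeably above its minimum
    # while the training loss is still going down.
    val_rise = (val_loss[-1] - best_val) / best_val
    train_still_falling = train_loss[-1] < train_loss[-1 - window]
    if val_rise > rise_tol and train_still_falling:
        return "overfitting"

    # Plateau: validation loss improved by less than `plateau_tol`
    # (relative) over the last `window` epochs.
    start = val_loss[-1 - window]
    recent_gain = (start - val_loss[-1]) / start
    if recent_gain < plateau_tol:
        return "plateau"
    return "still improving"


# Made-up curves: validation loss bottoms out and then rises.
train = [2.0, 1.5, 1.2, 1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
val = [2.1, 1.7, 1.45, 1.3, 1.25, 1.24, 1.26, 1.3, 1.35, 1.4]
print(diagnose_curves(train, val))  # overfitting
```

A "plateau" label corresponds to the situation described above: the model has converged, so further epochs mostly cost time unless you change something such as the learning-rate schedule.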

Moreover, if you still see a very long plateau, you can also consider stopping early, since you effectively gain no further improvement. Depending on your framework, there are implementations of this (for example, Keras has callbacks for early stopping, which are generally tied to the validation/testing error). If your validation error starts to increase, as in the image, you should consider using the point of lowest validation error for early stopping. One way I like to do this is to checkpoint the model every now and then, but only if the validation error has improved.
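
In Keras this is available as callbacks (`tf.keras.callbacks.EarlyStopping` with `monitor="val_loss"`, `patience`, and `restore_best_weights`, plus `ModelCheckpoint` with `save_best_only=True`). If your framework has nothing similar, a framework-free sketch of "stop after `patience` epochs without improvement, and checkpoint only on improvement" could look like the following. `PatienceStopper` is a hypothetical helper, not a library class, and the `state` argument stands in for whatever you would normally save (for example, a PyTorch `model.state_dict()`).

```python
import copy
from typing import Any, Optional


class PatienceStopper:
    """Hypothetical helper: stop when the validation loss has not improved
    for `patience` consecutive epochs, and keep a copy of the best state
    (i.e. checkpoint only on improvement)."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch: Optional[int] = None
        self.best_state: Any = None
        self.bad_epochs = 0

    def step(self, epoch: int, val_loss: float, state: Any) -> bool:
        """Record one epoch; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            # In practice you would save the model to disk here instead.
            self.best_state = copy.deepcopy(state)
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses that bottom out at epoch 3 and then rise.
val_losses = [1.9, 1.5, 1.3, 1.25, 1.27, 1.30, 1.33, 1.40]
stopper = PatienceStopper(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    weights = {"epoch": epoch}  # stand-in for the real model weights
    if stopper.step(epoch, loss, weights):
        stopped_at = epoch
        break
print(stopper.best_epoch, stopped_at)  # 3 6
```

After the loop, `stopper.best_state` holds the weights from the epoch with the lowest validation loss, which is the point you would restore when stopping early.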

Another inference you can make concerns the learning rate in general: if it is too large, your graph will likely be very "jumpy/jagged", whereas a very low learning rate will produce only a small decline in the error rather than a roughly exponential decay.

You can see a weak form of this by comparing the steepness of the decline in the first few epochs in your two examples, where the first one (with the lower learning rate) takes longer to converge.
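
If you want to compare runs with numbers rather than by eye, two simple statistics capture the symptoms described above: the fraction of epochs in which the loss went up (a rough "jaggedness" measure) and the relative drop over the first few epochs (a rough "steepness" measure). This is a hypothetical heuristic, not an established metric, and `lr_shape_stats` and `early` are illustrative names; there is no universal threshold, so it is most useful for comparing your own runs against each other.

```python
from typing import Sequence, Tuple


def lr_shape_stats(losses: Sequence[float], early: int = 3) -> Tuple[float, float]:
    """Return (fraction of epochs where the loss went up,
    relative loss drop over the first `early` epochs).

    A high first value hints at a learning rate that is too large
    (jagged curve); a low second value hints at one that is too small
    (slow initial decline). Both readings are rough heuristics.
    """
    if len(losses) <= early or len(losses) < 2:
        raise ValueError("need more than `early` epochs of losses")
    steps = [b - a for a, b in zip(losses, losses[1:])]
    upward = sum(1 for s in steps if s > 0) / len(steps)
    early_drop = (losses[0] - losses[early]) / losses[0]
    return upward, early_drop


# Made-up curves for illustration.
jagged = [2.0, 1.4, 1.6, 1.1, 1.3, 0.9, 1.2, 0.8]
slow = [2.0, 1.95, 1.9, 1.86, 1.82, 1.78, 1.75, 1.72]
print(lr_shape_stats(jagged))
print(lr_shape_stats(slow))
```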

Lastly, if your training and test error are very far apart (as in the first case), you might ask yourself whether you are actually describing or modeling the problem accurately; in some instances, you might realize that there is a problem in the (data) distribution that you overlooked. Since the second graph is way better, though, I doubt this is the case in your problem.
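
To put a number on "very far apart", one option is the relative gap between validation and training loss, averaged over the last few epochs to smooth out noise. The helper `relative_gap` and the `last=3` default are illustrative assumptions; what counts as a large gap depends on the task and the loss.

```python
from statistics import mean
from typing import Sequence


def relative_gap(train_loss: Sequence[float], val_loss: Sequence[float], last: int = 3) -> float:
    """(mean val - mean train) / mean train over the final `last` epochs."""
    t = mean(train_loss[-last:])
    v = mean(val_loss[-last:])
    return (v - t) / t


# Made-up final epochs: validation loss is twice the training loss.
print(relative_gap([1.2, 1.0, 1.0, 1.0], [2.3, 2.0, 2.0, 2.0]))  # 1.0
```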

Sleeba Paul

Machine Learning Engineer at Ericsson Global AI Accelerator. TEDx and PyData Speaker. ML Projects showcased at NeurIPS for Art, Florence Biennale, and many national media.

Updated on June 05, 2022

Comments


Sleeba Paul (4 months ago):

I'm training a language model, and the loss vs. epochs is plotted for each training run. I'm attaching two samples.

Obviously, the second one shows better performance. But from these graphs, when should we decide to stop training (early stopping)?

Can we understand overfitting and underfitting from these graphs or do I need to plot additional learning curves?

What are the additional inferences that can be made from these plots?


Sleeba Paul (about 4 years ago): Hey, thanks a lot.

> I'm forking the official PyTorch word-level language model example. They use 20 as the initial `lr` and decay it by one-fourth. I find this value a bit unusual; when I reduced the initial learning rate, the loss got stuck. What do you think about it?

> I find some validation sets are a bit difficult to fit. Maybe your second inference would be just right.

dennlinger (about 4 years ago): I think decaying by one-fourth is quite harsh, but that depends on the problem. (Careful, the following is my personal opinion.) I start with a much smaller learning rate (0.001-0.05) and then decay it by multiplying by 0.98-0.9995 after every epoch (more frequently in the case of large training data). I additionally increase the decay once a certain number of epochs has passed. Generally, playing around with the model and going with educated guesses is a good idea, or you can use random search / gradient boosting for the hyperparameters.
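
The two schedules discussed in this thread can be sketched side by side in plain Python: reading "decay by one-fourth" as dividing the learning rate by 4 whenever the validation loss stops improving, versus the per-epoch multiplicative decay described above, which becomes stronger after a given epoch. The function names, the `late_decay` and `switch_epoch` defaults, and the plateau epochs are hypothetical; in PyTorch itself, `torch.optim.lr_scheduler.ExponentialLR` (per-epoch `gamma`) and `ReduceLROnPlateau` (e.g. with `factor=0.25`) provide similar behavior.

```python
from typing import Container, List


def divide_on_plateau(initial_lr: float, epochs: int,
                      plateau_epochs: Container[int], factor: float = 4.0) -> List[float]:
    """Learning rate per epoch; divide by `factor` after each plateau epoch."""
    lrs = []
    lr = initial_lr
    for epoch in range(epochs):
        lrs.append(lr)
        if epoch in plateau_epochs:  # validation loss did not improve this epoch
            lr /= factor
    return lrs


def gentle_decay(initial_lr: float, epochs: int, decay: float = 0.98,
                 late_decay: float = 0.95, switch_epoch: int = 20) -> List[float]:
    """Learning rate per epoch; multiply by `decay`, then by the stronger
    `late_decay` from `switch_epoch` onwards."""
    lrs = []
    lr = initial_lr
    for epoch in range(epochs):
        lrs.append(lr)
        lr *= decay if epoch < switch_epoch else late_decay
    return lrs


# Toy values chosen so the output is easy to read.
print(divide_on_plateau(20.0, 4, plateau_epochs={1}))  # [20.0, 20.0, 5.0, 5.0]
print(gentle_decay(1.0, 4, decay=0.5, late_decay=0.25, switch_epoch=2))  # [1.0, 0.5, 0.25, 0.0625]
```

With a per-epoch factor of 0.98, the learning rate only halves after roughly 34 epochs (0.98^34 ≈ 0.50), whereas a single divide-by-4 step cuts it to a quarter at once, which is why the latter can feel harsh.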
