How do you extract a url from a string using python?

Solution 1

There may be a few ways to do this, but the cleanest is to use a regex:

>>> import re

>>> myString = "This is a link http://www.google.com"

>>> print(re.search(r"(?P<url>https?://[^\s]+)", myString).group("url"))

http://www.google.com
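re.search() returns None when the string contains no link, so chaining .group() directly raises AttributeError (which may be what the asker ran into in the comments). A minimal sketch of a wrapper that guards against that; first_url is a hypothetical helper name, and the pattern is the same one as above:

```python
import re
from typing import Optional

# Same pattern as above: "http" or "https", "://", then everything up to the next whitespace.
URL_PATTERN = re.compile(r"(?P<url>https?://[^\s]+)")


def first_url(text: str) -> Optional[str]:
    """Return the first http(s) URL in text, or None if there is none."""
    match = URL_PATTERN.search(text)
    # search() returns None when nothing matches, so only call .group() on a real match.
    return match.group("url") if match else None


print(first_url("This is a link http://www.google.com"))
print(first_url("No links here"))
```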

If there can be multiple links, you can use re.findall() instead:

>>> myString = "These are the links http://www.google.com and http://stackoverflow.com/questions/839994/extracting-a-url-in-python"

>>> print(re.findall(r'(https?://[^\s]+)', myString))

['http://www.google.com', 'http://stackoverflow.com/questions/839994/extracting-a-url-in-python']

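Because [^\s]+ only stops at whitespace, it runs into surrounding markup when the text is HTML. A sketch of a hypothetical variant that also stops at quotes and angle brackets, assuming those characters never appear unescaped inside the URLs you care about (the sample HTML is made up):

```python
import re

html = '<a href="http://google.com/">Click here</a> and http://stackoverflow.com'

# Pattern from above: stops only at whitespace, so it swallows '">Click'.
crude = re.findall(r"https?://[^\s]+", html)
print(crude)

# Hypothetical variant: also stop at double quotes, single quotes, < and >.
strict = re.findall(r"https?://[^\s\"'<>]+", html)
print(strict)
```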

Solution 2

In order to find a web URL in a generic string, you can use a regular expression (regex).

A simple regex for URL matching like the following should fit your case.

regex = r'('

# Scheme (HTTP, HTTPS, FTP and SFTP):

regex += r'(?:(https?|s?ftp):\/\/)?'

# www:

regex += r'(?:www\.)?'

regex += r'('

# Host and domain (including ccSLD):

regex += r'(?:(?:[A-Z0-9][A-Z0-9-]{0,61}[A-Z0-9]\.)+)'

# TLD:

regex += r'([A-Z]{2,6})'

# IP Address:

regex += r'|(?:\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})'

regex += r')'

# Port:

regex += r'(?::(\d{1,5}))?'

# Path (including any query string and fragment):

regex += r'(?:(\/\S+)*)'

regex += r')'
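The concatenated pieces can also live in a single pattern compiled with re.VERBOSE, which ignores whitespace and # comments inside the pattern. A sketch of the same regex written that way (URL_REGEX is just an illustrative name; \/ is written as plain /, which matches the same character):

```python
import re

URL_REGEX = re.compile(
    r"""
    (                                   # group 1: the whole URL
      (?:(https?|s?ftp)://)?            # group 2: optional scheme
      (?:www\.)?                        # optional "www."
      (                                 # group 3: host or IP address
        (?:(?:[A-Z0-9][A-Z0-9-]{0,61}[A-Z0-9]\.)+)  # host and domain labels
        ([A-Z]{2,6})                    # group 4: TLD
        |(?:\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})     # or an IPv4-like address
      )
      (?::(\d{1,5}))?                   # group 5: optional port
      (?:(/\S+)*)                       # group 6: path, query and fragment
    )
    """,
    re.IGNORECASE | re.VERBOSE,
)

match = URL_REGEX.search("This is a link http://www.google.com")
print(match.groups())
```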

To be even more precise, you can restrict the TLD section to valid TLDs (see the full list of valid TLDs at https://data.iana.org/TLD/tlds-alpha-by-domain.txt):

# TLD:

regex += r'(com|net|org|eu|...)'
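Typing that list by hand is error-prone. A sketch that builds the TLD group from text laid out like the IANA file, assuming one TLD per line and comment lines starting with #; tld_alternation is a hypothetical helper, and the sample is a tiny made-up excerpt rather than the real file:

```python
import re


def tld_alternation(tlds_file_text: str) -> str:
    """Build a regex group such as "(COM|NET|...)" from text laid out like
    tlds-alpha-by-domain.txt (assumed: one TLD per line, comments start with '#')."""
    tlds = [
        line.strip()
        for line in tlds_file_text.splitlines()
        if line.strip() and not line.startswith("#")
    ]
    # Longest first: alternation takes the first branch that lets the whole
    # pattern succeed, so "CO" listed before "COM" could cut "google.com" short.
    tlds.sort(key=len, reverse=True)
    return "(" + "|".join(re.escape(tld) for tld in tlds) + ")"


# Tiny made-up excerpt; the real file is much longer.
sample = "# comment line\nCO\nCOM\nEU\nNET\nORG\n"
tld_group = tld_alternation(sample)
print(tld_group)

match = re.search(r"google\." + tld_group, "see google.com", re.IGNORECASE)
print(match.group())
```

The result can be appended with regex += tld_alternation(...) in place of the hand-written TLD line.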

Then compile the regex and use it to search for matches:

import re

string = "This is a link http://www.google.com"

find_urls_in_string = re.compile(regex, re.IGNORECASE)

url = find_urls_in_string.search(string)

if url is not None:

    print("URL parts: " + str(url.groups()))

    print("URL: " + url.group(0).strip())

For the string "This is a link http://www.google.com", this outputs:

URL parts: ('http://www.google.com', 'http', 'google.com', 'com', None, None)

URL: http://www.google.com

If you change the input to a more complex URL, for example "This is also a URL https://www.host.domain.com:80/path/page.php?query=value&a2=v2#foo but this is not anymore", the output will be:

URL parts: ('https://www.host.domain.com:80/path/page.php?query=value&a2=v2#foo', 'https', 'host.domain.com', 'com', '80', '/path/page.php?query=value&a2=v2#foo')

URL: https://www.host.domain.com:80/path/page.php?query=value&a2=v2#foo

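NOTE: To find several URLs in one string, you can use the same regex with finditer() instead of search(). findall() also works, but because the pattern contains capturing groups it returns a tuple of groups for each match, with the full URL as the first element.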
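A sketch collecting every full URL with the Solution 2 regex, once via finditer() and once via element 0 of each findall() tuple (the sample text is made up):

```python
import re

# Solution 2's regex, the same pieces joined into one string.
regex = (
    r"((?:(https?|s?ftp):\/\/)?(?:www\.)?"
    r"((?:(?:[A-Z0-9][A-Z0-9-]{0,61}[A-Z0-9]\.)+)([A-Z]{2,6})"
    r"|(?:\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}))"
    r"(?::(\d{1,5}))?(?:(\/\S+)*))"
)
find_urls_in_string = re.compile(regex, re.IGNORECASE)

text = "Links: http://www.google.com and https://www.host.domain.com:80/path/page.php?query=value"

# finditer() yields match objects, so group(0) is always the full URL.
urls = [m.group(0) for m in find_urls_in_string.finditer(text)]
print(urls)

# findall() yields one tuple of groups per match; element 0 is the outer group, i.e. the whole URL.
urls_from_findall = [groups[0] for groups in find_urls_in_string.findall(text)]
print(urls_from_findall)
```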

Solution 3

Another easy way to extract URLs from text is urlextract, which does the work for you. Just install it via pip:

pip install urlextract

and then you can use it like this:

from urlextract import URLExtract

extractor = URLExtract()

urls = extractor.find_urls("Let's have URL stackoverflow.com as an example.")

print(urls) # prints: ['stackoverflow.com']

You can find more info on my GitHub page: https://github.com/lipoja/URLExtract

NOTE: It downloads the list of TLDs from iana.org to keep it up to date, so it may not suit programs without internet access.

Solution 4

This extracts all URLs, including their parameters; for some reason, none of the examples above worked for me:

import re

data = 'https://net2333.us3.list-some.com/subscribe/confirm?u=f3cca8a1ffdee924a6a413ae9&id=6c03fa85f8&e=6bbacccc5b'

WEB_URL_REGEX = r"""(?i)\b((?:https?:(?:/{1,3}|[a-z0-9%])|[a-z0-9.\-]+[.](?:com|net|org|edu|gov|mil|aero|asia|biz|cat|coop|info|int|jobs|mobi|museum|name|post|pro|tel|travel|xxx|ac|ad|ae|af|ag|ai|al|am|an|ao|aq|ar|as|at|au|aw|ax|az|ba|bb|bd|be|bf|bg|bh|bi|bj|bm|bn|bo|br|bs|bt|bv|bw|by|bz|ca|cc|cd|cf|cg|ch|ci|ck|cl|cm|cn|co|cr|cs|cu|cv|cx|cy|cz|dd|de|dj|dk|dm|do|dz|ec|ee|eg|eh|er|es|et|eu|fi|fj|fk|fm|fo|fr|ga|gb|gd|ge|gf|gg|gh|gi|gl|gm|gn|gp|gq|gr|gs|gt|gu|gw|gy|hk|hm|hn|hr|ht|hu|id|ie|il|im|in|io|iq|ir|is|it|je|jm|jo|jp|ke|kg|kh|ki|km|kn|kp|kr|kw|ky|kz|la|lb|lc|li|lk|lr|ls|lt|lu|lv|ly|ma|mc|md|me|mg|mh|mk|ml|mm|mn|mo|mp|mq|mr|ms|mt|mu|mv|mw|mx|my|mz|na|nc|ne|nf|ng|ni|nl|no|np|nr|nu|nz|om|pa|pe|pf|pg|ph|pk|pl|pm|pn|pr|ps|pt|pw|py|qa|re|ro|rs|ru|rw|sa|sb|sc|sd|se|sg|sh|si|sj|Ja|sk|sl|sm|sn|so|sr|ss|st|su|sv|sx|sy|sz|tc|td|tf|tg|th|tj|tk|tl|tm|tn|to|tp|tr|tt|tv|tw|tz|ua|ug|uk|us|uy|uz|va|vc|ve|vg|vi|vn|vu|wf|ws|ye|yt|yu|za|zm|zw)/)(?:[^\s()<>{}\[\]]+|\([^\s()]*?\([^\s()]+\)[^\s()]*?\)|\([^\s]+?\))+(?:\([^\s()]*?\([^\s()]+\)[^\s()]*?\)|\([^\s]+?\)|[^\s`!()\[\]{};:'".,<>?«»“”‘’])|(?:(?<!@)[a-z0-9]+(?:[.\-][a-z0-9]+)*[.](?:com|net|org|edu|gov|mil|aero|asia|biz|cat|coop|info|int|jobs|mobi|museum|name|post|pro|tel|travel|xxx|ac|ad|ae|af|ag|ai|al|am|an|ao|aq|ar|as|at|au|aw|ax|az|ba|bb|bd|be|bf|bg|bh|bi|bj|bm|bn|bo|br|bs|bt|bv|bw|by|bz|ca|cc|cd|cf|cg|ch|ci|ck|cl|cm|cn|co|cr|cs|cu|cv|cx|cy|cz|dd|de|dj|dk|dm|do|dz|ec|ee|eg|eh|er|es|et|eu|fi|fj|fk|fm|fo|fr|ga|gb|gd|ge|gf|gg|gh|gi|gl|gm|gn|gp|gq|gr|gs|gt|gu|gw|gy|hk|hm|hn|hr|ht|hu|id|ie|il|im|in|io|iq|ir|is|it|je|jm|jo|jp|ke|kg|kh|ki|km|kn|kp|kr|kw|ky|kz|la|lb|lc|li|lk|lr|ls|lt|lu|lv|ly|ma|mc|md|me|mg|mh|mk|ml|mm|mn|mo|mp|mq|mr|ms|mt|mu|mv|mw|mx|my|mz|na|nc|ne|nf|ng|ni|nl|no|np|nr|nu|nz|om|pa|pe|pf|pg|ph|pk|pl|pm|pn|pr|ps|pt|pw|py|qa|re|ro|rs|ru|rw|sa|sb|sc|sd|se|sg|sh|si|sj|Ja|sk|sl|sm|sn|so|sr|ss|st|su|sv|sx|sy|sz|tc|td|tf|tg|th|tj|tk|tl|tm|tn|to|tp|tr|tt|tv|tw|tz|ua|ug|uk|us|uy|uz|va|vc|ve|vg|vi|vn|vu|wf|ws|ye|yt|yu|za|zm|zw)\b/?(?!@)))"""

print(re.findall(WEB_URL_REGEX, data))

Solution 5

You can extract URLs from a string using the following patterns:

1.

>>> import re

>>> string = "This is a link http://www.google.com"

>>> pattern = r'[(http://)|\w]*?[\w]*\.[-/\w]*\.\w*[(/{1})]?[#-\./\w]*[(/{1,})]?'

>>> re.search(pattern, string).group()

'http://www.google.com'

>>> TWEET = ('New Pybites article: Module of the Week - Requests-cache '

'for Repeated API Calls - http://pybit.es/requests-cache.html '

'#python #APIs')

>>> re.search(pattern, TWEET).group()

'http://pybit.es/requests-cache.html'

>>> tweet = ('Pybites My Reading List | 12 Rules for Life - #books '

'that expand the mind! '

'http://pbreadinglist.herokuapp.com/books/'

'TvEqDAAAQBAJ#.XVOriU5z2tA.twitter'

' #psychology #philosophy')

>>> re.findall(pattern, tweet)

['http://pbreadinglist.herokuapp.com/books/TvEqDAAAQBAJ#.XVOriU5z2tA.twitter']
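Note that [(http://)|\w] in pattern 1 is a character class: it matches one character out of (, h, t, p, :, /, ), | or any word character, not the literal prefix http://. It still works on these examples, but it will also accept stray characters such as | or ). A quick check:

```python
import re

# One character from the set ( h t p : / ) | or any word character (\w).
char_class = re.compile(r"[(http://)|\w]")

results = {ch: bool(char_class.fullmatch(ch)) for ch in ["h", ":", "|", ")", "x", "-", " "]}
print(results)

# A non-capturing optional group is what actually means "an optional http:// prefix".
prefix_match = re.match(r"(?:http://)?\w+", "http://www").group()
print(prefix_match)
```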

To take the above pattern further, we can also detect hashtags along with URLs:

2.

>>> pattern = r'[(http://)|\w]*?[\w]*\.[-/\w]*\.\w*[(/{1})]?[#-\./\w]*[(/{1,})]?|#[.\w]*'

>>> re.findall(pattern, tweet)

['#books', 'http://pbreadinglist.herokuapp.com/books/TvEqDAAAQBAJ#.XVOriU5z2tA.twitter', '#psychology', '#philosophy']

The URL-and-hashtag pattern above can be shortened to:

>>> pattern = r'((?:#|http)\S+)'

>>> re.findall(pattern, tweet)

['#books', 'http://pbreadinglist.herokuapp.com/books/TvEqDAAAQBAJ#.XVOriU5z2tA.twitter', '#psychology', '#philosophy']
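If you need to tell hashtags and URLs apart afterwards, named alternatives make each match report which kind it is via Match.lastgroup. A sketch of that hypothetical refinement; unlike the shortened pattern, it assumes URLs start with http:// or https://:

```python
import re

# Two named alternatives: a hashtag, or an http(s) URL running up to the next whitespace.
TOKEN = re.compile(r"(?P<hashtag>#\S+)|(?P<url>https?://\S+)")

tweet = ('Pybites My Reading List | 12 Rules for Life - #books '
         'that expand the mind! '
         'http://pbreadinglist.herokuapp.com/books/'
         'TvEqDAAAQBAJ#.XVOriU5z2tA.twitter'
         ' #psychology #philosophy')

found = {"hashtag": [], "url": []}
for m in TOKEN.finditer(tweet):
    # lastgroup is the name of the alternative that matched.
    found[m.lastgroup].append(m.group())

print(found["url"])
print(found["hashtag"])
```

The # inside the URL is not reported as a hashtag because the URL alternative has already consumed it.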

The pattern below treats any two alphanumeric strings separated by "." as a URL:

>>> pattern = r'(?:http://)?\w+\.\S*[^.\s]'

>>> tweet = ('PyBites My Reading List | 12 Rules for Life - #books '

'that expand the mind! '

'www.google.com/telephone/wire.... '

'http://pbreadinglist.herokuapp.com/books/'

'TvEqDAAAQBAJ#.XVOriU5z2tA.twitter '

"http://-www.pip.org "

"google.com "

"twitter.com "

"facebook.com"

' #psychology #philosophy')

>>> re.findall(pattern, tweet)

['www.google.com/telephone/wire', 'http://pbreadinglist.herokuapp.com/books/TvEqDAAAQBAJ#.XVOriU5z2tA.twitter', 'www.pip.org', 'google.com', 'twitter.com', 'facebook.com']

You can try patterns 1 and 2 on more complicated URLs. To learn more about Python's re module, check out "Regexes in Python" by Real Python.

Cheers!

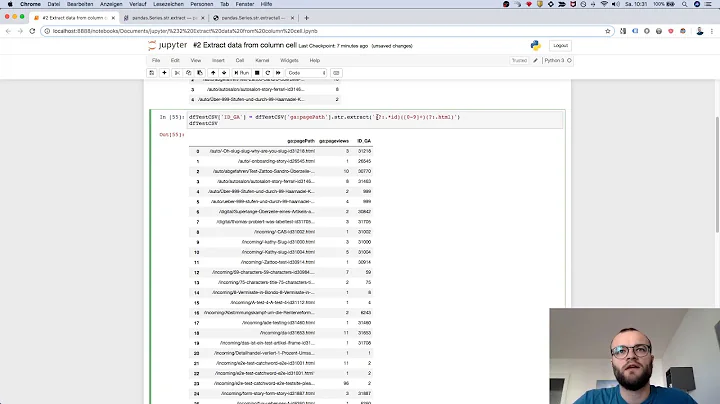

Related videos on Youtube

Sheldon

https://plus.google.com/+GrahameThomson https://twitter.com/#!/grahamethomson

Updated on February 02, 2022

Comments


Sheldon over 2 years

For example:

string = "This is a link http://www.google.com"

How could I extract 'http://www.google.com'?

(Each link will be of the same format, i.e. 'http://'.)


rjz about 12 years

You might check out this answer: stackoverflow.com/questions/499345/…


Sheldon about 12 years

None is returned when I try that solution.


Alexandre Dulaunoy about 12 years

If this is for a raw text file (as expressed in your question), you might check this answer: stackoverflow.com/questions/839994/extracting-a-url-in-python


Martin Thoma over 6 years


Yash Kumar Verma over 6 years

Possible duplicate of What is the best regular expression to check if a string is a valid URL?


tripleee over 9 years

This is too crude for many real-world scenarios. It fails entirely for ftp:// URLs and mailto: URLs etc, and will naïvely grab the tail part from <a href="http://google.com/">Click here</a> (i.e. up through "click").

luckydonald over 7 years

So, the regex ends up being:

((?:(https?|s?ftp):\/\/)?(?:www\.)?((?:(?:[A-Z0-9][A-Z0-9-]{0,61}[A-Z0-9]\.)+)([A-Z]{2,6})|(?:\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}))(?::(\d{1,5}))?(?:(\/\S+)*))

Also note that the TLD list right now also includes fun endings like XN--VERMGENSBERATUNG-PWB, which is 24 characters long and will not be caught by this.

teewuane over 7 years

@tripleee The question isn't about parsing HTML, but about finding a URL in a string of text that will always be in http format. So this works really well for that. But yes, it's pretty important for people to know what you're saying if they're here for parsing HTML or similar.

Mr_and_Mrs_D about 6 years

It would be better to add (?i) to the pattern; it's more portable. Also, bear in mind this will match 23.084.828.566, which is not a valid IP address but is a valid float in some locales.

Jorge Orpinel Pérez over 5 years

There's some sort of length limit to this regex, e.g. docs.google.com/spreadsheets/d/10FmR8upvxZcZE1q9n1o40z16mygUJklkXQ7lwGS4nlI just matches docs.google.com/spreadsheets/d/10FmR8upvxZcZE1q9n.

Henrik over 3 years

Works like a charm, and doesn't clutter the rest of my script.


autonopy almost 3 years

Unfortunately, this fails whenever there is text (i.e., not space) attached to the beginning or end of the URL, e.g. ok/https://www.duckduckgo.com won't catch the URL in it.