How to extract logs between the current time and the last 15 minutes

Solution 1

You almost have it.

Step 1

On GNU and Linux and perhaps other systems, the date command can be used to print out an arbitrary format for a time specification given a user-friendly time expression. For instance, I can get a string representing the time from 15 minutes in the past using this:

date --date='15 minutes ago'

You've pretty much done this with your code, albeit less efficiently. But you left out the fractional seconds (the log records milliseconds). Presumably you don't actually care about them, but you do have to match them. The timezone could be done with '%:z', but presumably you need to match the timezone already in the log; things might break shortly after daylight saving time switches. If you have to worry about multiple timezones, you'll need a regex or Sobrique's solution. Caveat emptor, you could probably get away with:

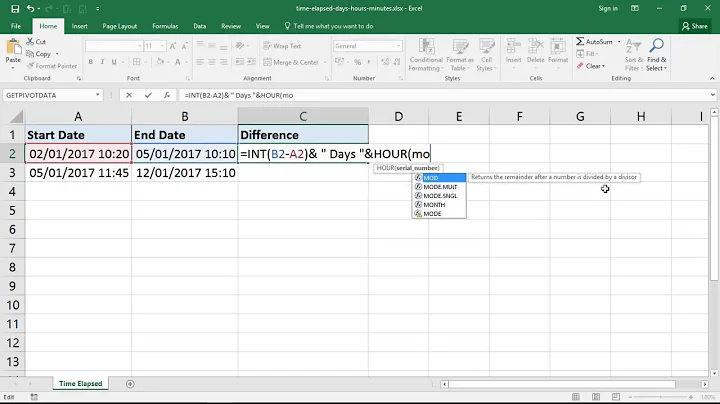

NOW=$(date +"%FT%T.000-04:00")
T2=$(date --date='15 minutes ago' +"%FT%T.000-04:00")
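If you would rather not hard-code the offset, here is a minimal sketch of the '%:z' variant mentioned above. It assumes GNU date and that the log is written in the machine's current timezone; log_stamp is just a helper name made up for this example.

```shell
# Sketch only: build timestamps in the log's format with the current UTC offset.
# Assumes GNU date (needed for --date and %:z). log_stamp is a hypothetical helper.
log_stamp() {
    # $1 is any time expression GNU date understands, e.g. 'now' or '15 minutes ago'
    date --date="$1" +"%FT%T.000%:z"
}

NOW=$(log_stamp 'now')
T2=$(log_stamp '15 minutes ago')

printf 'window: [%s] .. [%s]\n' "$T2" "$NOW"
```

The same caveat applies as before: around a daylight saving switch the offset in the two bounds can differ from the one in older log lines, so the plain string comparison may misbehave for a short while.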

Step 2

You can use your NOW and T2 as inputs to awk. String-based matching will work just fine here, but awk lets you make sure that every line fits within the required time range by doing greater-than and less-than string compares.

awk -v TSTART="[$T2]" -v TEND="[$NOW]" '$1>=TSTART && $1<=TEND'
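To see the string comparison at work without touching the real log, here is a small sketch with made-up window bounds and a few shortened lines in the question's format. The function name filter_window and the sample messages are invented for illustration.

```shell
# Sketch only: fixed bounds instead of $T2/$NOW so the result is reproducible.
TSTART='[2016-05-24T00:58:04.510-04:00]'
TEND='[2016-05-24T01:00:04.513-04:00]'

# Hypothetical helper: keep lines whose first field sorts between the two bounds.
filter_window() {
    awk -v TSTART="$1" -v TEND="$2" '$1>=TSTART && $1<=TEND'
}

result=$(filter_window "$TSTART" "$TEND" <<'EOF'
[2016-05-24T00:58:04.508-04:00] [oim_server1] too early
[2016-05-24T00:58:04.513-04:00] [oim_server1] inside
[2016-05-24T01:00:04.513-04:00] [oim_server1] on the upper bound
[2016-05-24T01:15:00.000-04:00] [oim_server1] too late
EOF
)

printf '%s\n' "$result"
```

Only the "inside" and "on the upper bound" lines survive. This works because the timestamps are fixed-width and ordered from most to least significant unit, so comparing them as strings orders them the same way as comparing the times.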

Step 3 (NEW)

You also have to get the untimestamped log lines that sit between the timestamped ones. So we apply the above awk test to timestamped lines only and set a flag according to whether the timestamp is in the right range. Every line, timestamped or not, is printed only while the flag is set to 1.

awk -v TSTART="[$T2]" -v TEND="[$NOW]" \
'/^\[[^ ]*\] / { inrange = ($1>=TSTART && $1<=TEND) } inrange { print }'
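Here is a hedged sketch of the flag approach run against a few shortened sample lines, including a stack trace without timestamps. The flag is called inrange here because log is the name of a built-in awk function and cannot be used as a variable; the bounds, the function name filter_with_continuations and the sample text are made up for the example.

```shell
# Sketch only: fixed bounds so the outcome does not depend on the clock.
TSTART='[2016-05-24T01:00:00.000-04:00]'
TEND='[2016-05-24T01:15:00.000-04:00]'

# Hypothetical helper: timestamped lines set the flag, every line is printed
# only while the flag is true, so continuation lines follow their entry.
filter_with_continuations() {
    awk -v TSTART="$1" -v TEND="$2" '
        /^\[[^ ]*\] / { inrange = ($1 >= TSTART && $1 <= TEND) }
        inrange
    '
}

kept=$(filter_with_continuations "$TSTART" "$TEND" <<'EOF'
[2016-05-24T00:58:04.508-04:00] [oim_server1] too early
    continuation of the early entry
[2016-05-24T01:00:04.513-04:00] [oim_server1] [WARNING] Unable to execute job[[
org.identityconnectors.framework.common.exceptions.ConfigurationException: Directory does not contain normal files to read HR-76
    at org.identityconnectors.flatfile.utils.FlatFileUtil.getDir(FlatFileUtil.java:176)
[2016-05-24T01:20:00.000-04:00] [oim_server1] too late
EOF
)

printf '%s\n' "$kept"
```

The warning line and the two untimestamped lines after it are printed; the early entry, its continuation, and the late entry are not.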

Step 4

As usual, redirect output, but you should note that if you're running your script every 15 minutes, your current redirection code will overwrite the same file because %H won't have changed for at least 3 of those runs. Better make it %H-%M or something. But there's no need for any redirect-to-file at all. You can send it directly to mail (unless you really need the attachment):

{
    echo "$MESSAGE"; echo
    awk -v TSTART="[$T2]" -v TEND="[$NOW]" '$1>=TSTART && $1<=TEND' "$LogDir/oim_server1-diagnostic.log"
} | mailx -S smtp="$SMTP" -r "$SENDER" -s "$SUBJECT" "$EMAIL1"

The rest of your script should work as-is.

However, with the above you no longer get an attachment that you can easily save with the relevant date-timestamp. And maybe you don't want to send mail if there is no output. So you could do something like this:

OUT=/tmp/oim_server1-diagnostic_$(date +%F-%H-%M)
awk -v TSTART="[$T2]" -v TEND="[$NOW]" '$1>=TSTART && $1<=TEND' "$LogDir/oim_server1-diagnostic.log" > "$OUT"
test -s "$OUT" &&
    echo -e "$MESSAGE" | mailx -S smtp="$SMTP" -a "$OUT" -r "$SENDER" -s "$SUBJECT" "$EMAIL1"
rm -f "$OUT"

This cleans up after itself so you don't have numerous diagnostic log files left hanging around.
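If you want to try the "only mail when there is output" logic before wiring in mailx, a sketch like the following may help. send_report and mail_if_nonempty are hypothetical names, and send_report is only a stand-in that prints what it would do; the real call would be the mailx line shown above.

```shell
# Hypothetical stand-in for the real mailx call, so the logic can be tried
# without sending anything. Replace the body with the mailx command.
send_report() {
    echo "would mail attachment: $1"
}

# Hypothetical helper: $1 is the file holding the extracted log lines.
mail_if_nonempty() {
    if [ -s "$1" ]; then
        send_report "$1"
    else
        echo "nothing to report"
    fi
}

OUT=$(mktemp)

: > "$OUT"                      # empty extract: no mail
mail_if_nonempty "$OUT"

echo '[2016-05-24T01:00:04.513-04:00] sample line' > "$OUT"
mail_if_nonempty "$OUT"         # non-empty extract: mail would be sent

rm -f "$OUT"
```

Using mktemp instead of a fixed name under /tmp is a design choice of this sketch, not something the script above requires; keep the dated file name if the recipient should see it on the attachment.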

Solution 2

I'd use:

awk -v limit="$(date -d '15 minutes ago' +'[%FT%T')" '$0 >= limit' < log-file

That ignores the potential problems you may have for two hours per year, when the GMT offset goes from -04:00 to -05:00 and back, if daylight saving applies in your timezone.

date -d is GNU specific, but you're using it already.
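A quick sketch of how this lower-bound test treats lines, using a fixed limit and shortened sample lines (the function name since and the sample text are made up). It assumes byte-wise string comparison, which is forced here with LC_ALL=C.

```shell
# Sketch only: fixed limit instead of "$(date -d '15 minutes ago' +'[%FT%T')".
limit='[2016-05-24T01:00:00'

# Hypothetical helper: keep every line that sorts at or after the limit.
since() {
    LC_ALL=C awk -v limit="$1" '$0 >= limit'
}

recent=$(since "$limit" <<'EOF'
[2016-05-24T00:58:04.516-04:00] [oim_server1] older entry
[2016-05-24T01:00:04.513-04:00] [oim_server1] newer entry
org.identityconnectors.framework.common.exceptions.ConfigurationException: Directory does not contain normal files to read HR-76
    at org.identityconnectors.flatfile.utils.FlatFileUtil.getDir(FlatFileUtil.java:176)
EOF
)

printf '%s\n' "$recent"
```

Note that lines without a timestamp are compared as plain strings too, so whether they pass depends on their first character: a line starting with a lowercase letter sorts after '[' and is kept wherever it appears, while a line starting with a space (or an uppercase letter) sorts before '[' and is dropped. If you need the continuation lines handled reliably, the flag approach from Solution 1 is the safer choice.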


Puneet Khullar


Updated on September 18, 2022

Comments

Puneet Khullar over 1 year

I want to extract the logs between the current timestamp and 15 minutes before, and send an email to the people configured. I developed the script below but it's not working properly; can someone help me? I have a log file containing this pattern:

[2016-05-24T00:58:04.508-04:00] [oim_server1] [TRACE:32] [] [oracle.iam.scheduler.impl.quartz] [tid: OIMQuartzScheduler_QuartzSchedulerThread] [userId: oiminternal] [ecid: 0000LI6NBsP4yk4LzUS4yW1NBABd000003,1:21904] [APP: oim#11.1.2.0.0] [SRC_CLASS: oracle.iam.scheduler.impl.quartz.QuartzJob] [SRC_METHOD: <init>] Constructor QuartzJob
[2016-05-24T00:58:04.508-04:00] [oim_server1] [TRACE:32] [] [oracle.iam.scheduler.impl.quartz] [tid: OIMQuartzScheduler_QuartzSchedulerThread] [userId: oiminternal] [ecid: 0000LI6NBsP4yk4LzUS4yW1NBABd000003,1:21904] [APP: oim#11.1.2.0.0] [SRC_CLASS: oracle.iam.scheduler.impl.quartz.QuartzJob] [SRC_METHOD: <init>] Constructor QuartzJob
[2016-05-24T00:58:04.513-04:00] [oim_server1] [TRACE:32] [] [oracle.iam.scheduler.impl.quartz] [tid: OIMQuartzScheduler_Worker-1] [userId: oiminternal] [ecid: 0000LI6NBsP4yk4LzUS4yW1NBABd000003,1:21908] [APP: oim#11.1.2.0.0] [SRC_CLASS: oracle.iam.scheduler.impl.quartz.QuartzTriggerListener] [SRC_METHOD: triggerFired] Trigger state 0
[2016-05-24T00:58:04.515-04:00] [oim_server1] [TRACE:32] [] [oracle.iam.scheduler.impl.quartz] [tid: OIMQuartzScheduler_Worker-1] [userId: oiminternal] [ecid: 0000LI6NBsP4yk4LzUS4yW1NBABd000003,1:21908] [APP: oim#11.1.2.0.0] [SRC_CLASS: oracle.iam.scheduler.impl.quartz.QuartzTriggerListener] [SRC_METHOD: triggerFired] Trigger state 0
[2016-05-24T00:58:04.516-04:00] [oim_server1] [TRACE:32] [] [oracle.iam.scheduler.impl.quartz] [tid: OIMQuartzScheduler_Worker-1] [userId: oiminternal] [ecid: 0000LI6NBsP4yk4LzUS4yW1NBABd000003,1:21908] [APP: oim#11.1.2.0.0] [SRC_CLASS: oracle.iam.scheduler.impl.quartz.QuartzTriggerListener] [SRC_METHOD: triggerFired] Trigger Listener QuartzTriggerListener.triggerFired(Trigger trigger, JobExecutionContext ctx)
[2016-05-24T01:00:04.513-04:00] [oim_server1] [WARNING] [] [oracle.iam.scheduler.vo] [tid: OIMQuartzScheduler_Worker-7] [userId: oiminternal] [ecid: 0000LI6NBsP4yk4LzUS4yW1NBABd000003,1:21956] [APP: oim#11.1.2.0.0] IAM-1020021 Unable to execute job : CmyAccess Flat File WD Candidate with Job History Id:1336814[[
org.identityconnectors.framework.common.exceptions.ConfigurationException: Directory does not contain normal files to read HR-76
    at org.identityconnectors.flatfile.utils.FlatFileUtil.assertValidFilesinDir(FlatFileUtil.java:230)
    at org.identityconnectors.flatfile.utils.FlatFileUtil.getDir(FlatFileUtil.java:176)
    at org.identityconnectors.flatfile.utils.FlatFileUtil.getFlatFileDir(FlatFileUtil.java:182)
    at org.identityconnectors.flatfile.FlatFileConnector.executeQuery(FlatFileConnector.java:134)
    at org.identityconnectors.flatfile.FlatFileConnector.executeQuery(FlatFileConnector.java:58)
    at org.identityconnectors.framework.impl.api.local.operations.SearchImpl.rawSearch(SearchImpl.java:105)
    at org.identityconnectors.framework.impl.api.local.operations.SearchImpl.search(SearchImpl.java:82)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.identityconnectors.framework.impl.api.local.operations.ConnectorAPIOperationRunnerProxy.invoke(ConnectorAPIOperationRunnerProxy.java:93)
    at com.sun.proxy.$Proxy735.search(Unknown Source)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.identityconnectors.framework.impl.api.local.operations.ThreadClassLoaderManagerProxy.invoke(ThreadClassLoaderManagerProxy.java:107)
    at com.sun.proxy.$Proxy735.search(Unknown Source)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.identityconnectors.framework.impl.api.BufferedResultsProxy$BufferedResultsHandler.run(BufferedResultsProxy.java:162)

The script I have written counts the errors found and stores them in a file with the number; if the error count increases it will run the script and send a mail. I can configure cron for this but the script I have written is not working fine. Can someone help me to extract logs between the current time and the last 15 minutes and generate a temp file?

LogDir=/data/app/Oracle/Middleware/user_projects/domains/oim_domain/servers/oim_server1/logs
[email protected]
SUBJECT=Failed
MESSAGE="Scheduler failed"
SMTP="SMTPHOSTNAME"
[email protected]
NOW=$(date +"%FT%T.000%-04:00")
T2=$(date --date='15 minutes ago' +"%FT%T.000%-04:00")
OUT=/tmp/oim_server1-diagnostic_$(date +%F-%H-%M).log
find $LogDir -mmin -15 -name "oim_server1-diagnostic.log" > files.txt
count=0;
if [ -f lastCount ]; then
    count=$(cat lastCount)
fi
while read file
do
    echo "reading file \n " $file
    currentCount=$(grep -c 'Directory does not contain normal files to read HR-76' $file)
    if [ $currentCount -ne $count -a $currentCount -ne 0 ];then
        echo "Error Found " $currentCount
        awk -v TSTART="[$T2]" -v TEND="[$NOW]" '$1>=TSTART && $1<=TEND' $LogDir/oim_server1-diagnostic.log > "$OUT"
        test -s $OUT &&
        echo -e "$MESSAGE" | mailx -S smtp="$SMTP" -a "$OUT" -r "$SENDER" -s "$SUBJECT" "$EMAIL1"
        rm -f "$OUT"
    fi
    echo $currentCount > lastCount
done < files.txt

This script is extracting the logs but not in the appropriate format. The largest log which I am finding with

(grep -c 'Directory does not contain normal files to read HR-76' $file)

I want to extract all logs between two timestamps. Some lines may not have the timestamp, but I want those lines also. In short, I want every line that falls between the two timestamps. This script gives me a log file containing only the lines that have a timestamp; all the other lines are missing. Any suggestions? Please note that the start or end timestamp may not be present on every line of the log, but I want every line between these two timestamps. Sample of the log generated, as mentioned above:

[2016-05-24T01:00:04.513-04:00] [oim_server1] [WARNING] [] [oracle.iam.scheduler.vo] [tid: OIMQuartzScheduler_Worker-6] [userId: oiminternal] [ecid: 0000LIt5i3n4yk4LzU^AyW1NEPxf000002,1:23444] [APP: oim#11.1.2.0.0] IAM-1020021 Unable to execute job : CmyAccess Flat File WD Employee with Job History Id:46608[[

Peter David Carter almost 8 years

Hi Puneet and welcome to Unix & Linux Stack Exchange. When posting, rather than using <p> to format your code, select the sections of the question where you've written the code and press Ctrl+K. It will end up much more readable and your question is much more likely to get answered.


Sobrique almost 8 years

grep is not a good way of matching timestamps, as it doesn't understand the 'value' of a time field. I would suggest instead that you need to parse the date, and filter on that.

Otheus almost 8 years

Now I understand. I think the solution is very simple. See my answer.

ott-- almost 8 years

I would add another step and compress the logfile before mailing it.

Otheus almost 8 years

@ott-- Why? Then the user gets a logfile they cannot read, or one that takes at least several steps to decompress.

ott-- almost 8 years

You should not encrypt it so the user can't read it. It's just not polite to send an attachment of 15 MB while the gzipped file is only 1.5 MB in size. And less can show it without an extra step. Remember this is Unix & Linux, not Windows or MacOSX.

Otheus almost 8 years

How do you know the system receiving the emails is UNIX? Oh well, the OP can figure out if and how he wants to compress it.

Puneet Khullar almost 8 years

@Otheus the log file generation is incomplete. I am going to edit the question; please refer to that.

G-Man Says 'Reinstate Monica' over 2 years

(1) Suppose the current time is 01:15:00. If there was an event at 01:00:00 and another event at 01:15:00, then your command will list them and everything between. But, if you’re running the command at 01:15:00, then there probably isn’t an event at exactly the current time. And there probably wasn’t an event at exactly 01:00:00. Look at the sample data in the question: your command wouldn’t find the event at 01:00:04, because there isn’t an event at 01:00:00. (2) If there were multiple events at 01:15:00, then your command will show only the first one. … (Cont’d)

G-Man Says 'Reinstate Monica' over 2 years

(Cont’d) … (3) If there was an event at 01:00:00 yesterday, then your command would show everything since then (i.e., everything in the last 24 hours and 15 minutes). (4) Whenever you use a $ in a shell command (i.e., a variable or a $(…)), you should put it in quotes; e.g., sed -n "/$(date --date="15 minutes ago" "+%T")/,/$(date "+%T")/p".

G-Man Says 'Reinstate Monica' over 2 years

+1 for catching the daylight saving time issue.

BSivakumar over 2 years

As you said (your command wouldn’t find the event at 01:00:04) - my command not working in this case, because time 01:00:04 is 15 min before 01:15:00. My command will work from 01:01:00.

G-Man Says 'Reinstate Monica' over 2 years

I have no idea what you’re trying to say. (I) Are you admitting that your answer is wrong? If so, you might want to try to fix it by editing it. If you can’t fix it (without duplicating one of the other answers), you might want to delete yours. (II) Are you saying that your answer is perfectly correct and my critique is wrong? Your “My command will work from 01:01:00.” statement looks like a defense of your answer. As I said, I don’t understand. Again, you might want to edit your answer to make it clearer and more complete.