How to process a list in parallel in Python?

Solution 1

Assuming CPython and the GIL here.

If your task is I/O bound, in general, threading may be more efficient since the threads are simply dumping work on the operating system and idling until the I/O operation finishes. Spawning processes is a heavy way to babysit I/O.

However, most file systems aren't concurrent, so using multithreading or multiprocessing may not be any faster than synchronous writes.

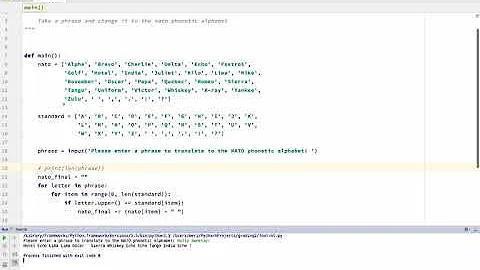

Nonetheless, here's a contrived example of multiprocessing.Pool.map which may help with your CPU-bound work:

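    from multiprocessing import cpu_count, Pool

    def process(data):
        # best to do heavy CPU-bound work here...

        # file write for demonstration
        with open("%s.txt" % data, "w") as f:
            f.write(data)

        # example of returning a result to the map
        return data.upper()

    # the guard is required on platforms that spawn worker processes (e.g. Windows)
    if __name__ == "__main__":
        tasks = ["data1", "data2", "data3"]
        # leave one core free, but always use at least one worker
        pool = Pool(max(1, cpu_count() - 1))
        print(pool.map(process, tasks))
        pool.close()
        pool.join()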

A similar setup for threading can be found in concurrent.futures.ThreadPoolExecutor.
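
For comparison, here is a minimal sketch of that threading setup. It assumes Python 3.2 or later, where concurrent.futures is in the standard library (on Python 2.7 it would need the futures backport from PyPI). The body of process and the worker count are placeholders for illustration, not part of the original answer.

```python
from concurrent.futures import ThreadPoolExecutor


def process(data):
    # Placeholder for I/O-bound work (hypothetical; swap in real I/O here).
    return data.upper()


tasks = ["data1", "data2", "data3"]

# max_workers=3 is an arbitrary choice for this sketch.
with ThreadPoolExecutor(max_workers=3) as executor:
    # executor.map yields results in input order, much like Pool.map.
    results = list(executor.map(process, tasks))

print(results)  # ['DATA1', 'DATA2', 'DATA3']
```

Leaving the with block waits for all submitted work to finish, so no explicit close or join call is needed.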

As an aside, all is a builtin function and isn't a great variable name choice.

Solution 2

Or:

    from threading import Thread

    def process(data):
        print("processing {}".format(data))

    tasks = ["data1", "data2", "data3"]

    for task in tasks:
        t = Thread(target=process, args=(task,))
        t.start()

Or, with an f-string (Python 3.6 or later only):

    from threading import Thread

    def process(data):
        print(f"processing {data}")

    tasks = ["data1", "data2", "data3"]

    for task in tasks:
        t = Thread(target=process, args=(task,))
        t.start()
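
Note that a Thread discards whatever its target returns, and the loops above move on without waiting for the threads to finish. If you need the results, one option is sketched below. It assumes a hypothetical variant of process that takes a shared results list and an index (both introduced here for illustration) and writes its result into its own slot.

```python
from threading import Thread


def process(data, results, index):
    # Hypothetical variant: store the result in a shared list slot,
    # because Thread ignores the target's return value.
    results[index] = data.upper()


tasks = ["data1", "data2", "data3"]
results = [None] * len(tasks)

threads = [
    Thread(target=process, args=(task, results, i))
    for i, task in enumerate(tasks)
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # block until this thread has finished

print(results)  # ['DATA1', 'DATA2', 'DATA3']
```

Each thread writes to a different index, so no lock is needed in this sketch.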

Solution 3

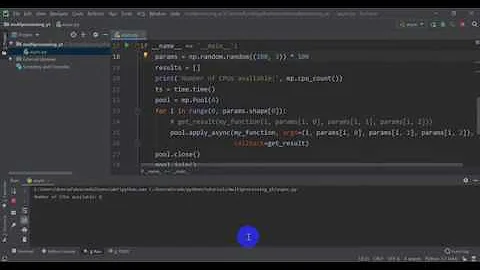

Here is a template using multiprocessing.dummy, whose Pool has the same interface as multiprocessing.Pool but is backed by threads. I hope it helps.

    from multiprocessing.dummy import Pool as ThreadPool

    def process(data):
        print("processing {}".format(data))

    alldata = ["data1", "data2", "data3"]

    pool = ThreadPool()
    results = pool.map(process, alldata)
    pool.close()
    pool.join()
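
Because multiprocessing.dummy runs its workers as threads, all of the work stays inside the current process. The sketch below is one way to check this for yourself. The helper where_am_i and the pool size of 4 are made up for the illustration.

```python
import os
import threading
from multiprocessing.dummy import Pool as ThreadPool


def where_am_i(_):
    # Report the process ID and thread name that handled this item.
    return os.getpid(), threading.current_thread().name


pool = ThreadPool(4)  # 4 worker threads; an arbitrary choice
try:
    reports = pool.map(where_am_i, range(8))
finally:
    pool.close()
    pool.join()

pids = {pid for pid, _ in reports}
# Every item was handled inside the parent process.
print(pids == {os.getpid()})  # True
```

Under CPython's GIL this means CPU-bound work will not spread across cores with this template. Importing Pool from multiprocessing instead gives the same interface backed by separate processes.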


Comments

- Joan Venge, almost 2 years ago

  I wrote code like this:

      def process(data):
          # create file using data
          pass

      all = ["data1", "data2", "data3"]

  I want to execute the process function on my all list in parallel. The files it creates are small, so I am not concerned about disk writes, but the processing takes a long time, so I want to use all of my cores.

  How can I do this using the default modules in Python 2.7?

- Sraw, over 5 years ago

  To help you search next time, try the keywords: multiprocessing python map. Then you can easily find a solution on Google.

- Sraw, over 5 years ago

  OP says "I want to use all of my cores". ThreadPool won't use more than one core.

- Mauricio, over 2 years ago

  This solution for Python version > 3.6.0 is exactly what I was looking for.