How to run Scrapy from within a Python script

Solution 1

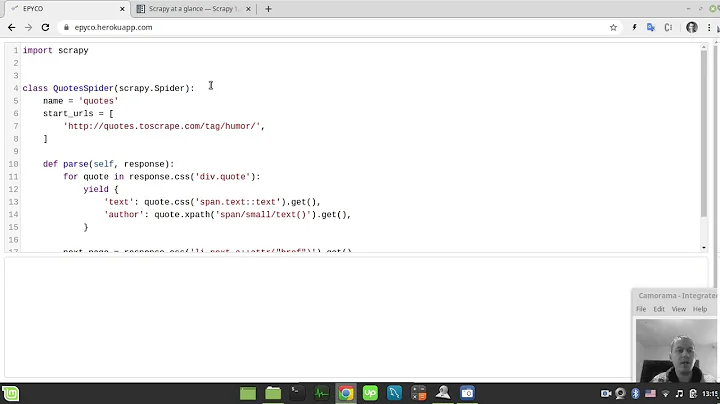

All other answers reference Scrapy v0.x. According to the updated docs, Scrapy 1.0 requires:

    import scrapy
    from scrapy.crawler import CrawlerProcess

    class MySpider(scrapy.Spider):
        # Your spider definition
        ...

    process = CrawlerProcess({
        'USER_AGENT': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)'
    })

    process.crawl(MySpider)
    process.start()  # the script will block here until the crawling is finished
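A follow-up that comes up with this approach is how to get the scraped items back into the calling script instead of only seeing them in the console. One option is to connect a handler to Scrapy's item_scraped signal before starting the crawl. The following is a minimal sketch, not an official recipe: crawl_and_collect is a hypothetical helper name, and it assumes your spider yields items as usual.

```python
def crawl_and_collect(spider_cls, settings=None):
    """Run one spider and return the items it scraped as a list.

    Hypothetical helper for illustration only.
    """
    # Imported here so that importing this module does not pull in Twisted.
    from scrapy import signals
    from scrapy.crawler import CrawlerProcess

    items = []

    def collect(item, response, spider):
        # Called by Scrapy each time an item has passed all pipelines.
        items.append(item)

    process = CrawlerProcess(settings)
    crawler = process.create_crawler(spider_cls)
    crawler.signals.connect(collect, signal=signals.item_scraped)
    process.crawl(crawler)
    process.start()  # blocks until the crawl is finished
    return items
```

Calling items = crawl_and_collect(MySpider) blocks until the crawl ends and then returns the list. Since the Twisted reactor cannot be restarted, a helper like this can only be called once per Python process.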

Solution 2

We can simply use:

    from scrapy.crawler import CrawlerProcess
    from project.spiders.test_spider import SpiderName

    process = CrawlerProcess()
    process.crawl(SpiderName, arg1=val1, arg2=val2)
    process.start()

The keyword arguments are passed to the spider's __init__ method; store them on the instance there so that they are available throughout the spider.

Solution 3

Though I haven't tried it, I think the answer can be found in the Scrapy documentation. To quote directly from it:

    from twisted.internet import reactor
    from scrapy.crawler import Crawler
    from scrapy.settings import Settings
    from scrapy import log
    from testspiders.spiders.followall import FollowAllSpider

    spider = FollowAllSpider(domain='scrapinghub.com')
    crawler = Crawler(Settings())
    crawler.configure()
    crawler.crawl(spider)
    crawler.start()
    log.start()
    reactor.run()  # the script will block here

From what I gather, this is a new development in the library that renders some of the earlier approaches found online (such as the one in the question) obsolete.

Solution 4

In Scrapy 0.19.x you should do this:

    from twisted.internet import reactor
    from scrapy.crawler import Crawler
    from scrapy import log, signals
    from testspiders.spiders.followall import FollowAllSpider
    from scrapy.utils.project import get_project_settings

    spider = FollowAllSpider(domain='scrapinghub.com')
    settings = get_project_settings()
    crawler = Crawler(settings)
    crawler.signals.connect(reactor.stop, signal=signals.spider_closed)
    crawler.configure()
    crawler.crawl(spider)
    crawler.start()
    log.start()
    reactor.run()  # the script will block here until the spider_closed signal is sent

Note these lines:

    settings = get_project_settings()
    crawler = Crawler(settings)

Without them, your spider won't use your project settings and will not save the items. It took me a while to figure out why the example in the documentation wasn't saving my items. I sent a pull request to fix the doc example.
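The same point carries over to the newer CrawlerProcess API: if it is created without the project settings, your pipelines and other project configuration are not picked up. A minimal sketch, assuming the script runs from inside a Scrapy project (so the project settings can be located) and that a spider with the given name exists there; run_project_spider is a hypothetical helper name.

```python
def run_project_spider(spider_name, **spider_kwargs):
    """Run a spider from the current project with the project's settings.

    Hypothetical helper for illustration only.
    """
    # Imported here so that importing this module does not pull in Twisted.
    from scrapy.crawler import CrawlerProcess
    from scrapy.utils.project import get_project_settings

    settings = get_project_settings()  # loads the project's settings.py
    process = CrawlerProcess(settings)
    # With project settings loaded, a spider can be referenced by its name.
    process.crawl(spider_name, **spider_kwargs)
    process.start()  # blocks until the crawl is finished
```

For example, run_project_spider("followall", domain="scrapinghub.com") would mirror the snippet above.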

Another way to do this is to call the command directly from your script:

    from scrapy import cmdline

    cmdline.execute("scrapy crawl followall".split())  # followall is the spider's name

Copied this answer from my first answer in here: https://stackoverflow.com/a/19060485/1402286

Solution 5

When multiple crawlers need to run inside one Python script, stopping the reactor has to be handled with care, because the reactor can only be stopped once and cannot be restarted.
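If the spiders can share one process, one way around the restart problem is to schedule every crawl on a single CrawlerProcess and start the reactor only once. A minimal sketch, assuming the spider classes are importable from your project; run_spiders_together is a hypothetical helper name.

```python
def run_spiders_together(spider_classes, settings=None):
    """Schedule several spiders and run them with one reactor start.

    Hypothetical helper for illustration only.
    """
    # Imported here so that importing this module does not pull in Twisted.
    from scrapy.crawler import CrawlerProcess

    process = CrawlerProcess(settings)
    for spider_cls in spider_classes:
        process.crawl(spider_cls)  # only schedules the crawl
    # The reactor is started once and stops after all crawls have finished.
    process.start()
```

The spiders then run concurrently in the same process, so the reactor never has to be restarted.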

However, I found while doing my project that using

    os.system("scrapy crawl yourspider")

is the easiest. It saves me from handling all sorts of signals, especially when I have multiple spiders.
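The same idea can also be written with the standard library's subprocess module, which passes the arguments as a list instead of a shell string and hands back the exit code. This is only a sketch: it assumes the scrapy executable is on your PATH and that the command runs from the project directory, and both function names are hypothetical.

```python
import subprocess


def build_crawl_command(spider_name, output_file=None):
    """Build the argument list for `scrapy crawl <spider_name>`."""
    command = ["scrapy", "crawl", spider_name]
    if output_file is not None:
        # -o writes the scraped items to the given file.
        command += ["-o", output_file]
    return command


def run_spider(spider_name, output_file=None, project_dir=None):
    """Run the spider in a child process and return its exit code."""
    completed = subprocess.run(
        build_crawl_command(spider_name, output_file),
        cwd=project_dir,  # directory containing scrapy.cfg; None means current directory
    )
    return completed.returncode
```

Each call starts a fresh Python process with its own reactor, so run_spider can be called as many times as needed.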

If performance is a concern, you can use multiprocessing to run your spiders in parallel, something like:

    import os
    from multiprocessing import Pool

    def _crawl(spider_name=None):
        # Each worker runs one spider through the scrapy command line
        if spider_name:
            os.system('scrapy crawl %s' % spider_name)
        return None

    def run_crawler():
        spider_names = ['spider1', 'spider2', 'spider2']
        # One worker process per spider
        pool = Pool(processes=len(spider_names))
        pool.map(_crawl, spider_names)


user47954

Updated on October 24, 2020

Comments

-

user47954 over 3 years

I'm new to Scrapy and I'm looking for a way to run it from a Python script. I found 2 sources that explain this:

http://tryolabs.com/Blog/2011/09/27/calling-scrapy-python-script/

http://snipplr.com/view/67006/using-scrapy-from-a-script/

I can't figure out where I should put my spider code and how to call it from the main function. Please help. This is the example code:

    # This snippet can be used to run scrapy spiders independent of scrapyd or the scrapy command line tool and use it from a script.
    #
    # The multiprocessing library is used in order to work around a bug in Twisted, in which you cannot restart an already running reactor or in this case a scrapy instance.
    #
    # [Here](http://groups.google.com/group/scrapy-users/browse_thread/thread/f332fc5b749d401a) is the mailing-list discussion for this snippet.

    #!/usr/bin/python
    import os
    os.environ.setdefault('SCRAPY_SETTINGS_MODULE', 'project.settings') #Must be at the top before other imports

    from scrapy import log, signals, project
    from scrapy.xlib.pydispatch import dispatcher
    from scrapy.conf import settings
    from scrapy.crawler import CrawlerProcess
    from multiprocessing import Process, Queue

    class CrawlerScript():

        def __init__(self):
            self.crawler = CrawlerProcess(settings)
            if not hasattr(project, 'crawler'):
                self.crawler.install()
            self.crawler.configure()
            self.items = []
            dispatcher.connect(self._item_passed, signals.item_passed)

        def _item_passed(self, item):
            self.items.append(item)

        def _crawl(self, queue, spider_name):
            spider = self.crawler.spiders.create(spider_name)
            if spider:
                self.crawler.queue.append_spider(spider)
            self.crawler.start()
            self.crawler.stop()
            queue.put(self.items)

        def crawl(self, spider):
            queue = Queue()
            p = Process(target=self._crawl, args=(queue, spider,))
            p.start()
            p.join()
            return queue.get(True)

    # Usage
    if __name__ == "__main__":
        log.start()

        """
        This example runs spider1 and then spider2 three times.
        """
        items = list()
        crawler = CrawlerScript()
        items.append(crawler.crawl('spider1'))
        for i in range(3):
            items.append(crawler.crawl('spider2'))
        print items

    # Snippet imported from snippets.scrapy.org (which no longer works)
    # author: joehillen
    # date  : Oct 24, 2010

Thank you.

-

Has QUIT--Anony-Mousse over 11 years

I replaced the inappropriate tag data-mining (= advanced data analysis) with web-scraping. To improve your question, make sure it includes: What did you try? And what happened when you tried?

-

Sjaak Trekhaak over 11 years

Those examples are outdated - they won't work with current Scrapy anymore.

-

user47954 over 11 years

Thanks for the comment. What do you suggest I do in order to call a spider from within a script? I'm using the latest Scrapy.

-

alecxe over 9 years

Cross-referencing this answer - should give you a detailed overview on how to run Scrapy from a script.

-

PlsWork about 5 years

AttributeError: module 'scrapy.log' has no attribute 'start'

-

Levon almost 5 years

Also check this answer for a one-file-only solution.

-

-

scharfmn almost 11 years

This works, but what do you do at the end? How do you get out of the reactor?

-

Sjaak Trekhaak almost 11 years

@CharlesS.: The answer at stackoverflow.com/a/14802526/968644 contains the information to stop the reactor

-

William Kinaan over 10 years

Where should I put the script, please?

-

Medeiros about 10 years

This won't use your custom settings. See this for the details: stackoverflow.com/a/19060578/1402286

-

Winston almost 9 years

I could run this program and see the output in the console. However, how can I get the output within Python? Thanks

-

danielmhanover almost 9 years

That is handled within the spider definition

-

Winston almost 9 years

Thanks, but I need more clarification. In the traditional way, I would write my own spider (similar to the BlogSpider on the official website) and then run "scrapy crawl myspider.py -o items.json -t json". All the needed data would be saved in a JSON file for further processing. I never got that done within the spider definition. Do you have a link for reference? Thank you very much

-

loremIpsum1771 almost 9 years

Are all of these spiders within the same project? I was trying to do something similar except with each spider in a different project (since I couldn't get the results to pipeline properly into their own database tables). Since I have to run multiple projects, I can't put the script in any one project.

-

Shadi about 8 years

Like @Winston, I couldn't find online documentation on how to return data from the spider to my Python code. Can you clarify what you mean by "handled within the spider definition"? @sddhhanover Thanks

-

Shadi about 8 years

I ended up using item loaders and attaching a function to the item scraped signal

-

Akshay Hazari almost 7 years

How could I pass an argument to MySpider?

-

kev over 6 years

This is not running scrapy from within a python script.

-

Kwame about 6 years

@AkshayHazari the process.crawl function will accept keyword arguments and pass them to your spider's __init__

-

softmarshmallow over 5 years

Is the parameter to CrawlerProcess optional?

-

Memphis Meng almost 4 years

It no longer works because log cannot be found in scrapy in version 2.2

-

Grzegorz Krug almost 4 years

How can I attach a pipeline to it?

-

ZF007 over 3 years

Add context to improve the quality of the answer. Keep in mind that 7 more answers were given before yours, and you want to draw attention to your "superior" solution. Perhaps to get rep as well. End of Review.