keepalived: 2nd VRRP_Script never seems to run

This is solved. The problem was a mistyped script name in the track_script section of the configuration file. I found it by running keepalived --dump-conf, which parsed the configuration file and output the results, and by tailing /var/log/messages, where I found an error about a missing track script.
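A name mismatch like this can also be caught with a small script. The following is a minimal sketch, not part of keepalived: `check_track_scripts` is a hypothetical helper that assumes the configuration is laid out with one statement per line (block names and closing braces on their own lines). It reports any name listed in a track_script block that has no matching vrrp_script definition.

```shell
#!/bin/bash
# Hypothetical helper (not a keepalived tool): list track_script entries
# that have no matching vrrp_script block in the given config file.
# Assumes one statement per line.
check_track_scripts() {
    local conf="$1"
    awk '
        # Strip keepalived comments, which start with # or !
        { sub(/[#!].*/, "") }
        # Remember every defined script name: "vrrp_script NAME {"
        $1 == "vrrp_script" { name = $2; sub(/[{].*/, "", name); defined[name] = 1; next }
        # Entering a track_script block
        $1 == "track_script" { in_track = 1; next }
        # A closing brace ends the track_script block
        in_track && index($0, "}") { in_track = 0; next }
        # Inside the block, the first field on each line is a script name
        in_track && NF > 0 { tracked[$1] = 1 }
        END {
            for (t in tracked)
                if (!(t in defined)) { print "undefined track_script: " t; bad = 1 }
            exit bad + 0
        }
    ' "$conf"
}
```

Calling `check_track_scripts /etc/keepalived/keepalived.conf` (the path is an assumption) returns a non-zero status if any tracked name is undefined, so it can be used as a pre-reload check.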

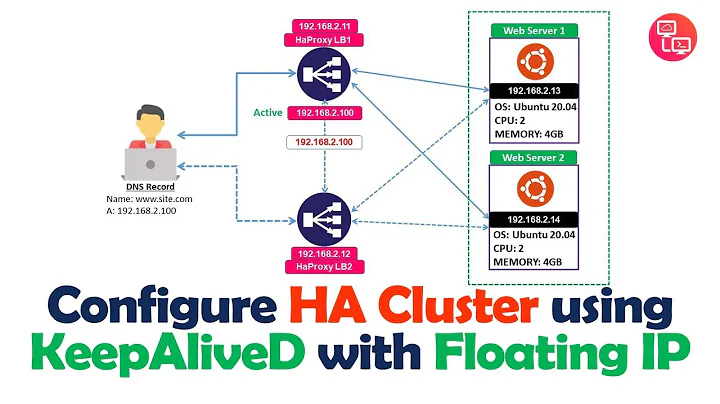

Related videos on Youtube

grahamjgreen

Updated on September 18, 2022


I'm trying to implement keepalived on three MongoDB boxes. The idea is that if mongod on one of the boxes goes down, or we need to move the primary node to another system for some reason, our application doesn't need to be reconfigured.

The keepalived.conf is pretty straightforward. There are two vrrp_script blocks: one checks that mongod is running, and the other is meant to execute a bash script that checks whether the local mongod instance is the primary node.

keepalived.conf

    !Configuration File for keepalived

    # Global definitions
    global_defs {
        notification_email {
            [email protected]
        }
        notification_email_from [email protected]
        smtp_server smtprelay.penton.com
        smtp_connect_timeout 30
    }

    # Check to see if mongod is running
    vrrp_script chk_mongod {
        script "killall -0 mongod"   # verify the pid exists
        interval 2                   # check every 2 seconds
        # weight 2                   # add 2 points if OK
    }

    # Check to see if this node is the primary
    vrrp_script chk_mongod_primary {
        script "/usr/local/bin/chk_mongo_primary.sh"
        interval 2
        # weight 2
    }

    # Virtual interface configuration
    vrrp_instance VI_1 {
        state MASTER
        interface eth0               #interface to monitor
        virtual_router_id 51
        priority 101                 # 101 on mater, 100 on backup
        virtual_ipaddress {
            192.168.122.99
        }
        track_script {
            chk_mongod
            chk_mongo_primary
        }
    }

If I shut the mongod service down on a node, the floating IP shifts to another box as I would expect, and I see output like this in /var/log/messages:

    Jul 17 16:23:34 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Script(chk_mongod) failed
    Jul 17 16:23:35 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) Entering FAULT STATE
    Jul 17 16:23:35 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) removing protocol VIPs.
    Jul 17 16:23:35 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) Now in FAULT state
    Jul 17 16:23:35 mongodbtest01 Keepalived_healthcheckers[30303]: Netlink reflector reports IP 192.168.122.99 removed

If I bring mongod back up, the IP moves back to that box (since it has priority):

    Jul 17 16:27:42 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Script(chk_mongod) succeeded
    Jul 17 16:27:43 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) prio is higher than received advert
    Jul 17 16:27:43 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) Transition to MASTER STATE
    Jul 17 16:27:44 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) Entering MASTER STATE
    Jul 17 16:27:44 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) setting protocol VIPs.
    Jul 17 16:27:44 mongodbtest01 Keepalived_vrrp[30304]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.122.99

Note the VRRP_Script(chk_mongod) entries in each log output when mongod goes down and comes back up. If, however, I reconfigure the replica set so that this node is no longer primary, I never see my VRRP_Script named chk_mongod_primary run, succeed, or fail. I have tested the script from the command line and it returns the expected result each time, but it never seems to be executed by keepalived.
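One way to check this observation is to pull the distinct script names out of the VRRP_Script(...) entries in the log. This is a rough sketch: `vrrp_scripts_in_log` is a hypothetical helper name, and it assumes the log format shown in the excerpts above.

```shell
#!/bin/bash
# Hypothetical helper: print each distinct script name that appears in a
# "VRRP_Script(NAME)" log entry of the given log file.
vrrp_scripts_in_log() {
    grep -o 'VRRP_Script([^)]*)' "$1" \
        | sed 's/^VRRP_Script(//; s/)$//' \
        | sort -u
}
```

For example, `vrrp_scripts_in_log /var/log/messages` lists only the scripts that have logged a state change. A name that never shows up is a hint, though not proof, that keepalived is not tracking that script.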

This is what /usr/local/bin/chk_mongo_primary.sh looks like:

    #!/bin/bash
    # Check to see if this node is master
    result=$(mongo --eval "printjson(db.isMaster().ismaster)" 2>&1)
    m_status=`echo $result | cut -d' ' -f 8`
    if [ "$m_status" == "true" ] ; then
        echo "I am primary"
        exit 0
    else
        echo "I am secondary"
        exit 1
    fi

I have tried various things and looked at other keepalived configurations to see if I could figure out where I am going wrong, but this has me stumped.

Can anyone provide clues as to where I am going wrong?

Thanks in advance.
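As an aside on the check script: picking the eighth space-separated field out of the output depends on the exact banner text the mongo shell prints. A possibly less brittle sketch follows. It assumes the mongo shell's --quiet option suppresses the banner so that only the evaluated value is printed. MONGO_CMD is a hypothetical override, added here only so the function can be tried without a live mongod.

```shell
#!/bin/bash
# Sketch of a primary check that avoids field counting.
# MONGO_CMD is a hypothetical override for the mongo shell binary.
chk_mongo_primary() {
    local status
    # With --quiet the output is assumed to be just "true" or "false"
    status=$("${MONGO_CMD:-mongo}" --quiet --eval 'db.isMaster().ismaster' 2>/dev/null)
    # Succeed (return 0) only when this node reports itself as primary
    [ "$status" = "true" ]
}
```

A script run by keepalived would call `chk_mongo_primary` as its last command, so that the function's return status becomes the script's exit status.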