mdadm: can't remove components in RAID 1

Solution 1

It's because the device nodes no longer exist on your system (probably udev removed them when the drive died). You should be able to remove them by using the keyword failed or detached instead:

mdadm -r /dev/md0 failed # all failed devices

mdadm -r /dev/md0 detached # failed ones that aren't in /dev anymore

If your version of mdadm is too old to do that, you might be able to get it to work by using mknod to recreate the device node. Or, honestly, just ignore it: it's not really a problem, and it should go away the next time you reboot.
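The mknod fallback needs the device numbers mdadm is still looking for. Below is a minimal sketch that reads mdadm --detail output on stdin and prints MAJOR:MINOR for every member row whose State column is "faulty". The function name faulty_devnums is hypothetical, and the sketch assumes the table layout shown in the question (Number, Major, Minor, RaidDevice, State, optional device name); other mdadm versions may lay that table out differently.

```shell
# Hypothetical helper: read `mdadm --detail` output on stdin and print
# MAJOR:MINOR for each member row whose State column is "faulty".
# Assumed row layout: Number Major Minor RaidDevice State [device]
faulty_devnums() {
    # Only rows starting with a numeric "Number" column are member rows;
    # this skips the header line and the "Key : value" summary lines.
    awk '$1 ~ /^[0-9]+$/ && $5 == "faulty" { print $2 ":" $3 }'
}
```

Usage: mdadm --detail /dev/md0 | faulty_devnums. For the --detail output in the question, this would print 8:49, 8:17 and 8:65, one per line.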

Solution 2

What I ended up doing was using mknod, as @derobert suggested, to create the device nodes that mdadm was looking for. I tried the major/minor numbers from mdadm's "Cannot find" errors with each of the drive letters I was trying to remove until one worked.

mknod /dev/sde1 b 8 17

Then I had to use the --force option to get it to remove the component.

mdadm /dev/md0 --remove --force /dev/sde1

Finally, I removed the block device node I had created.

rm /dev/sde1
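The error in the question reports only a device number (8:49), not a name. For classic SCSI/SATA disks, block major 8 covers the first 16 disks with 16 minors each: minor / 16 selects the disk letter and minor % 16 the partition (0 meaning the whole disk). A minimal sketch, with hypothetical function names sd_name and print_cleanup, that maps a number back to its original name and prints (without running) the mknod / remove / rm sequence above so it can be reviewed first. It assumes major 8 only; disks beyond sdp use other majors and are rejected.

```shell
# Hypothetical helper: map a major-8 (SCSI disk) device number back to
# its kernel name. Only sda..sdp are covered (minors 0-255).
sd_name() {
    major=$1
    minor=$2
    if [ "$major" -ne 8 ] || [ "$minor" -lt 0 ] || [ "$minor" -gt 255 ]; then
        echo "sd_name: unsupported device number $major:$minor" >&2
        return 1
    fi
    disk=$((minor / 16))   # 16 minors per disk
    part=$((minor % 16))   # 0 = whole disk, 1-15 = partition number
    # Pick the (disk+1)-th letter: 0 -> a, 1 -> b, ...
    letter=$(echo abcdefghijklmnop | cut -c $((disk + 1)))
    if [ "$part" -eq 0 ]; then
        echo "sd$letter"
    else
        echo "sd$letter$part"
    fi
}

# Hypothetical helper: print (but do not run) the cleanup sequence for
# one stale member, so it can be checked before pasting into a root shell.
print_cleanup() {
    md=$1
    major=$2
    minor=$3
    name=$(sd_name "$major" "$minor") || return 1
    echo "mknod /dev/$name b $major $minor"
    echo "mdadm $md --remove --force /dev/$name"
    echo "rm /dev/$name"
}
```

For example, sd_name 8 49 gives sdd1, which matches sdd1[6](F) in the question's /proc/mdstat output, and print_cleanup /dev/md0 8 49 prints the three commands for /dev/sdd1.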

Solution 3

You could also fix it just by decreasing the number of disks in the array:

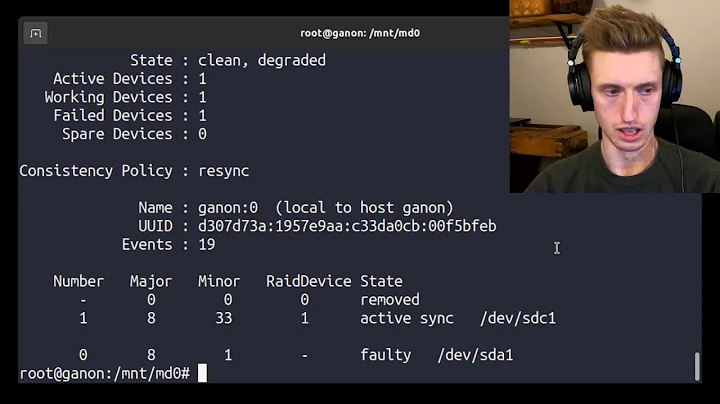

In my case I had a RAID 1 array, /dev/md0, with /dev/sda1 and a "removed" slot. I simply shrank it to use only one drive:

mdadm -G /dev/md0 --raid-devices=1 --force

After that, the "removed" entry was really gone (no more "removed" lines in mdadm --detail).
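If you go this route, it may be safer to take the target count from mdadm itself rather than typing it. A minimal sketch, with hypothetical function names active_devices and shrink_cmd, that pulls the "Active Devices" value out of mdadm --detail output and prints the grow command from this answer for review instead of running it. It assumes a RAID 1 array where every active member should be kept; check the RAID level first, and note that on a genuinely degraded array this reduces the number of slots available for a replacement drive.

```shell
# Hypothetical helper: print the "Active Devices" count from
# `mdadm --detail` output read on stdin.
active_devices() {
    # Split "   Active Devices : 3" on ": " and print the value.
    awk -F': *' '/^ *Active Devices/ { print $2; exit }'
}

# Hypothetical helper: print (do not run) the grow command from this
# answer, sized to the current number of active members.
shrink_cmd() {
    md=$1
    n=$(active_devices)
    if [ -z "$n" ]; then
        echo "shrink_cmd: no 'Active Devices' line on stdin" >&2
        return 1
    fi
    echo "mdadm -G $md --raid-devices=$n --force"
}
```

Usage: mdadm --detail /dev/md0 | shrink_cmd /dev/md0. For an array with a single active member, as in this answer, it prints the same command shown above.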


giuseppe maugeri

Updated on September 18, 2022

Comments


giuseppe maugeri over 1 year

I have my /boot partition in a RAID 1 array using mdadm. This array has degraded a few times in the past, and every time I remove the physical drive, add a new one, and bring the array back to normal, it uses a new drive letter, leaving the old one still in the array and failed. I can't seem to remove all those components that no longer exist.

[root@xxx ~]# cat /proc/mdstat
Personalities : [raid1]
md0 : active raid1 sdg1[10] sde1[8](F) sdb1[7](F) sdd1[6](F) sda1[4] sdc1[5]
      358336 blocks super 1.0 [4/3] [UUU_]

Here's what I've tried to remove the non-existent drives and partitions. For example, /dev/sdb1:

[root@xxx ~]# mdadm /dev/md0 -r /dev/sdb1
mdadm: Cannot find /dev/sdb1: No such file or directory
[root@xxx ~]# mdadm /dev/md0 -r faulty
mdadm: Cannot find 8:49: No such file or directory
[root@xxx ~]# mdadm /dev/md0 -r detached
mdadm: Cannot find 8:49: No such file or directory

That 8:49, I believe, refers to the major and minor number shown in --detail, but I'm not quite sure where to go from here. I'm trying to avoid a reboot or restarting mdadm.

[root@xxx ~]# mdadm --detail /dev/md0
/dev/md0:
        Version : 1.0
  Creation Time : Thu Aug 8 18:07:35 2013
     Raid Level : raid1
     Array Size : 358336 (350.00 MiB 366.94 MB)
  Used Dev Size : 358336 (350.00 MiB 366.94 MB)
   Raid Devices : 4
  Total Devices : 6
    Persistence : Superblock is persistent

    Update Time : Sat Apr 18 16:44:20 2015
          State : clean, degraded
 Active Devices : 3
Working Devices : 3
 Failed Devices : 3
  Spare Devices : 0

           Name : xxx.xxxxx.xxx:0 (local to host xxx.xxxxx.xxx)
           UUID : 991eecd2:5662b800:34ba96a4:2039d40a
         Events : 694

    Number   Major   Minor   RaidDevice State
       4       8        1        0      active sync   /dev/sda1
      10       8       97        1      active sync   /dev/sdg1
       5       8       33        2      active sync   /dev/sdc1
       6       0        0        6      removed

       6       8       49        -      faulty
       7       8       17        -      faulty
       8       8       65        -      faulty

Note: The array is legitimately degraded right now and I'm getting a new drive in there as we speak. However, as you can see above, that shouldn't matter. I should still be able to remove /dev/sdb1 from this array.

giuseppe maugeri about 9 years

I've tried using the keywords; you can see the output they gave me in the original post. I'll take a look at mknod. Yeah, it's probably not an issue, but I'm OCD, lol.

Avio about 8 years

This didn't work for me; mdadm continued to say "device or resource busy". But it made me try feeding it not a fake block device but a "true" block device, such as a loopback-mounted image. At that point I discovered that I had a stale /dev/loop device that was still using a file on the degraded array. I detached it and mdadm finally let me stop the array. Hooray! For everybody reading this: there is always a logical explanation for mdadm being such a jerk, so look for a stale process/file/mountpoint/NFS handle/open bash/loopback device/etc. still using the degraded array. :)

ILIV over 5 years

I was able to use exactly the same major and minor numbers (8:18 in my case) to mknod a fake /dev/sdb2 device. After that, mdadm --remove deleted the stale record of /dev/sdb2 from /proc/mdstat. Remember to rm /dev/sdb2 after a successful --remove.


ILIV over 5 years

You have to be careful with this approach, though. Understand well what type of RAID you're dealing with before modifying --raid-devices.


Scrooge McDuck over 2 years

This is exactly how to remove a failed drive.


Fmstrat about 2 years

Clarifying note: The OP did not state he used "failed". I got the same errors they did, but "failed" worked for me. They used "faulty".