Unable to remove the software raid-1 array when it is in degraded state

First, you need to understand how the layers stack. Partitions sit on top of the whole disk sda, software RAID sits on top of partitions, and so on, as in this diagram:

Disk sda -> partition sda4 -> software RAID md0 -> LVM physical volume -> LVM volume group vg0 -> LVM logical volume -> filesystem -> system mount point.

You can't unmount the root filesystem from a command line that is running from it. That's why you need to boot a Linux system from CD/DVD. You can use the install CD of the same Linux distribution or the latest version of SystemRescueCD. After booting from the CD, you need to check:

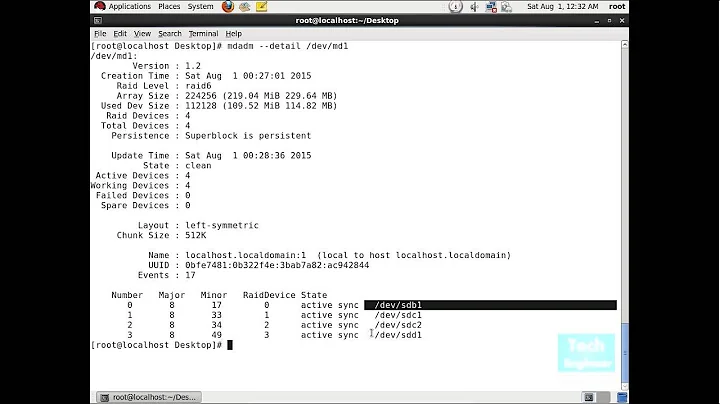

- Is the software RAID started, and what is its state? Check with `cat /proc/mdstat`. The RAID device `md` may have a different number.

- Is the LVM volume group active? Check with `vgdisplay`.

- Are filesystems (on LVM volumes) mounted? Check with `mount`.
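The checks above are read-only and can be run straight from the live CD. As a minimal sketch, the helper below (a hypothetical name, `degraded_arrays`, not part of mdadm) reads `/proc/mdstat`-style text on standard input and prints each array whose member map, such as `[U_]`, shows a missing device. It assumes the usual mdstat layout, where the map appears on the line containing `blocks`.

```shell
# Hypothetical helper: list md arrays that are running degraded.
# Reads /proc/mdstat-style text on stdin. Assumes the member map
# ([UU], [U_], ...) sits on the "blocks" line after each "mdN :" line.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        /blocks/ && match($0, /\[[U_]+\]/) {
            map = substr($0, RSTART, RLENGTH)
            # An underscore marks a slot with no working member.
            if (index(map, "_") > 0) print name
        }
    '
}
```

On the live system this would be called as `degraded_arrays < /proc/mdstat`, with `vgdisplay` and `mount` covering the other two checks.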

Then unmount all filesystems that reside on LVM volumes with `umount`, deactivate the LVM volume group vg0 with `vgchange -a n vg0`, shut down the RAID array with `mdadm --stop /dev/md0`, remove the RAID device with `mdadm --remove /dev/md0`, and only then zero the superblock on sda4 with `mdadm --zero-superblock /dev/sda4`.
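The order matters because each layer holds the one below it open. As a minimal sketch, the function below replays the same commands from the top layer down. The names `run` and `teardown_raid` and the `DRY_RUN` variable are hypothetical helpers introduced here, and the mount points are passed in by the caller because they depend on where the live CD mounted the volumes. By default it only prints what it would do.

```shell
# Hypothetical wrapper: print the command when DRY_RUN=1 (the default),
# execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Usage: teardown_raid VG MD_DEVICE MEMBER_PARTITION [MOUNTPOINT...]
teardown_raid() {
    vg=$1; md=$2; part=$3
    shift 3
    # 1. Unmount every filesystem that lives on the volume group.
    for mp in "$@"; do
        run umount "$mp" || return 1
    done
    # 2. Deactivate the volume group so LVM releases the md device.
    run vgchange -a n "$vg" || return 1
    # 3. Stop the array.
    run mdadm --stop "$md" || return 1
    # 4. Kept from the answer. Assumption: once the array is stopped the
    #    device node may already be gone, so a failure here is tolerated.
    run mdadm --remove "$md" || true
    # 5. Only now wipe the RAID superblock on the member partition.
    run mdadm --zero-superblock "$part"
}
```

For example, `teardown_raid vg0 /dev/md0 /dev/sda4 /mnt/root` prints the five commands in order; running it with `DRY_RUN=0` executes them, so the backup has to exist before that.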

Before doing any of this, back up all files on the filesystems of all LVM volumes.

You may also need to restore the GRUB boot loader afterwards.
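One simple way to take such a backup from the live CD is a tar archive per filesystem. This is only a sketch: `backup_fs` is a hypothetical helper, and the paths in the usage note are assumptions about where the volumes and the backup target are mounted.

```shell
# Hypothetical helper: archive one mounted filesystem into a
# gzip-compressed tarball.
# $1 = mount point of the LV, $2 = destination directory, $3 = archive name.
backup_fs() {
    src=$1; dest=$2; name=$3
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    mkdir -p "$dest" || return 1
    # -C switches into the mount point so archive paths are relative.
    tar -czf "$dest/$name.tar.gz" -C "$src" .
}
```

With the root volume mounted at `/mnt/root` and an external disk at `/mnt/usb` (both assumed), the call would be `backup_fs /mnt/root /mnt/usb root`. The destination must not be inside the source directory.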

Updates:

Before restoring the boot loader you need to restore LVM! Boot your system again from SystemRescueCD. Run fdisk /dev/sda and press:

t (type)

4

8e (Linux LVM)

w
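The same keystrokes can be fed to fdisk on standard input instead of being typed. A minimal sketch, where `fdisk_set_type_keys` is a hypothetical helper that only prints the key sequence; it assumes fdisk asks for the partition number and then the hex code, which is the order used above.

```shell
# Hypothetical helper: emit the fdisk keystrokes, one per line.
# $1 = partition number, $2 = hex partition type (8e = Linux LVM).
fdisk_set_type_keys() {
    printf 't\n%s\n%s\nw\n' "$1" "$2"
}
```

It would be used as `fdisk_set_type_keys 4 8e | fdisk /dev/sda`. Printing the keys first and reading them back is a cheap check before anything is written to the partition table.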

Then run testdisk:

create new log file

select /dev/sda and press Proceed

select Intel/PC partition type

select Analyse

select Backup (the first time you run `testdisk`) or select Quick Search and press Enter

select Deeper Search

select the Linux LVM entry with the highest start CHS values, press the space key to mark this found structure as a Primary partition, and press Enter

select Write

Then store testdisk's backup somewhere with scp backup.log user@somehost:~

and reboot again from SystemRescueCD.

After the reboot you should see your volume group vg0 with vgdisplay. If you don't, run testdisk again, load testdisk's backup, and start again with another Linux LVM partition that it found.
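Whether the volume group came back can also be checked in a script. As a minimal sketch, `vg_visible` below is a hypothetical helper that reads a list of volume group names on standard input, in the form printed by `vgs --noheadings -o vg_name`, and succeeds only if the requested name is present.

```shell
# Hypothetical helper: succeed if volume group $1 appears in the list
# of VG names read from stdin (one name per line, leading spaces allowed).
vg_visible() {
    awk -v want="$1" '$1 == want { found = 1 } END { exit (found ? 0 : 1) }'
}
```

A possible use after the reboot is `vgs --noheadings -o vg_name | vg_visible vg0 && vgchange -a y vg0`, which activates vg0 only when LVM can actually see it.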

After successfully restoring LVM you can restore the boot loader as described at Ubuntu Boot repair.
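If Boot-Repair does not help, GRUB is often reinstalled by hand from a chroot. The sketch below is not the Boot-Repair procedure, only a common manual alternative, and it rests on assumptions: `/boot` lives on the root logical volume, the system is Ubuntu (so `update-grub` exists), and `run`, `reinstall_grub`, `DRY_RUN`, and the default mount point `/mnt/target` are hypothetical names introduced here. By default it only prints the commands.

```shell
# Hypothetical wrapper: print the command when DRY_RUN=1 (the default),
# execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Usage: reinstall_grub ROOT_LV DISK [MOUNTPOINT]
reinstall_grub() {
    root_lv=$1; disk=$2; mnt=${3:-/mnt/target}
    run mkdir -p "$mnt" || return 1
    run mount "$root_lv" "$mnt" || return 1
    # The chroot needs the live system's device, process and sysfs trees.
    for fs in dev proc sys; do
        run mount --bind "/$fs" "$mnt/$fs" || return 1
    done
    # Write GRUB to the disk's boot sector, then regenerate its config.
    run chroot "$mnt" grub-install "$disk" || return 1
    run chroot "$mnt" update-grub
}
```

For the layout in this thread the call would be `reinstall_grub /dev/vg0/root /dev/sda`, which requires that vg0 is visible and active first.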


Sunny

Updated on September 18, 2022

Comments

-

Sunny over 1 year

I want to remove the software RAID-1 array (while it is in a degraded state) configured under LVM on a Linux system, and I am unable to remove it. I even tried using a Knoppix live CD to remove the RAID-1 associated with LVM, but this attempt also failed. When I analyzed the issue, I found that LVM is configured on top of the RAID and the logical volume (vg0-root) is mounted as the "/" filesystem. Could you please suggest a way to delete this RAID-1 array without loss of data?

Please find the system configuration below:

    root@:~# fdisk -l

    Disk /dev/sdb: 500.1 GB, 500107862016 bytes
    255 heads, 63 sectors/track, 60801 cylinders, total 976773168 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disk identifier: 0x000bb738

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1            2048    34613373    17305663   da  Non-FS data
    /dev/sdb4   *    34613374   156248189    60817408   fd  Linux raid autodetect
    Partition 4 does not start on physical sector boundary.

    Disk /dev/sda: 500.1 GB, 500107862016 bytes
    255 heads, 63 sectors/track, 60801 cylinders, total 976773168 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disk identifier: 0x000bb738

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1            2048    34613373    17305663   da  Non-FS data
    /dev/sda4   *    34613374   156248189    60817408   fd  Linux raid autodetect
    Partition 4 does not start on physical sector boundary.
    root@:~# lsblk
    NAME                    MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
    sda                       8:0    0 465.8G  0 disk
    ├─sda1                    8:1    0  16.5G  0 part
    └─sda4                    8:4    0    58G  0 part
      └─md0                   9:0    0    58G  0 raid1
        ├─vg0-swap (dm-0)   252:0    0   1.9G  0 lvm   [SWAP]
        ├─vg0-root (dm-1)   252:1    0  19.6G  0 lvm   /
        └─vg0-backup (dm-2) 252:2    0  19.6G  0 lvm
    sdb                       8:16   0 465.8G  0 disk
    ├─sdb1                    8:17   0  16.5G  0 part
    └─sdb4                    8:20   0    58G  0 part

    root@S761012:~# cat /proc/mdstat
    Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
    md0 : active raid1 sda4[0]
          60801024 blocks super 1.2 [2/1] [U_]

    unused devices: <none>

    root@:~# mdadm --detail /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Wed Sep 23 02:59:04 2015
         Raid Level : raid1
         Array Size : 60801024 (57.98 GiB 62.26 GB)
      Used Dev Size : 60801024 (57.98 GiB 62.26 GB)
       Raid Devices : 2
      Total Devices : 1
        Persistence : Superblock is persistent

        Update Time : Tue Mar 7 23:38:20 2017
              State : clean, degraded
     Active Devices : 1
    Working Devices : 1
     Failed Devices : 0
      Spare Devices : 0

               Name : Raja:0
               UUID : 8b007464:369201ca:13634910:1d1d4bbf
             Events : 823063

        Number   Major   Minor   RaidDevice State
           0       8        4        0      active sync   /dev/sda4
           1       0        0        1      removed

    root@:~# mdadm --manage /dev/md0 --fail /dev/sda4
    mdadm: set device faulty failed for /dev/sda4: Device or resource busy
    root@:~# mdadm --manage /dev/md0 --remove /dev/sda4
    mdadm: hot remove failed for /dev/sda4: Device or resource busy
    root@:~# mdadm --stop /dev/md0
    mdadm: Cannot get exclusive access to /dev/md0:Perhaps a running process, mounted filesystem or active volume group?
    root@:~# pvdisplay
      --- Physical volume ---
      PV Name               /dev/md0
      VG Name               vg0
      PV Size               57.98 GiB / not usable 3.00 MiB
      Allocatable           yes
      PE Size               4.00 MiB
      Total PE              14843
      Free PE               4361
      Allocated PE          10482
      PV UUID               uxH3FS-sUOF-LsIP-kAjq-7Bwq-suhK-CLJXI1

    root@:~# lvdisplay
      --- Logical volume ---
      LV Path                /dev/vg0/swap
      LV Name                swap
      VG Name                vg0
      LV UUID                BIwp5H-NYlf-drQJ-12Vf-5qYM-7NUj-ty9GhE
      LV Write Access        read/write
      LV Creation host, time S000001, 2015-09-23 03:00:58 +0000
      LV Status              available
      # open                 2
      LV Size                1.86 GiB
      Current LE             476
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           252:0

      --- Logical volume ---
      LV Path                /dev/vg0/root
      LV Name                root
      VG Name                vg0
      LV UUID                SBf1mc-iqaB-noBx-1neo-IEPi-HhsH-SM14er
      LV Write Access        read/write
      LV Creation host, time S000001, 2015-09-23 03:01:19 +0000
      LV Status              available
      # open                 1
      LV Size                19.54 GiB
      Current LE             5003
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           252:1

      --- Logical volume ---
      LV Path                /dev/vg0/backup
      LV Name                backup
      VG Name                vg0
      LV UUID                w1jGGy-KkfJ-0lDp-MFDl-8BJU-uJWU-24XKSL
      LV Write Access        read/write
      LV Creation host, time SRAJA, 2016-10-22 05:30:03 +0000
      LV Status              available
      # open                 0
      LV Size                19.54 GiB
      Current LE             5003
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           252:2

    root@:~# vgdisplay
      --- Volume group ---
      VG Name               vg0
      System ID
      Format                lvm2
      Metadata Areas        1
      Metadata Sequence No  674
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                3
      Open LV               2
      Max PV                0
      Cur PV                1
      Act PV                1
      VG Size               57.98 GiB
      PE Size               4.00 MiB
      Total PE              14843
      Alloc PE / Size       10482 / 40.95 GiB
      Free  PE / Size       4361 / 17.04 GiB
      VG UUID               LjCUyX-25MQ-WCFT-j2eF-2UWX-LYCp-TtLVJ5

Lastly, I tried:

    root@S761012:~# mdadm --zero-superblock /dev/md0
    mdadm: Couldn't open /dev/md0 for write - not zeroing
    root@:~# umount /dev/md0
    umount: /dev/md0: not mounted
    root@:~#
    root@:~# mdadm --manage /dev/md0 --remove /dev/sda4
    mdadm: hot remove failed for /dev/sda4: Device or resource busy
    root@:~# mdadm --stop /dev/md0
    mdadm: Cannot get exclusive access to /dev/md0:Perhaps a running process, mounted filesystem or active volume group?
    root@:~# mdadm --zero-superblock /dev/sda4
    mdadm: Couldn't open /dev/sda4 for write - not zeroing

Since sda4 is the active disk, it is not allowing me to do any operations. Could you please suggest any other ways to achieve the same.

- Tried with the Knoppix live CD and the GParted live CD; the result is the same.

I tried the steps as mentioned:

create new log file

select /dev/sda and press Proceed

select Intel/PC partition type

select Analyse

select Backup (at first starting `testdisk`) or select Quick Search and press Enter

select Deeper Search

After the deeper search, nothing is shown: "No partition found or selected for recovery". The list is empty. Because of this, Boot-Repair is also not working as expected; the machine always ends up in grub rescue mode.

-

Sunny about 7 years

Thanks, and sorry for the late response. I tried the suggestion above, but I am still facing the same errors.

    root@:~# umount /dev/md0
    umount: /dev/md0: not mounted
    root@:~#
    root@:~# mdadm --manage /dev/md0 --remove /dev/sda4
    mdadm: hot remove failed for /dev/sda4: Device or resource busy
    root@:~# mdadm --stop /dev/md0
    mdadm: Cannot get exclusive access to /dev/md0:Perhaps a running process, mounted filesystem or active volume group?
    root@:~# mdadm --zero-superblock /dev/sda4
    mdadm: Couldn't open /dev/sda4 for write - not zeroing

-

Sunny about 7 years

The mdadm --stop /dev/md0 command worked using SystemRescueCD. Are there any tools in SystemRescueCD to back up LVM filesystems? If so, could you please let me know.

-

Mikhail Khirgiy about 7 years

You can use tar, gzip, scp, rsync, ftp, dd, FSArchiver, PartImage, or GParted.

-

George Erhard about 7 years

Looks like the reason is what Mikhail pointed out: even in single-user mode, your kernel is still using the drive (because that's where it was booted from). So you'll need to bring the system up using a USB or CD live boot, and then work with the array.

-

Sunny about 7 years

After I ran mdadm --zero-superblock /dev/sda4, the Linux machine drops to the grub rescue> prompt and the boot loader is corrupted. I tried the Boot-Repair disk, still no success. Do you have any suggestions for recovering from this?

-

Mikhail Khirgiy about 7 years

What Linux version was on your HDD?

-

Sunny about 7 years

Ubuntu 14.04 LTS, kernel 3.13-0-85

-

Sunny about 7 years

Can you help me reinstall GRUB after the mdadm --zero-superblock /dev/sda4 command?

-

Mikhail Khirgiy about 7 years

I'm sorry for my late answer. I was very busy.

-

Sunny about 7 years

Thanks a lot for your help. I will try and let you know the result soon.

-

Sunny about 7 years

I followed the steps as mentioned. In testdisk, after Quick Search and Deeper Search, I see the message "No partition is found". But after Analyse, I could see p sys=DA and a "No LVM or LVM2 structure" message, followed by * Linux LVM data repeated two times.

-

Mikhail Khirgiy about 7 years

Then you need to recover from backup.

-

Mikhail Khirgiy about 7 years

It's a pity. I tested this on a fresh Ubuntu 14.04 installation.

-

wvxvw over 2 years

Came from a web search hoping to find information about how to remove a failed MD RAID; found none in this answer.