mdadm raid1 and what chunksize (or blocksize) on 4k drives?

Chunk size does not apply to raid1 because there is no striping; essentially the entire disk is one chunk. In short, you do not need to worry about the 4k physical sector size. Recent versions of mdadm use the information from the kernel to make sure that the start of the data area is aligned to a 4 KiB boundary. Just make sure you are using a 1.x metadata format.
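
To check the alignment claim on an existing array member, one option is to read the `Data Offset` line that `mdadm -E` prints for 1.x metadata and test whether it falls on a 4096-byte boundary. The following is a minimal sketch, not an official procedure: the helper names `data_offset_sectors` and `is_4k_aligned` are hypothetical, and the offset is assumed to be reported in 512-byte sectors.

```shell
# Hypothetical helper: print the "Data Offset" value from `mdadm -E` output
# supplied on stdin (assumed to look like "Data Offset : 2048 sectors").
data_offset_sectors() {
    awk '/Data Offset/ { print $4; exit }'
}

# Hypothetical helper: succeed if an offset given in 512-byte sectors
# lands on a 4096-byte boundary.
is_4k_aligned() {
    [ $(( $1 * 512 % 4096 )) -eq 0 ]
}
```

As root, something like `off=$(mdadm -E /dev/sdb | data_offset_sectors); is_4k_aligned "$off" && echo aligned` would then report the result for the member device.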

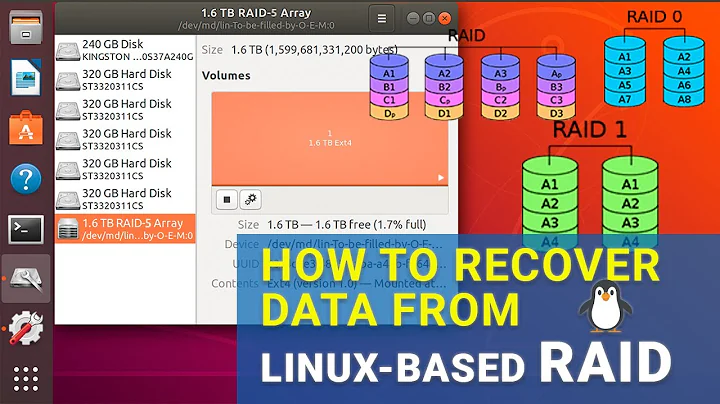

Related videos on Youtube

maxschlepzig

My name is Georg Sauthoff. 'Max Schlepzig' is just a silly old pseudonym (I am hesitant to change it because existing @-replies will not be updated). I studied computer science. In my current line of work, I work on trading system software and thus care about low latency.

Updated on September 18, 2022

Comments

-

maxschlepzig over 1 year

I want to use two 3 TB drives in a mdadm raid1 setup (using Debian Squeeze).

The drives use 4k hardware sectors instead of the traditional 512 byte ones.

I am a bit confused because on the one hand the kernel reports:

    $ cat /sys/block/sdb/queue/hw_sector_size
    512

But on the other hand `fdisk` reports:

    # fdisk -l /dev/sdb
    Disk /dev/sdb: 3000.6 GB, 3000592982016 bytes
    255 heads, 63 sectors/track, 364801 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Thus, it seems that the kernel has some idea that the drive uses 4k sectors.

The `mdadm` man page is a bit cryptic about the chunk size and raid1:

    -c, --chunk=
        Specify chunk size of kibibytes. The default when creating an array is 512KB. To ensure compatibility with earlier versions, the default when Building and array with no persistent metadata is 64KB. This is only meaningful for RAID0, RAID4, RAID5, RAID6, and RAID10.

Why is it not meaningful for raid1?

Looking at `/proc/mdstat`, the raid1 device md8 has 2930265424 blocks, i.e.

    3000591794176/2930265424/2 = 512

Does `mdadm` then use a blocksize of 512 bytes? (/2 because it is a two-way mirror)

And is chunk-size a different concept than blocksize?
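
The division above can be rechecked under a different reading of the units. The sketch below assumes that `/proc/mdstat` counts blocks of 1 KiB, which is my understanding of the md driver and is not stated in the output itself. On that assumption the factor of 2 comes from 1024 = 2 * 512, not from the two-way mirror. The function name `mdstat_blocks_to_bytes` is hypothetical.

```shell
# Hypothetical helper: convert a /proc/mdstat block count to bytes,
# assuming each block is 1 KiB (1024 bytes).
mdstat_blocks_to_bytes() {
    echo $(( $1 * 1024 ))
}
```

Calling `mdstat_blocks_to_bytes 2930265424` prints 3000591794176, the same byte count used in the division above, so the array is the size of one disk and the block count says nothing about the I/O size md uses.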

Trying to let `mdadm` explain a device:

    # mdadm -E /dev/sdb -v -v
    Avail Dev Size : 5860531120 (2794.52 GiB 3000.59 GB)
    Array Size : 5860530848 (2794.52 GiB 3000.59 GB)

Where

    3000591794176/5860530848 = 512

With a default `mkfs.xfs` on the md device, it reports:

    sectsz=512
    bsize=4096

I corrected this with a call of

    mkfs.xfs -s size=4096 /dev/md8

Edit: Testing around a bit, I noticed the following things:

It seems that the initial resync is done with a block size of 128k (and not 512 bytes):

    md: resync of RAID array md8
    md: minimum _guaranteed_ speed: 1000 KB/sec/disk.
    md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for resync.
    md: using 128k window, over a total of 2930265424 blocks.

The displayed speed via `/proc/mdstat` is consistent for that blocksize (for 512 bytes one would expect a performance hit):

    [>....................] resync = 3.0% (90510912/2930265424) finish=381.1min speed=124166K/sec

(For example when disabling the write cache the displayed speed immediately drops to 18 MB/sec)
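
The figures in that progress line are internally consistent, which can be checked with a little integer arithmetic. This is a rough sketch that assumes the block counts and the `K/sec` speed use the same 1 KiB unit; the function name `resync_eta_minutes` is hypothetical.

```shell
# Hypothetical helper: estimate the remaining resync time in whole minutes.
# Arguments: total blocks, blocks already done, speed in K/sec
# (all assumed to be in the same 1 KiB unit).
resync_eta_minutes() {
    total=$1
    done_blocks=$2
    speed=$3
    echo $(( (total - done_blocks) / speed / 60 ))
}
```

With the values shown, `resync_eta_minutes 2930265424 90510912 124166` prints 381, in line with the reported `finish=381.1min`.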

Under `/sys` there are actually some more relevant files besides `hw_sector_size`:

    # cat /sys/block/sdb/queue/physical_block_size
    4096
    # cat /sys/block/sdb/queue/logical_block_size
    512

That means that the drive does not lie to the kernel about its 4k sector size and the kernel has some 4k sector support (as the output of `fdisk -l` suggested).

Googling around turned up a few reports about WD disks that do not report the 4k size. Fortunately this 3 TB WD disk does not do that; perhaps WD fixed their firmware in current disks.
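
The two sysfs values can be compared in one step to tell a 512-byte-emulating drive from one with native sectors. The following is a small sketch: the function name `sector_layout` is hypothetical, and it only reads the two files shown above from whatever queue directory it is given.

```shell
# Hypothetical helper: describe a drive's sector layout from its sysfs
# queue directory, e.g. /sys/block/sdb/queue.
sector_layout() {
    queue_dir=$1
    logical=$(cat "$queue_dir/logical_block_size") || return 1
    physical=$(cat "$queue_dir/physical_block_size") || return 1
    if [ "$logical" -eq "$physical" ]; then
        echo "native ${logical}-byte sectors"
    else
        echo "${logical}-byte logical on ${physical}-byte physical sectors"
    fi
}
```

For the drive in question, `sector_layout /sys/block/sdb/queue` should print `512-byte logical on 4096-byte physical sectors`.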

-

AveryFreeman over 2 years

If using an mdadm mirror for iSCSI for ESXi, and the VMFS block size is 1M, is it best to align to 1M for iSCSI for VMFS, or does iSCSI change alignment with overhead?
