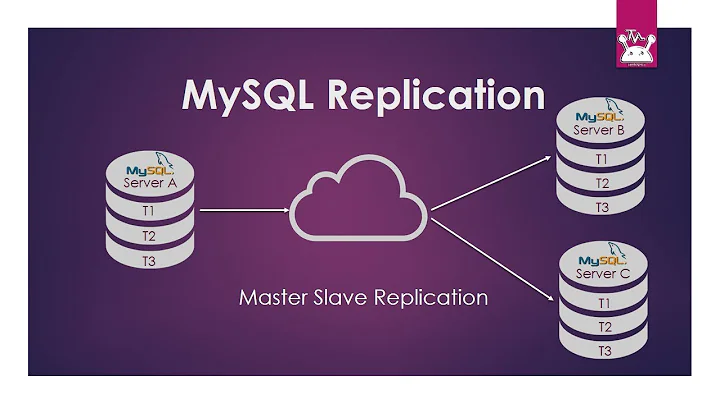

MySQL Replication Performance

Solution 1

Wow, you have some awfully beefy hardware for this problem. There's not much more you can throw at it hardware wise, with the exception of upgrading to maybe Sandy/Ivy Bridge CPUs for 20-50% better performance out of Btree searches, etc.

Please note that my forte is Innodb, so I'm going to

- Ignore that you're myisam and act as if it won't make a difference.

- Assume this problem is enough impetus to get you to upgrade. Yes, it is an upgrade.

Innodb can help take great advantage of all that memory by storing these frequently accessed rows in its buffer pool. You can tune it to be as large as you want (say 80% of memory) and fresh reads/writes remain in memory until it needs to push them to disk to make more room for latest accessed data. In memory is an order of magnitude faster than your FusionIOs.

There are many more Innodb features, such as adaptive hashes, auto-inc locking mechanisms, etc. that can be a boon to your environment. You, however, know your data better than I do.

In the innodb world, an good short term solution is to optimize your slave - do you really need every index on your slave that you have on your master? Indexes are a ball and chain on inserts/updates/deletes, EVEN with Fusion IO cards. IOPS are not everything here. Sandy/Ivy bridge procs have much better memory throughput and computing performance - they can make a huge difference of the Westmeres you have now. (Figure 20-50% overall). Remove all indexes you don't need on the slave!

Second, and almost certainly applies to innodb only, is that mk-prefetch can know which updates and before the slave writes them. This allows mk-prefetch to run a read query first, thereby forcing the data to be in memory by the time the the single repl runs the write query. This means the data is in memory and not in fusionIO, a quick order of magnitude performance gain. This makes a HUGE difference, more than one might expect. Lots of companies use this as a permanent solution. Find out more by checking out the Percona Toolkit.

Third, and most importantly, once you've upgraded to Innodb, definitely checkout Tokutek. These guys have some wickedly awesome stuff that exceeds the write/update/delete performance of Innodb by a long shot. They tout improved replication speed as one of the key benefits, and you can see from their benchmarks why Fusions crazy IOPS still won't help you in the case of Btrees. (Note: Not independently verified by me.) They use a drop-in replace of a btree index that, while hideously more complex, ameliorates many of the algorithmic speed limitations of btree indexes.

I am in the process of considering an adoption of Tokutek. If they free up so much write speed, that allows me to add more indexes. Since they compress the data and indexes at such wonderful ratios (25x they quote), you don't even pay a (performance, maintenance) price for increased data. You do pay ($) for their engine though, $2500/year per pre-compressed GB, IIRC. They have discounts if you have the data replicated, but you can even just install Tokutek on your slave and keep your master as-is. Check out the technical details in the MIT Algoritms Open Courseware lecture. Alternatively, they have tons of technical stuff on their blog and regular whitepapers for those who don't have 1:20 to watch the video. I believe this video also gives the Big-O formula for how fast reads are. I have to assume that reads are slower (There's always a tradeoff!), but the formula is too complex for me to gauge how much. They claim it's roughly the same, but I'd rather understand the math (not likely!). You may be in a better situation to discover this than I am.

P.s. I am not affiliated with Tokutek, I have never run their product and they don't even know I'm looking at them.

Update:

I see you have some other questions on this page and thought I'd chip in:

First, slave pre-fetch almost certainly will not work for myisam unless you have an exceptional environment. This is mostly because the prefetching will be locking the very tables you intend to write to, or the slave thread has the table locked that the pre-fetch daemon needs. If your tables are extremely well balanced for replication and different tables are being written to in a round-robin fashion, this may work - but keep in mind this is very theoretical. The book "High Performance Mysql" has more information in the "Replication Problems" section.

Second, presumably your slave holds a load of 1.0-1.5, it may be higher if you have other procs or queries running but a baseline of 1.0. This means you are likely CPU bound, which is likely with your FusionIO on board. As I mentioned earlier, Sandy/Ivy Bridge is going to give a little bit more oomph, but probably not enough to get you through the rougher times with minimal lag. If the load on this slave is mostly write-only (i.e. not many reads), your CPU is almost certainly spending it's time calculating positions for btree insertions/deletions. This should reinforce my point above about removing non-critical indexes - you can always re-add them later. Disabling hyperthreading will not work, more CPU is not your enemy. Once you get above 32GB ram, say 64GB, you need to worry about ram distribution, but even then the symptoms are different.

Finally, and most importantly (don't skip this part;)), I'm assuming you're now running RBR (Row based replication) because you mentioned a non-trivial performance increase when switching too it. However - there may be a way to get even more performance here. Mysql bug 53375 can manifest if you have tables being replicated with no primary key. The slave is basically not smart enough to use anything but a primary key, so the absence of one forces the replication thread to do a full table scan for every update. A fix is simply adding a benign, surrogate autoincrementing primary key. I'd only do this if the table were large (say several 10s of thousands rows or larger). This, of course, comes at the cost of having another index on the table, which brings up the price you pay in CPU. Note there are very few theoretical arguments against this, as InnoDB adds one behind the scenes if you don't. The phantom one, however, is not a useful defense against 53375. Tungsten can overcome this problem too, but you need to be sure when using Tungsten that you have your encoding straight. The last time I played with it, it would die horribly when any non-UTF8 string needed replicating. That's about the time I gave up on it.

Solution 2

not an answer but you might consider tungsten replicator and their commercial products for more flexibility. is it 100%cpu usage on single core that is the bottleneck?

Solution 3

So if you are doing backups on the slave.. and you use myiasm tables.. you are locking the tables to do the backups to prevent corruption. So replication can't work until the backup is complete.. then it catches up.

Related videos on Youtube

Nick

Updated on September 18, 2022Comments

-

Nick over 1 year

I'm having a serious issue with MySQL 5.5 replication performance between two machines, mostly myISAM tables with statement based replication. The binary logs and mysql data directory are both located on the same Fusion ioDrive.

The problem was a big issue recently when we needed to pause replication for approx. 3 hours. It took about 10 hours to catch up again with no other load.

How can I increase the performance of the replication? Machine B is basically idle (little, IO, 2 maxed out cores out of 16, lots of free RAM), as only 1 mySQL thread was writing data. Here are some ideas I had:

- Switch to row-based replication. In tests this only yielded a 10-20% performance boost

- Upgrade to mySQL 5.6 with multi-threaded replication. We could easily split our data into separate databases, and benchmarks seems to indicate this would help, but the code doesn't seem production ready.

- Some configuration variables that will help speed up replication

The primary issue is that if it takes 10h to catch up after pausing for 3h, this means that replication is writing 13h of data in 10h, or is able to write at 130% of the speed of data coming in. I'm looking to at least double writes on the Master machine in the near future, so desperately need a way to improve replication performance.

Machine A:

- Master

- 24GB Ram

- 1.2TB Fusion ioDrive2

- 2x E5620

- Gigabit interconnect

my.cnf:[mysqld] server-id=71 datadir=/data_fio/mysqldata socket=/var/lib/mysql/mysql.sock tmpdir=/data_fio/mysqltmp log-error = /data/logs/mysql/error.log log-slow-queries = /data/logs/mysql/stats03-slowquery.log long_query_time = 2 port=3306 log-bin=/data_fio/mysqlbinlog/mysql-bin.log binlog-format=STATEMENT replicate-ignore-db=mysql log-slave-updates = true # Performance Tuning max_allowed_packet=16M max_connections=500 table_open_cache = 2048 max_connect_errors=1000 open-files-limit=5000 # mem = key_buffer + ( sort_buffer_size + read_buffer_size ) * max_connections key_buffer=4G max_heap_table_size = 1G tmp_table_size = 4G myisam_sort_buffer_size = 256M sort_buffer_size=4M read_buffer_size=2M query_cache_size=16M query_cache_type=2 thread_concurrency=32 user=mysql symbolic-links=0 [mysqld_safe] log-error=/var/log/mysqld.log pid-file=/var/run/mysqld/mysqld.pid [mysql] socket=/var/lib/mysql/mysql.sock [client] socket=/var/lib/mysql/mysql.sockMachine B:

- Slave

- 36GB Ram

- 1.2TB Fusion ioDrive2

- 2x E5620

- Gigabit interconnect

my.cnf:[mysqld] server-id=72 datadir=/data_fio/mysqldata socket=/var/lib/mysql/mysql.sock tmpdir=/data_fio/mysqltmp log-error = /data/logs/mysql/error.log log-slow-queries = /data/logs/mysql/stats03-slowquery.log long_query_time = 2 port=3306 # Performance Tuning max_allowed_packet=16M max_connections=500 table_open_cache = 2048 max_connect_errors=1000 open-files-limit=5000 # mem = key_buffer + ( sort_buffer_size + read_buffer_size ) * max_connections key_buffer=4G max_heap_table_size = 1G tmp_table_size = 4G myisam_sort_buffer_size = 256M sort_buffer_size=4M read_buffer_size=2M query_cache_size=16M query_cache_type=2 thread_concurrency=32 user=mysql symbolic-links=0 plugin-load=archive=ha_archive.so;blackhole=ha_blackhole.so [mysqld_safe] log-error=/var/log/mysqld.log pid-file=/var/run/mysqld/mysqld.pid [mysql] socket=/var/lib/mysql/mysql.sock [client] socket=/var/lib/mysql/mysql.sock-

Stefan Lasiewski almost 12 yearsMachine B is basically idle . This is my experience with replication on MySQL 5.1. Replication is single-threaded, and one CPU will be maxed out while the others sit there idle.

-

Mike almost 12 yearsare you doing backups off the slave?

-

Nick almost 12 years@stefan-lasiewski To be clear, this is MySQL 5.5, but yes. It is single-threaded and very frustrating

-

Nick almost 12 years@Mike Yes, as well as heavy queries that take many minutes throughout the day. Replication slows to ~100s or so, and then takes a while to catch up again. The service that runs these queries will run one query, wait for it to catch up, then run another, wait, etc... If we're able to speed up replication, we can increase the frequency that we run these queries

-

Stefan Lasiewski almost 12 yearsHave you tried disabling backups, and does that help?

-

Nick almost 12 years@stefan-lasiewski Yes - If nothing stops the replication, it obviously won't get behind. The primary issue is that the replication speed is a bottleneck to increasing writes on the master. If it takes 3.3s to catch up 1s, that means that replication is writing 4.3s of data in 3.3s, or is only able to replicate at 130% of the speed of data coming in. I'm looking to at least double write load on this server.

-

Nick almost 12 yearsThanks! That is an interesting solution, although I'm a little hesitant to plug 3rd-party software into MySQL. In the docs it says "No need to upgrade to wait for future MySQL versions or migrated to untested alternatives", so it appears to be similar to what MySQL 5.6 will have support for. Do you have any experience with Tungsten Replicator?

-

pQd almost 12 yearsnope, just know that reputable mysql ecosystem contributor works for them [ datacharmer.blogspot.com ]. how about the bottleneck - are you sure it's a single-core-load that's the limiting factor?

-

pQd almost 12 years@Nick - sorry, i cannot advice about hyper-threading. but try... also - try installing munin or cacti with mysql templates and take a look more in details what's going on.

-

Nick almost 12 yearsAbsolutely. We do regularly lock tables for either backups or long queries, but the issue lies in the speed of the replication once the IO thread resumes. I estimate that it is only replicating at 130% of the speed of the data coming in, which limits how much further we can scale this setup unless we can improve replication speed. Does that make sense?

-

Nick almost 12 years@HTTP500 Thanks for the link! Great benchmarks there.

-

Nick almost 12 yearsThanks so much for your time! I really appreciate the information you've given here. Moving to InnoDB was something that we had been considering for a while, mostly for the benefits of row-level locking. This gives me some food for thought. Thanks again.

-

plat_winz over 10 yearsWow, this is some seriously brilliant mysql analysis :)