No space on device when removing a file under OpenSolaris

Solution 1

Ok, that's a weird one… not enough space to remove a file!

This turns out to be a relatively common issue with ZFS, though it could potentially arise on any filesystem that has snapshots.

The explanation is that the file you're trying to delete still exists on a snapshot. So when you delete it, the contents keep existing (in the snapshot only); and the system must additionally write the information that the snapshot has the file but the current state doesn't. There's no space left for that extra little bit of information.

A short-term fix is to find a file that's been created after the latest snapshot and delete it. Another possibility is to find a file that's been appended to after the latest snapshot and truncate it to the size it had at the time of the latest snapshot. If your disk got full because something's been spamming your logs, try trimming the largest log files.
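Below is a minimal Python sketch of both short-term fixes. It assumes you already know two things: the creation time of the latest snapshot, in seconds since the epoch (for example from zfs get creation name/of/snap@shot), and, for a log file, the length it had at that time. The function names are hypothetical. A file's modification time is only a proxy for "created after the snapshot". On a pool that is completely full, even the truncate can fail, because it also has to write metadata.

```python
import os
import stat


def files_modified_since(root, cutoff):
    """Return (size, path) pairs for regular files under `root` whose
    mtime is later than `cutoff` (seconds since the epoch), largest first.
    mtime is only a proxy for "created after the latest snapshot"."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)
            except OSError:
                continue  # vanished or unreadable: skip it
            if stat.S_ISREG(st.st_mode) and st.st_mtime > cutoff:
                found.append((st.st_size, path))
    found.sort(reverse=True)
    return found


def trim_to_size(path, size_at_snapshot):
    """Truncate `path` back to the length it had when the snapshot was taken.
    Bytes appended since then live only in the current file system, so
    dropping them can release space without touching snapshot data."""
    if os.path.getsize(path) > size_at_snapshot:
        os.truncate(path, size_at_snapshot)
    return os.path.getsize(path)
```

For example, files_modified_since("/var/log", cutoff)[:10] would list the ten largest candidates. The path is only an illustration.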

A more generally applicable fix is to remove some snapshots. You can list snapshots with zfs list -t snapshot. There doesn't seem to be an easy way to predict how much space will be regained if you destroy a particular snapshot, because the data it stores may turn out to be needed by other snapshots and so will live on if you destroy that snapshot. So back up your data to another disk if necessary, identify one or more snapshots that you no longer need, and run zfs destroy name/of/snap@shot.
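One way to pick candidates is to rank snapshots by their USED value. That value counts only the blocks that no other snapshot or dataset still references, so data shared with other snapshots is left out, which matches the caveat above. The Python sketch below assumes the tab-separated output of zfs list -H -t snapshot -o name,used and the human-readable sizes shown in the question. The helper names are hypothetical.

```python
import re

# Binary multipliers for the suffixes zfs list prints in human-readable mode.
_UNITS = {"": 1, "B": 1, "K": 1024, "M": 1024**2, "G": 1024**3,
          "T": 1024**4, "P": 1024**5}


def parse_size(text):
    """Convert a size such as '75.9M', '61K' or '0' to a byte count."""
    m = re.fullmatch(r"([0-9]+(?:\.[0-9]+)?)([BKMGTP]?)", text.strip())
    if m is None:
        raise ValueError(f"unrecognised size: {text!r}")
    return int(float(m.group(1)) * _UNITS[m.group(2)])


def snapshots_by_used(listing):
    """Parse `zfs list -H -t snapshot -o name,used` output (one snapshot per
    line, fields separated by a tab) into (bytes, name) pairs, largest first."""
    rows = []
    for line in listing.splitlines():
        if not line.strip():
            continue
        name, used = line.split("\t")
        rows.append((parse_size(used), name))
    rows.sort(reverse=True)
    return rows
```

Destroying the top entry should free roughly its USED figure. Destroying several snapshots together can free more than the sum of their USED values, because blocks shared only among those snapshots are released as well.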

There is an extended discussion of this issue in this OpenSolaris forums thread.

Solution 2

That's a well-known issue with copy-on-write filesystems: to delete a file, the filesystem first needs to allocate a block and record the new state before it can release the (possibly large) space occupied by the file being deleted.

(It is not inherently a problem of filesystems with snapshots, since snapshots can be implemented in other ways than copy-on-write.)
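To make the allocate-before-free ordering concrete, here is a deliberately tiny Python model of a copy-on-write pool. It is hypothetical and does not reflect how ZFS actually lays out data. One metadata block describes the whole pool, there is no reserved slack space, and a single snapshot pins the data blocks of the files it saw plus the metadata block that was current when it was taken.

```python
import errno


def _enospc():
    return OSError(errno.ENOSPC, "No space left on device")


class ToyCowPool:
    """Hypothetical toy model of a copy-on-write pool (not ZFS's real layout).

    Blocks are never overwritten in place: every change, a delete included,
    first writes a new metadata block and only then releases the old metadata
    block and any data blocks that nothing else references.
    """

    def __init__(self, total_blocks):
        self.free = total_blocks - 1   # one block holds the current metadata
        self.files = {}                # file name -> number of data blocks
        self.in_snapshot = set()       # files whose data the snapshot pins
        self.meta_pinned = False       # is the current metadata in the snapshot?

    def _commit(self, released_data=0):
        if self.free < 1:              # no room for the new metadata block
            raise _enospc()
        self.free -= 1                 # write the new metadata block first
        if not self.meta_pinned:
            self.free += 1             # old metadata is garbage: free it
        self.meta_pinned = False       # the new metadata is not in a snapshot
        self.free += released_data     # finally release unreferenced data

    def create(self, name, blocks):
        if blocks + 1 > self.free:     # data plus the new metadata must fit
            raise _enospc()
        self.free -= blocks
        self.files[name] = blocks
        self._commit()

    def snapshot(self):
        # Only one snapshot at a time in this toy.
        self.in_snapshot = set(self.files)
        self.meta_pinned = True

    def delete(self, name):
        blocks = self.files[name]
        released = 0 if name in self.in_snapshot else blocks
        self._commit(released)         # may raise ENOSPC before freeing anything
        del self.files[name]
```

With ToyCowPool(10), creating a 5-block file, taking a snapshot, and then creating a 3-block file leaves free == 0, because the snapshot pinned the old metadata block. After that, delete() raises ENOSPC for either file, even the one the snapshot never saw. With one spare block (ToyCowPool(11)), deleting the snapshotted file succeeds but frees nothing, while deleting the other file frees its three blocks.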

Ways out of the squeeze:

- release a snapshot (if there is one...)

- grow the pool (if you have any spare storage you can assign to it)

- destroy another filesystem in the pool, then grow the tight filesystem

- truncate the file, then remove it (though I was once in too tight a squeeze to do even that; see the thread at ZFS Discuss)

- unlink the file (same caveat as above)
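Here is a minimal Python sketch of the truncate-then-remove route. In the shell, the equivalent is emptying the file with : > filename before running rm. The function name and its return values are hypothetical. Truncating helps only if the file's blocks are not also held by a snapshot. On a pool that is completely full, the truncate itself can fail with ENOSPC, and the sketch lets that error propagate.

```python
import errno
import os


def truncate_then_remove(path):
    """Empty `path` in place, then unlink it.

    Returns "removed" on success, or "truncated-only" if the truncate worked
    but the unlink still failed with ENOSPC. Any other error, including an
    ENOSPC raised by the truncate itself, propagates to the caller."""
    os.truncate(path, 0)          # release data blocks no snapshot references
    try:
        os.unlink(path)
    except OSError as exc:
        if exc.errno != errno.ENOSPC:
            raise
        return "truncated-only"   # still too tight: retry after freeing more
    return "removed"
```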

I ran into the same trap a few years ago and didn't have any snapshots I could release to free up space. See the thread at ZFS Discuss where this problem was discussed in depth.

Solution 3

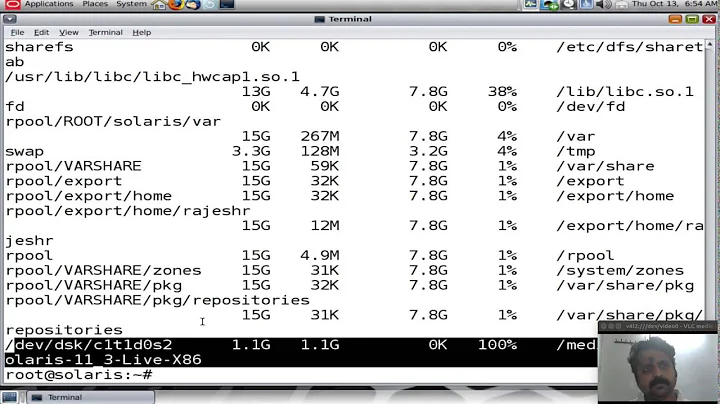

4.Z3G (the USED column of rpool/ROOT) is dubious.

In any case, rpool/export/home/admin being too big (3.85 GB) is likely the root cause. Have a look at its content and remove unnecessary files there. As the admin file system has no snapshots, this should immediately free some space in the pool.
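To find out what is taking up the 3.85 GB, a quick per-entry tally helps. du -sk /export/home/admin/* does much the same from the shell. The Python sketch below assumes the admin file system is mounted at /export/home/admin; in maintenance mode you may first need to run zfs mount rpool/export/home/admin. It uses apparent file sizes, which can differ from what ZFS charges when compression is enabled. The function name is hypothetical.

```python
import os
import stat


def _file_bytes(path):
    """Apparent size of a regular file; 0 for anything else or on error."""
    try:
        st = os.lstat(path)
    except OSError:
        return 0
    return st.st_size if stat.S_ISREG(st.st_mode) else 0


def usage_by_entry(top):
    """Return (bytes, name) for each entry directly under `top`, largest
    first. A directory's figure is the sum over all regular files below it.
    Symlinks are not followed (os.walk's default)."""
    totals = []
    with os.scandir(top) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                size = sum(
                    _file_bytes(os.path.join(dirpath, name))
                    for dirpath, _dirs, files in os.walk(entry.path)
                    for name in files
                )
            else:
                size = _file_bytes(entry.path)
            totals.append((size, entry.name))
    totals.sort(reverse=True)
    return totals
```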


Nick Faraday

Updated on September 18, 2022

Nick Faraday over 1 year

While I was trying to mount an NFS share (exported from an OpenIndiana server) on a client box, the OI server crashed. I got the black screen of death and what looked like a log dump, and then the system restarted. It never came back up, and after I halt the boot I get the following error message:

    svc.startd[9] Could not log for svc:/network/dns/multicast:default: write(30) failed with No space left on device

I don't have anything on the boot drive other than the OS, so I'm not sure what could be filling it up. Maybe a log file of some kind? In any case, I can't delete anything: every attempt fails with a "no space" error:

    $ rm filename
    cannot remove 'filename' : No space left on device

I can log in to "Maintenance Mode", but not at the standard user prompt.

The output of df is:

    rpool/ROOT/openindiana-baseline    4133493   4133493          0  100%  /
    swap                              83097900     11028  830386872    1%  /etc/svc/volatile
    /usr/lib/libc/libc_hwcap1.so.1     4133493   4133493          0  100%  /lib/libc.so.1

The output of mount is:

    / on rpool/ROOT/openindiana-baseline read/write/setuid/devices/dev:2d9002 on Wed Dec 31 16:00:00 1969
    /devices on /devices read/write/setuid/devices/dev:8b40000 on Fri Jul 8 14:56:54 2011
    /dev on /dev read/write/setuid/devices/dev:8b80000 on Fri Jul 8 14:56:54 2011
    /system/contract on ctfs read/write/setuid/devices/dev:8c40001 on Fri Jul 8 14:56:54 2011
    /proc on proc read/write/setuid/devices/dev:8bc0000 on Fri Jul 8 14:56:54 2011
    /etc/mnttab on mnttab read/write/setuid/devices/dev:8c80001 on Fri Jul 8 14:56:54 2011
    /etc/svc/volatile on swap read/write/setuid/devices/xattr/dev:8cc0001 on Fri Jul 8 14:56:54 2011
    /system/object on objfs read/write/setuid/devices/dev:8d00001 on Fri Jul 8 14:56:54 2011
    /etc/dfs/sharetab on sharefs read/write/setuid/devices/dev:8d40001 on Fri Jul 8 14:56:54 2011
    /lib/libc.so.1 on /usr/lib/libc/libc_hwcap1.so.1 read/write/setuid/devices/dev:d90002 on Fri Jul 8 14:57:06 2011

The output of zfs list -t all is:

    NAME                                                     USED  AVAIL  REFER  MOUNTPOINT
    rpool                                                   36.4G      0  47.5K  /rpool
    rpool/ROOT                                              4.23G      0    31K  legacy
    rpool/ROOT/openindiana                                  57.5M      0  3.99G  /
    rpool/ROOT/openindiana-baseline                           61K      0  3.94G  /
    rpool/ROOT/openindiana-system-edge                      4.17G      0  3.98G  /
    rpool/ROOT/openindiana-system-edge@install              19.9M      -  3.38G  -
    rpool/ROOT/openindiana-system-edge@2011-07-06-20:45:08  73.1M      -  3.57G  -
    rpool/ROOT/openindiana-system-edge@2011-07-06-20:48:53  75.9M      -  3.82G  -
    rpool/ROOT/openindiana-system-edge@2011-07-07-02:14:04    61K      -  3.94G  -
    rpool/ROOT/openindiana-system-edge@2011-07-07-02:15:14    61K      -  3.94G  -
    rpool/ROOT/openindiana-system-edge@2011-07-07-02:28:14    61K      -  3.94G  -
    rpool/ROOT/openindiana-system-stable                      61K      0  3.94G  /
    rpool/ROOT/pre_first_update_07.06                        108K      0  3.82G  /
    rpool/ROOT/pre_second_update_07.06                        90K      0  3.57G  /
    rpool/dump                                              9.07G      0  9.07G  -
    rpool/export                                            3.85G      0    32K  /export
    rpool/export/home                                       3.85G      0    32K  /export/home
    rpool/export/home/admin                                 3.85G      0  3.85G  /export/home/admin
    rpool/swap                                              19.3G  19.1G   126M  -

Gilles 'SO- stop being evil' almost 13 years

It does look like the filesystem or pool where the logs are being written is full. What is the filesystem and disk organization on the server? Can you still log into the server (you seem to be saying no, but then you say you've tried to delete files)? What do you mean by “It gives me a no space error when I try and delete anything”: what command exactly did you type, and what exact error message did you get?


Nick Faraday almost 13 years

Updated the post to answer your questions.



Gilles 'SO- stop being evil' almost 13 years

OK. So please post the output of df and mount. What do you know about the configuration of that server, in particular its logging configuration?


jlliagre almost 13 years

Please add the output of zfs list -t all.


Nick Faraday almost 13 years

Yes, that should have been a '2', not a 'Z' (OCR'd image). What's weird is that when I cd to /rpool there is nothing in there. I don't think "Maintenance Mode" makes the proper links! There's nothing in /export either.


jlliagre almost 13 years

admin should be mounted on /export/home/admin, not on /rpool. If it isn't mounted in maintenance mode, you can just mount it manually.


Aska Ray over 12 years

The snapshot capability isn't the cause of the problem; see my answer below. But being able to release a snapshot can work miracles in resolving it, as you have correctly described :)