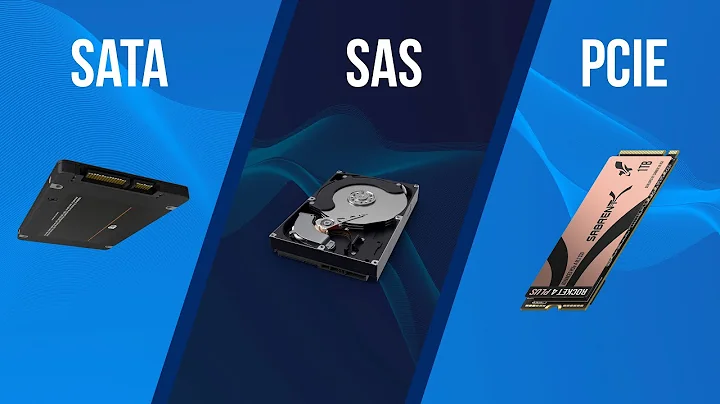

SATA vs SAS vs SSD for Virtual Machine Automation

Solution 1

It's a balance between price and performance.

Mechanical SATA drives are obviously cheaper, but they are in no way designed for this kind of job, so for a serious business they're a no-go.

The SAS protocol has enhanced features (for example, queue re-ordering) that let SAS drives better manage I/O from multiple VMs, so they are much more efficient than mechanical SATA drives for this use, even with the same mechanical parts.
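To see why queue re-ordering helps when several VMs share one spindle, here is a toy model. It assumes, for simplicity, that a request's cost is just the distance the head moves between tracks (real drives also account for rotational position), and it uses a simple elevator (SCAN) ordering as a stand-in for the drive firmware's own scheduling, which is not public. The track numbers are hypothetical.

```python
def head_travel(start: int, order: list[int]) -> int:
    """Total tracks crossed when the head serves requests in the given order."""
    total, pos = 0, start
    for track in order:
        total += abs(track - pos)
        pos = track
    return total


def elevator_order(start: int, requests: list[int]) -> list[int]:
    """SCAN-style reordering: sweep toward higher tracks first, then sweep back down."""
    up = sorted(t for t in requests if t >= start)
    down = sorted((t for t in requests if t < start), reverse=True)
    return up + down


# Hypothetical requests arriving interleaved from several VMs (track numbers).
pending = [980, 15, 510, 20, 990, 500]
start = 400

fifo = head_travel(start, pending)                              # serve in arrival order
reordered = head_travel(start, elevator_order(start, pending))  # serve after reordering
print(f"FIFO: {fifo} tracks, reordered: {reordered} tracks")
# FIFO: 3990 tracks, reordered: 1565 tracks
```

With reordering, the same six requests need well under half the head movement, which is where the efficiency gain under mixed VM I/O comes from.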

SSDs have good write performance and very good read performance, but they come in smaller sizes or, at equal capacity, are much more expensive.

Like mechanical drives, SSDs are divided into consumer-grade and enterprise-grade. Enterprise-grade drives have, for example, more spare cells to replace worn-out cells, more buffer memory, and better TRIM management.

Most SSDs use a SATA interface, but you can now find SSDs with a SAS interface, which makes them the top choice for pure performance.

So the choice really comes down to required capacity, price, and performance, but you should select enterprise-grade products, especially if you intend to use RAID.

Solution 2

It sounds like you're running into I/O contention. There are a couple of ways to fix it:

- Separate the workloads. Put the testing on one set of disks and the VMs on another, so one won't interfere with the other.

- Nuke the problem with SSDs. Solid-state storage is much more I/O-friendly. If you can afford the capacity you need, this will push your I/O problems well into the future.

The differences between SAS and SATA at your drive counts aren't that great. Probably the only one that matters is spindle speed: SAS drives usually spin at 10K or 15K RPM, while SATA drives are typically slower. The interface type itself doesn't matter all that much.
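To make the point about drive counts and spindle speed concrete, the sketch below estimates how many VMs a disk or small array can carry. It is a rule of thumb, not a sizing tool: the per-drive IOPS figures, the per-VM demand of 25 IOPS, the 70% utilization target, and the assumption that RAID 0 striping scales random IOPS linearly with drive count are all illustrative assumptions.

```python
import math


def array_random_iops(per_drive_iops: float, drives: int) -> float:
    # Idealized RAID 0: random I/O spreads evenly across every spindle,
    # so aggregate IOPS grows linearly with the number of drives.
    return per_drive_iops * drives


def vms_supported(array_iops: float, per_vm_iops: float,
                  target_utilization: float = 0.7) -> int:
    # Leave headroom below 100% busy: queueing delay rises sharply as a disk saturates.
    return math.floor(array_iops * target_utilization / per_vm_iops)


PER_VM_IOPS = 25  # hypothetical steady-state demand of one test VM

# Hypothetical per-drive random IOPS figures, for illustration only.
for label, per_drive, drives in [("one 7.2K drive", 80, 1),
                                 ("two 7.2K drives, RAID 0", 80, 2),
                                 ("one 15K drive", 180, 1)]:
    iops = array_random_iops(per_drive, drives)
    print(label, vms_supported(iops, PER_VM_IOPS))
```

The useful takeaway is the shape of the relationship rather than the exact numbers: VM density scales with the random IOPS the disks can deliver, whether that comes from faster spindles or from more of them.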

Solution 3

First, it's important to clear something up: SATA vs SAS vs SSD is like saying APPLES vs ORANGES vs JAM. They are not the same kind of thing: SATA and SAS are interfaces, while SSD is a storage technology that can sit behind either one.

SAS is the 'enterprise' standard, with some extra features: most SAS drives have dual ports (so they can be connected to two HBAs for higher availability), and they also have better diagnostics. SATA is found on the 'cheaper' drives, which lack both of these enhancements.

Performance-wise they're exactly the same, since SATA devices also have command queuing/reordering support (NCQ).

Now on to the SSD vs HDD comparison. HDDs are fine if your workload consists of long sequential reads or writes, but once the access pattern becomes random they're virtually useless. Some quick math shows why: a 7200 rpm drive makes 120 revolutions per second (7200 / 60), so it can do at most about 120 random seeks per second, which isn't a lot! A 15k rpm drive roughly doubles this to 250, but that's still nowhere close to the 100,000 random IOPS an SSD is capable of.
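The quick math above is a best-case bound of roughly one random I/O per platter revolution (rpm divided by 60). The sketch below reproduces it and, for comparison, applies the common estimate of 1 / (average seek time + half a rotation). The average seek times of 8.5 ms and 3.5 ms are assumed typical values, not figures from the answer.

```python
def max_random_iops(rpm: int) -> float:
    """Best case: about one random I/O per platter revolution."""
    return rpm / 60.0


def estimated_random_iops(rpm: int, avg_seek_ms: float) -> float:
    """Common estimate: one I/O per (average seek + average rotational latency)."""
    half_rotation_ms = (60_000.0 / rpm) / 2  # on average the target sector is half a turn away
    return 1000.0 / (avg_seek_ms + half_rotation_ms)


for rpm, seek_ms in [(7200, 8.5), (15000, 3.5)]:  # assumed average seek times
    print(rpm, max_random_iops(rpm), round(estimated_random_iops(rpm, seek_ms)))
# 7200 120.0 79
# 15000 250.0 182
```

Either way, a spinning disk lands in the low hundreds of random IOPS at most, orders of magnitude below the figure quoted for SSDs.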

If your system is I/O-starved, SSDs are the way to go. They're almost on par price-wise with 15k rpm SAS drives, so it's really a no-brainer.

If you're concerned about reliability, get one with a 10-year warranty. One thing to watch out for is that SSDs have limited write endurance, but nowadays endurance is so high that you don't really have to worry about it.
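Whether write endurance matters for a given workload is easy to check. Endurance is usually quoted as TBW (terabytes written) or DWPD (drive writes per day over the warranty period), related by TBW = DWPD × capacity × 365 × warranty years. The sketch below uses hypothetical figures: a 400 GB drive rated for 3 DWPD over 5 years, and 20 full rewrites of a 40 GB VM image per day. Write amplification is ignored unless you pass a factor.

```python
def rated_tbw(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Convert a DWPD rating into total terabytes written over the warranty period."""
    return dwpd * capacity_tb * 365 * warranty_years


def years_of_life(tbw: float, host_writes_tb_per_day: float,
                  write_amplification: float = 1.0) -> float:
    """Years until the rated TBW is consumed at a steady daily write volume."""
    return tbw / (host_writes_tb_per_day * write_amplification * 365)


tbw = rated_tbw(capacity_tb=0.4, dwpd=3, warranty_years=5)  # hypothetical drive rating
daily = 20 * 0.040                                           # hypothetical: 20 x 40 GB rewrites per day
print(round(tbw), round(years_of_life(tbw, daily), 1))
# 2190 7.5
```

Plugging in your own drive's rated TBW and a measured daily write volume gives a quick sanity check before buying.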

Koala Bear

Updated on September 18, 2022

Comments

Koala Bear over 1 year

We're using SCVMM and Hyper-V hosts to manage our automated VM environments. Currently we have two servers with three 7.2K consumer drives each (one for the host OS/storage, two for VMs). It's hard to say whether it's the quality of the drives, but right now we can't get more than two VMs to run concurrently on each drive without it interfering with automated UI testing. I suspect the drives because we have plenty of spare memory and low CPU usage.

I'm looking for some input on the benefits of SAS vs SSD vs SATA drives for a situation like this, since we're adding a third server and it's a good time to rework our storage system. The automation itself has fairly low I/O demand, but since we're trying to run as many machines per drive as possible, I'm not sure what to go with.

Research hasn't been terribly helpful, as most articles and forum posts on this subject revolve around home server use or virtualized server/database use.

joeqwerty almost 9 years

"right now we can't get more than two VMs to run concurrently on each drive without it interfering with automated UI testing": What does that mean exactly?

CIA almost 9 years

I'm not familiar with that error. Can you post the whole error? Or a screenshot?

Koala Bear over 8 years

We're using CodedUI, and sometimes the I/O bottleneck causes the automation to fail and not find screen elements properly. It never, ever happens when we're running, say, three or four VMs, but when we try to run more than seven we get inconsistent failures across tests in inconsistent places, usually along the lines of "codedUI could not find WPF element".

Chopper3 over 8 years

You've missed off the most significant new enterprise storage technology of recent years: NVMe!

eldblz over 8 years

Since you're using Hyper-V and therefore Windows, have a look at tiered storage: you can combine SSD and SATA drives in one virtual disk to improve overall performance. Look at this: blogs.technet.com/b/josebda/archive/2013/08/28/…
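The idea behind tiered storage is to keep the most frequently accessed data on the SSD tier and the rest on the SATA tier. The sketch below models that as a simple "hottest extents first" placement; Windows' real tiering engine works on its own schedule and granularity, so treat this purely as an illustration. The extent names and access counts are hypothetical.

```python
def assign_tiers(access_counts: dict[str, int], ssd_slots: int) -> tuple[set[str], float]:
    """Place the most-accessed extents on SSD; return them and the share of I/O they serve."""
    hottest = sorted(access_counts, key=access_counts.get, reverse=True)[:ssd_slots]
    ssd_tier = set(hottest)
    total = sum(access_counts.values())
    ssd_hits = sum(access_counts[extent] for extent in ssd_tier)
    return ssd_tier, (ssd_hits / total if total else 0.0)


# Hypothetical per-extent access counts gathered over some interval.
counts = {"os-boot": 500, "app-binaries": 300, "pagefile": 150,
          "logs": 40, "old-checkpoint": 10}
ssd_tier, ssd_share = assign_tiers(counts, ssd_slots=2)
print(sorted(ssd_tier), ssd_share)
# ['app-binaries', 'os-boot'] 0.8
```

Here two SSD-resident extents absorb 80% of the I/O, which is why a small SSD tier can relieve a larger set of slow disks when access is skewed toward a hot subset of data.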

Koala Bear over 8 years

Capacity is definitely the lowest priority for us. Again, these are almost exclusively automated testing VMs; none of them are used for production or need heavy data retention. None of them are above 40GB: we only load them with the OS, the application under test, and the lab agent or build agent (depending) to run the code. My biggest concern with SSDs is their lifespan, given the amount of checkpoint restoration and VM cloning that will occur on them.

Koala Bear over 8 years

Do you think that simply adding two more 7.2K drives per box and configuring them as two RAID 0 arrays would ease the issue? The VMs themselves are the ones running the CodedUI automation, so nothing else is competing on the boxes. And there's no heavy data retention on them, just the I/O of the OS, TFS build agent, TFS lab agent, CodedUI, and the applications under test.