Server-to-Switch Trunking on a ProCurve switch: what does this mean?

Solution 1

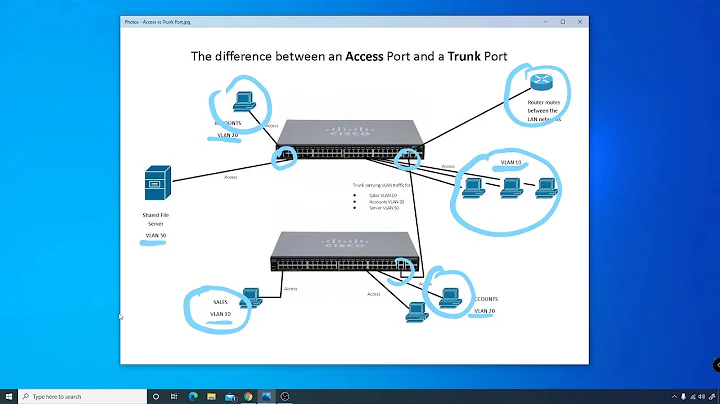

Trunking is a term that encompasses several link aggregation technologies. They are listed below in order from most desirable (with the highest requirements) to least desirable (but with the fewest requirements). Trunking may also be called bonding, EtherChannel, port groups, or other names. Be careful not to confuse these technologies with VLAN trunking (802.1Q/802.1ad).

802.3ad LACP is probably the "best" of the bunch. The NIC talks to the switch, the two sides set up the trunk, and data is load-balanced for both transmit and receive across all available links. Managed switches (L2 or higher) commonly support this; most models, especially cheaper ones, limit a trunk to a single switch.
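To make "load-balanced" a bit more concrete: LACP trunks usually pick a member link per conversation by hashing header fields, rather than spraying individual packets. Below is a minimal Python sketch under one assumed policy, a simplified layer-2 hash (source MAC XOR destination MAC, modulo the number of active links), loosely in the spirit of the Linux bonding driver's `layer2` transmit hash. The function names, port names, and MAC addresses are hypothetical, and real switches can hash other fields (IP addresses, TCP/UDP ports).

```python
# Sketch: per-conversation link selection in an LACP trunk.
# Assumption: a simplified layer-2 hash (src MAC XOR dst MAC) modulo the
# number of active links. Real devices may hash other header fields.


def mac_to_int(mac: str) -> int:
    """Convert a MAC such as '00:1a:2b:3c:4d:5e' into an integer."""
    return int(mac.replace(":", ""), 16)


def select_link(src_mac: str, dst_mac: str, active_links: list[str]) -> str:
    """Return the member link for a frame; a given MAC pair always maps to the same link."""
    if not active_links:
        raise RuntimeError("trunk has no active links")
    flow_hash = mac_to_int(src_mac) ^ mac_to_int(dst_mac)
    return active_links[flow_hash % len(active_links)]


if __name__ == "__main__":
    links = ["port1", "port2"]            # hypothetical trunk members
    server = "00:00:00:00:00:10"          # hypothetical MAC addresses
    for client in ("00:00:00:00:00:01", "00:00:00:00:00:02", "00:00:00:00:00:03"):
        print(client, "->", select_link(server, client, links))
    # 00:00:00:00:00:01 -> port2
    # 00:00:00:00:00:02 -> port1
    # 00:00:00:00:00:03 -> port2
```

Because a given MAC pair always hashes to the same link, a single conversation never gets more than one link's worth of bandwidth; the aggregate gain only shows up with many clients. If a link fails, rerunning the selection over the remaining links moves its traffic over.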

Splitting a trunk's ports across multiple switches is called Inter-Switch Trunking (IST). "Stacked" or modular switches commonly support this. Some switches use a form of shared management and call it stacking; these generally do not support IST. Look for technologies like Cisco VSL, Brocade/Dell ISL, or SMLT/DSMLT (an extension to 802.3ad originally developed by Nortel).

SLB (Switch-assisted Load Balancing) is the predecessor to LACP. You configure the trunk manually at both ends. You get load balancing of transmit and receive, plus redundancy; but everything is configured by hand, and both the switches and the NICs must support it. IST support is generally the same as for LACP above.

TLB (Transmit Load Balancing) is a technology that does not require the switch to speak any particular protocol to coordinate the trunk. You simply plug the NICs into the switch, configure the trunk on the computer, and it's good to go. The drawback: data is load-balanced for transmit only. Received traffic is assigned to one "primary" NIC; if the primary goes down, one of the secondaries is promoted. This may confuse some really old switches because multiple ports are sending from the same MAC address. This method can span multiple switches without IST support or additional configuration.
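Here is a rough Python model of the TLB behaviour described above: transmit spread across every healthy NIC, receive pinned to the primary, and a secondary promoted when the primary fails. It assumes a simple per-destination choice of outgoing NIC (a byte sum of the destination string, picked only because it is deterministic) and promotion of the first healthy secondary in list order. `TlbTeam` and the NIC names are hypothetical; vendor drivers pick ports and promote secondaries in their own ways.

```python
# Rough model of Transmit Load Balancing (TLB) on a NIC team.
# Assumptions: outgoing traffic is spread over healthy NICs per destination;
# all incoming traffic arrives on one primary NIC; on primary failure the
# first healthy secondary (in list order) is promoted.


class TlbTeam:
    def __init__(self, nics: list[str]) -> None:
        if not nics:
            raise ValueError("a team needs at least one NIC")
        self.nics = list(nics)                    # preference order
        self.link_up = {nic: True for nic in nics}
        self.primary = self.nics[0]               # the only receiving NIC

    def healthy(self) -> list[str]:
        return [nic for nic in self.nics if self.link_up[nic]]

    def tx_nic(self, dest: str) -> str:
        """Transmit side: spread destinations across every healthy NIC."""
        healthy = self.healthy()
        if not healthy:
            raise RuntimeError("no healthy NICs in the team")
        # Byte sum is used only because it is deterministic between runs.
        return healthy[sum(dest.encode()) % len(healthy)]

    def rx_nic(self) -> str:
        """Receive side: all inbound traffic uses the primary."""
        return self.primary

    def link_down(self, nic: str) -> None:
        self.link_up[nic] = False
        if nic == self.primary:
            healthy = self.healthy()
            if not healthy:
                raise RuntimeError("all NICs in the team are down")
            self.primary = healthy[0]             # promote a secondary


if __name__ == "__main__":
    team = TlbTeam(["nic0", "nic1"])
    print(sorted({team.tx_nic(d) for d in ("10.0.0.1", "10.0.0.2")}))  # ['nic0', 'nic1']
    print(team.rx_nic())                          # nic0
    team.link_down("nic0")
    print(team.rx_nic())                          # nic1
```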

TLB is sometimes called Round Robin. There are multiple ways to schedule outgoing packets across the trunked NICs; RR simply sends one packet to each port in turn. Some NICs also support more complex schemes such as Least Queue Depth, Weighted Round Robin, and Primary with Spillover.
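A small Python sketch of two of the schedulers named above. Round robin follows the one-packet-per-port description directly; the least-queue-depth function is an assumed, simplified reading of that name (send to the port with the fewest packets waiting, ties going to the first port listed). Port names and queue lengths are made up for illustration.

```python
# Two per-packet schedulers a NIC team might use.
# round_robin follows the description above; least_queue_depth is an
# assumed, simplified reading of the name.
from itertools import cycle


def round_robin(ports: list[str], n_packets: int) -> list[str]:
    """Port used for each of n_packets: one packet per port, in turn."""
    if not ports:
        raise ValueError("need at least one port")
    order = cycle(ports)
    return [next(order) for _ in range(n_packets)]


def least_queue_depth(queues: dict[str, int]) -> str:
    """Port with the fewest packets waiting (ties go to the first port listed)."""
    return min(queues, key=queues.get)


if __name__ == "__main__":
    print(round_robin(["eth0", "eth1"], 5))
    # ['eth0', 'eth1', 'eth0', 'eth1', 'eth0']
    print(least_queue_depth({"eth0": 7, "eth1": 2, "eth2": 5}))
    # eth1
```

One trade-off worth knowing: per-packet round robin can deliver packets of a single TCP connection out of order, which per-flow (hash-based) schemes avoid.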

NFT (Network Fault Tolerance) uses just one NIC at a time, with no load balancing at all. It is the only one of these that works with hubs and some really ancient switches that don't support multiple links with the same MAC address. The server uses the primary NIC for everything; if it goes down, all traffic switches seamlessly to a secondary NIC.
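NFT's selection rule fits in a few lines. This Python sketch assumes the NICs are listed in priority order and that link state is supplied by the caller (a real driver detects link loss itself); `active_nic` and the NIC names are hypothetical.

```python
# Sketch of Network Fault Tolerance (NFT): exactly one NIC carries traffic.
# Assumptions: NICs are listed in priority order and the caller supplies
# link state; a real driver would detect link loss on its own.
from typing import Optional


def active_nic(priority: list[str], link_up: dict[str, bool]) -> Optional[str]:
    """Return the highest-priority NIC whose link is up, or None if all are down."""
    for nic in priority:
        if link_up.get(nic, False):
            return nic
    return None


if __name__ == "__main__":
    order = ["nic0", "nic1"]                                  # nic0 is the primary
    print(active_nic(order, {"nic0": True, "nic1": True}))    # nic0
    print(active_nic(order, {"nic0": False, "nic1": True}))   # nic1
    print(active_nic(order, {"nic0": False, "nic1": False}))  # None
```

As written, the sketch goes back to the primary as soon as its link returns (failback); whether a real team does that depends on its settings.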

Depending on your NIC's brand, these may be named slightly differently. If you read the descriptions in the NIC's manual, however, each of its options should match up with one of these.

Solution 2

I believe Inter-Switch Trunking (IST) still isn't quite standardized across vendors. So, as cool as it is, if all you need is redundancy across two switches (and not load balancing, i.e. ~2 Gbit/s from two 1 Gbit/s ports), you can just use a failover mode when bonding the NICs. I think it will be simpler, since it works with pretty much any switch.

In Linux this is called active-backup mode, and it is pretty easy to set up with the bonding driver. From the bonding documentation:

> active-backup or 1
>
> Active-backup policy: Only one slave in the bond is active. A different slave becomes active if, and only if, the active slave fails. The bond's MAC address is externally visible on only one port (network adapter) to avoid confusing the switch.
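Why does keeping the bond's MAC on one port avoid confusing the switch? A switch learns which port each source MAC lives on, and an entry that keeps jumping between ports ("MAC flapping") is exactly what older switches handle badly. The Python sketch below is a deliberately simplified model of that learning table (assumption: the switch just remembers the last port a MAC was seen on and counts moves); `MacTable`, the port names, and the MAC address are hypothetical.

```python
# Simplified switch MAC learning table (an assumption, not a real switch).
# It remembers the last port each source MAC was seen on and counts moves.


class MacTable:
    def __init__(self) -> None:
        self.port_for: dict[str, str] = {}
        self.moves = 0

    def learn(self, src_mac: str, port: str) -> None:
        old = self.port_for.get(src_mac)
        if old is not None and old != port:
            self.moves += 1                  # entry jumped to a different port
        self.port_for[src_mac] = port


if __name__ == "__main__":
    bond_mac = "02:00:00:00:00:01"           # hypothetical bond MAC

    # active-backup: only the active slave sends, then one failover.
    active_backup = MacTable()
    for _ in range(4):
        active_backup.learn(bond_mac, "port1")
    active_backup.learn(bond_mac, "port2")
    print(active_backup.moves)               # 1

    # Both ports sending with the same source MAC, alternating.
    shared_mac = MacTable()
    for i in range(4):
        shared_mac.learn(bond_mac, f"port{1 + i % 2}")
    print(shared_mac.moves)                  # 3
```

With active-backup the entry moves only at failover, while alternating senders make it move on nearly every frame.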

In Windows you do it with the utilities that come from the card vendor. I forget their names, but it can be done with both Broadcom and Intel cards.

MattUebel

Updated on September 17, 2022

Comments

-

MattUebel almost 2 years: I am looking to set up switch redundancy in a new datacenter environment. IEEE 802.3ad seems to be the go-to concept for this, at least when paired with a technology that gets around its "single switch" limitation for link aggregation. Looking through the brochure for a ProCurve switch, I see:

> Server-to-Switch Distributed Trunking, which allows a server to connect to two switches with one logical trunk; increases resiliency and enables load sharing in virtualized data centers

http://www.procurve.com/docs/products/brochures/5400_3500%20Product%20Brochure4AA0-4236ENW.pdf

I am trying to figure out how this relates to the 802.3ad standard. It seems like it would give me what I want (one server with two NICs, each connected to a separate switch, together forming a single logical NIC that provides the redundancy we want), but I'm looking for someone familiar with this concept who could add to it.

-

Kyle Brandt about 14 years: /me is jealous again of Chopper3's redundant gig links :-)

Chopper3 about 14 years: @kyle - who uses gig anymore anyway? TenG is the only way, dude :)

-

Philip almost 14 years

Chopper3 almost 14 years: Nice, didn't know anyone was actually shipping yet - thanks.

-

hookenz almost 11 years: @Chopper, who uses 10G anymore anyway? 56Gbit is the only way, dude :)

-

Chopper3 almost 11 years: @Matt - actually, we have started using 40Gbps since this post originally came up.
