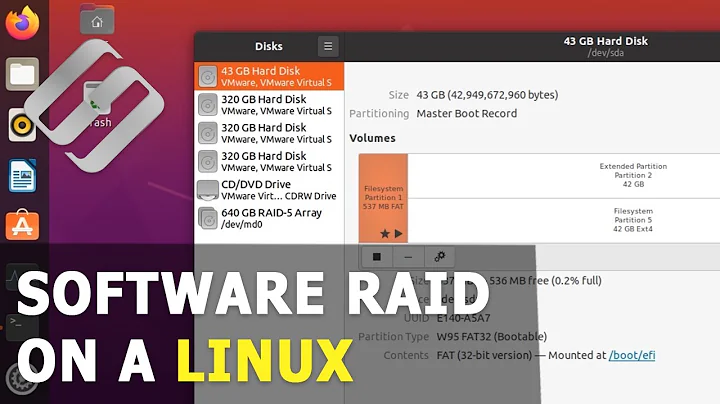

Software RAID 10 on Linux

Solution 1

I would be inclined to go for RAID10 in this instance, unless you needed the extra space offered by the single+RAID5 arrangement. You get the same guaranteed redundancy (any one drive can fail and the array will survive) and slightly better redundancy in worse cases (RAID10 can survive 4 of the 6 "two drives failed at once" scenarios), and don't have the write penalty often experienced with RAID5.
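To see where the "4 of the 6" figure comes from, here is a minimal sketch that enumerates every combination of failed drives. It assumes four drives in the traditional nested arrangement (two RAID1 pairs striped together); the drive names and the pairing are made up for illustration.

```python
from itertools import combinations

# Assumed layout: four drives in a nested RAID10, i.e. two RAID1 mirror
# pairs striped together. Drive names and pairing are illustrative only.
MIRROR_PAIRS = [("sda", "sdb"), ("sdc", "sdd")]


def raid10_survives(failed, mirror_pairs=MIRROR_PAIRS):
    """The array survives while every mirror pair keeps at least one working drive."""
    failed = set(failed)
    return all(any(d not in failed for d in pair) for pair in mirror_pairs)


def survival_count(n_failed, mirror_pairs=MIRROR_PAIRS):
    """Return (surviving scenarios, total scenarios) for n_failed simultaneous failures."""
    drives = [d for pair in mirror_pairs for d in pair]
    scenarios = list(combinations(drives, n_failed))
    survived = sum(raid10_survives(s, mirror_pairs) for s in scenarios)
    return survived, len(scenarios)


if __name__ == "__main__":
    for n in (1, 2):
        ok, total = survival_count(n)
        print(f"{n} failed drive(s): survives {ok} of {total} scenarios")
```

The two fatal cases are the ones where both halves of the same mirror pair fail together.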

You are likely to have trouble booting off RAID10, whether it is implemented as a traditional nested array (two RAID1s in a RAID0) or with Linux's recent all-in-one RAID10 driver. Both LILO and GRUB expect to find all the information needed to boot on one drive, which may not be the case with RAID0 or RAID10 (or software RAID5, for that matter). Hardware RAID does not have this problem, because the boot loader only sees one drive and the controller deals with where the data is actually spread amongst the drives.

There is an easy way around this though: just have a small partition (128MB should be more than enough - you only need room for a few kernel images and associated initrd files) at the beginning of each of the drives and set these up as a RAID1 array which is mounted as /boot. You just need to make sure that the boot loader is correctly installed on each drive, and all will work fine (once the kernel and initrd are loaded, they will cope with finding the main array and dealing with it properly).
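As a rough sketch of that workaround, the helper below only builds the command lines (it does not run anything) for a RAID1 /boot across all drives and for installing the boot loader on each of them. The device names, the partition number and the `--metadata=1.0` choice are my assumptions, not something from the setup above; metadata 1.0 keeps the md superblock at the end of each partition, which older boot loaders tend to need. Formatting, mounting and installing the system would happen between the two steps.

```python
def boot_raid1_plan(drives, boot_part=1, md_device="/dev/md0", metadata="1.0"):
    """Build (but do not run) the commands for a RAID1 /boot spanning every drive.

    All arguments are hypothetical defaults chosen for illustration.
    """
    members = [f"{drive}{boot_part}" for drive in drives]
    create = (
        f"mdadm --create {md_device} --level=1 --metadata={metadata} "
        f"--raid-devices={len(members)} {' '.join(members)}"
    )
    # The boot loader's first stage lives outside the array, so it has to be
    # installed on every drive separately for any of them to be bootable.
    install = [f"grub-install {drive}" for drive in drives]
    return [create] + install


if __name__ == "__main__":
    for command in boot_raid1_plan(["/dev/sda", "/dev/sdb", "/dev/sdc", "/dev/sdd"]):
        print(command)
```

Printing the plan first and running the commands by hand is deliberate: creating an array over the wrong partitions is destructive, so check the device names against your own system.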

The software RAID10 driver has a number of options for tweaking block layout that can bring further performance benefits depending on your I/O load pattern (see here for some simple benchmarks), though I'm not aware of any distributions that support this form of RAID 10 from install yet (only the more traditional nested arrangement). If you want to try the RAID10 driver and your distro doesn't support it at install time, you could install the entire base system into a RAID1 array as described for /boot above, then build the RAID10 array with the rest of the disk space once booted into that.

Solution 2

For up to 4 drives, or as many SATA drives as you can connect to the motherboard, you are in many cases better served by using the motherboard SATA connectors and Linux MD software RAID than by hardware RAID. For one thing, the on-board SATA connections go directly to the southbridge, with a speed of about 20 Gbit/s; many hardware controllers are slower. Linux MD RAID is also often faster, and much more flexible and versatile, than hardware RAID. For example, the Linux MD RAID10-far layout gives you almost RAID0 reading speed. You can also have multiple partitions of different RAID types with Linux MD RAID, for example a /boot with RAID1, and then /root and other partitions in RAID10-far for speed, or RAID5 for space. A further argument is cost: buying an extra RAID controller is often more costly than just using the on-board SATA connections ;-)
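To put numbers on the speed-versus-space trade-off, here is a small sketch of usable capacity per RAID level for equal-sized members. The 500 GB member size is a made-up figure, and RAID10 is assumed to keep two copies of every block; the near/far/offset layouts change where the copies are placed, not how much space they take.

```python
def usable_capacity(level, n_members, member_size_gb):
    """Usable space in GB for n equal-sized members under common md RAID levels."""
    if level == "raid0":
        return n_members * member_size_gb
    if level == "raid1":
        # Every member holds a full copy.
        return member_size_gb
    if level == "raid5":
        if n_members < 3:
            raise ValueError("RAID5 needs at least three members")
        # One member's worth of space goes to parity.
        return (n_members - 1) * member_size_gb
    if level == "raid10":
        # Assumes two copies of every block.
        return n_members * member_size_gb / 2
    raise ValueError(f"unknown level: {level}")


if __name__ == "__main__":
    size = 500  # hypothetical member size in GB
    for level in ("raid1", "raid10", "raid5"):
        print(f"{level}: {usable_capacity(level, 4, size)} GB usable from 4 x {size} GB")
```

With four members, RAID5 yields three members' worth of space and RAID10 two, which is the "RAID5 for space" trade-off mentioned above.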

A setup with /boot on RAID is described at https://raid.wiki.kernel.org/index.php/Preventing_against_a_failing_disk

More info on Linux RAID can be found on the Linux RAID kernel group wiki at https://raid.wiki.kernel.org/


Updated on September 17, 2022

Comments

Manorama over 1 year: For a long time, I've been thinking about switching to RAID 10 on a few servers. Now that Ubuntu 10.04 LTS is live, it's time for an upgrade. The servers I'm using are HP Proliant ML115 (very good value). Each has four internal 3.5" slots. I'm currently using one drive for the system and a software RAID5 array for the remaining three disks.

The problem is that this creates a single point of failure on the boot drive. Hence I'd like to switch to a RAID10 array, as it would give me both better I/O performance and more reliability. The only problem is that good controller cards that support RAID10 (such as 3Ware) cost almost as much as the server itself. Moreover, software RAID10 does not seem to work very well with Grub.

What is your advice? Should I just keep running RAID5? Has anyone been able to successfully install a software RAID10 without boot issues?


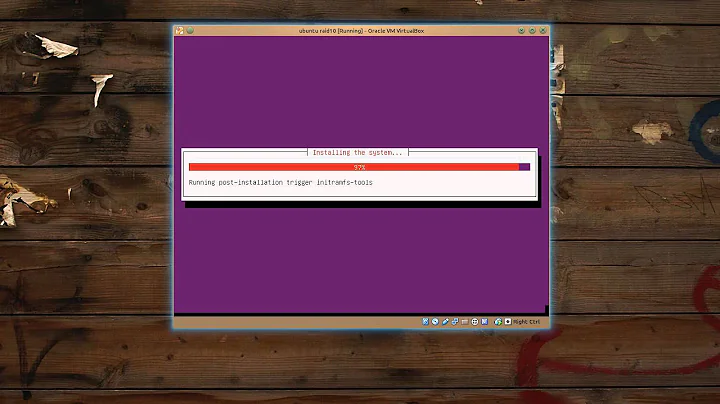

Manorama about 14 years: I can actually confirm that Ubuntu 10.04 can boot with software RAID. Did a lab in VMware with 4 x 20GB SCSI disks. I partitioned them as follows: 1GB (boot), 5GB (swap) and 16GB (/). I used RAID1 for the boot, RAID0 for the swap and RAID10 for the /. It booted without any problem, unlike Ubuntu 10.04 Beta 1.


Manorama about 14 years: Thanks Erik. It's not that I can't afford a RAID10 controller. I just have a hard time justifying the expense when I can get half an extra server for the price =). Perhaps a hardware RAID5 controller would be better. What are the most reliable ones? Is 3Ware the only choice?


Axel about 14 years: Grub can boot from software RAID1 if you ensure that its initial stage is properly installed on all the constituent drives (it isn't actually aware it is booting off RAID, but it works because each drive in the RAID1 array has a full copy of everything, as if it were a single drive). You can use a small RAID1 array for `/boot` and have everything else on array types that Grub doesn't like, in order to have a system installed on software RAID 5/6/10 arrays.

pauska about 14 years: Regarding the boot loader: Do you have to manually update it on 4 disks every time you upgrade grub, kernel etc?

pauska about 14 years: vpetersson: You really can't compare server prices to storage prices. Servers are dirt cheap if you configure them to be dirt cheap - once you add raid, dual PSU, this, that ++ it gets expensive. You get what you pay for :)

Manorama about 14 years: @David I actually tried that inside VMware with Ubuntu 10.04 Beta 2 without luck. It still refused to boot. Perhaps it has been resolved in the final version.

Axel about 14 years: Once installed you shouldn't need to do anything extra unless Grub's first stage needs changing - the RAID1 array will take care of keeping everything else in sync over the array's constituent partitions. The installer should get it right too (I've installed Debian/Lenny this way recently with no extra effort needed), though there are problems reported with the Ubuntu 9.10 and 9.04 installers in this regard.

Axel about 14 years: @vpetersson: when I experimented with running my netbook off a pair of fast microSD cards as RAID0, with RAID1 for /boot, on Ubuntu 9.04 via the alternate install ISO, installing grub errored and I had to manually install it to both the drives - it worked perfectly fine from then until the day one of the SD cards died. Installing and booting from a RAID1 /boot (with RAID-something-else for everything else) has worked fine for me a number of times with Debian/Lenny, and Debian/Etch before that. I've not tried with other Ubuntu releases.