Are file edits in Linux directly saved into disk?

Solution 1

if I turn off the computer immediately after I edit and save a file, my changes will be most likely lost?

They might be. I wouldn't say "most likely", but the likelihood depends on a lot of things.

An easy way to increase the performance of file writes is for the OS to just cache the data, tell (lie to) the application that the write went through, and then actually do the write later. This is especially useful if there's other disk activity going on at the same time: the OS can prioritize reads and do the writes later. It can also remove the need for an actual write completely, e.g., in the case where a temporary file is removed quickly afterwards.

The caching issue is more pronounced if the storage is slow. Copying files from a fast SSD to a slow USB stick will probably involve a lot of write caching, since the USB stick just can't keep up. But your cp command returns faster, so you can carry on working, possibly even editing the files that were just copied.

Of course, caching like that has the downside you note: some data might be lost before it's actually saved. The user will be miffed if their editor told them the write was successful but the file wasn't actually on the disk. That is why there's the fsync() system call, which is supposed to return only after the file has actually hit the disk. Your editor can use it to make sure the data is safe before reporting to the user that the write succeeded.
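A minimal sketch of what "using fsync()" means for an editor, written in Python for brevity (the function name `save_durably` is hypothetical). Note the extra `flush()`: Python keeps its own userspace buffer, which has to be handed to the kernel before `os.fsync()` can push the kernel's cached copy to the device.

```python
import os


def save_durably(path: str, data: bytes) -> None:
    """Write data to path and wait until the kernel reports it written out."""
    with open(path, "wb") as f:
        f.write(data)          # goes into Python's userspace buffer
        f.flush()              # hand the buffer to the kernel (page cache)
        os.fsync(f.fileno())   # block until file data and metadata are sent to the device
```

As the next paragraph points out, even a successful `fsync()` only means the drive acknowledged the write.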

I said, "is supposed to", since the drive itself might tell the same lies to the OS and say that the write is complete, while the file really only exists in a volatile write cache within the drive. Depending on the drive, there might be no way around that.

In addition to fsync(), there are also the sync() and syncfs() system calls that ask the system to make sure all system-wide writes or all writes on a particular filesystem have hit the disk. The utility sync can be used to call those.
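Python's `os` module wraps `sync()` as `os.sync()` but, as far as I know, has no `syncfs()` wrapper. This sketch therefore calls the C library's `syncfs()` through `ctypes`, assuming a Linux C library that exports it, and falls back to the system-wide `os.sync()` otherwise (`flush_filesystem` is a hypothetical name). From a shell, GNU coreutils' `sync -f PATH` is the per-filesystem variant.

```python
import ctypes
import os

# Symbols of the C library already linked into the Python process (Linux assumed).
_libc = ctypes.CDLL(None, use_errno=True)


def flush_filesystem(path: str) -> None:
    """Flush pending writes for the filesystem that contains path."""
    syncfs = getattr(_libc, "syncfs", None)  # None if the symbol is missing
    if syncfs is None:
        os.sync()  # fallback: flush every filesystem
        return
    fd = os.open(path, os.O_RDONLY)  # any fd on that filesystem will do
    try:
        if syncfs(fd) != 0:
            err = ctypes.get_errno()
            raise OSError(err, os.strerror(err), path)
    finally:
        os.close(fd)
```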

Then there's also the O_DIRECT flag to open(), which is supposed to "try to minimize cache effects of the I/O to and from this file." Removing caching reduces performance, so that's mostly used by applications (databases) that do their own caching and want to be in control of it.

(O_DIRECT isn't without its issues, the comments about it in the man page are somewhat amusing.)
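A hedged sketch of opening a file with O_DIRECT from Python, assuming Linux. O_DIRECT typically requires the buffer address, file offset and length to be suitably aligned (the 4096-byte `BLOCK` is an assumption, not a universal rule), and some filesystems reject the flag with EINVAL, so the sketch falls back to a buffered write plus fsync(). `write_direct` is a hypothetical name. Even when it works, O_DIRECT only bypasses the kernel's page cache, not the drive's own cache.

```python
import errno
import mmap
import os

BLOCK = 4096  # assumed alignment unit; the real requirement depends on device and filesystem


def write_direct(path: str, payload: bytes) -> bool:
    """Write payload (zero-padded to BLOCK bytes) to path, trying O_DIRECT first.

    Returns True if the O_DIRECT write succeeded, False if it fell back to a
    buffered write followed by fsync().
    """
    if len(payload) > BLOCK:
        raise ValueError("this sketch only writes a single block")
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    direct = getattr(os, "O_DIRECT", None)  # not defined on every platform
    # An anonymous mmap is page-aligned and zero-filled.
    with mmap.mmap(-1, BLOCK) as buf:
        buf[: len(payload)] = payload
        if direct is not None:
            try:
                fd = os.open(path, flags | direct, 0o644)
            except OSError as e:
                if e.errno != errno.EINVAL:  # EINVAL: filesystem refuses O_DIRECT
                    raise
            else:
                try:
                    if os.write(fd, buf) == BLOCK:  # skips the page cache only
                        return True
                except OSError as e:
                    if e.errno != errno.EINVAL:  # EINVAL: alignment not accepted
                        raise
                finally:
                    os.close(fd)
        # Fallback: ordinary buffered write, then ask the kernel to flush it.
        with open(path, "wb") as f:
            f.write(buf)
            f.flush()
            os.fsync(f.fileno())
        return False
```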

What happens on a power-out also depends on the filesystem. It's not just the file data that you should be concerned about, but the filesystem metadata. Having the file data on disk isn't much use if you can't find it. Just extending a file to a larger size will require allocating new data blocks, and they need to be marked somewhere.

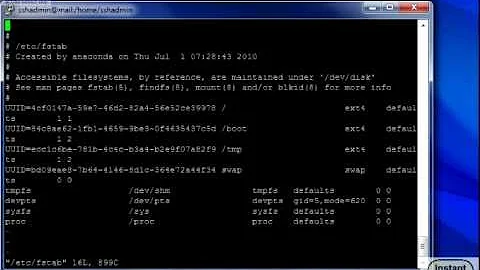

How a filesystem deals with metadata changes and the ordering between metadata and data writes varies a lot. E.g., with ext4, if you set the mount flag data=journal, then all writes (even data writes) go through the journal and should be rather safe. That also means they get written twice, so performance goes down. The default options try to order the writes so that the data is on the disk before the metadata is updated. Other options or other filesystems may be better or worse; I won't even try a comprehensive study.
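A hedged sketch for checking which data= mode your ext4 mounts use, by reading /proc/mounts on Linux. The function names are hypothetical, and the sketch assumes that an ext4 mount without an explicit data= option uses ext4's default, data=ordered (recent kernels tend not to print default options). It also ignores the octal escaping /proc/mounts applies to unusual mount-point names.

```python
def ext4_data_mode(mount_options: str) -> str:
    """Return the data= mode from a comma-separated ext4 option string."""
    for opt in mount_options.split(","):
        key, sep, value = opt.partition("=")
        if key == "data" and sep:  # "data_err=..." is a different option
            return value
    return "ordered"  # assumed default when the option is not listed


def ext4_data_modes(mounts_file: str = "/proc/mounts") -> dict:
    """Map each ext4 mount point listed in mounts_file to its data= mode."""
    modes = {}
    with open(mounts_file) as f:
        for line in f:
            fields = line.split()  # device, mount point, type, options, ...
            if len(fields) >= 4 and fields[2] == "ext4":
                modes[fields[1]] = ext4_data_mode(fields[3])
    return modes
```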

In practice, on a lightly loaded system, the file should hit the disk within a few seconds. If you're dealing with removable storage, unmount the filesystem before pulling the media to make sure the data is actually sent to the drive, and there's no further activity. (Or have your GUI environment do that for you.)

Solution 2

There is an extremely simple way to prove that file edits cannot always be saved directly to disk: there are filesystems that aren't backed by a disk at all. If a filesystem doesn't have a disk, then it cannot possibly write the changes to disk, ever.

Some examples are:

- tmpfs, a file system that only exists in RAM (or more precisely, in the buffer cache)
- ramfs, a file system that only exists in RAM
- any network file system (NFS, CIFS/SMB, AFS, AFP, …)
- any virtual filesystem (sysfs, procfs, devfs, shmfs, …)
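To check whether a given path actually lives on one of these disk-less filesystems, you can look up its mount in /proc/self/mounts on Linux. This is a sketch with hypothetical names; the `DISKLESS` set is illustrative rather than complete, and it uses the kernel's own type names (`proc` rather than procfs).

```python
import os
import re
from typing import Tuple

# Filesystem types that are not backed by a local disk (illustrative, not exhaustive).
DISKLESS = {"tmpfs", "ramfs", "proc", "sysfs", "devtmpfs", "nfs", "nfs4", "cifs", "smb3"}


def _unescape(field: str) -> str:
    """Undo the octal escapes (e.g. \\040 for a space) used in /proc/mounts."""
    return re.sub(r"\\([0-7]{3})", lambda m: chr(int(m.group(1), 8)), field)


def mount_for(path: str, mounts_file: str = "/proc/self/mounts") -> Tuple[str, str]:
    """Return (mount point, filesystem type) of the mount that contains path."""
    target = os.path.realpath(path)
    best = ("", "")
    with open(mounts_file) as f:
        for line in f:
            fields = line.split()
            if len(fields) < 3:
                continue
            mnt, fstype = _unescape(fields[1]), fields[2]
            inside = target == mnt or target.startswith(mnt.rstrip("/") + "/")
            # Longest matching mount point wins; ">=" lets later (stacked) mounts win ties.
            if inside and len(mnt) >= len(best[0]):
                best = (mnt, fstype)
    return best


def is_diskless(path: str, mounts_file: str = "/proc/self/mounts") -> bool:
    return mount_for(path, mounts_file)[1] in DISKLESS
```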

But even for disk-backed file systems this is usually not true. The page How To Corrupt An SQLite Database has a chapter called Failure to sync, which describes many different ways in which writes (in this case, commits to an SQLite database) can fail to arrive on disk. SQLite also has a white paper explaining the many hoops you have to jump through to guarantee Atomic Commit In SQLite. (Note that Atomic Write is a much harder problem than just Write, but of course writing to disk is a sub-problem of atomic writing, and you can learn a lot about that problem, too, from this paper.) This paper has a section on Things That Can Go Wrong, which includes a subsection about Incomplete Disk Flushes that gives some examples of subtle intricacies that might prevent a write from reaching the disk (such as the HDD controller reporting that it has written to disk when in fact it hasn't; yes, there are HDD manufacturers that do this, and it might even be legal according to the ATA spec, because it is ambiguously worded in this respect).

Solution 3

It is true that most operating systems, including Unix, Linux and Windows, use a write cache to speed up operations. That means that turning a computer off without shutting it down is a bad idea and may lead to data loss. The same is true if you remove a USB storage device before it is ready to be removed.

Most systems also offer the option to make writes synchronous. That means that the data will be on disk before an application receives a success confirmation, at the cost of being slower.
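A sketch of the synchronous option at the level of a single file, assuming a POSIX system where `os.O_SYNC` is defined (it is on Linux). With O_SYNC, each `write()` returns only after the data, and the metadata needed to retrieve it, has been passed to the device. On Linux the per-filesystem equivalent is the `sync` mount option. `append_synchronously` is a hypothetical name.

```python
import os


def append_synchronously(path: str, line: str) -> None:
    """Append one line; with O_SYNC every write() waits for the device."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND | os.O_SYNC, 0o644)
    try:
        data = line.encode()
        written = 0
        while written < len(data):  # write() may in principle be partial
            written += os.write(fd, data[written:])
    finally:
        os.close(fd)
```

The cost is that every single write now waits for the storage, which is exactly the slowdown the paragraph above describes.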

In short, there is a reason why you should properly shut down your computer and properly prepare USB storage for removal.

Solution 4

1. Flash-based storage

Does it depend upon the disk type (traditional hard drives vs. solid-state disks) or any other variable that I might not be aware of? Does it happen (if it does) only in Linux or is this present in other OSes?

When you have a choice, you should not allow flash-based storage to lose power without a clean shutdown.

On low-cost storage like SD cards, you can expect to lose entire erase-blocks (several times larger than 4KB), losing data which could belong to different files or essential structures of the filesystem.

Some expensive SSDs may claim to offer better guarantees in the face of power failure. However, third-party testing suggests that many expensive SSDs fail to do so. The layer that remaps blocks for "wear levelling" is complex and proprietary. Possible failures include loss of all data on the drive.

Applying our testing framework, we test 17 commodity SSDs from six different vendors using more than three thousand fault injection cycles in total. Our experimental results reveal that 14 of the 17 tested SSD devices exhibit surprising failure behaviors under power faults, including bit corruption, shorn writes, unserializable writes, metadata corruption, and total device failure.

2017: https://dl.acm.org/citation.cfm?id=2992782&preflayout=flat

2. Spinning hard disk drives

Spinning HDDs have different characteristics. For safety and simplicity, I recommend assuming they have the same practical uncertainty as flash-based storage, unless you have specific evidence otherwise. I don't have comparative figures for spinning HDDs.

An HDD might leave one incompletely written sector with a bad checksum, which will give us a nice read failure later on. Broadly speaking, this failure mode of HDDs is entirely expected; native Linux filesystems are designed with it in mind. They aim to preserve the contract of fsync() in the face of this type of power loss fault. (We'd really like to see this guaranteed on SSDs.)

However, I'm not sure whether Linux filesystems achieve this in all cases, or whether that's even possible.

The next boot after this type of fault may require a filesystem repair. This being Linux, it is possible that the filesystem repair will ask some questions that you do not understand, where you can only press Y and hope that it will sort itself out.

2.1 If you don't know what the fsync() contract is

The fsync() contract is a source of both good news and bad news. You must understand the good news first.

Good news: fsync() is well-documented as the correct way to write file data e.g. when you hit "save". And it is widely understood that e.g. text editors must replace existing files atomically using rename(). This is meant to make sure that you always either keep the old file, or get the new file (which was fsync()ed before the rename). You don't want to be left with a half-written version of the new file.
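A minimal Python sketch of the write, fsync, rename pattern described above, assuming a POSIX system (`atomic_replace` is a hypothetical name). It also fsync()s the containing directory afterwards, since the rename is a directory change that can otherwise sit in the cache too. Note that `tempfile.mkstemp()` creates the file with mode 0600, so a real editor would also copy the original file's permissions.

```python
import os
import tempfile


def atomic_replace(path: str, data: bytes) -> None:
    """Replace path so that a crash leaves either the old or the new contents."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temporary file must be on the same filesystem for rename() to be atomic.
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # new contents reach the device before the rename
        os.replace(tmp, path)     # rename(2): atomically swap in the new file
    except BaseException:
        os.unlink(tmp)
        raise
    dir_fd = os.open(directory, os.O_RDONLY)
    try:
        os.fsync(dir_fd)          # make the renamed directory entry durable
    finally:
        os.close(dir_fd)
```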

Bad news: for many years, calling fsync() on the most popular Linux filesystem could effectively leave the whole system hanging for tens of seconds. Since applications can do nothing about this, it was very common to optimistically use rename() without fsync(), which appeared to be relatively reliable on this filesystem.

Therefore, applications exist which do not use fsync() correctly.

The next version of this filesystem generally avoided the fsync() hang - at the same time as it started relying on the correct use of fsync().

This is all pretty bad. Understanding this history is probably not helped by the dismissive tone and invective which was used by many of the conflicting kernel developers.

The current resolution is that the current most popular Linux filesystem defaults to supporting the rename() pattern without requiring fsync(): it implements "bug-for-bug compatibility" with the previous version. This can be disabled with the mount option noauto_da_alloc.

This is not complete protection. Basically, it starts writing out the pending data at rename() time, but it doesn't wait for that IO to complete before renaming. This is much better than, e.g., a 60-second danger window, though! See also the answer to Which filesystems require fsync() for crash-safety when replacing an existing file with rename()?

Some less popular filesystems do not provide protection. XFS refuses to do so. And UBIFS has not implemented it either, apparently it could be accepted but needs a lot of work to make it possible. The same page points out that UBIFS has several other "TODO" issues for data integrity, including on power loss. UBIFS is a filesystem used directly on flash storage. I imagine some of the difficulties UBIFS mentions with flash storage could be relevant to the SSD bugs.

Solution 5

On a lightly loaded system, the kernel will let newly-written file data sit in the page-cache for maybe 30 seconds after a write(), before flushing it to disk, to optimize for the case where it's deleted or modified again soon.

Linux's dirty_expire_centisecs defaults to 3000 (30 seconds), and controls how long before newly-written data "expires". (See https://lwn.net/Articles/322823/).

See https://www.kernel.org/doc/Documentation/sysctl/vm.txt for more related tunables (e.g. dirty_writeback_centisecs), and search the web for lots more.

The Linux default for /proc/sys/vm/dirty_writeback_centisecs is 500 (5 seconds), and PowerTop recommends setting it to 1500 (15 seconds) to reduce power consumption.
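These tunables are plain files under /proc/sys/vm (the same values `sysctl vm.dirty_expire_centisecs` prints), so reading them is easy. A sketch with hypothetical function names that converts the centisecond values to seconds; the directory is a parameter only so the sketch can be pointed elsewhere.

```python
from pathlib import Path

VM = Path("/proc/sys/vm")  # Linux only
TUNABLES = ("dirty_expire_centisecs", "dirty_writeback_centisecs")


def centisecs_to_seconds(centisecs: int) -> float:
    """The vm.dirty_*_centisecs values are in hundredths of a second."""
    return centisecs / 100


def writeback_settings(vm_dir: Path = VM) -> dict:
    """Read the periodic-writeback tunables and return them in seconds."""
    return {
        name: centisecs_to_seconds(int((vm_dir / name).read_text().split()[0]))
        for name in TUNABLES
    }
```

With the defaults quoted above, this reports 30.0 seconds for the expiry age and 5.0 seconds for the flusher interval.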

Delayed write-back also gives the kernel time to see how big a file will be before starting to write it to disk. Filesystems with delayed allocation (like XFS and ext4) don't even choose where on disk to put a newly-written file's data until necessary, separately from allocating space for the inode itself. This reduces fragmentation by, for example, letting them avoid putting the start of a large file in a 1 MiB gap between other files.

If lots of data is being written, then writeback to disk can be triggered by a threshold for how much dirty (not yet synced to disk) data can be in the pagecache.
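As far as I know, those thresholds are `dirty_background_ratio`/`dirty_background_bytes` (start background writeback) and `dirty_ratio`/`dirty_bytes` (make writing processes wait), documented in the same vm.txt. To see how much data is currently waiting, /proc/meminfo reports `Dirty` and `Writeback` counters; this sketch (hypothetical function name) just parses them from the file's text.

```python
def pending_writeback_kib(meminfo_text: str) -> dict:
    """Extract the Dirty and Writeback counters (in kB) from /proc/meminfo text."""
    wanted = {"Dirty", "Writeback"}
    result = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        if key in wanted:  # exact match, so "WritebackTmp" is skipped
            result[key] = int(rest.split()[0])
    return result
```

For example, `pending_writeback_kib(open("/proc/meminfo").read())` right after saving a large file typically shows a non-zero `Dirty` value that drains over the following seconds.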

If you aren't doing much else, though, your hard-drive activity light won't go on for 5 (or 15) seconds after hitting save on a small file.

If your editor used fsync() after writing the file, the kernel will write it to disk without delay. (And fsync won't return until the data has actually been sent to disk).

Write caching within the disk can also be a thing, but disks normally try to commit their write cache to permanent storage ASAP, unlike Linux's page-cache algorithms. Disk write caches are more of a store buffer to absorb small bursts of writes, but maybe also to delay writes in favour of reads, and to give the disk's firmware room to optimize a seek pattern (e.g. do two nearby writes or reads instead of doing one, then seeking far away, then seeking back).

On a rotating (magnetic) disk, you might see a few seek delays of 7 to 10 ms each before data from a SATA write command is actually safe from power-off, if there were pending reads/writes ahead of your write. (Some other answers on this question go into more detail about disk write caches and write barriers that journalled FSes can use to avoid corruption.)

JuanRocamonde

Updated on September 18, 2022

Comments

JuanRocamonde almost 2 years

I used to think that file changes are saved directly into the disk, that is, as soon as I close the file and decide to click/select save. However, in a recent conversation, a friend of mine told me that is not usually true; the OS (specifically we were talking about Linux systems) keeps the changes in memory and it has a daemon that actually writes the content from memory to the disk.

He even gave the example of external flash drives: these are mounted into the system (copied into memory) and sometimes data loss happens because the daemon did not yet save the contents into the flash memory; that is why we unmount flash drives.

I have no knowledge of how operating systems work, and so I have absolutely no idea whether this is true and in which circumstances. My main question is: does this happen as described in Linux/Unix systems (and maybe other OSes)? For instance, does this mean that if I turn off the computer immediately after I edit and save a file, my changes will be most likely lost? Perhaps it depends on the disk type -- traditional hard drives vs. solid-state disks?

The question refers specifically to filesystems that have a disk to store the information, although any clarification or comparison is welcome.

Admin almost 6 years: FAO: Close Vote queue reviewers. This is not a request for learning materials. See unix.meta.stackexchange.com/q/3892/22812

Admin almost 6 years: The cache is opaque to the user. In the best case you must sync, and applications must flush to guarantee caches are written back, but even a successful sync does not guarantee write-back to the physical disk, only that kernel caches are flushed to the disk, which may have latency in the driver or disk hardware (e.g. an on-drive cache that you lose).

Admin almost 6 years: All you say is true, and more. Just pulling the power plug on your computer has always been a BAD IDEA in capitals on *nix machines and even on PCs. What's much worse than simply losing edits to a file is that pulling the rug from under your operating system that way may corrupt the whole file system. Administrative information like allocated blocks and directory contents is scattered throughout the disk; if it is only partly updated, resulting in an inconsistent before/after mix, it's "corrupt" and may stop working altogether, worst case.

Admin almost 6 years: As an aside, Joerg Mittag below is correctly pointing out that modern disks have their own cache which is opaque (transparent? whatever) to the operating system. Just pulling the plug from a disk during a write can corrupt its contents, and the OS can do nothing.

Admin almost 6 years: A last issue concerns what you mean by "turning off" the computer. Exactly for the reasons discussed, modern machines' power button triggers an orderly operating system shutdown on both Linux and Windows, which includes syncing the disks. (The "real" power switch of the power supply is on the back and normally not used.) If you "turn off" your computer the official way, as opposed to pulling the plug, all changes to file systems are physically written to disk; this means that all drives, including USB sticks, are synced and can be safely removed.

Admin almost 6 years: While I don't agree that it's a request for learning materials, I do think the question is a little Broad in its current form. Limit the scope to Linux distributions (or whatever specific OS) and possibly limit it to certain storage technologies and filesystems.

Admin almost 6 years: As @AnthonyGeoghegan pointed out, I don't consider this question a request for learning materials. I think it's rather specific; I did not ask for a long and deep explanation or a manual about Linux filesystems, only about a brief idea that I wanted to clear up.

Admin almost 6 years: It is true that as it is it may be a bit broad, @JeffSchaller; I'm going to try to edit it a bit. However, honestly, if the site is not for this type of question, which directly addresses how Linux works, then what is it for?

Admin almost 6 years: Also, judging by the great interest of users in it, my impression is that they do see it as a rather beneficial question, if that is the way to put it.

Admin almost 6 years: this applies to any modern OS and would be better posted on superuser.com

Admin almost 6 years: If I may add, some files may be memory-mapped (with the mmap() syscall), which I think is often used on large files for improved performance, and edits may occur there in memory.
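For the memory-mapped case, edits made through a shared mapping land in the page cache just like write() data, so they need an explicit msync() (or fsync()) to be durable. In Python, `mmap.flush()` is, as far as I know, implemented with msync() on Unix. A sketch with a hypothetical helper name:

```python
import mmap


def patch_mapped_file(path: str, offset: int, data: bytes) -> None:
    """Overwrite bytes in place through a shared mapping, then flush it."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole (non-empty) file; on Unix the default is
        # MAP_SHARED, so stores go to the file's page-cache pages.
        with mmap.mmap(f.fileno(), 0) as mm:
            if offset < 0 or offset + len(data) > len(mm):
                raise ValueError("patch must fit inside the existing file")
            mm[offset:offset + len(data)] = data  # edit happens in memory only
            mm.flush()  # msync(): write the dirty pages back to the file
```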

JuanRocamonde almost 6 years: Thank you for your reply! Is there a way to force disk writing of a specific file in Linux? Maybe a link to a tutorial or a docs page, even an SE question would just be fine :)

RalfFriedl almost 6 years: You can force the write of the file with the fsync() syscall from a program. From a shell, just use the sync command.

Ruslan almost 6 years: Your "some cases where" link doesn't appear to say anything about such cases; it's instead saying that there were problems when the apps didn't use fsync. Or should I look into the comments to find these cases you are pointing at?

ilkkachu almost 6 years: @Ruslan, ah, mhmm, you're right, I was too quick to link that. I may be mixing things up, so I guess I'll just strike that part. The issue I was thinking about was the one where you write a new file, fsync it and rename it into place, and then the filesystem doesn't sync the directory, so the rename is lost and you lose the file too. Which you didn't expect. Of course with hard links, the FS would need to know which directory to sync, so it might be that that issue can't be fixed within the filesystem at all. But I can't remember the details and it's getting late.

Jörg W Mittag almost 6 years: There are (or at least were) some filesystems in some versions of Linux where sync was implemented as a no-op. And even for filesystems that do correctly implement sync, there is still the problem that some disk firmwares implement FLUSH CACHE as a no-op or immediately return from it and perform it in the background.

crasic almost 6 years: You can also use sync directly as a shell command to poke the kernel to flush all caches.

JuanRocamonde almost 6 years: As @pipe pointed out, the fact that there are filesystems that don't save data to a disk, because they don't use a disk to store data, does not decide whether those that do use one save it directly or not. However, the answer looks interesting.

Peter Cordes almost 6 years: "In practice, on a lightly loaded system, the file will hit the disk within a moment." Only if your editor uses fsync() after writing the file. The Linux default for /proc/sys/vm/dirty_writeback_centisecs is 500 (5 seconds), and PowerTop recommends setting it to 1500 (15 seconds). (kernel.org/doc/Documentation/sysctl/vm.txt). On a lightly loaded system, the kernel will just let it sit dirty in the page-cache that long after write() before flushing to disk, to optimize for the case where it's deleted or modified again soon.

studog almost 6 years: +1 for "since the drive itself might make the same lies to the OS". My understanding is that drives doing that kind of caching also have enough power capacitance to allow their caches to be saved even on catastrophic power loss. This isn't OS-specific; Windows has the "Safely remove USB" mechanism to perform cache flushing before the user unplugs.

ilkkachu almost 6 years: @PeterCordes, well I did write "within a moment" ;) But yeah, edited.

ilkkachu almost 6 years: @studog, I wouldn't be so sure, especially on consumer hardware. But it might be just paranoia. It would be interesting to test, though.

Volker Siegel almost 6 years: @pipe I'm pretty sure using the term "besserwissering" is besserwissering! Saying that as a German Besserwisser with authority.

Bereket Yisehak almost 5 years: Note: O_DIRECT tries to minimize the effects of the kernel's cache and doesn't make strong guarantees about other (volatile) caches (e.g. in the RAID controller or the disk itself). Even in the kernel cache case it may not always succeed and can quietly fall back to buffered I/O.