Failover pacemaker cluster with two network interfaces?

Solution 1

So, I resolved my issue with ocf:pacemaker:ping, thanks to @Dok.

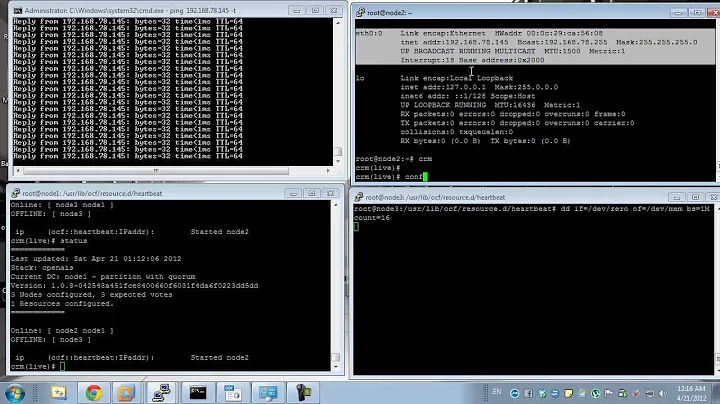

    # crm configure show
    node srv1
    node srv2
    primitive P_INTRANET ocf:pacemaker:ping \
            params host_list="10.10.10.11 10.10.10.12" multiplier="100" name="ping_intranet" \
            op monitor interval="5s" timeout="5s"
    primitive cluster-ip ocf:heartbeat:IPaddr2 \
            params ip="10.10.10.100" cidr_netmask="24" \
            op monitor interval="5s"
    primitive ha-nginx lsb:nginx \
            op monitor interval="5s"
    clone CL_INTRANET P_INTRANET \
            meta globally-unique="false"
    location L_CLUSTER_IP_PING_INTRANET cluster-ip \
            rule $id="L_CLUSTER_IP_PING_INTRANET-rule" ping_intranet: defined ping_intranet
    location L_HA_NGINX_PING_INTRANET ha-nginx \
            rule $id="L_HA_NGINX_PING_INTRANET-rule" ping_intranet: defined ping_intranet
    location L_INTRANET_01 CL_INTRANET 100: srv1
    location L_INTRANET_02 CL_INTRANET 100: srv2
    colocation nginx-and-cluster-ip 1000: ha-nginx cluster-ip
    property $id="cib-bootstrap-options" \
            dc-version="1.1.6-9971ebba4494012a93c03b40a2c58ec0eb60f50c" \
            cluster-infrastructure="openais" \
            expected-quorum-votes="2" \
            no-quorum-policy="ignore" \
            stonith-enabled="false"
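To check that the ping clone is actually publishing the ping_intranet attribute, and how that attribute shifts placement, the standard Pacemaker command-line tools can be used. This is a minimal sketch run on any cluster node; output layout differs between Pacemaker versions. With multiplier="100" and two hosts in host_list, a node that reaches both hosts should report ping_intranet=200, and the location rules above use that value directly as the node's score.

```shell
# Print cluster status once (-1) including node attributes (-A),
# so the current ping_intranet value of each node is visible.
crm_mon -1 -A

# Show the allocation scores (-s) computed against the live CIB (-L),
# i.e. how much each node is worth to cluster-ip and ha-nginx right now.
crm_simulate -sL
```

Both commands only read cluster state; neither changes the configuration.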

Solution 2

The ping monitor is not sufficient for this scenario, for multiple reasons. What do you ping? On Linux, the local IP still answers pings when its interface is down (which is BAD; it should not). But what if you ping the default gateway instead? Plenty of things outside the cluster can cause ping loss to the default gateway, and almost none of them have anything to do with the health of the cluster node's local network connection.

Pacemaker needs a method to monitor a network interface's UP/DOWN status. That is the best indication of local network problems. I haven't found any way to do this yet, and as far as I can tell it's a horrible flaw in Pacemaker clusters.
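Outside of Pacemaker, the Linux kernel does expose the UP/DOWN state described here, in /sys/class/net/IFACE/operstate. The sketch below only shows where that signal lives; iface_state and iface_is_up are hypothetical helper names, not Pacemaker tools, and feeding the result into placement decisions would still need a resource agent that sets a node attribute.

```shell
#!/bin/sh
# Sketch only: read the kernel's link state for a network interface (Linux).
# iface_state and iface_is_up are hypothetical helpers, not part of Pacemaker.

# Print the operstate of interface $1 (for example "up", "down" or "unknown"),
# or "absent" if the kernel has no interface with that name.
iface_state() {
    state_file="/sys/class/net/$1/operstate"
    if [ -r "$state_file" ]; then
        cat "$state_file"
    else
        echo absent
    fi
}

# Exit status 0 only when the kernel reports the link as "up".
iface_is_up() {
    [ "$(iface_state "$1")" = "up" ]
}
```

For example, `iface_is_up eth1 || echo "eth1 is not up"` would report the `ifconfig eth1 down` case from the question, because an administratively downed interface reads "down". Some virtual interfaces, including lo, report "unknown" rather than "up", so a real check has to decide how to treat that state.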

Solution 3

The ocf:pacemaker:pingd resource was designed precisely to fail a node over upon loss of connectivity. You can find a very brief example of this on the ClusterLabs wiki here: http://clusterlabs.org/wiki/Example_configurations#Set_up_pingd
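The usual pattern is to run a connectivity agent as a clone on every node and then ban resources from any node where the connectivity attribute is missing or zero. A sketch in crm configure syntax, using ocf:pacemaker:ping (the agent from Solution 1) rather than the older pingd agent. The IDs P_PING, CL_PING and L_CLUSTER_IP_CONNECTED are hypothetical, and so is the target 10.10.10.1 (assumed to be some upstream address on the eth1 network). A node's own address should not be in host_list, because, as noted above, it keeps answering when its interface is down. Without a name parameter the agent publishes its result as the node attribute pingd.

```
primitive P_PING ocf:pacemaker:ping \
        params host_list="10.10.10.1" multiplier="1000" \
        op monitor interval="10s"
clone CL_PING P_PING
location L_CLUSTER_IP_CONNECTED cluster-ip \
        rule -inf: not_defined pingd or pingd lte 0
```

Since ha-nginx is colocated with cluster-ip, constraining cluster-ip alone should be enough to move both; a -inf rule is a hard ban, unlike the score-based rules in Solution 1, which only make the better-connected node more attractive.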

Somewhat unrelated, but I have seen issues in the past with using ifconfig ... down to test loss of connectivity. I would strongly encourage you to use iptables to drop traffic instead.
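A sketch of that test on srv2, run as root and assuming eth1 is the interface under test as in the question. Unlike ifconfig eth1 down, the link and its addresses stay configured, which is closer to a failed switch or cable. Note that Corosync ring 0 also runs over eth1, so this cuts that ring too.

```shell
# Simulate a dead eth1: drop everything arriving on or leaving through it.
iptables -I INPUT -i eth1 -j DROP
iptables -I OUTPUT -o eth1 -j DROP

# After observing the failover, restore traffic by deleting the same rules.
iptables -D INPUT -i eth1 -j DROP
iptables -D OUTPUT -o eth1 -j DROP

# Partial loss instead: drop roughly half of the incoming packets at random.
iptables -I INPUT -i eth1 -m statistic --mode random --probability 0.5 -j DROP
iptables -D INPUT -i eth1 -m statistic --mode random --probability 0.5 -j DROP
```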


mr.The

Updated on September 18, 2022


So, I have two test servers in one VLAN.

srv1: eth1 10.10.10.11, eth2 10.20.10.11
srv2: eth1 10.10.10.12, eth2 10.20.10.12
Cluster VIP: 10.10.10.100

Corosync config with two interfaces:

    rrp_mode: passive
    interface {
        ringnumber: 0
        bindnetaddr: 10.10.10.0
        mcastaddr: 226.94.1.1
        mcastport: 5405
    }
    interface {
        ringnumber: 1
        bindnetaddr: 10.20.10.0
        mcastaddr: 226.94.1.1
        mcastport: 5407
    }

Pacemaker config:

    # crm configure show
    node srv1
    node srv2
    primitive cluster-ip ocf:heartbeat:IPaddr2 \
            params ip="10.10.10.100" cidr_netmask="24" \
            op monitor interval="5s"
    primitive ha-nginx lsb:nginx \
            op monitor interval="5s"
    location prefer-srv-2 ha-nginx 50: srv2
    colocation nginx-and-cluster-ip +inf: ha-nginx cluster-ip
    property $id="cib-bootstrap-options" \
            dc-version="1.1.6-9971ebba4494012a93c03b40a2c58ec0eb60f50c" \
            cluster-infrastructure="openais" \
            expected-quorum-votes="2" \
            no-quorum-policy="ignore" \
            stonith-enabled="false"

Status:

    # crm status
    ============
    Last updated: Thu Jan 29 13:40:16 2015
    Last change: Thu Jan 29 12:47:25 2015 via crmd on srv1
    Stack: openais
    Current DC: srv2 - partition with quorum
    Version: 1.1.6-9971ebba4494012a93c03b40a2c58ec0eb60f50c
    2 Nodes configured, 2 expected votes
    2 Resources configured.
    ============

    Online: [ srv1 srv2 ]

     cluster-ip    (ocf::heartbeat:IPaddr2):    Started srv2
     ha-nginx      (lsb:nginx):                 Started srv2

Rings:

    # corosync-cfgtool -s
    Printing ring status.
    Local node ID 185207306
    RING ID 0
            id      = 10.10.10.11
            status  = ring 0 active with no faults
    RING ID 1
            id      = 10.20.10.11
            status  = ring 1 active with no faults

And if I run

    srv2# ifconfig eth1 down

Pacemaker still works over eth2, and that's OK. But nginx is not available on 10.10.10.100 (because eth1 is down, yes), and Pacemaker says that everything is OK. I want nginx to move to srv1 after eth1 dies on srv2.

So, what can I do about that?

-

c4f4t0r: Your colocation "colocation nginx-and-cluster-ip +inf: ha-nginx cluster-ip" is strange; a positive score needs to be inf: without a plus in front.


mr.The: @c4f4t0r, it's OK. I tested it even without the plus, and it works the same: ha-nginx and cluster-ip end up on one node.

-

mr.The: @c4f4t0r It will be 2 or 3 nodes, so thanks for the advice.

-

mr.The: I tried it, and it works well, but only when there is 100% connectivity loss. With only ~50% packet loss it works terribly: Pacemaker changes the active node about 5 times a minute. How can I fix it?
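Two parameters of the ping agent may reduce this kind of flapping. dampen delays writing a changed ping_intranet value into the CIB, so a brief dip is less likely to move resources before it recovers. attempts sets how many pings each host gets per check; assuming, as with iputils ping, that any reply counts the host as alive, a link losing about half its packets is much less likely to fail every attempt in one run. A sketch of Solution 1's primitive with both added (edit it with crm configure edit P_INTRANET); the values 30s and 5 are assumptions to tune, and the monitor timeout is raised because more attempts make each check take longer.

```
primitive P_INTRANET ocf:pacemaker:ping \
        params host_list="10.10.10.11 10.10.10.12" multiplier="100" name="ping_intranet" \
               dampen="30s" attempts="5" \
        op monitor interval="15s" timeout="60s"
```

If resource-stickiness is added as well, keep it below the 100-point gap that one lost host creates, otherwise it will also block a genuine failover.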

-

Erik Amundson: Ah HA! I found the 'ocf:heartbeat:ethmonitor' resource in CentOS 8 Pacemaker. THIS looks promising. I will test...
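A sketch of how that agent could replace the ping check for the question's setup, in crm configure syntax. It assumes, per the agent's metadata (verify with crm ra info ocf:heartbeat:ethmonitor on your version), that ethmonitor publishes a node attribute named ethmonitor-IFACE that is non-zero while the link is up and 0 when it is down. The IDs P_ETH1, CL_ETH1 and L_CLUSTER_IP_ETH1 are hypothetical.

```
primitive P_ETH1 ocf:heartbeat:ethmonitor \
        params interface="eth1" \
        op monitor interval="10s" timeout="60s"
clone CL_ETH1 P_ETH1
location L_CLUSTER_IP_ETH1 cluster-ip \
        rule -inf: not_defined ethmonitor-eth1 or ethmonitor-eth1 lte 0
```

Because it watches the interface itself rather than ping replies, this should react to ifconfig eth1 down on srv2 even though srv2 can still ping its own address, and ha-nginx should follow cluster-ip through the existing colocation.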