How do I (re)build/create/assemble an IMSM RAID-0 array from disk images instead of disk drives using mdadm?

Looking at the partition table for /dev/loop0 and the disk image sizes reported for /dev/loop0 and /dev/loop1, I'm inclined to suggest that the two disks were simply bolted together and then the partition table was built for the resulting virtual disk:

```
Disk /dev/loop0: 298.1 GiB, 320072933376 bytes, 625142448 sectors

Device       Boot   Start        End    Sectors   Size Id Type
/dev/loop0p1 *       2048    4196351    4194304     2G  7 HPFS/NTFS/exFAT
/dev/loop0p2      4196352 1250273279 1246076928 594.2G  7 HPFS/NTFS/exFAT
```

and

```
Disk /dev/loop1: 298.1 GiB, 320072933376 bytes, 625142448 sectors
```

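If we take the two disks at 298.1 GiB each, we get 596.2 GiB in total. If we then add the sizes of the two partitions, 2G + 594.2G, we also get 596.2 GiB. (The "G" here is GiB: 1246076928 sectors of 512 bytes is 594.2 GiB.) More tellingly, the second partition ends at sector 1250273279, far beyond the 625142448 sectors available on a single disk.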
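The same argument can be checked in sectors rather than rounded GiB, using only figures from the question's `fdisk -l` and `mdadm --examine` output (512-byte sectors assumed, as fdisk reports). "Num Stripes" times the chunk size times the number of members reproduces the metadata's "Array Size", and the second partition ends just inside it. A sketch; the variable names are mine:

```shell
# Figures copied from `fdisk -l` and `mdadm --examine` in the question.
disk_sectors=625142448   # size of each image (/dev/loop0, /dev/loop1)
p2_end=1250273279        # last sector of /dev/loop0p2
num_stripes=2441944      # "Num Stripes" in the IMSM metadata
chunk_kib=128            # "Chunk Size" in the IMSM metadata
members=2                # "Members" in the IMSM metadata

# A partition ending beyond the last sector of one disk only makes sense
# if the partition table describes the combined (striped) volume.
if [ "$p2_end" -ge "$disk_sectors" ]; then
    echo "partition 2 ends past the end of a single disk"
fi

# Stripes per member x chunk size in 512-byte sectors x members.
array_sectors=$((num_stripes * chunk_kib * 2 * members))
echo "array size from IMSM metadata: $array_sectors sectors"

# The whole partition table has to fit inside the array.
if [ "$p2_end" -lt "$array_sectors" ]; then
    echo "partition 2 fits inside the striped volume"
fi
```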

You have already said that you cannot get mdadm to recognise the superblock information, so purely on the basis of the partition table I would attempt to build the array like this:

```
mdadm --build /dev/md0 --raid-devices=2 --level=0 --chunk=128 /dev/loop0 /dev/loop1
cat /proc/mdstat
```

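I have used a chunk size of 128 KiB to match the chunk size recorded in the IMSM metadata still present on the disks. The device order matters as well: /dev/loop0 carries the partition table, so it has to be the first member.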
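One way to confirm the chunk size took effect is the block count in /proc/mdstat. Assuming md's RAID-0 rounds each member down to a whole number of chunks (which reproduces the 625142400 blocks reported in the comments for the default 64k chunk), the expected count can be worked out in advance. A sketch; both function names are hypothetical:

```shell
# Expected size of an md RAID-0 array in 1 KiB blocks (the unit used by
# /proc/mdstat), assuming each member is rounded down to whole chunks.
# Arguments: member size in 512-byte sectors, member count, chunk in KiB.
md_raid0_blocks() {
    chunk_sectors=$(($3 * 2))
    per_member=$(($1 / chunk_sectors * chunk_sectors))
    echo $((per_member * $2 / 2))
}

# Extract the "NNk chunks" figure from /proc/mdstat text on stdin.
mdstat_chunk_kib() {
    sed -n 's/.* \([0-9][0-9]*\)k chunks.*/\1/p'
}

md_raid0_blocks 625142448 2 64    # prints 625142400
md_raid0_blocks 625142448 2 128   # prints 625142272
```

After the `--build`, something like `grep -A1 '^md0' /proc/mdstat | mdstat_chunk_kib` should print 128.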

If that works, you can then access the second partition inside the resulting RAID-0 device:

```
ld=$(losetup --show --find --offset=$((4196352*512)) /dev/md0)
echo "loop device is $ld"
mkdir -p /mnt/dsk
mount -t ntfs -o ro "$ld" /mnt/dsk
```

We already have a couple of loop devices in use, so rather than assuming the name of the next free loop device I've asked losetup to pick one (`--find`) and report which one it used (`--show`); this is put into `$ld`. The offset of 4196352 sectors (each of 512 bytes) is where the second partition starts according to the partition table, so the new loop device begins at the first byte of that partition. The `-o ro` option mounts the NTFS filesystem read-only. We could equally have omitted the offset from the losetup command and added it to the mount options.
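Rather than hard-coding 4196352, the start sector could be read from the partition table that appears on /dev/md0 once the array is built. A sketch, assuming sfdisk's `--dump` line format (`/dev/md0p2 : start= 4196352, size= ...`) and 512-byte sectors; `part_offset_bytes` is a hypothetical helper name:

```shell
# Print the byte offset of partition number $1, reading `sfdisk --dump`
# output on stdin. Assumes 512-byte logical sectors.
part_offset_bytes() {
    start=$(awk -v n="$1" '
        # Partition lines look like "/dev/md0p2 : start= 4196352, size= ..."
        $1 ~ ("[^0-9]" n "$") && /start=/ {
            match($0, /start= *[0-9]+/)
            s = substr($0, RSTART, RLENGTH)
            gsub(/[^0-9]/, "", s)   # keep only the sector number
            print s
            exit
        }')
    [ -n "$start" ] || return 1   # no such partition
    # Multiply in the shell: some awk builds may print large numbers
    # in exponent notation.
    echo $((start * 512))
}
```

Used in place of the hard-coded offset, this would be something like `ld=$(losetup --show --find --read-only --offset="$(sfdisk --dump /dev/md0 | part_offset_bytes 2)" /dev/md0)`.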


Ryan

I'm a programmer who focuses on web development. Occasionally I work in RHEL or Ubuntu.

Updated on September 18, 2022


Ryan almost 2 years

The question: Using Linux and mdadm, how can I read/copy data as files from disk images made from hard disks used in an Intel Rapid Storage Technology RAID-0 array (formatted as NTFS, Windows 7 installed)?

The problem: One of the drives in the array is going bad, so I'd like to copy as much data as possible before replacing the drive (and thus destroying the array).

I am open to alternative solutions to this question if they solve my problem.

Background

I have a laptop with an Intel Rapid Storage Technology controller (referred to in various contexts as RST, RSTe, or IMSM) that has two (2) hard disks configured in RAID-0 (FakeRAID-0). RAID-0 was not my choice as the laptop was delivered to me in this configuration. One of the disks seems to have accumulated a lot of bad sectors, while the other disk is perfectly healthy. Together, the disks are still healthy enough to boot into the OS (Windows 7 64-bit), but the OS will sometimes hang when accessing damaged disk areas, and it seems like a bad idea to continue trying to use damaged disks. I'd like to copy as much data as possible off of the disks and then replace the damaged drive. Since operating live on the damaged disk is considered bad, I decided to image both disks so I could later mount the images using mdadm or something equivalent. I've spent a lot of time and done a lot of reading, but I still haven't successfully managed to mount the disk images as a (Fake)RAID-0 array. I'll try to recall the steps I performed here. Grab some snacks and a beverage, because this is lengthy.

First, I got a USB external drive to run Ubuntu 15.10 64-bit off of a partition. Using a LiveCD or small USB thumb drive was easier to boot, but slower than an external drive (and a LiveCD isn't a persistent install). I installed ddrescue and used it to produce an image of each hard disk. There were no notable issues with creating the images.
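For reference, a common two-pass GNU ddrescue pattern for a failing disk looks like the sketch below; it is not necessarily what was run here. `/dev/sdX` and the image name are placeholders, and `DRY_RUN` is a hypothetical switch that prints the commands instead of running them. In recent ddrescue versions `-n` skips the slow scraping phase, `-d` uses direct disc access and `-r3` retries bad sectors three times; the mapfile lets a later pass resume where the first left off.

```shell
# Print the command instead of running it when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Image one disk in two passes. $1 = source disk, $2 = image file.
image_disk() {
    # Pass 1: copy everything that reads cleanly, skip scraping bad areas.
    run ddrescue -n "$1" "$2" "$2.map"
    # Pass 2: revisit the bad areas with direct access and 3 retries.
    run ddrescue -d -r3 "$1" "$2" "$2.map"
}
```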

Once I got the images, I installed mdadm using apt. However, this installed an older version of mdadm from 2013. The changelogs for more recent versions indicated better support for IMSM, so I compiled and installed mdadm 3.4 using this guide, including upgrading to a kernel at or above 4.4.2. The only notable issue here was that some tests did not succeed, but the guide seemed to indicate that that was acceptable.

After that, I read in a few places that I would need to use loop devices to be able to use the images. I attached the disk images as /dev/loop0 and /dev/loop1 with no issues.
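Since a later step in this question ended up writing IMSM metadata onto one of the images, it may be worth attaching the images read-only while they are only being inspected (`fdisk -l`, `mdadm --examine`). A minimal sketch; the image file names are placeholders and `DRY_RUN` is a hypothetical switch that prints the commands instead of running them:

```shell
# Print the command instead of running it when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Attach each image read-only to the next free loop device.
# --find picks the first unused device and --show prints its name,
# so nothing assumes the images land on /dev/loop0 and /dev/loop1.
attach_ro() {
    for img in "$@"; do
        run losetup --find --show --read-only "$img"
    done
}
```

For example, `attach_ro disk0.img disk1.img` prints one loop device name per image.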

Here is some relevant info at this point of the process...

mdadm --detail-platform:

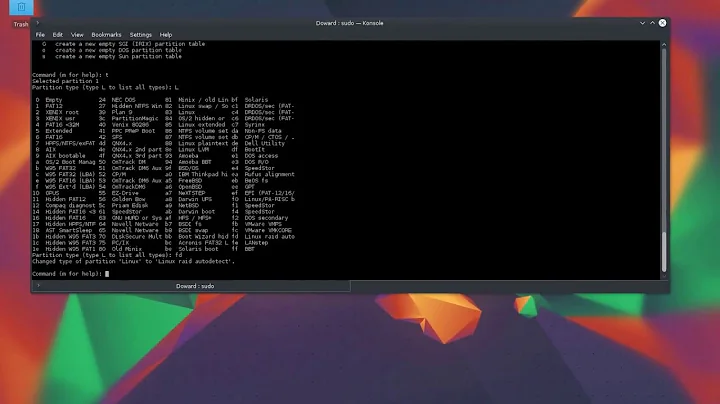

```
$ sudo mdadm --detail-platform
       Platform : Intel(R) Rapid Storage Technology
        Version : 10.1.0.1008
    RAID Levels : raid0 raid1 raid5
    Chunk Sizes : 4k 8k 16k 32k 64k 128k
    2TB volumes : supported
      2TB disks : not supported
      Max Disks : 7
    Max Volumes : 2 per array, 4 per controller
 I/O Controller : /sys/devices/pci0000:00/0000:00:1f.2 (SATA)
          Port0 : /dev/sda (W0Q6DV7Z)
          Port3 : - non-disk device (HL-DT-ST DVD+-RW GS30N) -
          Port1 : /dev/sdb (W0Q6CJM1)
          Port2 : - no device attached -
          Port4 : - no device attached -
          Port5 : - no device attached -
```

fdisk -l:

```
$ sudo fdisk -l
Disk /dev/loop0: 298.1 GiB, 320072933376 bytes, 625142448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x2bd2c32a

Device       Boot   Start        End    Sectors   Size Id Type
/dev/loop0p1 *       2048    4196351    4194304     2G  7 HPFS/NTFS/exFAT
/dev/loop0p2      4196352 1250273279 1246076928 594.2G  7 HPFS/NTFS/exFAT

Disk /dev/loop1: 298.1 GiB, 320072933376 bytes, 625142448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 298.1 GiB, 320072933376 bytes, 625142448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: dos
Disk identifier: 0x2bd2c32a

Device     Boot   Start        End    Sectors   Size Id Type
/dev/sda1  *       2048    4196351    4194304     2G  7 HPFS/NTFS/exFAT
/dev/sda2       4196352 1250273279 1246076928 594.2G  7 HPFS/NTFS/exFAT

Disk /dev/sdb: 298.1 GiB, 320072933376 bytes, 625142448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
```

mdadm --examine --verbose /dev/sda:

```
$ sudo mdadm --examine --verbose /dev/sda
/dev/sda:
          Magic : Intel Raid ISM Cfg Sig.
        Version : 1.0.00
    Orig Family : 81bdf089
         Family : 81bdf089
     Generation : 00001796
     Attributes : All supported
           UUID : acf55f6b:49f936c5:787fa66e:620d7df0
       Checksum : 6cf37d06 correct
    MPB Sectors : 1
          Disks : 2
   RAID Devices : 1

[ARRAY]:
           UUID : e4d3f954:2f449bfd:43495615:e040960c
     RAID Level : 0
        Members : 2
          Slots : [_U]
    Failed disk : 0
      This Slot : ?
     Array Size : 1250275328 (596.18 GiB 640.14 GB)
   Per Dev Size : 625137928 (298.09 GiB 320.07 GB)
  Sector Offset : 0
    Num Stripes : 2441944
     Chunk Size : 128 KiB
       Reserved : 0
  Migrate State : idle
      Map State : normal
    Dirty State : clean

  Disk00 Serial : W0Q6DV7Z
          State : active failed
             Id : 00000000
    Usable Size : 625136142 (298.09 GiB 320.07 GB)

  Disk01 Serial : W0Q6CJM1
          State : active
             Id : 00010000
    Usable Size : 625136142 (298.09 GiB 320.07 GB)
```

mdadm --examine --verbose /dev/sdb:

```
$ sudo mdadm --examine --verbose /dev/sdb
/dev/sdb:
          Magic : Intel Raid ISM Cfg Sig.
        Version : 1.0.00
    Orig Family : 81bdf089
         Family : 81bdf089
     Generation : 00001796
     Attributes : All supported
           UUID : acf55f6b:49f936c5:787fa66e:620d7df0
       Checksum : 6cf37d06 correct
    MPB Sectors : 1
          Disks : 2
   RAID Devices : 1

  Disk01 Serial : W0Q6CJM1
          State : active
             Id : 00010000
    Usable Size : 625137928 (298.09 GiB 320.07 GB)

[ARRAY]:
           UUID : e4d3f954:2f449bfd:43495615:e040960c
     RAID Level : 0
        Members : 2
          Slots : [_U]
    Failed disk : 0
      This Slot : 1
     Array Size : 1250275328 (596.18 GiB 640.14 GB)
   Per Dev Size : 625137928 (298.09 GiB 320.07 GB)
  Sector Offset : 0
    Num Stripes : 2441944
     Chunk Size : 128 KiB
       Reserved : 0
  Migrate State : idle
      Map State : normal
    Dirty State : clean

  Disk00 Serial : W0Q6DV7Z
          State : active failed
             Id : 00000000
    Usable Size : 625137928 (298.09 GiB 320.07 GB)
```

Here is where I ran into difficulty. I tried to assemble the array.

```
$ sudo mdadm --assemble --verbose /dev/md0 /dev/loop0 /dev/loop1
mdadm: looking for devices for /dev/md0
mdadm: Cannot assemble mbr metadata on /dev/loop0
mdadm: /dev/loop0 has no superblock - assembly aborted
```

I get the same result by using `--force` or by swapping /dev/loop0 and /dev/loop1.

Since IMSM is a CONTAINER type FakeRAID, I'd seen some indications that you have to create the container instead of assembling it. I tried...

```
$ sudo mdadm -CR /dev/md/imsm -e imsm -n 2 /dev/loop[01]
mdadm: /dev/loop0 is not attached to Intel(R) RAID controller.
mdadm: /dev/loop0 is not suitable for this array.
mdadm: /dev/loop1 is not attached to Intel(R) RAID controller.
mdadm: /dev/loop1 is not suitable for this array.
mdadm: create aborted
```

After reading a few more things, it seemed that the missing pieces were the IMSM_NO_PLATFORM and IMSM_DEVNAME_AS_SERIAL environment variables. After futzing around with trying to get environment variables to persist with sudo, I tried...

```
$ sudo IMSM_NO_PLATFORM=1 IMSM_DEVNAME_AS_SERIAL=1 mdadm -CR /dev/md/imsm -e imsm -n 2 /dev/loop[01]
mdadm: /dev/loop0 appears to be part of a raid array:
       level=container devices=0 ctime=Wed Dec 31 19:00:00 1969
mdadm: metadata will over-write last partition on /dev/loop0.
mdadm: /dev/loop1 appears to be part of a raid array:
       level=container devices=0 ctime=Wed Dec 31 19:00:00 1969
mdadm: container /dev/md/imsm prepared.
```

That's something. Taking a closer look...

```
$ ls -l /dev/md
total 0
lrwxrwxrwx 1 root root 8 Apr  2 05:32 imsm -> ../md126
lrwxrwxrwx 1 root root 8 Apr  2 05:20 imsm0 -> ../md127
```

/dev/md/imsm0 and /dev/md127 are associated with the physical disk drives (/dev/sda and /dev/sdb). /dev/md/imsm (pointing to /dev/md126) is the newly created container based on the loop devices. Taking a closer look at that...

```
$ sudo IMSM_NO_PLATFORM=1 IMSM_DEVNAME_AS_SERIAL=1 mdadm -Ev /dev/md/imsm
/dev/md/imsm:
          Magic : Intel Raid ISM Cfg Sig.
        Version : 1.0.00
    Orig Family : 00000000
         Family : ff3cb556
     Generation : 00000001
     Attributes : All supported
           UUID : 00000000:00000000:00000000:00000000
       Checksum : 7edb0f81 correct
    MPB Sectors : 1
          Disks : 1
   RAID Devices : 0

  Disk00 Serial : /dev/loop0
          State : spare
             Id : 00000000
    Usable Size : 625140238 (298.09 GiB 320.07 GB)

    Disk Serial : /dev/loop1
          State : spare
             Id : 00000000
    Usable Size : 625140238 (298.09 GiB 320.07 GB)

    Disk Serial : /dev/loop0
          State : spare
             Id : 00000000
    Usable Size : 625140238 (298.09 GiB 320.07 GB)
```

That looks okay. Let's try to start the array. I found information (here and here) that said to use Incremental Assembly mode to start a container.

```
$ sudo IMSM_NO_PLATFORM=1 IMSM_DEVNAME_AS_SERIAL=1 mdadm -I /dev/md/imsm
```

That gave me nothing. Let's use the verbose flag.

```
$ sudo IMSM_NO_PLATFORM=1 IMSM_DEVNAME_AS_SERIAL=1 mdadm -Iv /dev/md/imsm
mdadm: not enough devices to start the container
```

Oh, bother. Let's check /proc/mdstat.

```
$ sudo cat /proc/mdstat
Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md126 : inactive loop1[1](S) loop0[0](S)
      2210 blocks super external:imsm

md127 : inactive sdb[1](S) sda[0](S)
      5413 blocks super external:imsm

unused devices: <none>
```

Well, that doesn't look right: the block counts don't match. Looking closely at the messages from when I created the container, mdadm said "metadata will over-write last partition on /dev/loop0", so I'm guessing that the image file associated with /dev/loop0 is hosed. Thankfully, I have backup copies of these images, so I can grab those and start over, but it takes a while to re-copy 300-600GB, even over USB3.
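Comparing /proc/mdstat entries by eye is awkward because each array's name and its block count sit on different lines. A small awk sketch (the function name is hypothetical) that prints one line per array with its state and size in 1 KiB blocks:

```shell
# Summarise /proc/mdstat-style text on stdin as "name state blocks".
# The block count is on the line after the "mdN : state ..." line.
mdstat_summary() {
    awk '
        /^md[0-9]+ :/ { name = $1; state = $3; next }
        name != "" && / blocks / { print name, state, $1; name = "" }
    '
}
```

Run as `mdstat_summary < /proc/mdstat`, the output above would reduce to `md126 inactive 2210` and `md127 inactive 5413`.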

Anyway, I'm stumped. I hope someone out there has an idea, because I've got no clue what to try next.

Is this the right path for addressing this problem, and I just need to get some settings right? Or is the above approach completely wrong for mounting IMSM RAID-0 disk images?


Matej about 8 years: Hey, did you manage to find some sort of solution to this? I'm having almost exactly the same symptoms as you. I bought an MSI Stealth Pro with two 256GB SSDs in RAID-0, dual booted Ubuntu 14.04 and Windows 10 on it for nearly a year now, and yesterday Ubuntu just refused to boot, warning that the array could not be assembled.


Ryan about 8 years: I did not. I ended up just booting from a Windows Recovery Disc so I could access the array and used robocopy to copy all files that I could access. I could not continue to justify not having a working computer for the sake of my curiosity. I do still have the disk images though, to test possible future solutions. I'll gladly drop another bounty on here to see if I can attract some attention.


Ryan about 8 years: @Matej Check roaima's answer below. That solved my problem. Hopefully it helps you out!


Matej about 8 years: Thanks a lot for letting me know, looks promising! I'll try it out as soon as I can and report back here.


Ryan about 8 years: Standard cautionary disclaimer: Keep in mind that I operated on disk images created by ddrescue and not on the physical disks themselves. Working directly with live physical disks may make things worse if you do something wrong. Good luck!


Ryan about 8 years: "I'm inclined to suggest that the two disks were simply bolted together and then the partition table was built for the resulting virtual disk" That is my guess as well, I just couldn't figure out how to make mdadm recognize the configuration. I'll try to get a chance this week to try out your suggestion here.


roaima about 8 years: @Ryan I've taken the approach that "if it looks like a duck, walks like a duck, quacks like a duck, then we can assume it is a duck". Far simpler than all this IMSM stuff but quite possibly entirely wrong, too. Either way, good luck with the result.


Ryan about 8 years: I got up to the last step of your instructions, `mount ... -o ro $p2 /mnt/dsk`, but I seem to be doing something wrong. When I run that, I just get the `mount` usage message in the terminal. Was that a literal "..." or was I meant to fill something in there?

Ryan about 8 years: Also, the output of `cat /proc/mdstat` was: `md0 : active raid0 loop1[1] loop0[0] 625142400 blocks super non-persistent 64k chunks`. If the RAID was using 128K stripes, should I have specified that in the mdadm command?


roaima about 8 years: @Ryan fill in the `...` to match your filesystem type


roaima about 8 years: @Ryan somehow I missed the chunk size. Yes, use `--chunk=128` for the 128KiB chunk size. Answer also updated, thank you

Ryan about 8 years: Sorry, I'm not particularly good at mount commands. Would replacing "..." with `-t ntfs` suffice, or is there more to it?

Ryan about 8 years: That did the trick! After running into a few minor errors (had to get the chunk size right, had to create /mnt/dsk first, had to specify `-t ntfs` with the `mount` command), I successfully mounted the disk image and opened it, and there are all of my old files, seemingly intact. Thank you so much @roaima!

Ryan about 8 years: If you could also briefly explain the flags/options that you used for `losetup` and `mount`, that would be helpful for others who come by this post later. Thanks!

roaima about 8 years: @Ryan further explanations added as requested
