How to find and delete duplicate files within the same directory?

Solution 1

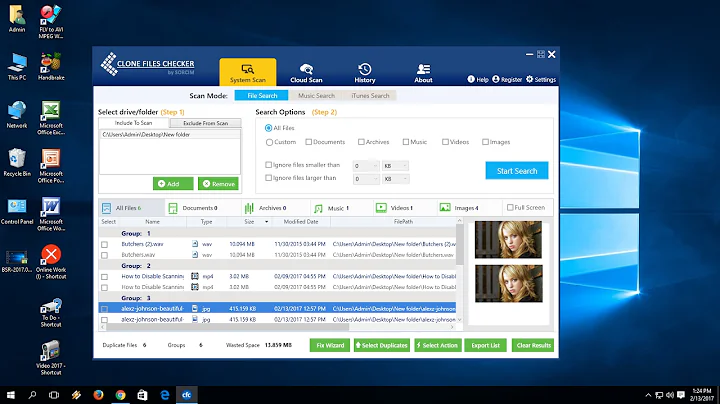

If you're happy to simply use a command line tool, and not have to create a shell script, the fdupes program is available on most distros to do this.

There's also the GUI based fslint tool that has the same functionality.

Solution 2

This solution will find duplicates in O(n) time. Each file has a checksum generated for it, and each file in turn is compared to the set of known checksums via an associative array.

#!/bin/bash

#

# Usage: ./delete-duplicates.sh [<files...>]

#

declare -A filecksums

# No args, use files in current directory

test 0 -eq $# && set -- *

for file in "$@"

do

# Files only (also no symlinks)

[[ -f "$file" ]] && [[ ! -h "$file" ]] || continue

# Generate the checksum

cksum=$(cksum <"$file" | tr ' ' _)

# Have we already got this one?

if [[ -n "${filecksums[$cksum]}" ]] && [[ "${filecksums[$cksum]}" != "$file" ]]

then

echo "Found '$file' is a duplicate of '${filecksums[$cksum]}'" >&2

echo rm -f "$file"

else

filecksums[$cksum]="$file"

fi

done

If you don't specify any files (or wildcards) on the command line it will use the set of files in the current directory. It will compare files in multiple directories but it is not written to recurse into directories themselves.

The "first" file in the set is always considered the definitive version. No consideration is taken of file times, permissions or ownerships. Only the content is considered.

Remove the echo from the rm -f "$file" line when you're sure it does what you want. Note that if you were to replace that line with ln -f "${filecksums[$cksum]}" "$file" you could hard-link the content. Same saving in disk space but you wouldn't lose the file names.

Solution 3

The main issue in your script seems to be that i takes the actual file names as values, while j is just a number. Taking the names to an array and using both i and j as indices should work:

files=(*)

count=${#files[@]}

for (( i=0 ; i < count ;i++ )); do

for (( j=i+1 ; j < count ; j++ )); do

if diff -q "${files[i]}" "${files[j]}" >/dev/null ; then

echo "${files[i]} and ${files[j]} are the same"

fi

done

done

(Seems to work with Bash and the ksh/ksh93 Debian has.)

The assignment a=(this that) would initialize the array a with the two elements this and that (with indices 0 and 1). Wordsplitting and globbing works as usual, so files=(*) initializes files with the names of all files in the current directory (except dotfiles). "${files[@]}" would expand to all elements of the array, and the hash sign asks for a length, so ${#files[@]} is the number of elements in the array. (Note that ${files} would be the first element of the array, and ${#files} is the length of the first element, not the array!)

for i in `/folder/*`

The backticks here are surely a typo? You'd be running the first file as a command and giving the rest as arguments to it.

Solution 4

There are tools that do this, and do it more efficiently. Your solution when it is working is O(n²) that is the time it takes to run is proportional to n² where n is the size of the problem in total bytes in files. The best algorithm could do this in close to O(n). (A am discussing big-O notation, a way to summarise how efficient an algorithm is.)

First you would create a hash of each file, and only compare these: this saves a lot of time if you have a lot of large files that are almost the same.

Second you would use short cut methods: If files have different sizes, then they are not the same. Unless there is another file of same size, don't even open it.

Related videos on Youtube

Su_scriptingbee

Updated on September 18, 2022Comments

-

Su_scriptingbee over 1 year

I want to find duplicate files, within a directory, and then delete all but one, to reclaim space. How do I achieve this using a shell script?

For example:

pwd folderFiles in it are:

log.bkp log extract.bkp extractI need to compare log.bkp with all the other files and if a duplicate file is found (by it's content), I need to delete it. Similarly, file 'log' has to be checked with all other files, that follow, and so on.

So far, I have written this, But it's not giving desired result.

#!/usr/bin/env ksh count=`ls -ltrh /folder | grep '^-'|wc -l` for i in `/folder/*` do for (( j=i+1; j<=count; j++ )) do echo "Current two files are $i and $j" sdiff -s $i $j if [ `echo $?` -eq 0 ] then echo "Contents of $i and $j are same" fi done done-

ctrl-alt-delor almost 7 yearsnote

ctrl-alt-delor almost 7 yearsnoteecho $?is equivalent to$?. Also$(command)is preferred to using back ticks. -

baptx over 5 yearsHere is a one-line script to find duplicate files with subfolders support, it may help others also:

baptx over 5 yearsHere is a one-line script to find duplicate files with subfolders support, it may help others also:md5sum `find -type f` | sort | uniq -D -w 32(superuser.com/questions/259148/…) It does not automatically delete duplicates but it could be changed if you really want to.

-

-

Su_scriptingbee almost 7 yearsThank you!! Its giving me the output. Could you pls elaborate on first two lines?

-

ilkkachu almost 7 years@Su_scriptingbee, elaborated. Bash's manual has a page on arrays if you need a reference. I think it should be similar in ksh, except that bash uses

ilkkachu almost 7 years@Su_scriptingbee, elaborated. Bash's manual has a page on arrays if you need a reference. I think it should be similar in ksh, except that bash usesdeclarein place of ksh'stypeset. (I may have missed some differences, though.) -

Su_scriptingbee almost 7 yearsCool, this is working. I just tested on dir with less than 10 files.

-

Su_scriptingbee almost 7 yearsIt will be helpful if you elaborate on "test 0 -eq $# && set -- * "

-

Su_scriptingbee almost 7 yearsBoth of these utilities are not installed in my systems!

-

einonm almost 7 years@Su_scriptingbee If you let us know what your system is, a way to install it could possible be suggested.

einonm almost 7 years@Su_scriptingbee If you let us know what your system is, a way to install it could possible be suggested. -

Olivier Dulac almost 7 years@Su_scriptingbee: could be rewritten as :

[ 0 -eq $# ] && set -- *. To explain: test 0 -eq "$#" compares (numerically, with -eq) "0" with "$#" (which is the number of arguments passed to the shell script). If 0, there was no arguments passed, and then (&&) it creates an argument list withset -- *(*which will expand to all the files and dirs in the current directory (as the script as no "cd" before that, it will be the directory the person was in when launching the script, or the home-dir of the user starting the script if the script is launched remotely or via something like cron). -

roaima almost 7 years@Su_scriptingbee

roaima almost 7 years@Su_scriptingbeetest 0 -eq $#asks if there are any command-line arguments.set -- *sets the command-line arguments to be the set of items (files, directories, etc.) in the current directory. The&&allows the second part to run only if the the first part succeeds. -

Lasith Niroshan over 6 years

Lasith Niroshan over 6 yearsfinddupcan find duplicates. please read the manual page. As well as you can usefdupes. -

A.G. almost 3 years@Su_scriptingbee you can

brew install fdupesif you havebrewinstalled.