script to count files in a directory

Solution 1

If you want only regular files,

With GNU find:

find . -maxdepth 1 -type f -printf . | wc -c

Other finds:

find . ! -name . -prune -type f -print | grep -c /

(you don't want -print | wc -l as that wouldn't work if there are file names with newline characters).
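To see why the line-based count is unreliable, here is a small sketch. It assumes a throwaway directory from mktemp and two made-up file names, one of which contains a newline, and counts the same two files both ways:

```shell
#!/bin/sh
# Scratch directory and file names are hypothetical, created only for this demo.
dir=$(mktemp -d) || exit 1
cd "$dir" || exit 1

# Two regular files; the second name contains a newline character.
touch plain 'two
lines'

# Line-based count: the newline inside the name adds a spurious line.
by_lines=$(find . ! -name . -prune -type f -print | wc -l)

# Slash-based count: each entry is printed as ./name, so only the
# first line of each entry contains a slash.
by_slash=$(find . ! -name . -prune -type f -print | grep -c /)

echo "wc -l:     $by_lines"
echo "grep -c /: $by_slash"

cd / && rm -rf "$dir"
```

With two files present, the wc -l pipeline reports 3 while the grep -c / pipeline reports 2.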

With zsh:

files=(*(ND.)); echo $#files

With ls:

ls -Anq | grep -c '^-'

To include symlinks to regular files, change -type f to -xtype f with GNU find, or to -exec test -f {} \; with other finds; with zsh, change . to -. in the glob qualifier; with ls, add the -L option. Note, however, that you may get false negatives when the type of a symlink's target can't be determined (for instance, because it lies in a directory you don't have access to).
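As an illustration of the difference, the following sketch uses the portable -exec test -f {} \; form. The scratch directory, the file names, and the path /nonexistent/x (assumed not to exist) are all made up for the demo:

```shell
#!/bin/sh
# Scratch directory and file names are hypothetical, made up for this demo.
dir=$(mktemp -d) || exit 1
cd "$dir" || exit 1

touch target                 # a regular file
ln -s target link            # symlink to a regular file
ln -s /nonexistent/x broken  # symlink whose target cannot be resolved

# -type f looks at the entry itself, so symlinks are not counted.
files_only=$(find . ! -name . -prune -type f -print | grep -c /)

# test -f follows symlinks, so "link" is counted too; "broken" is not,
# because the type of its target cannot be determined.
with_links=$(find . ! -name . -prune -exec test -f {} \; -print | grep -c /)

echo "regular files only:         $files_only"
echo "including symlinks to them: $with_links"

cd / && rm -rf "$dir"
```

Here the first count is 1 and the second is 2. The -exec form runs one test process per entry, so it is slower than -xtype on large directories.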

If you want any type of file (symlinks, directories, pipes, devices...), not only regular ones:

find . ! -name . -prune -printf . | wc -c

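(With non-GNU find, change -printf . | wc -c to -print | grep -c /; with zsh, change (ND.) to (ND); with ls, use ls -Aq | wc -l, dropping both the grep and the -n, since the long listing starts with a "total" header line that wc -l would also count.)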

That will however not count . or .. (generally, one doesn't really care about those as they are always there) unless you replace -A with -a with ls.

If you want all types of files except directories, replace -type f with ! -type d (or ! -xtype d to also exclude symlinks to directories); with zsh, replace . with ^/; with ls, replace grep -c '^-' with grep -vc '^d' (and subtract one for the "total" header line of the long listing, which does not start with d either).

If you want to exclude hidden files, add ! -name '.*' with find, remove the D with zsh, or remove the A with ls.

Solution 2

To iterate over everything in the current directory, use

for f in ./*; do

Then use either

[ -f "$f" ]

or

test -f "$f"

to test whether the thing you got is a regular file (or a symbolic link to one) or not.

Note that this will exclude files that are sockets or special in other ways.

To just exclude directories, use

[ ! -d "$f" ]

instead.

Quoting the variable is important: without it, the shell splits the value on whitespace, so the test would go wrong if, for example, there is a directory called hello world and a file called hello.

To also match hidden files (files with a leading . in their filenames), either set the dotglob shell option with shopt -s dotglob (in bash), or use

for f in ./* ./.*; do

It may be wise also to set the nullglob shell option (in bash) if you want the shell to return nothing when these glob patterns match nothing (otherwise you'll get the literal strings ./* and/or ./.* in f).

Note: I avoid giving a complete script as this is an assignment.

Solution 3

To exclude subdirectories

find . -maxdepth 1 -type f | wc -l

This assumes that none of the filenames contain newline characters, and that your implementation of find supports the -maxdepth option.

Solution 4

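#!/bin/bash

# initialize counter
count=0

# go through the whole directory listing, including hidden files
for name in * .*
do
    # skip a pattern that matched nothing and was left as a literal string
    [[ -e $name || -L $name ]] || continue

    if [[ ! -d $name ]]
    then
        # not a directory so count it
        count=$((count + 1))
    fi
done

echo "$count"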

Solution 5

Here's a bash script that will count up and then echo the number of (non-directory) files in the current directory:

#!/usr/bin/env bash

i=0
shopt -s nullglob dotglob

for file in *
do
    [[ ! -d "$file" ]] && i=$((i+1))
done

echo "$i"

Setting "nullglob" gets the count right when there are no files (hidden or otherwise) in the current directory; leaving nullglob unset would mean that the for loop would (incorrectly) see one item: *.

Setting "dotglob" tells bash to also include hidden files (those whose names start with a .) when globbing with *. By default, bash will not include . or .. (see: GLOBIGNORE) when generating the list of hidden files. If your assignment does not want to count hidden files, then do not set dotglob.
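The effect of the two options can be checked with a short bash sketch. The helper count_matches and the scratch directory are hypothetical names introduced only for this demo; the helper simply reports how many words a bare * expands to under the current settings:

```shell
#!/usr/bin/env bash
# count_matches is a hypothetical helper for this demo: it prints the
# number of words that a bare * expands to in the current directory.
count_matches() {
    local items=(*)
    echo "${#items[@]}"
}

dir=$(mktemp -d) || exit 1
cd "$dir" || exit 1

# Empty directory, both options off: * stays as the literal string "*".
shopt -u nullglob dotglob
empty_without_nullglob=$(count_matches)

# Empty directory, nullglob on: * expands to nothing.
shopt -s nullglob
empty_with_nullglob=$(count_matches)

# One hidden and one visible file, dotglob still off: only "visible" matches.
touch .hidden visible
visible_only=$(count_matches)

# dotglob on: ".hidden" matches as well, but . and .. do not.
shopt -s dotglob
with_hidden=$(count_matches)

echo "$empty_without_nullglob $empty_with_nullglob $visible_only $with_hidden"

cd / && rm -rf "$dir"
```

The four counts come out as 1, 0, 1 and 2, which matches the off-by-one described above for an empty directory without nullglob.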

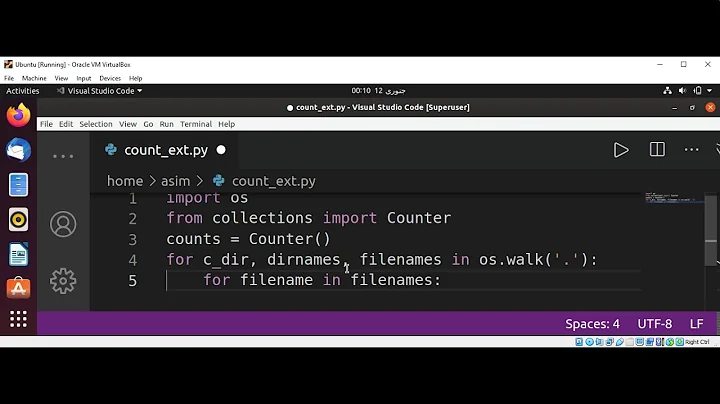

Related videos on Youtube

user182477

Updated on September 18, 2022

Comments

user182477 over 1 year

I'm looking for a script that counts files in the current directory (excluding sub directories). It should iterate through each file and if not a directory increment a count. The output should just be an integer representing the number of files.

I've come up with

find . -type f | wc -l

But I don't really think it does the whole counting bit. This is for an assignment, so if you only want to point me in the right direction that would be great.

roaima almost 8 years

It's very close indeed. All you need is an extra operator to tell find not to descend into subdirectories. The -maxdepth 1 will do that for you.

Jeff Schaller almost 8 years

There's been some updates in the answers as to whether "hidden" files should count or not - do they? Also, find-based answers do not explicitly increment a counter -- is that a requirement?

G-Man Says 'Reinstate Monica' almost 8 years

… although the correct solution doesn’t appear until the last six lines of slm’s answer. Stéphane Chazelas also provides a very inclusive answer.

Kusalananda almost 8 years

@JeffSchaller Thanks. I think I fixed that now.

Stéphane Chazelas almost 8 years

That doesn't work if there are filenames with newline characters. Replace wc -l with grep -c /. You can't assume a file path to be a line of text, you can't even assume it to be text at all as generally on Unix-like systems, file paths are just arrays of non-0 bytes, with nothing enforcing those bytes to form valid characters in the current locale. In that regard grep -c / is not even guaranteed to work (though in practice it generally does) as grep is only required to work properly on valid text.

roaima almost 8 years

@StéphaneChazelas given that the context is an assignment this Answer is almost certainly sufficient.

Stéphane Chazelas almost 8 years

@roaima, on the contrary, if that's about teaching, you want to teach the right thing and good practice. We don't want the next generation of coders making those incorrect assumptions and writing unreliable/vulnerable code.

user4556274 almost 8 years

Not sure of stackexchange etiquette, but choosing not to edit my answer in response to @StéphaneChazelas comment, as his own answer covers this improvement and more.

Stéphane Chazelas almost 8 years

Quoting only disables the split+glob operator, it does nothing to change the meaning of - (unless - is in $IFS). test -f -x or test -f "-x" are the same thing (and are generally not a problem except in very old shells, but then you'd solve the problem with for f in ./*, not with quoting). Also note that test -f hello world would give you an error regardless of whether hello is a regular file or not (except in AT&T ksh).

Kusalananda almost 8 years

@StéphaneChazelas I should write all of these things down... Thanks again! I'll remove the passage about - as I'm bypassing it with ./*.

Stéphane Chazelas almost 8 years

@Kusalananda, ls -aq | wc -l would be OK as then filenames are guaranteed to be on single lines (since a \n in the filename is rendered as ?). So the first example is almost right. The rest have more issues.

Stéphane Chazelas almost 8 years

@Kusalananda, ls only does (may do) that when the output goes to a tty device. Here, it's going to a pipe or socketpair depending on the shell. The issues would be more about filenames containing IFS or glob characters (try after touch '*' 'a b' for instance).

Kusalananda almost 8 years

@StéphaneChazelas My comment was in this case a comment on the recommendation to use ls for these sort of things. It's not the tool for the job really.

Kusalananda almost 8 years

@StéphaneChazelas Thanks for putting me straight again.

Kusalananda almost 8 years

@KonradRudolph You know, that's a good suggestion. In my own scripts (and I rarely write bash scripts) I tend to not rely on shopts very much as they change the behaviour of basic things so radically. But in this case, just for doing this, it's a viable alternative. I'll mention it.

roaima almost 8 years

@StéphaneChazelas when one teaches, one starts with the basics and glosses over complexities. As an example in mathematics, young children are taught only integer arithmetic. As time progresses they are taught that only positive numbers have a square root. Yet further on, one is introduced to i. By all means provide a professionally competent solution, but I urge that "sufficient" answers should also be offered, particularly when the asker explains that they are working on an (introductory) assignment. Unlike ServerFault we don't require a minimum standard of competence to participate.

Stéphane Chazelas almost 8 years

@roaima. I don't agree. What first graders learn about arithmetic is correct. They don't learn that 1+1=2 only to learn later that it's incorrect and that they should forget about it. Here, find . | wc -l is incorrect or at least is only correct if you make some assumptions (which in practice you can rarely enforce). So, at the very least, those assumptions should be stated here. But IMO, it's better to teach a correct solution instead so people don't have to unlearn what they've been taught before when they move to a more advanced grade.

Julie Pelletier almost 8 years

Just added support for hidden files.

Kusalananda almost 8 years

That last one will definitely be wrong if any filenames contain newlines. It will also count the "header" (total NN) that ls -l prints.

Kusalananda almost 8 years

And the first if there are newlines or spaces in filenames.

G-Man Says 'Reinstate Monica' almost 8 years

You mentioned that grep -c / is not guaranteed to work, so wouldn’t it be better, in your POSIX example, to do -exec echo ";" or -exec dirname -- {} +, and then count lines (with wc -l)? Granted, the dirname example will crash and burn if the name of the starting directory contains newline(s) (e.g., find $'foo\nbar' …), but ! -name . assumes that you’re doing find . …, doesn’t it? I would suggest -exec stat -c "" -- {} +, but stat isn’t in POSIX either (right?).

Stéphane Chazelas almost 8 years

@G-Man, that's theoretical. POSIX leaves the behaviour of grep unspecified on non-text input. Here, it could be non-text if filenames contain non-characters (LC_ALL=C would work around that), or if the file paths are larger than LINE_MAX. Here for one level of directory, in practice, that won't happen. Even if that happened, in practice, what I see in implementations that have a LINE_MAX limit for grep is it only looking at the first LINE_MAX bytes of the line which is OK here as the / is in 2nd position. That / would also appear before the first invalid character if any.

Stéphane Chazelas almost 8 years

@G-Man, stat is the archetype of the non-portable command. And among the numerous incompatible implementations, the GNU one (which you seem to be referring to with that -c syntax) is one of the worst IMO (especially considering that GNU find had a much better interface (with the -printf syntax different from GNU stat's (!?)) long before GNU stat was created). Here you could do find . -exec printf '%.1s' {} + | wc -c, but that potentially runs more commands than the grep -c / approach and is less efficient as it means holding the data and running potentially huge command lines.

flickerfly about 7 years

I think ls -A1 | wc -l is a bit more intuitive for the ls options and will probably be a bit faster. Bring the steak sauce.

Stéphane Chazelas about 7 years

@flickerfly, that counts all directory entries but . and .., not only the regular files or non-directory files. That also doesn't work properly for file names with newline characters. Note that -1 is superfluous with POSIX compliant ls implementations.