How to find frequency of occurrences of strings contained in a file?

Solution 1

Original input file

Assuming the following input format:

http://www.google.com,

www.google.com,

google.com

yahoo.com

With a result looking like this:

google.com : 3

yahoo.com : 1

It's hard to tell exactly what situation you're in, but given the output you're showing, I'd be inclined to normalize the input first so that every line has the form:

google.com

google.com

google.com

yahoo.com

The following pipeline performs that normalization on data.txt (dropping empty lines and trailing commas) and then counts the results:

$ grep -v "^$" data.txt | \
    sed -e 's/,$//' -e 's/.*\.\(.*\)\.\(.*\)$/\1.\2/' | \
    sort | uniq -c

3 google.com

1 yahoo.com
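
To see what the grep and sed stages do on their own, they can be wrapped in a small function and fed the sample lines directly. This is only a sketch: `normalize_hosts` is a hypothetical helper name, and the input is passed on stdin instead of being read from data.txt.

```shell
# normalize_hosts: hypothetical helper wrapping the grep and sed stages.
# Reads lines on stdin, drops empty lines, strips a trailing comma, and
# keeps only the last two dot-separated labels of each line.
normalize_hosts() {
    grep -v '^$' |
    sed -e 's/,$//' \
        -e 's/.*\.\(.*\)\.\(.*\)$/\1.\2/'
}

# Sample input from the question, including an empty line.
printf '%s\n' 'http://www.google.com,' '' 'www.google.com,' 'google.com' 'yahoo.com' |
    normalize_hosts
```

This prints google.com three times followed by yahoo.com. Keeping the last two labels is a heuristic: a name such as www.example.co.uk would be reduced to co.uk, so the expression needs adjusting if the data contains such hosts.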

You can clean up the format of the output so it matches what you want like this:

$ grep -v "^$" data.txt | \
    sed -e 's/,$//' -e 's/.*\.\(.*\)\.\(.*\)$/\1.\2/' | \
    sort | uniq -c | \
    awk '{printf "%s : %s\n", $2, $1}'

google.com : 3

yahoo.com : 1
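
`uniq -c` writes the count first and the line second, so inside awk `$1` is the count and `$2` is the string. A minimal sketch of the reordering step, with the `uniq -c` output hard-coded for illustration (`format_counts` is a hypothetical name):

```shell
# format_counts: hypothetical helper; expects "COUNT STRING" lines as
# produced by uniq -c and prints them as "STRING : COUNT".
format_counts() {
    awk '{ printf "%s : %s\n", $2, $1 }'
}

# Simulated uniq -c output: right-aligned count, a space, then the line.
printf '%7d %s\n' 3 google.com 1 yahoo.com | format_counts
```

This prints `google.com : 3` and `yahoo.com : 1`. Since awk splits on whitespace, a counted line that itself contains spaces would be cut at its first word.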

EDIT #1

The OP had a follow-up question in which the example input was changed. To count this type of input:

http://www.google.com/absd/siidfs/kfd837382$%^$&,

www.google.com,

google.com

yahoo.com/list/page/jhfjkshdjf...

You could use this adapted one-liner from the first example:

$ grep -v "^$" data2.txt | \
    sed -e 's/,$//' \
        -e 's#\(http://[^/]\+\).*#\1#' \
        -e '/^http/!s/^www\.//' \
        -e '/^http/!s#\([^/]\+\).*$#\1#' | \
    sort | uniq -c | \
    awk '{printf "%s : %s\n", $2, $1}'

google.com : 2

http://www.google.com : 1

yahoo.com : 1
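
A detail worth checking when adapting sed addresses like the ones in this command: `[^http]` is a bracket expression, so `/^[^http]/` only tests that the first character is not `h`, `t` or `p`, while `/^http/!` negates a match of the literal prefix. The sketch below shows the difference on a few made-up lines (the twitter.com and pinterest.com entries are hypothetical, chosen for their first letters).

```shell
# Hypothetical host names chosen because they start with w, t, p and h.
hosts='www.google.com
twitter.com/home
pinterest.com/pin
http://www.google.com/x'

# Address /^[^http]/: selects lines whose FIRST CHARACTER is not h, t or p.
printf '%s\n' "$hosts" | sed -n '/^[^http]/p'

# Address /^http/!: selects lines that do not begin with the string "http".
printf '%s\n' "$hosts" | sed -n '/^http/!p'
```

The first command prints only www.google.com; the second prints the three lines that lack an http prefix. With the sample data from the question the two forms behave the same, because none of the bare host names start with h, t or p. Note also that `\+` is a GNU sed extension, so other sed implementations may need `[^/][^/]*` instead.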

Solution 2

You probably want to use sort and uniq -c to get the counts correct, then use sed or awk to do final formatting. Something like this:

sort file | uniq -c | awk '{printf "%s : %s\n", $2, $1}'
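
The `sort` matters because `uniq` only collapses adjacent duplicate lines. A small sketch with three inline sample lines standing in for the file:

```shell
# Without sorting, the two google.com lines are not adjacent,
# so uniq -c reports three separate groups of one line each.
printf '%s\n' google.com yahoo.com google.com | uniq -c

# Sorting first places identical lines next to each other,
# so uniq -c reports google.com with a count of 2.
printf '%s\n' google.com yahoo.com google.com | sort | uniq -c
```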

Your original question could probably be answered with the same basic pipeline, but first editing the input:

sed -e 's/,$//' -e 's/http:\/\///' -e 's/^www\.//' file | sort | uniq -c |
    awk '{printf "%s : %s\n", $2, $1}'

If that's not exactly correct, you can tinker with the sed and awk commands to get hostname forms and output format correct. For example, to clean off the right-hand-side of longer URLs:

sed -e 's/,$//' -e 's/http:\/\///' -e 's/^www\.//' -e 's/\/..*$//' file |
    sort | uniq -c |
    awk '{printf "%s : %s\n", $2, $1}'
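
Putting these pieces together, the whole approach can be sketched as one function and tried on the longer URLs from the edited question. The name `count_hosts` is hypothetical, and the sketch makes a few assumptions of its own: it strips a trailing comma, drops empty lines, handles only the `http://` scheme, and uses `s/\/.*$//` so that a bare trailing slash is removed as well.

```shell
# count_hosts: hypothetical wrapper around the sed | sort | uniq -c | awk
# pipeline. Reads URLs on stdin and prints "host : count" lines.
count_hosts() {
    sed -e 's/,$//' \
        -e 's/^http:\/\///' \
        -e 's/^www\.//' \
        -e 's/\/.*$//' |
    grep -v '^$' |
    sort | uniq -c |
    awk '{ printf "%s : %s\n", $2, $1 }'
}

# Quoted here-document so that $, ^ and & are passed through literally.
count_hosts <<'EOF'
http://www.google.com/absd/siidfs/kfd837382$%^$&,
www.google.com,
google.com
yahoo.com/list/page/jhfjkshdjf...
EOF
```

This prints `google.com : 3` and `yahoo.com : 1`. Unlike the output requested in the edited question, it folds the `http://www.` form into the same host, so the scheme-stripping expression would have to go if that form should be counted separately.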

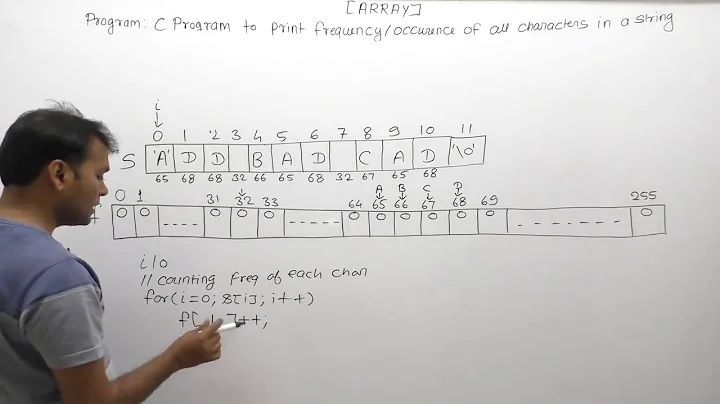

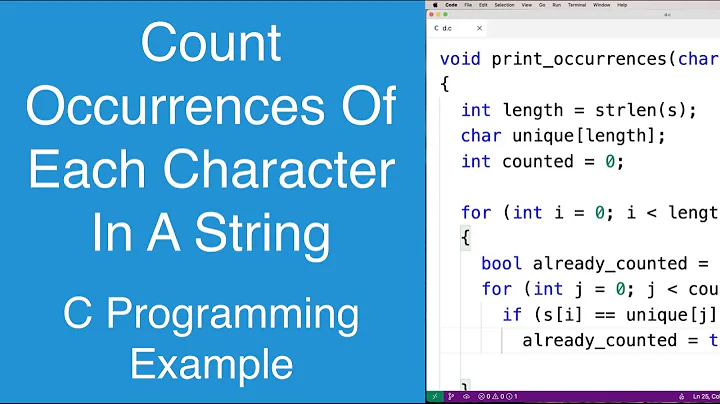

Related videos on Youtube

coder

Updated on September 18, 2022

Comments

-

coder over 1 year

I have a file that contains a list of URLs of the form

EDIT

http://www.google.com/absd/siidfs/kfd837382$%^$&,

www.google.com,

google.com

yahoo.com/list/page/jhfjkshdjf...

I want to write a script that will display the following output

google.com : 2

http://www.google.com: 1

yahoo.com : 1

I am stuck on the part where I have to read the URLs from the file and check the whole file again. I am new to bash scripting, so I don't know how to do this.

-

coder over 10 years

What should I do if I have longer URLs, as asked in the edited question?

-

slm over 10 years

@coder - you probably should've asked that as a different question. This one was asked and answered; you're breaking all the answers here and making a lot of cleanup work for everyone. In the 2nd Q you can reference this one and say you need to expand the solution. Please ask it as a 2nd question and roll the edits back on this one so that it's as it was before. I can help if you don't know what to do.

-

slm over 10 years

@coder - I've provided a solution, but I'd still like you to split this Q into 2, please. I'll move the portion of my answer from here to there after you've created the Q. It messes with the continuity of the site if we have overly complex Q's intermixed within the same one.

-

Admin over 10 years

@coder - see second script. I think that works.
