How to force mdadm to stop RAID5 array?

Solution 1

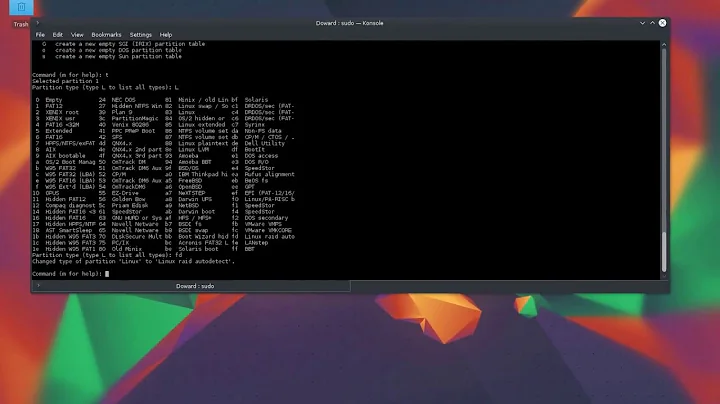

I realize that this is an old question and that the original poster believed SAMBA was the issue, but I ran into exactly the same problem and I think the cause was most likely not SAMBA (I don't even have SAMBA), since it didn't show up in the lsof output. More likely, the user was already in a directory under the RAID mount point when they switched to root or ran sudo.

In my case, the problem was that I started my root shell when my regular user was in a directory located on that mounted /dev/md127 drive.

user1@comp1:/mnt/md127_content/something$ su -

root@comp1:~# umount /dev/md127

umount: /dev/md127: target is busy

Here is the output of lsof in my case:

root@comp1:~# lsof | grep md127

md127_rai 145 root cwd DIR 253,0 4096 2 /

md127_rai 145 root rtd DIR 253,0 4096 2 /

md127_rai 145 root txt unknown /proc/145/exe

Even though lsof | grep md127 didn't show any processes except [md127_raid1], I could not unmount /dev/md127. And while umount -l /dev/md127 does hide /dev/md127 from the output of mount, the drive is apparently still busy: mdadm --stop /dev/md127 fails with the same error as in the question:

mdadm: Cannot get exclusive access to /dev/md127:Perhaps a running process, mounted filesystem or active volume group?

The SOLUTION is simple: check whether any logged-in users are still in a directory on that drive. In particular, check whether the root shell you are using was started while your regular user's current directory was on that drive. Switch back to that user's shell (perhaps just exit your root shell), move somewhere else, and then umount and mdadm --stop will work:

root@comp1:~# exit

user1@comp1:/mnt/md127_content/something$ cd /

user1@comp1:/$ su -

root@comp1:~# umount /dev/md127

root@comp1:~# mdadm --stop /dev/md127

mdadm: stopped /dev/md127
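The check above can also be scripted instead of done by hand. Below is a minimal sketch, assuming Linux /proc, GNU coreutils `stat`, glibc's `dev_t` encoding, and a filesystem such as ext4 or XFS whose device number is the md device's own major:minor (btrfs, for example, reports a different number). It compares device numbers rather than paths, so it should still find a leftover shell after `umount -l` has hidden the mount point. The function names are made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: list processes whose current or root directory is on the filesystem
# stored on a block device such as /dev/md127. Assumes Linux /proc, GNU stat
# and glibc's dev_t encoding; function names are hypothetical.

# Combine major/minor numbers the same way glibc's makedev() does.
makedev() {
    local major=$1 minor=$2 dev
    dev=$(( (major & 0xfff) << 8 | (minor & 0xff) ))
    dev=$(( dev | (major & ~0xfff) << 32 | (minor & ~0xff) << 12 ))
    echo "$dev"
}

# Print "PID CWD" for each process whose cwd or root has st_dev == $1.
procs_on_devnum() {
    local target="$1" p pid d
    for p in /proc/[0-9]*; do
        pid=${p#/proc/}
        for d in cwd root; do
            # Unreadable entries (other users' processes when not root, or
            # processes that just exited) yield an empty string and never match.
            if [ "$(stat -L -c %d "$p/$d" 2>/dev/null)" = "$target" ]; then
                printf '%s %s\n' "$pid" "$(readlink "$p/cwd" 2>/dev/null)"
                break
            fi
        done
    done
}

# Look up the block device's major:minor and search for processes using it.
procs_on_blockdev() {
    local rdev
    # %t and %T print the major and minor number (in hex) of a device node.
    rdev=$(stat -L -c '%t %T' "$1") || return 1
    set -- $rdev
    procs_on_devnum "$(makedev $((16#$1)) $((16#$2)))"
}

if [ "$#" -gt 0 ]; then
    procs_on_blockdev "$1"
fi
```

Run it as root so every process's /proc entries are readable, e.g. `sudo bash find-busy.sh /dev/md127` (the file name is arbitrary). Each output line is a PID followed by that process's working directory; after `umount -l` that path may no longer start with the old mount point.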

Solution 2

If you're using LVM on top of mdadm, sometimes LVM will not delete the Device Mapper devices when deactivating the volume group. You can delete them manually.

- Ensure there's nothing in the output of `sudo vgdisplay`.
- Look in `/dev/mapper/`. Aside from the `control` file, there should be a Device Mapper device named after your volume group, e.g. `VolGroupArray-name`.
- Run `sudo dmsetup remove VolGroupArray-name` (substituting `VolGroupArray-name` with the name of the Device Mapper device).
- You should now be able to run `sudo mdadm --stop /dev/md0` (or whatever the name of the `mdadm` device is).
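Instead of browsing `/dev/mapper/`, the devices stacked on the array can also be read from sysfs: `/sys/block/<md>/holders/` lists every device that holds the array open, and a Device Mapper holder exposes its `dmsetup` name in `dm/name`. A minimal sketch, assuming the standard Linux sysfs layout; `list_holders` and the `SYSFS` override are hypothetical names, the override existing only so the function can be tested against a fake tree.

```shell
#!/usr/bin/env bash
# Sketch: list devices stacked on an md array (e.g. LVM/Device Mapper), one
# reason `mdadm --stop` cannot get exclusive access. Assumes the standard
# Linux sysfs layout; SYSFS is a hypothetical override for testing.

list_holders() {
    local md="$1" dir h name
    dir="${SYSFS:-/sys}/block/$md/holders"
    if [ ! -d "$dir" ]; then
        echo "no holders directory for $md" >&2
        return 1
    fi
    for h in "$dir"/*; do
        [ -e "$h" ] || continue   # empty directory: the glob matched nothing
        name=${h##*/}
        if [ -r "$h/dm/name" ]; then
            # Device Mapper devices expose the name that dmsetup uses.
            printf '%s %s\n' "$name" "$(cat "$h/dm/name")"
        else
            printf '%s\n' "$name"
        fi
    done
}

if [ "$#" -gt 0 ]; then
    list_holders "$1"
fi
```

For example, `bash list-holders.sh md0` (arbitrary file name) printing `dm-0 VolGroupArray-name` would give the name to pass to `sudo dmsetup remove`; no output means nothing is stacked on the array.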

Solution 3

I was running into similar issues but I didn't have the raid device mounted in any way. Stopping SAMBA didn't seem to help either. lsof showed nothing.

Everything just resulted in:

# mdadm --stop /dev/md2

mdadm: Cannot get exclusive access to /dev/md2:Perhaps a running process, mounted filesystem or active volume group?

What finally fixed it for me was remembering that this was a swap partition, so I just had to run swapoff /dev/md2. After that, mdadm --stop /dev/md2 succeeded.
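As this answer shows, an array in use as swap blocks `mdadm --stop` just like a mounted filesystem, even though it never appears in `mount` output. A minimal sketch that checks `/proc/swaps` first, assuming the path listed there matches the name you pass (e.g. `/dev/md2`); `swap_active` is a hypothetical name, and its optional second argument exists only so it can be tested against a sample file.

```shell
#!/usr/bin/env bash
# Sketch: report whether a device is active swap before `mdadm --stop`.
# Assumes Linux /proc/swaps, whose first column is the swap device path.

swap_active() {
    local dev="$1" swaps="${2:-/proc/swaps}"
    # Skip the header line, then compare the first column exactly.
    awk -v d="$dev" 'NR > 1 && $1 == d { found = 1 } END { exit !found }' "$swaps"
}

if [ "$#" -gt 0 ]; then
    if swap_active "$1"; then
        echo "$1 is in use as swap; run: swapoff $1"
    else
        echo "$1 is not listed in /proc/swaps"
    fi
fi
```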


matt

Updated on September 18, 2022

Comments


matt almost 2 years

I have a /dev/md127 RAID5 array that consisted of four drives. I managed to hot-remove them from the array, and currently /dev/md127 does not have any drives:

cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid1 sdd1[0] sda1[1] 304052032 blocks super 1.2 [2/2] [UU]

md1 : active raid0 sda5[1] sdd5[0] 16770048 blocks super 1.2 512k chunks

md127 : active raid5 super 1.2 level 5, 512k chunk, algorithm 2 [4/0] [____]

unused devices: <none>

and

mdadm --detail /dev/md127

/dev/md127:

Version : 1.2

Creation Time : Thu Sep 6 10:39:57 2012

Raid Level : raid5

Array Size : 8790402048 (8383.18 GiB 9001.37 GB)

Used Dev Size : 2930134016 (2794.39 GiB 3000.46 GB)

Raid Devices : 4

Total Devices : 0

Persistence : Superblock is persistent

Update Time : Fri Sep 7 17:19:47 2012

State : clean, FAILED

Active Devices : 0

Working Devices : 0

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

| Number | Major | Minor | RaidDevice | State |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | removed |
| 1 | 0 | 0 | 1 | removed |
| 2 | 0 | 0 | 2 | removed |
| 3 | 0 | 0 | 3 | removed |

I’ve tried to do mdadm --stop /dev/md127, but:

mdadm --stop /dev/md127

mdadm: Cannot get exclusive access to /dev/md127:Perhaps a running process, mounted filesystem or active volume group?

I made sure that it’s unmounted with umount -l /dev/md127 and confirmed that it is indeed unmounted:

umount /dev/md127

umount: /dev/md127: not mounted

I’ve tried to zero the superblock of each drive, and I get (for each drive):

mdadm --zero-superblock /dev/sde1

mdadm: Unrecognised md component device - /dev/sde1

Here's the output of lsof | grep md127:

md127_rai 276 root cwd DIR 9,0 4096 2 /

md127_rai 276 root rtd DIR 9,0 4096 2 /

md127_rai 276 root txt unknown /proc/276/exe

What else can I do? LVM is not even installed, so it can't be a factor.

After much poking around, I finally found what was preventing me from stopping the array: it was a SAMBA process. After service smbd stop, I was able to stop the array. It's strange, though, because although the array had been mounted and shared via SAMBA at one point, it was already unmounted when I tried to stop it.


user1984103 almost 12 years

At this point, I think it's safe to use the --force option if you haven't tried it already. Also, what is process 276 on your system? [mdadm]?

matt almost 12 years

It doesn't work; I get the same message: "mdadm: Cannot get exclusive access to /dev/md127:Perhaps a running process, mounted filesystem or active volume group?"


nvja almost 11 years

sudo fuser -vm /dev/md127 might show which process has a handle on the array.


matt over 8 years

@sd1074 You might be onto something, but I'm not sure my issue was caused by spawning a root shell from the path in question. I would have had to stop the samba service, exit the root shell, change directory as a regular user, start a root shell again, and only then stop the array. That's possible, but not likely. I think an acceptable answer should show how to determine which process is blocking the mdadm array from stopping. lsof clearly fails here for some reason.

matt over 8 years

@sd1074 If you have a reproducible test case, could you check whether any of the devices used as mdadm array components have open files reported by lsof? (You need to compare the numbers lsof reports in the DEVICE column against the major/minor numbers of those devices.)

sd1074 over 8 years

@matt, I kept the log of my adventures with that RAID. I added the output of lsof to my answer.

Giacomo1968 over 8 years

@matt Kudos for coming back to this question nearly 3 years later!


matt over 7 years

After two more years the issue hit me again, and I can now confirm that it was caused by a lazily unmounted device (umount -l /dev/md127) AND a bash process whose current working directory was still somewhere in the mounted (now lazily unmounted) filesystem. To find exactly which process is responsible, check this question: unix.stackexchange.com/q/345422/59666

keithpjolley over 4 years

This was the track that finally worked for me. Thanks @Vladimir


geerlingguy over 3 years

Same thing here too: I had an old session that was inside one of the drive's directories. In my case, I just rebooted the server, and it was all good after that.