Intel Matrix Storage RAID and Linux mdadm

Ok, silly me. I figured it out.

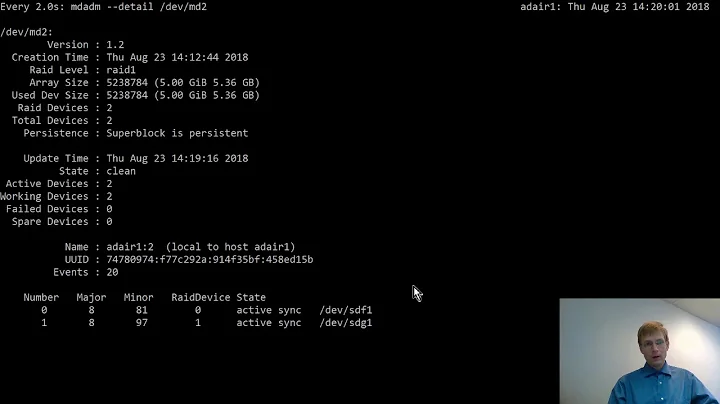

After running mdadm --assemble --scan, which finds and assembles the container, running mdadm -I /dev/md/imsm0 assembles the arrays inside the container. D'oh!

    # mdadm --assemble --scan
    mdadm: Container /dev/md/imsm0 has been assembled with 3 drives

    # mdadm -I /dev/md/imsm0
    mdadm: Started /dev/md/NMWVolume with 3 devices

    # ls -l /dev/md*
    brw-rw---- 1 root disk 9, 126 Jan 24 02:22 /dev/md126
    brw-rw---- 1 root disk 259, 0 Jan 24 02:22 /dev/md126p1
    brw-rw---- 1 root disk 9, 127 Jan 24 02:22 /dev/md127

    /dev/md:
    total 0
    lrwxrwxrwx 1 root root 8 Jan 24 02:22 imsm0 -> ../md127
    lrwxrwxrwx 1 root root 8 Jan 24 02:22 NMWVolume -> ../md126

/dev/md126p1 has my RAID5'd 1TB NTFS volume.
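
The two steps can be wrapped in a small script. This is only a sketch, assuming the container shows up as /dev/md/imsm0 as it did here; the `run` and `assemble_imsm` names and the `DRY_RUN` switch are made up for illustration and are not part of mdadm. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: assemble an IMSM container, then start the arrays inside it.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
# Set DRY_RUN=0 (and run as root) to actually execute them.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

assemble_imsm() {
    # Container path as mdadm reported it on this machine; yours may differ.
    container="${1:-/dev/md/imsm0}"

    # Step 1: find the IMSM metadata on the disks and assemble the container.
    run mdadm --assemble --scan || return 1

    # Step 2: incremental assembly (-I) on the container starts its member arrays.
    run mdadm -I "$container" || return 1

    # Step 3: show which md devices the kernel now has.
    run cat /proc/mdstat
}
```

Calling `assemble_imsm` with no arguments prints the three commands; `DRY_RUN=0 assemble_imsm /dev/md/imsm0` runs them for real.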

Bill Fraser

Updated on September 17, 2022

Comments

-

Bill Fraser almost 2 years

I've got a 3-disk RAID 5 array that was set up under Windows that I'd like to get working in Linux using mdadm. I've done some basic fakeraid stuff before, but this was set up with the Intel Matrix Storage utility in the BIOS, and it's not working like I expected.

Here's my disk setup:

    /dev/sda - 32GB SSD, Windows boot drive.
      /dev/sda1 - Windows system partition
      /dev/sda2 - Windows C:, 29GB
    /dev/sdb }
    /dev/sdc } the RAID5 array: 3x500GB; contains one partition, a 1TB NTFS filesystem
    /dev/sdd }
    /dev/sde - 128GB SSD, Linux's drive
      /dev/sde1 - /boot
      /dev/sde2 - LVM PV
        /dev/dm0 - /
        /dev/dm1 - /home
        /dev/dm2 - <swap>

So the first thing I did was run mdadm --assemble --scan, which produced the following:

    mdadm: /dev/sdd is identified as a member of /dev/md/imsm0, slot -1.
    mdadm: /dev/sdc is identified as a member of /dev/md/imsm0, slot -1.
    mdadm: /dev/sdb is identified as a member of /dev/md/imsm0, slot -1.
    mdadm: added /dev/sdc to /dev/md/imsm0 as -1
    mdadm: added /dev/sdb to /dev/md/imsm0 as -1
    mdadm: added /dev/sdd to /dev/md/imsm0 as -1
    mdadm: Container /dev/md/imsm0 has been assembled with 3 drives

So it found the IMSM info on the disks just fine and assembled a container:

    # mdadm --examine /dev/md/imsm0
    /dev/md/imsm0:
              Magic : Intel Raid ISM Cfg Sig.
            Version : 1.2.02
        Orig Family : ee19d190
             Family : ee19d190
         Generation : 0009ab28
               UUID : ab31031b:2d9f8489:5d87a6c4:e87e3826
           Checksum : f09a3edc correct
        MPB Sectors : 2
              Disks : 3
       RAID Devices : 1

      Disk00 Serial : 3PM1DZBD
              State : active
                 Id : 00010000
        Usable Size : 976768654 (465.76 GiB 500.11 GB)

    [NMWVolume]:
               UUID : c192a859:14874bab:06ca8af9:d3e8b52e
         RAID Level : 5
            Members : 3
          This Slot : 0
         Array Size : 1953536000 (931.52 GiB 1000.21 GB)
       Per Dev Size : 976768264 (465.76 GiB 500.11 GB)
      Sector Offset : 0
        Num Stripes : 7631000
         Chunk Size : 64 KiB
           Reserved : 0
      Migrate State : idle
          Map State : normal
        Dirty State : clean

      Disk01 Serial : 3PM222TL
              State : active
                 Id : 00020000
        Usable Size : 976768654 (465.76 GiB 500.11 GB)

      Disk02 Serial : 3PM22GFQ
              State : active
                 Id : 00030000
        Usable Size : 976768654 (465.76 GiB 500.11 GB)

It shows the Raid5 volume, "NMWVolume" in there, but how do I get to it? That container device doesn't do me anything. I can't read anything off it, i.e. fdisk /dev/md/imsm0 just returns

    Unable to read /dev/md/imsm0

If I try to run

    mdadm --create --verbose --level=5 --raid-devices=3 --chunk=64 --auto=mdp /dev/md0 /dev/sd[bcd]

I get these errors:

    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: super1.x cannot open /dev/sdb: Device or resource busy
    mdadm: not enough space after merge (0 < 0)
    mdadm: device /dev/sdb not suitable for any style of array

Replacing /dev/sd[bcd] with /dev/md/imsm0 gives the error

    mdadm: not enough devices with space to create array.

I tried stopping the /dev/md/imsm0 array and re-running the mdadm --create command with the sd[bcd] drives, and it gives me this:

    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: /dev/sdb appears to be part of a raid array: level=container devices=0 ctime=Wed Dec 31 18:00:00 1969
    mdadm: partition table exists on /dev/sdb but will be lost or meaningless after creating array
    mdadm: layout defaults to left-symmetric
    mdadm: /dev/sdc appears to be part of a raid array: level=container devices=0 ctime=Wed Dec 31 18:00:00 1969
    mdadm: layout defaults to left-symmetric
    mdadm: /dev/sdd appears to be part of a raid array: level=container devices=0 ctime=Wed Dec 31 18:00:00 1969
    mdadm: partition table exists on /dev/sdd but will be lost or meaningless after creating array
    mdadm: size set to 488385408K
    Continue creating array? ^C

So I'm not doing that...

I'm out of ideas. How do I assemble this array?
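
For reference, the volumes that live inside a container appear in the --examine output as bracketed names such as [NMWVolume]. A rough filter for pulling those names out might look like the sketch below; `list_imsm_volumes` is a made-up helper name, and it assumes the bracketed "[Name]:" line format seen in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read `mdadm --examine` output on stdin and print
# the names of the volumes it lists, one per line.
# Assumes each volume is introduced by a line of the form "[Name]:".
list_imsm_volumes() {
    sed -n 's/^[[:space:]]*\[\(.*\)\]:[[:space:]]*$/\1/p'
}
```

It would be used as `mdadm --examine /dev/md/imsm0 | list_imsm_volumes`, run as root.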

-

Carlos Garcia over 13 years

Nice solution. I marked it for future searches.