Linux software RAID 1 - root filesystem becomes read-only after a fault on one disk

Your dmesg output should give you an indication of why md is signalling a failure to the PV; that shouldn't be happening. As for getting the system writable again, from memory, switching the VG and LV to read-only and then back to read-write works, but the real fix is to stop md from alarming LVM unnecessarily.


Comments


DrStalker almost 2 years

Linux software RAID 1 locking to read-only mode

The setup:

CentOS 5.2, 2x 320 GB SATA drives in RAID 1:

- /dev/md0 (/dev/sda1 + /dev/sdb1) is /boot
- /dev/md1 (/dev/sda2 + /dev/sdb2) is an LVM partition which contains /, /data and swap partitions

All filesystems other than swap are ext3

We've had an issue on several systems where a fault on one drive has locked the root filesystem as read-only, which obviously causes problems.

```
[root@myserver /]# mount | grep Root
/dev/mapper/VolGroup00-LogVolRoot on / type ext3 (rw)
[root@myserver /]# touch /foo
touch: cannot touch `/foo': Read-only file system
```

I can see that one of the partitions in the array is faulted:

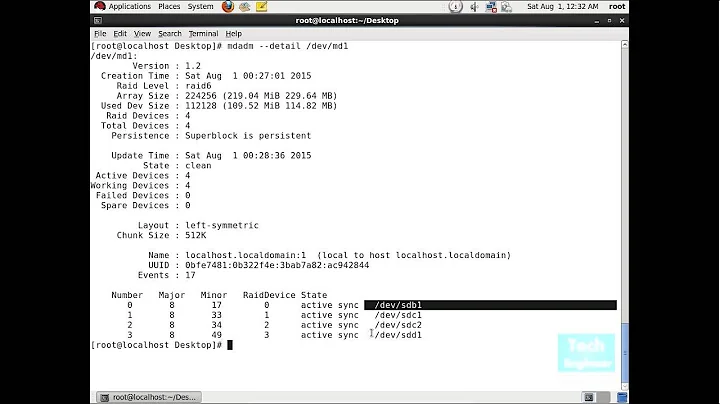

```
[root@myserver /]# mdadm --detail /dev/md1
/dev/md1:
[...]
          State : clean, degraded
 Active Devices : 1
Working Devices : 1
 Failed Devices : 1
  Spare Devices : 0
[...]
    Number   Major   Minor   RaidDevice State
       0       0        0        0      removed
       1       8       18        1      active sync   /dev/sdb2
       2       8        2        -      faulty spare   /dev/sda2
```

Remounting as rw fails:

```
[root@myserver /]# mount -n -o remount /
mount: block device /dev/VolGroup00/LogVolRoot is write-protected, mounting read-only
```

The LVM tools give an error unless --ignorelockingfailure is used (because they can't write to /var) but show the volume group as rw:

```
[root@myserver /]# lvm vgdisplay
  Locking type 1 initialisation failed.
[root@myserver /]# lvm pvdisplay --ignorelockingfailure
  --- Physical volume ---
  PV Name               /dev/md1
  VG Name               VolGroup00
  PV Size               279.36 GB / not usable 15.56 MB
  Allocatable           yes (but full)
[...]
[root@myserver /]# lvm vgdisplay --ignorelockingfailure
  --- Volume group ---
  VG Name               VolGroup00
  System ID
  Format                lvm2
  Metadata Areas        1
  Metadata Sequence No  4
  VG Access             read/write
  VG Status             resizable
[...]
[root@myserver /]# lvm lvdisplay /dev/VolGroup00/LogVolRoot --ignorelockingfailure
  --- Logical volume ---
  LV Name                /dev/VolGroup00/LogVolRoot
  VG Name                VolGroup00
  LV UUID                PGoY0f-rXqj-xH4v-WMbw-jy6I-nE04-yZD3Gx
  LV Write Access        read/write
[...]
```

In this case /boot (a separate RAID meta-device) and /data (a different logical volume in the same volume group) are still writable. From previous occurrences I know that a restart will bring the system back up with a read/write root filesystem and a properly degraded RAID array.
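A side note on the `mount | grep Root` output: on a system like this, `mount` with no arguments prints what is recorded in `/etc/mtab`, and that file cannot be updated once / has gone read-only, so the `(rw)` it shows can be stale. The kernel's current view is in `/proc/mounts`. A minimal sketch, assuming a POSIX `sh` and `awk`, that reads that format; `mount_opts` and `is_read_only` are hypothetical helper names written for this example, not existing tools:

```shell
#!/bin/sh
# mount_opts and is_read_only are hypothetical helpers written for this example.

# Print the options the kernel reports for mount point $1, reading
# /proc/mounts-formatted text (device mountpoint fstype options dump pass)
# on stdin. The last matching entry wins, because later mounts stack on top
# of earlier ones; the "rootfs" entry that older kernels list for / is skipped.
mount_opts() {
    awk -v mp="$1" '$2 == mp && $3 != "rootfs" { opts = $4 } END { print opts }'
}

# Return 0 if the comma-separated option list in $1 contains "ro" as a
# whole option (so "errors=remount-ro" on its own does not count).
is_read_only() {
    case ",$1," in
        *,ro,*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example on a live system (kernel view, not /etc/mtab):
#   opts=$(mount_opts / < /proc/mounts)
#   if is_read_only "$opts"; then echo "/ is read-only: $opts"; fi
```

If the options printed for / contain `ro`, the kernel has already switched that filesystem to read-only, whatever `mount` reports from `/etc/mtab`.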

So, I have two questions:

1) When this occurs, how can I get the root filesystem back to read/write without a system restart?

2) What needs to be changed to stop this filesystem locking? With a RAID 1 failure on a single disk we don't want the filesystems to lock up; we want the system to keep running until we can replace the bad disk.

Edit: I can see this in the dmesg output. Does this indicate a failure of /dev/sda, then a separate failure on /dev/sdb that led to the filesystem being set to read-only?

```
sda: Current [descriptor]: sense key: Aborted Command
    Add. Sense: Recorded entity not found
Descriptor sense data with sense descriptors (in hex):
        72 0b 14 00 00 00 00 0c 00 0a 80 00 00 00 00 00
        00 03 ce 85
end_request: I/O error, dev sda, sector 249477
raid1: Disk failure on sda2, disabling device.
        Operation continuing on 1 devices
ata1: EH complete
SCSI device sda: 586072368 512-byte hdwr sectors (300069 MB)
sda: Write Protect is off
sda: Mode Sense: 00 3a 00 00
SCSI device sda: drive cache: write back
RAID1 conf printout:
 --- wd:1 rd:2
 disk 0, wo:1, o:0, dev:sda2
 disk 1, wo:0, o:1, dev:sdb2
RAID1 conf printout:
 --- wd:1 rd:2
 disk 1, wo:0, o:1, dev:sdb2
ata2.00: exception Emask 0x0 SAct 0x0 SErr 0x0 action 0x0
ata2.00: irq_stat 0x40000001
ata2.00: cmd ea/00:00:00:00:00/00:00:00:00:00/a0 tag 0
         res 51/04:00:34:cf:f3/00:00:00:f3:40/a3 Emask 0x1 (device error)
ata2.00: status: { DRDY ERR }
ata2.00: error: { ABRT }
ata2.00: configured for UDMA/133
ata2: EH complete
sdb: Current [descriptor]: sense key: Aborted Command
    Add. Sense: Recorded entity not found
Descriptor sense data with sense descriptors (in hex):
        72 0b 14 00 00 00 00 0c 00 0a 80 00 00 00 00 00
        01 e3 5e 2d
end_request: I/O error, dev sdb, sector 31677997
Buffer I/O error on device dm-0, logical block 3933596
lost page write due to I/O error on dm-0
ata2: EH complete
SCSI device sdb: 586072368 512-byte hdwr sectors (300069 MB)
sdb: Write Protect is off
sdb: Mode Sense: 00 3a 00 00
SCSI device sdb: drive cache: write back
ata2.00: exception Emask 0x0 SAct 0x1 SErr 0x0 action 0x0
ata2.00: irq_stat 0x40000008
ata2.00: cmd 61/38:00:f5:d6:03/00:00:00:00:00/40 tag 0 ncq 28672 out
         res 41/10:00:f5:d6:03/00:00:00:00:00/40 Emask 0x481 (invalid argument) <F>
ata2.00: status: { DRDY ERR }
ata2.00: error: { IDNF }
ata2.00: configured for UDMA/133
sd 1:0:0:0: SCSI error: return code = 0x08000002
sdb: Current [descriptor]: sense key: Aborted Command
    Add. Sense: Recorded entity not found
Descriptor sense data with sense descriptors (in hex):
        72 0b 14 00 00 00 00 0c 00 0a 80 00 00 00 00 00
        00 03 d6 f5
end_request: I/O error, dev sdb, sector 251637
ata2: EH complete
SCSI device sdb: 586072368 512-byte hdwr sectors (300069 MB)
sdb: Write Protect is off
sdb: Mode Sense: 00 3a 00 00
SCSI device sdb: drive cache: write back
Aborting journal on device dm-0.
journal commit I/O error
ext3_abort called.
EXT3-fs error (device dm-0): ext3_journal_start_sb: Detected aborted journal
Remounting filesystem read-only
```
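For the question in the edit, it can help to cut a log like this down to the lines that record failed requests, md dropping a member, and the filesystem giving up, keeping their original order. A rough sketch, assuming a POSIX `sh` with `grep -E`, `awk` and `sort`, and assuming the message formats shown in this log (other kernel versions word them differently); `summarize_disk_errors` and `count_io_errors` are hypothetical helper names:

```shell
#!/bin/sh
# summarize_disk_errors and count_io_errors are hypothetical helpers; the
# patterns match the message formats in this log and may need adjusting
# for other kernel versions.

# Keep, in their original order, the kernel log lines that record:
#   - a failed block request ("end_request: I/O error, dev X, sector N")
#   - md dropping a RAID 1 member ("raid1: Disk failure on X")
#   - I/O errors surfacing on the device-mapper (LVM) device
#   - ext3 aborting its journal and remounting read-only
summarize_disk_errors() {
    grep -E 'end_request: I/O error|raid1: Disk failure|Buffer I/O error on device|lost page write|Aborting journal|Remounting filesystem read-only'
}

# Count failed block requests per underlying disk, one "device count" per line.
count_io_errors() {
    awk '/end_request: I\/O error, dev / {
        dev = $0
        sub(/.*end_request: I\/O error, dev /, "", dev)  # keep the text after "dev "
        sub(/,.*/, "", dev)                              # drop ", sector N"
        count[dev]++
    }
    END { for (dev in count) print dev, count[dev] }' | sort
}

# Example usage:
#   dmesg | summarize_disk_errors
#   dmesg | count_io_errors
```

Applied to this log, the summary lists the sda error at sector 249477 and `raid1: Disk failure on sda2` first, then the sdb errors at sectors 31677997 and 251637 with the lost write on dm-0 between them, and finally the journal abort and read-only remount; `count_io_errors` reports one failed request on sda and two on sdb. That ordering is consistent with the reading proposed in the edit: sda2 was dropped from the array first, and the later sdb errors came while sdb2 was the only active member.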

Admin over 6 years

Did you ever find a solution to your two questions? I'm currently facing a very similar problem.



DrStalker almost 15 years

It's not possible to set the logical volume to read-only because lvchange -p doesn't support the --ignorelockingfailure parameter (only -a does), and I can't find any equivalent command for read-only mode on a volume group. Any other suggestions for getting this running without a restart?