mdadm: Growing a RAID0 Array Weirdness

I have hit this problem as well and, after some testing, can grow a software RAID 0 array.

I found an option that, if you think about it, makes sense to include in the grow command: --backup-file. Realistically, to grow a RAID 0 array the way you would in hardware, you'd need to copy the data elsewhere, destroy the array, and recreate it. If that's not the case, I'd like to see the math for growing a RAID 0 array without the extra space (really).

Another thing I found: I was not able to add just one drive to the RAID 0 array; it only worked when I added two drives. I haven't tried more than that yet.

So the command to grow a RAID 0 array would be (for your case):

mdadm --grow /dev/md0 --raid-devices=5 \
    --backup-file=/drive/With/Enough/Space/growth.bak --add /dev/xvdf1 /dev/xvdg1
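Because --raid-devices is the new total number of devices, not the number being added, a small dry-run helper can make that arithmetic explicit. This is only a sketch: print_grow_cmd is a hypothetical name, it prints the command instead of running mdadm, and it assumes you already know how many devices the array has (3 in the question). The backup path is the placeholder from the command above; the mdadm man page says the backup file should be on a separate device, not on the array being reshaped.

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints (does not run) an mdadm grow command.
# Usage: print_grow_cmd ARRAY CURRENT_DEVICE_COUNT BACKUP_FILE NEW_DEVICE...
print_grow_cmd() {
    array=$1
    current=$2
    backup=$3
    shift 3
    # --raid-devices is the new total: existing devices plus the ones being added
    total=$((current + $#))
    printf 'mdadm --grow %s --raid-devices=%d --backup-file=%s --add %s\n' \
        "$array" "$total" "$backup" "$*"
}

print_grow_cmd /dev/md0 3 /drive/With/Enough/Space/growth.bak /dev/xvdf1 /dev/xvdg1
```

With 3 existing devices and 2 new ones, this prints the same command as above with --raid-devices=5; review the output before pasting it into a root shell.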


Ken S.

Updated on September 18, 2022

Comments

-

Ken S. almost 2 years

I have created 3 RAID0 arrays using mdadm on 3 Rackspace CloudServers, using Rackspace's CloudBlockStorage (CBS) volumes as their devices. Each array has its own set of CBS volumes. RS-CBS has an upper limit of 1TB per volume, and I needed more storage than that. One of the servers is for data storage, the second is a live mirror of that server, and the third holds a nightly snapshot. I created the array on each of the servers using this command:

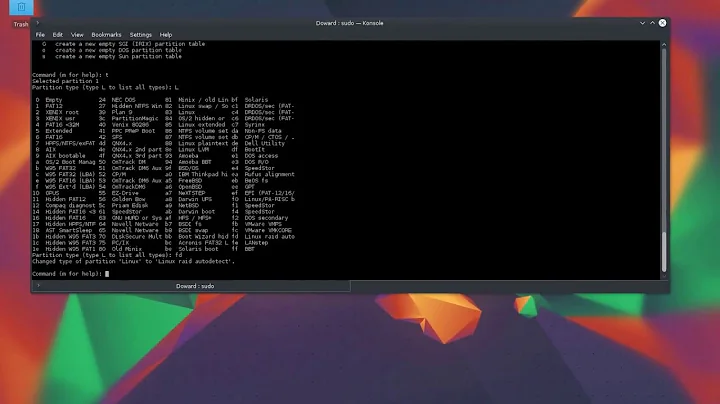

mdadm --create /dev/md0 --level=0 --raid-devices=3 /dev/xvdb1 /dev/xvdd1 /dev/xvde1

When it came time to grow the array, and since this was my first time growing one, I started on the least critical server: the snapshot server. I added a new volume and, after a little fiddling, came up with this command:

mdadm --grow /dev/md0 --raid-devices=4 --add /dev/xvdf1

According to the mdadm man page, a RAID0 array cannot be grown directly, so mdadm converts it to RAID4, grows it, and then converts it back. (It never converts back, but that is another issue.) The array reshapes, but the size never changes. My assumption is that the new drive just became the parity drive for the array. So, to get the space I need, I added another volume:

mdadm --grow /dev/md0 --raid-devices=5 --add /dev/xvdg1

This reshapes and gives me the extra 500GB I was hoping for, so I run resize2fs /dev/md0 and am good to go: 2TB of space.

So this is where the weirdness happens. I switch to growing the array on the mirror server. Knowing from the previous steps that I will need to add two volumes to get the extra space I need, I use this command:

mdadm --grow /dev/md0 --raid-devices=5 --add /dev/xvdf1 /dev/xvdg1

It reshapes and things look fine, but when I run resize2fs /dev/md0 followed by df -hal, I see that it did grow and is reporting 2.5TB of space, not the 2.0TB I was expecting. It's as if it didn't create the parity drive I assumed it created on the first run, and instead used all available space for the array.

One thing that looked different was the output of mdadm -D /dev/md0 after the reshape on the mirror server. I saw this for the RAID devices:

    Number   Major   Minor   RaidDevice State
       0     202       17        0      active sync   /dev/xvdb1
       1     202       49        1      active sync   /dev/xvdd1
       2     202       65        2      active sync   /dev/xvde1
       5     202       97        3      active sync   /dev/xvdg1
       4     202       81        4      active sync   /dev/xvdf1
       5       0        0        5      removed

The output of cat /proc/mdstat shows that it did convert to RAID4:

    Personalities : [raid0] [raid6] [raid5] [raid4]
    md0 : active raid4 xvdg1[5] xvdf1[4] xvdd1[1] xvdb1[0] xvde1[2]
          2621432320 blocks super 1.2 level 4, 512k chunk, algorithm 5 [6/5] [UUUUU_]

    unused devices: <none>

So my concerns are: did I grow the first array (snapshot server) incorrectly, so that it should have gotten 2.5TB but my error only gave it 2.0TB? Or did I grow the second array (mirror server) incorrectly, so that it doesn't have the parity drive I thought I was going to get? What can I do, or what should I have done, to grow these arrays consistently?
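The 2.0TB vs 2.5TB difference comes down to how many RAID4 slots hold data. A RAID4 array with N slots stores data on N - 1 of them whether or not the parity slot is populated, and /proc/mdstat reports the array size in 1 KiB blocks. The sketch below uses only numbers from the question: 2621432320 blocks over 5 data devices gives 524286464 KiB per device. The function names are hypothetical, and the snapshot-server figure assumes that array ended up as a 5-slot RAID4 with its parity slot filled, which is the asker's own guess rather than something shown in the output above.

```shell
#!/bin/sh
# Usable size of an md RAID4 array: one slot is reserved for parity
# (present or missing), the remaining slots hold data.
raid4_usable_kib() {
    slots=$1
    per_device_kib=$2
    echo $(( (slots - 1) * per_device_kib ))
}

# Split an mdstat status field such as "[6/5]" into "slots active".
parse_slots() {
    field=${1#"["}
    field=${field%"]"}
    echo "${field%/*} ${field#*/}"
}

# 2621432320 blocks / 5 data devices, taken from the mirror server's mdstat
per_device=524286464

set -- $(parse_slots "[6/5]")
echo "mirror:   slots=$1 active=$2 missing=$(( $1 - $2 ))"
echo "mirror:   $(raid4_usable_kib "$1" "$per_device") KiB"
# Assumption: snapshot server is a 5-slot RAID4 with parity present
echo "snapshot: $(raid4_usable_kib 5 "$per_device") KiB"
```

This prints 2621432320 KiB for the mirror server (matching mdstat) and 2097145856 KiB for the assumed snapshot layout, i.e. 5 vs 4 data devices. On the mirror server the missing slot is the last one ([UUUUU_]), and with algorithm 5 md keeps RAID4 parity on the last device, so as far as the output shows, that array is five data devices with no parity, the same redundancy as the original RAID0.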

-

Maziyar over 10 years

Really interesting question. I always have problems growing RAID 0 with mdadm. I use EBS volumes on EC2, and every time I try all the commands on the internet, I end up removing everything, building the array with new volumes all over again, and syncing with the other replica sets. Really painful; I hope someone gives us a straightforward way to grow RAID 0.


-

John Marston over 4 years

The backup file is only ever used to back up the critical section, that is, a 2 MB block.

-

Marki555 over 4 years

In my tests with 1 GB loop devices, the critical section was 16 MB to 64 MB, depending on the number of devices in the RAID.