Performance of HashMap with different initial capacity and load factor

Solution 1

In the absence of a perfect hashing function for your data, and assuming that this is really not a micro-optimization of something that really doesn't matter, I would try the following:

Assume the default load capacity (.75) used by HashMap is a good value in most situations. That being the case, you can use it, and set the initial capacity of your HashMap based on your own knowledge of how many items it will hold - set it so that initial-capacity x .75 = number of items (round up).

If it were a larger map, in a situation where high-speed lookup was really critical, I would suggest using some sort of trie rather than a hash map. For long strings, in large maps, you can save space, and some time, by using a more string-oriented data structure, such as a trie.

Solution 2

Assuming that your hash function is "good", the best thing to do is to set the initial size to the expected number of elements, assuming that you can get a good estimate cheaply. It is a good idea to do this because when a HashMap resizes it has to recalculate the hash values for every key in the table.

Leave the load factor at 0.75. The value of 0.75 has been chosen empirically as a good compromise between hash lookup performance and space usage for the primary hash array. As you push the load factor up, the average lookup time will increase significantly.

If you want to dig into the mathematics of hash table behaviour: Donald Knuth (1998). The Art of Computer Programming'. 3: Sorting and Searching (2nd ed.). Addison-Wesley. pp. 513–558. ISBN 0-201-89685-0.

Solution 3

I find it best not to fiddle around with default settings unless I really really need to.

Hotspot does a great job of doing optimizations for you.

In any case; I would use a profiler (Say Netbeans Profiler) to measure the problem first.

We routinely store maps with 10000s of elements and if you have a good equals and hashcode implementation (and strings and Integers do!) this will be better than any load changes you may make.

Solution 4

Assuming (and this is a stretch) that the hash function is a simple mod 5 of the integer keys

It's not. From HashMap.java:

static int hash(int h) {

// This function ensures that hashCodes that differ only by

// constant multiples at each bit position have a bounded

// number of collisions (approximately 8 at default load factor).

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

}

I'm not even going to pretend I understand that, but it looks like that's designed to handle just that situation.

Note also that the number of buckets is also always a power of 2, no matter what size you ask for.

Solution 5

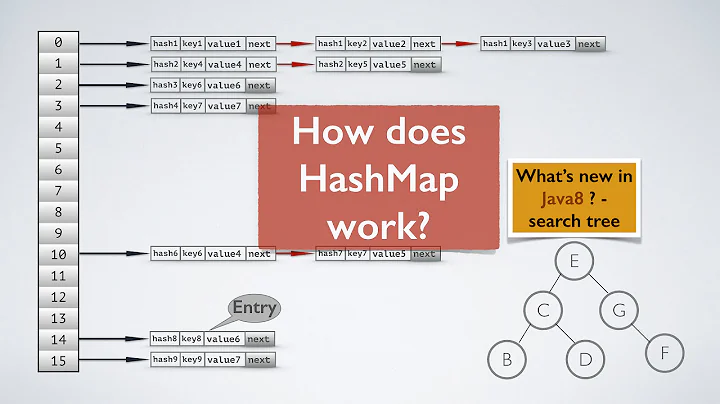

Entries are allocated to buckets in a random-like way. So even if you as many buckets as entries, some of the buckets will have collisions.

If you have more buckets, you'll have fewer collisions. However, more buckets means spreading out in memory and therefore slower. Generally a load factor in the range 0.7-0.8 is roughly optimal, so it is probably not worth changing.

As ever, it's probably worth profiling before you get hung up on microtuning these things.

Related videos on Youtube

Comments

-

jW. over 4 years

Here is my situation. I am using two java.util.HashMap to store some frequently used data in a Java web app running on Tomcat. I know the exact number of entries into each Hashmap. The keys will be strings, and ints respectively.

My question is, what is the best way to set the initial capacity and loadfactor?

Should I set the capacity equal to the number of elements it will have and the load capacity to 1.0? I would like the absolute best performance without using too much memory. I am afraid however, that the table would not fill optimally. With a table of the exact size needed, won't there be key collision, causing a (usually short) scan to find the correct element?

Assuming (and this is a stretch) that the hash function is a simple mod 5 of the integer keys, wouldn't that mean that keys 5, 10, 15 would hit the same bucket and then cause a seek to fill the buckets next to them? Would a larger initial capacity increase performance?

Also, if there is a better datastructure than a hashmap for this, I am completely open to that as well.

-

Avi almost 15 yearsHow many entries are in the map, and what is the average length of the string key?

-

jW. almost 15 yearsthe total entries will range between 20 - 50 and the string key length will have a character count between 10-30

-

starblue almost 15 yearsThat's rather small, are you sure you even need to worry about it? Unless you have a lot of instances just go with the default HashMap parameters.

-

Tom Neyland almost 15 yearsif( (Time_saved_per_operation_because_of_this_Optimization x Number_of_Operations) < (Time_spent_on_optimization) ) { return toOtherWork; }

-

jW. almost 15 yearsThis will be highly highly utilized and the time spent working on this is and making changes is pretty minimal. Thanks for all the comments!

-

Pacerier over 12 years@instanceofTom you forgot to add

knowledge gainedinto your formula

-

-

Stephen almost 15 years"more buckets means spreading out in memory and therefore slower". Unless you're talking about nano-optimisation, I'm pretty sure this is very incorrect. A key is looked up by doing the respective hash calculations (constant time), then a modulo to find the bucket, then iterating through the bucket's contents until the requested key equals() the stored one. So larger is faster (in all but the most bizarre hashing situations).

-

jW. almost 15 yearsThe assumption on the hash was simply to guess at the fact that there will be collisions, and the chance of getting a perfect hash of the data is probably impossible. Even with this function (which I don't understand either) I would guess there is a good chance it will not perfectly hash the strings I pass it. Thank you for the response!

-

Brett almost 15 yearsCache locality is very important in modern systems. If the array is overly long, then it is more likely to cause a cache miss. Moving the load factor way out has little effect on bucket collisions. Presumably this effect is more pronounce in languages such as C++ were everything (first link of list, hash, key and value) can be stored within the array.

-

Ashwin about 12 years@TomHawtin-tackline : I dont' get your point. If the number of buckets is equal to the number of elements, you said "spreading out in memory". If you use fewer buckets then each bucket will have to hold many elements. Any way the memory remains the same right?

-

Brett about 12 years@Ashwin Memory used by the array.

-

Ashwin about 12 years@TomHawtin-tackline : I am sorry. What are you trying to say?

-

Brett about 12 years@Ashwin What don't you understand? A larger array uses more memory used.

-

Ashwin about 12 years@TomHawtin-tackline : Similarly more elements in a linked list means more memory.

-

Arjan about 8 yearsI think there's something wrong about this answer. If you're so concerned about the resizing of HashMap, you should not set the initial capacity to the expected number of elements (e.g. 100) and the load factor to 0.75, because that means the HashMap will always resize once at some point (e.g. 75th element). If you keep the load factor at 0.75 and want to prevent the HashMap from resizing, you'll need to set the initial capacity to

(expectedSize / 0.75) + 1.