Traffic shaping on Linux with HTB: weird results

Solution 1

I think I kinda sorta fixed the issue: I needed to tie the qdiscs/classes to an IMQ device rather than an ETH device. Once I did that, the shaper started working.

However!

While I could get the shaper to limit traffic incoming to a machine, I couldn't get it to split traffic fairly (even though I've attached an SFQ to my HTB).

What happened is this: I started a download; it got limited to 75Kbyte/s. Now, when I started a second download, instead of evenly splitting traffic between the 2 DL sessions (35Kbyte/s + 35Kbyte/s), it just barely dropped speed on session one and gave session two a meager 500b/s. After a couple of minutes, the split settled on something like 65Kbyte/s + 10Kbyte/s. *indignantly* That's not fair! :)

So I dismantled my config, went ahead and set up ClearOS 5.2 (a Linux distro with a pre-built firewall system) that has a traffic shaper module. The module uses an HTB + SFQ setup very similar to what I configured by hand.

Same fairness issue! The overall limit is enforced well, but there is no fairness: two downloads share in the same weird 65/15 proportion rather than 35/35.

Any ideas, guys?

Solution 2

Try using this example instead:

    # tc qdisc add dev eth1 root handle 1: htb default 10
    # tc class add dev eth1 parent 1: classid 1:1 htb rate 75Kbit
    # tc class add dev eth1 parent 1:1 classid 1:10 htb rate 1Kbit ceil 35Kbit
    # tc class add dev eth1 parent 1:1 classid 1:20 htb rate 35Kbit ceil 75Kbit
    # tc qdisc add dev eth1 parent 1:10 handle 10: sfq perturb 10
    # tc qdisc add dev eth1 parent 1:20 handle 20: sfq perturb 10
    # tc filter add dev eth1 parent 1:0 protocol ip prio 1 u32 \
        match ip dst 10.41.240.240 flowid 1:20

This creates an HTB bucket with a rate limit of 75Kbit/s, then creates two child classes underneath it, each with an SFQ (a fair queuing qdisc) attached.

By default, everyone will be in the first queue, with a guaranteed rate of 1Kbit and a max rate of 35Kbit. Your IP, 10.41.240.240, will be guaranteed 35Kbit and can borrow up to 75Kbit when the non-selected traffic isn't using its share, provided class 1:20 has ceil 75Kbit (without an explicit ceil, HTB caps a class at its own rate). Two connections from .240 should average out to the same speed per connection, and a .240 connection competing with a non-.240 connection will share bandwidth at roughly a 35:1 ratio between the queues.

I see this has been dead since Apr... so hopefully this info is still of value to you.

Solution 3

This may be related to this:

From: http://www.shorewall.net/traffic_shaping.htm

A Warning to Xen Users

If you are running traffic shaping in your dom0 and traffic shaping doesn't seem to be limiting outgoing traffic properly, it may be due to "checksum offloading" in your domU(s). Check the output of "shorewall show tc". Here's an excerpt from the output of that command:

    class htb 1:130 parent 1:1 leaf 130: prio 3 quantum 1500 rate 76000bit ceil 230000bit burst 1537b/8 mpu 0b overhead 0b cburst 1614b/8 mpu 0b overhead 0b level 0
     Sent 559018700 bytes 75324 pkt (dropped 0, overlimits 0 requeues 0)
     rate 299288bit 3pps backlog 0b 0p requeues 0
     lended: 53963 borrowed: 21361 giants: 90174
     tokens: -26688 ctokens: -14783

There are two obvious problems in the above output:

- The rate (299288) is considerably larger than the ceiling (230000).

- There are a large number (90174) of giants reported.

This problem will be corrected by disabling "checksum offloading" in your domU(s) using the ethtool utility. See one of the Xen articles for instructions.
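As a rough sketch of what that ethtool step might look like (not taken from the Shorewall page): the interface name eth0 is an assumption, and the commands need root inside the affected domU. The last line goes beyond the quoted warning; as far as I know, segmentation offload is another common source of "giants", so it is included only as an optional thing to try.

```shell
# Sketch only: run as root inside the domU; eth0 is an assumed interface name.

# Lowercase -k lists the current offload settings.
ethtool -k eth0

# Uppercase -K changes them: disable TX checksum offloading.
ethtool -K eth0 tx off

# Optional, and an assumption beyond the quoted text: also disable
# segmentation offload if giants keep showing up in the tc statistics.
ethtool -K eth0 tso off gso off
```

Settings changed this way do not persist across reboots, so they would have to be reapplied from whatever network startup script the system uses.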


DADGAD

Updated on September 18, 2022

Comments


DADGAD over 1 year

I'm trying to have some simple bandwidth throttling set up on a Linux server and I'm running into what seems to be very weird stuff despite a seemingly trivial config.

I want to shape traffic coming to a specific client IP (10.41.240.240) to a hard maximum of 75Kbit/s. Here's how I set up the shaping:

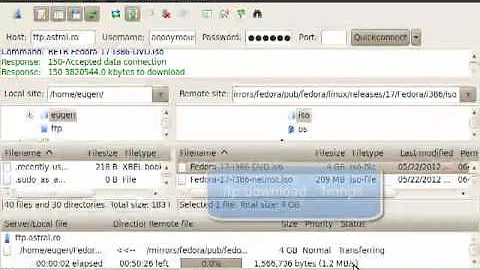

    # tc qdisc add dev eth1 root handle 1: htb default 1 r2q 1
    # tc class add dev eth1 parent 1: classid 1:1 htb rate 75Kbit
    # tc class add dev eth1 parent 1:1 classid 1:10 htb rate 75kbit
    # tc filter add dev eth1 parent 1:0 protocol ip prio 1 u32 match ip dst 10.41.240.240 flowid 1:10

To test, I start a file download over HTTP from the said client machine and measure the resulting speed by looking at Kb/s in Firefox.

Now, the behaviour is rather puzzling: the DL starts at about 10Kbyte/s and proceeds to pick up speed until it stabilizes at about 75Kbytes/s (Kilobytes, not Kilobits as configured!). Then, if I start several parallel downloads of that very same file, each download stabilizes at about 45Kbytes/s; the combined speed of those downloads thus greatly exceeds the configured maximum.

Here's what I get when probing tc for debug info:

    [root@kup-gw-02 /]# tc -s qdisc show dev eth1
    qdisc htb 1: r2q 1 default 1 direct_packets_stat 1
     Sent 17475717 bytes 1334 pkt (dropped 0, overlimits 2782 requeues 0)
     rate 0bit 0pps backlog 0b 12p requeues 0
    [root@kup-gw-02 /]# tc -s class show dev eth1
    class htb 1:1 root rate 75000bit ceil 75000bit burst 1608b cburst 1608b
     Sent 14369397 bytes 1124 pkt (dropped 0, overlimits 0 requeues 0)
     rate 577896bit 5pps backlog 0b 0p requeues 0
     lended: 1 borrowed: 0 giants: 1938
     tokens: -205561 ctokens: -205561
    class htb 1:10 parent 1:1 prio 0 rate 75000bit ceil 75000bit burst 1608b cburst 1608b
     Sent 14529077 bytes 1134 pkt (dropped 0, overlimits 0 requeues 0)
     rate 589888bit 5pps backlog 0b 11p requeues 0
     lended: 1123 borrowed: 0 giants: 1938
     tokens: -205561 ctokens: -205561

What I can't for the life of me understand is this: how come I get a "rate 589888bit 5pps" with a config of "rate 75000bit ceil 75000bit"? Why does the effective rate get so much higher than the configured rate? What am I doing wrong? Why is it behaving the way it is?

Please help, I'm stumped. Thanks guys.
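A quick unit check may help frame the numbers in the question. The sketch below assumes tc's convention that 1 kbit is 1000 bits (consistent with the output above, where 75Kbit is reported as 75000bit); the helper name kbit_to_bytes_per_sec is made up for illustration.

```shell
# Convert a tc-style rate in kbit/s (1 kbit = 1000 bit) to bytes per second.
kbit_to_bytes_per_sec() {
    echo $(( $1 * 1000 / 8 ))
}

# Configured limit: 75 kbit/s.
kbit_to_bytes_per_sec 75     # prints 9375

# Rate tc reported for class 1:10 (589888 bit/s), expressed in bytes/s.
echo $(( 589888 / 8 ))       # prints 73736
```

So a working 75Kbit limit would show up in the browser as roughly 9 Kbytes/s, and the ~75 Kbytes/s seen in Firefox agrees with the rate tc itself reports: the class is passing about eight times its configured rate rather than the browser misreporting units.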

coredump about 13 years

How are the interfaces configured on the shaping machine? Two interfaces or one? Which one is downstream for the client?

DADGAD about 13 years

Two interfaces; eth0 is the WAN iface, eth1 is the LAN interface.

coredump about 13 years

And are you starting the download from the machine where `tc` is being run, or from a client connected to it? Just to check :) It's really strange. Have you considered using a script like `htb.init` to create those rules, to see whether it does anything different from yours?

Zdenek over 7 years

First of all, a line starting with `tc qdisc` also belongs at the end leaf of the rule tree, and that's missing. Then, classid 1:1 shouldn't have the limit filled in, because that means everybody gets limited, not just the target user. And lastly, `r2q 1` is a horrible choice; it starts behaving erratically with anything lower than 4 in my experience. The default is 10; I have good results with 6.
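To get a feel for what r2q changes, here is a small arithmetic sketch. It assumes, as I understand HTB's behaviour, that a class without an explicit quantum gets one derived as its rate in bytes per second divided by r2q; the helper name htb_quantum is made up for illustration and nothing here touches the kernel.

```shell
# Default HTB quantum estimate: (rate in bit/s) / 8 / r2q, using integer division.
# $1 = class rate in bit/s, $2 = r2q value
htb_quantum() {
    echo $(( $1 / 8 / $2 ))
}

# Compare a few r2q values for the 75000 bit/s class from the question.
for r2q in 1 4 6 10; do
    echo "r2q $r2q -> quantum $(htb_quantum 75000 "$r2q") bytes"
done
```

At a rate this low, the derived quantum moves from several packets' worth down to less than a single full-size Ethernet frame as r2q grows, which is one reason low-rate classes are often given an explicit `quantum` instead of relying on r2q.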


David Schwartz over 12 years

That's typical. Neither TCP nor HTB has any mechanism to ensure a 'fair' split.

Zdenek over 7 years

There are several ways. First of all, try a different congestion control algorithm, like Hybla. It's a tunable. The other thing is `-m connbytes` matching: you can use it to throw connections with large downloads into a higher-numbered priority (lower actual priority) bucket. This will give a boost to the next new connection.
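A rough sketch of how those two suggestions might be wired up, under several assumptions: it needs root, it presumes an HTB hierarchy like the one in Solution 2 already exists on eth1, and class 1:30 and the 1 MiB threshold are hypothetical values chosen for illustration. The congestion control setting only affects TCP connections that the host itself originates, so on a pure router it would have to be set on the sending machine instead.

```shell
# Sketch only: needs root. eth1, class 1:30, and the 1 MiB threshold
# are illustrative assumptions, not values from the thread.

# Switch the TCP congestion control algorithm to Hybla
# (the tcp_hybla kernel module must be available).
modprobe tcp_hybla
sysctl -w net.ipv4.tcp_congestion_control=hybla

# Hypothetical low-priority class for long-running downloads,
# with its own SFQ leaf.
tc class add dev eth1 parent 1:1 classid 1:30 htb rate 1kbit ceil 75kbit prio 2
tc qdisc add dev eth1 parent 1:30 handle 30: sfq perturb 10

# Once a connection has transferred more than 1 MiB in total,
# steer its packets into the low-priority class.
iptables -t mangle -A POSTROUTING -o eth1 -d 10.41.240.240 \
    -m connbytes --connbytes 1048576: --connbytes-dir both --connbytes-mode bytes \
    -j CLASSIFY --set-class 1:30
```

The idea is that a fresh connection starts in the normal class and is demoted only after it has proven to be a bulk transfer, which leaves room for the next new connection to ramp up.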