What archive compression makes .mp4 files the smallest?

First, a minor aside about terminology: ZIP is the only archive format you used. Gzip and Bzip2 are compression formats, not archive formats. To be a bit more specific:

An archive format aggregates multiple files and/or directories, usually including metadata such as ownership, timestamps, and possibly other data, into a single file. Tar is an example of a pure archive format; it does no inherent compression.

A compression format just compresses data, but does not inherently combine multiple files into one. Gzip, Bzip2, Brotli, LZ4, LZOP, XZ, PAQ, and Zstandard are all compression formats. Some of them (such as Gzip) let you concatenate several compressed streams into one file, but decompressing that file yields a single stream with no file boundaries, and running gzip recursively on a directory simply compresses each file separately. They don't store paths or other metadata, so they are not archive formats.

Some formats, such as ZIP, 7z, or RAR, combine archiving and compression (although ZIP can store files uncompressed as well).
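To make the archive/compression split concrete, here is a minimal sketch using Python's standard `tarfile` module. The file names and contents are hypothetical; the point is only that tar carries the names, while gzip is an optional layer wrapped around the finished archive.

```python
import io
import tarfile


def make_tar(files: dict[str, bytes], mode: str = "w") -> bytes:
    """Build a tar archive in memory; mode "w" is plain tar, "w:gz" adds gzip."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode=mode) as archive:
        for name, payload in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(payload)
            archive.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


def read_tar(blob: bytes) -> dict[str, bytes]:
    """Recover names and contents; "r:*" detects the compression layer, if any."""
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:*") as archive:
        return {
            member.name: archive.extractfile(member).read()
            for member in archive.getmembers()
            if member.isfile()
        }


# Hypothetical sample files, used only for illustration.
files = {
    "clips/a.txt": b"first file\n" * 100,
    "clips/b.txt": b"second file\n" * 100,
}

plain = make_tar(files, "w")       # archive only, no compression
gzipped = make_tar(files, "w:gz")  # same archive, then compressed with gzip

print(len(plain), len(gzipped))
print(sorted(read_tar(gzipped)))
```

Both blobs give back the same paths and contents; only the size differs, because the compression step never needs to know where one file ends and the next begins.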

Now, with that out of the way, let's move on to your main question:

The comment by music2myear is correct. Your results will vary widely depending on the exact specifics of the MP4 encoding used. This is because MP4 itself includes data compression, in this case optimized for compressing audio and video data without significantly lowering the perceived quality. The processes it uses for this are actually somewhat complicated (too complicated to explain here), but because of the constraint that it doesn't lower perceived quality, combined with the fact that it compresses frame-by-frame instead of as a single long stream, there's sometimes significant room for improvement (as you can see from your test).
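How much a second compressor can gain depends on how much redundancy the first one left behind. The sketch below is not a video test: it uses synthetic text (an assumption made purely for illustration) compressed once with zlib and then again with LZMA, to show that a second pass typically recovers far less than the first.

```python
import lzma
import random
import zlib


def sample_text(n_words: int = 50_000, seed: int = 0) -> bytes:
    """Build deterministic, moderately redundant text as a stand-in for raw data."""
    rng = random.Random(seed)
    vocabulary = [f"word{i}" for i in range(200)]
    return " ".join(rng.choice(vocabulary) for _ in range(n_words)).encode()


raw = sample_text()
once = zlib.compress(raw, 9)   # first pass removes most of the redundancy
twice = lzma.compress(once)    # second pass works on already-compressed bytes

first_gain = len(raw) - len(once)
second_gain = len(once) - len(twice)  # may be negative if the output grows

print(len(raw), len(once), len(twice))
print(first_gain, second_gain)
```

An encoded video stream sits in the position of `once` here, which is why the exact encoder settings matter so much for what an archiver can still squeeze out.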

Now, while I can't give a conclusive answer without lots of further details, I can however give you some general advice on file compression:

ZIP and Gzip show very similar results in this case because they use the same compression algorithm, DEFLATE, which combines LZ77-style matching with Huffman coding. DEFLATE is not a particularly great compression algorithm, but it's ubiquitous (there are even hardware implementations of it), so it's often used as a standard of comparison. Outside of usage as a component of other file formats (such as ZIP), it's not very widely used for storage anymore. Pretty much anything based on DEFLATE isn't going to win in any respect when comparing compression algorithms.
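The near-identical sizes of the .zip and .gz files in the question can be reproduced with Python's standard library, whose `zlib`, `gzip`, and `zipfile` modules all use the same DEFLATE implementation. This is a small sketch on a made-up payload (the member name `payload.bin` is hypothetical); the remaining size difference should be just container overhead.

```python
import gzip
import io
import zipfile
import zlib


def deflate_sizes(payload: bytes, level: int = 9) -> dict[str, int]:
    """Compress the same payload with three DEFLATE-based wrappers."""
    zip_buffer = io.BytesIO()
    with zipfile.ZipFile(
        zip_buffer, "w", zipfile.ZIP_DEFLATED, compresslevel=level
    ) as archive:
        archive.writestr("payload.bin", payload)  # hypothetical member name
    return {
        "zlib": len(zlib.compress(payload, level)),
        "gzip": len(gzip.compress(payload, compresslevel=level)),
        "zip": len(zip_buffer.getvalue()),
    }


# Made-up, repetitive sample data used only for illustration.
payload = b"".join(
    b"frame %d: some repetitive header text\n" % i for i in range(20_000)
)
sizes = deflate_sizes(payload)
print(sizes)
```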

Bzip2 by contrast does some complex transformations on the data (chiefly the Burrows-Wheeler transform) to make it compress more efficiently, and then uses Huffman coding for the actual compression. In most cases, it compresses better than DEFLATE-based compressors, but it's slower than DEFLATE too. Because of some assumptions made in how it transforms the input data prior to Huffman coding, it is also somewhat more sensitive than many other options to how the input data is structured.
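The main transformation bzip2 applies is the Burrows-Wheeler transform, which reorders the input so that similar characters tend to end up next to each other. The following is a deliberately naive sketch of that idea (sort all rotations, keep the last column); it is not how bzip2 implements it, and the `$` end marker is an assumption of this example.

```python
def bwt(text: str, sentinel: str = "$") -> str:
    """Naive Burrows-Wheeler transform: sort all rotations, keep the last column."""
    if sentinel in text:
        raise ValueError("sentinel must not occur in the input")
    s = text + sentinel
    rotations = sorted(s[i:] + s[:i] for i in range(len(s)))
    return "".join(rotation[-1] for rotation in rotations)


print(bwt("banana"))  # annb$aa
```

In the output the two `n`s and two of the `a`s sit side by side, which is the kind of clustering the later coding stages benefit from. It also hints at why bzip2 is sensitive to input structure: data without repeating contexts gains little from the reordering.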

XZ uses a different algorithm called LZMA. Like DEFLATE, LZMA is ultimately derived from an algorithm known as LZ77, though it gets insanely better compression ratios than DEFLATE-based options, and significantly better ratios than Bzip2 in most cases. In addition to LZMA, it can apply filters that make it a bit better at compressing executables than the other options. The cost of this, however, is that it takes a long time to compress data. 7zip also uses LZMA (and has comparable filters of its own), so its ratios are usually close to those of XZ.

LZOP uses the LZO algorithm, and generally compresses worse than DEFLATE, but works much faster. Just like Gzip, it's not widely used anymore, as people tend to favor alternatives that either give a better compression ratio, or better performance.

LZ4 is a newer format developed by Yann Collet that runs insanely fast (near memory bandwidth speed for decompression), but gets even worse compression ratios than LZO. It's been slowly supplanting LZO, as most of the stuff that used LZO used it for speed.

Brotli is another new one, from Google. It's specified in RFC 7932 and is widely used as an HTTP content encoding (br) rather than being part of HTTP/2 itself. It is specifically optimized for streaming, and can actually get both better compression ratios and performance than DEFLATE-based options do. However, it's not widely supported for plain file compression, so it may not be a viable option for your usage.

PAQ is for those who are really insanely worried about maximizing compression ratios. It uses a complex combination of statistical models to achieve absolutely bonkers compression ratios (depending on the original data, it's not unusual for a file compressed with PAQ to be less than 1/10 of the original size, whereas DEFLATE averages closer to 1/2). The cost of this is, of course, that it takes an insanely long time to compress anything with PAQ. With the high compression settings, it would likely take at least half an hour to compress that sample video with PAQ. Because of the amount of time it takes, almost nobody uses PAQ, and the few who do rarely use it for anything other than archival purposes (that is, they only use it on files they are not likely to ever change).

Zstandard is the newest of the lot, and was developed by Facebook. It uses a mix of older methods and newer ones (such as finite state entropy coding and optional trained dictionaries) to achieve compression ratios comparable to or better than bzip2 (and sometimes even better than XZ), while running significantly faster than most of the other ones I've listed except LZ4. It probably wouldn't beat XZ for your usage (and definitely won't beat PAQ), but it may get good enough ratios that the significantly better performance is worth it.
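Since the outcome depends so heavily on the input, the most reliable approach is to try the candidates on your own file. Here is a minimal sketch limited to the three codecs that ship with Python (zlib for DEFLATE, bz2, and lzma for XZ); the function names and the default LZMA preset are choices made for this example, and the other formats discussed above would need third-party packages.

```python
import bz2
import lzma
import zlib


def compressed_sizes(data: bytes, lzma_preset: int = 6) -> dict[str, int]:
    """Return the output size of each standard-library codec for this input."""
    return {
        "zlib": len(zlib.compress(data, 9)),
        "bz2": len(bz2.compress(data, 9)),
        # Higher presets (up to 9) may shrink output further but need more memory.
        "lzma": len(lzma.compress(data, preset=lzma_preset)),
    }


def smallest(data: bytes) -> tuple[str, int]:
    """Pick the codec that produced the fewest bytes for this particular input."""
    sizes = compressed_sizes(data)
    name = min(sizes, key=sizes.get)
    return name, sizes[name]


# Example usage with the file from the question (path assumed to exist):
# with open("testing.mov", "rb") as handle:
#     print(smallest(handle.read()))
```

This only measures size; timing each call as well would show the speed trade-off described above.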

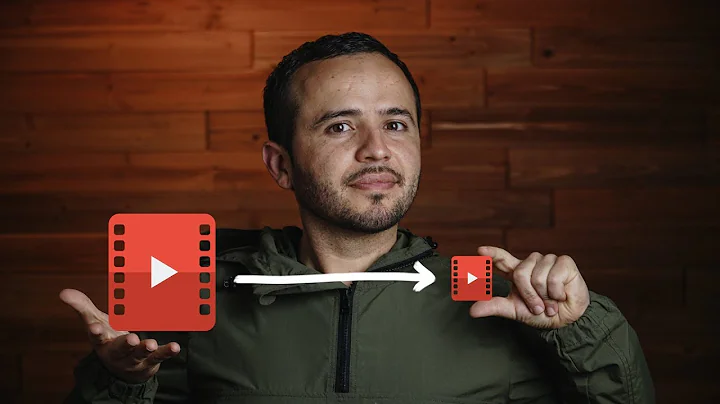

Related videos on Youtube

O.rka


Updated on September 18, 2022

Comments

-


O.rka almost 2 years: Is there an archive-based compression format that is known to make the smallest .mp4 videos?

I did a test 1080p 0:12 duration video with the only archival formats I know about:

    (python3) Joshs-MBP:testing_movie mu$ ls -lS
    total 12712
    -rw-r--r--  1 mu  staff  2145528 Jun  6 09:26 testing.mov
    -rw-r--r--  1 mu  staff  1790044 Jun  6 09:26 testing.mov.zip
    -rw-r--r--@ 1 mu  staff  1789512 Jun  6 09:25 testing.mov.gz
    -rw-r--r--  1 mu  staff   775138 Jun  6 09:26 testing.mov.bz2

Looks like bzip2 is the best. Is there anything else that is better in terms of making the files smaller? It's ok if it takes a lot longer.

Also, I noticed you can't bzip2 a directory.

-

Ravindra Bawane about 6 years: I'm going to guess that any such compression will vary wildly based on how the MP4 was encoded and packaged. MP4 is already compressed, and that can be adjusted by the settings used to create the MP4. Unless we know the settings used to create this MP4 file it is unlikely we'll be able to provide an answer any more authoritative than the one you've already discovered yourself.

-

Daniel B about 6 years: A 12 second video is not a representative sample for videos in general. The shorter the video the higher the ratio of non-frame data (which is probably more compressible).

-

Daniel B about 6 years: So, just for fun, I tried GZip, BZip2, 7z (LZMA2), Zip and LZ4 with maximum compression on 12s, 60s and 120s length cuts of an action scene. The best compression ratio overall was a mere 100.014%. That’s not worth the time and power invested.

-


Gyan about 6 years: "it compresses frame-by-frame instead of as a single long stream" is true only for intra codecs. Most video codecs use temporal compression, so almost all frames are predicted from other frames (+ error residual). In particular, identical frames are whittled down to a few dozen bytes. After the main compression step of the video payload, the resulting bitstream and control data undergo lossless entropy coding. For MP4s with such coded streams, 7zip ultra will yield a ratio of 98-99%.

-

Arturs Radionovs almost 5 years: As someone who knows nothing about the inner workings of compression or video encoding, it's surprising to me that further compression can't be performed if we're willing to leave the video file in an unplayable format that must be specifically decompressed first as opposed to video compression which can do it in real time as you play it.

-

Austin Hemmelgarn almost 5 years: @xr280xr The issue is that data that's already been compressed with any reasonable compression algorithm doesn't compress very well (if at all) when passed through another compression algorithm. Because of this and the fact that most of the data in a video file is audio and video data that's already compressed, video files tend not to compress at all when run through another compression algorithm.

-

drmuelr over 4 years: @AustinHemmelgarn Is there a reasonably straightforward way to re-expand a compressed video back out into some kind of temporary uncompressed format, which is then more suitable for more aggressive compression, like xr280xr mentioned? I'm thinking specifically for the case of putting video files into archival storage... polar opposite of immediate playback. Something like what you said about PAQ, geared toward video.

-

Austin Hemmelgarn over 4 years: @drmuelr Generally not. If you've got the original video, you can save that in a lossless format, but it will still almost always be compressed (video data is huge, 1 minute of uncompressed Full HD video at 60 FPS is at least in the range of double digit gigabytes in size). Outside of the multimedia industry though, most videos you're likely to encounter are almost certainly going to be encoded using some lossy compression algorithm (such as Theora or one of the various MPEG standards), and those can't be completely reconstructed to full quality (if they could, they'd be lossless).
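The "double digit gigabytes" figure is easy to check with a back-of-the-envelope calculation. This sketch assumes 8-bit RGB (3 bytes per pixel) and ignores audio and container overhead; other pixel formats would change the result.

```python
def uncompressed_video_bytes(
    width: int, height: int, fps: int, seconds: int, bytes_per_pixel: int = 3
) -> int:
    """Raw size of a video stored as one full, uncompressed frame per tick."""
    return width * height * bytes_per_pixel * fps * seconds


# One minute of Full HD (1920x1080) at 60 FPS, assuming 3 bytes per pixel.
size = uncompressed_video_bytes(1920, 1080, fps=60, seconds=60)
print(f"{size / 1e9:.1f} GB")  # 22.4 GB
```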